What Runs Your Start-up - Imagga

Imagga is a cloud platform that helps businesses and individuals organize their images in a fast and cost-effective way. They develop a range of advanced proprietary image recognition and image processing technologies, which are built into several services such as smart image cropping, color extraction and multi-color search, visual similarity search and auto-tagging.

During the Balkan Venture Forum in Sofia I sat down with Georgi Kadrev to talk about technology. Surprisingly, this hi-tech service is built on top of standard low-tech components and lots of hard work.

Main Technologies

Core functionality is developed in C and C++ with the OpenCV library. Imagga relies heavily on its own image processing algorithms for its core features. These were built from a combination of Imagga's own research and publications by other researchers.

Image processing is executed by worker nodes configured with their own software stack. Nodes are distributed among Amazon EC2 and other data centers.

Client libraries for the Imagga API are available in PHP, Ruby and Java.

Imagga has built several websites to showcase their technology. Cropp.me, ColorsLike.me, StockPodium and AutoTag.me were built with PHP, JavaScript and jQuery on top of a standard LAMP stack.

Recently Imagga also started using GPU computing with nVidia Tesla cards. They use C++ and Python bindings for CUDA.

Why Not Something Else?

As an initially bootstrapping start-up we chose something that is basically free, reliable and popular - that's why we started with the LAMP stack. It proved to be stable and convenient for our web needs, so we kept it. C++ is a natural choice for the computationally intensive tasks that we need to perform for our core expertise - image processing. Though we initially wrote the whole core technology code from scratch, we later switched to OpenCV for some of the building blocks, as it is a very well optimized and continuously extended image processing library.

With the rise of affordable high-performance GPU processors and their availability in server instances, we decided it's time to take advantage of this highly parallel architecture, which is perfectly suited to image processing tasks.

Georgi Kadrev

Want More Info?

If you’d like to hear more from Imagga please comment below. I will ask them to follow this thread and reply to your questions.

Performance Test: Amazon EBS vs. Instance Storage, Pt.1

I'm exploring ways to speed up my cloud database, so I've run some basic tests against the storage options available to Amazon EC2 instances. The instance was an m1.large with High I/O performance and two additional disks of the same size:

- /dev/xvdb - type EBS

- /dev/xvdc - type instance storage

Both are Xen para-virtual disks. The difference is that EBS is persistent across reboots while instance storage is ephemeral.

hdparm

For a quick test I used hdparm. The manual says:

-T Perform timings of cache reads for benchmark and comparison purposes. This displays the speed of reading directly from the Linux buffer cache without disk access. This measurement is essentially an indication of the throughput of the processor, cache, and memory of the system under test.

-t Perform timings of device reads for benchmark and comparison purposes. This displays the speed of reading through the buffer cache to the disk without any prior caching of data. This measurement is an indication of how fast the drive can sustain sequential data reads under Linux, without any filesystem overhead.

The results of 3 runs of hdparm are shown below:

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11984 MB in 1.98 seconds = 6038.36 MB/sec

Timing buffered disk reads: 158 MB in 3.01 seconds = 52.52 MB/sec

/dev/xvdc:

Timing cached reads: 11988 MB in 1.98 seconds = 6040.01 MB/sec

Timing buffered disk reads: 1810 MB in 3.00 seconds = 603.12 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11892 MB in 1.98 seconds = 5991.51 MB/sec

Timing buffered disk reads: 172 MB in 3.00 seconds = 57.33 MB/sec

/dev/xvdc:

Timing cached reads: 12056 MB in 1.98 seconds = 6075.29 MB/sec

Timing buffered disk reads: 1972 MB in 3.00 seconds = 657.11 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11994 MB in 1.98 seconds = 6042.39 MB/sec

Timing buffered disk reads: 254 MB in 3.02 seconds = 84.14 MB/sec

/dev/xvdc:

Timing cached reads: 11890 MB in 1.99 seconds = 5989.70 MB/sec

Timing buffered disk reads: 1962 MB in 3.00 seconds = 653.65 MB/sec

Result: Sequential reads from instance storage are about 10x faster than from EBS, averaged over the three runs.
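The arithmetic behind that figure can be checked with a short script. This is only a sketch: the throughput values are copied from the "Timing buffered disk reads" lines of the three runs above, and the variable names are my own.

```python
from statistics import mean

# Buffered disk read throughput in MB/sec, copied from the three hdparm runs.
ebs_mb_per_sec = [52.52, 57.33, 84.14]          # /dev/xvdb (EBS)
instance_mb_per_sec = [603.12, 657.11, 653.65]  # /dev/xvdc (instance storage)

ebs_avg = mean(ebs_mb_per_sec)
instance_avg = mean(instance_mb_per_sec)
speedup = instance_avg / ebs_avg

print(f"EBS average:              {ebs_avg:.2f} MB/sec")
print(f"Instance storage average: {instance_avg:.2f} MB/sec")
print(f"Speed-up:                 {speedup:.1f}x")
```

The averages come out at roughly 65 MB/sec for EBS and 638 MB/sec for instance storage. Note that the EBS readings vary a lot between runs (52 to 84 MB/sec), so three samples give only a rough estimate.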

IOzone

I'm running MySQL, and sequential data reads are probably an overly idealistic scenario. So I found another benchmark suite, called IOzone. I used the 3-414 version built from the official SRPM.

IOzone performs multiple tests. I'm interested in read/re-read, random-read/write, read-backwards and stride-read.
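To make the difference between these access patterns concrete, here is a simplified illustration of the order in which records would be touched in each read test. It is not IOzone's implementation: the function name, the parameters and the exact stride semantics are assumptions made for the example, and re-read and random-write are left out.

```python
import random


def record_offsets(pattern, file_size, record_size, stride=4, seed=0):
    """Return the byte offsets of the records read, in access order.

    Hypothetical helper for illustration only; sizes are in bytes and
    `stride` is counted in records.
    """
    count = file_size // record_size
    sequential = [i * record_size for i in range(count)]
    if pattern == "read":
        return sequential                  # start to end, one record at a time
    if pattern == "read-backwards":
        return sequential[::-1]            # end to start
    if pattern == "random-read":
        shuffled = sequential[:]
        random.Random(seed).shuffle(shuffled)
        return shuffled                    # every record once, in random order
    if pattern == "stride-read":
        return sequential[::stride]        # read one record, skip ahead, repeat
    raise ValueError(f"unknown pattern: {pattern}")


for name in ("read", "read-backwards", "random-read", "stride-read"):
    print(name, record_offsets(name, file_size=32, record_size=4))
```

A database workload is usually closer to the random and strided patterns than to the purely sequential one, which is why these tests matter more to me than the hdparm numbers.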

For this round of testing I used the ext4 filesystem, with and without a journal, on both types of disks. I also experimented with running IOzone inside a ramfs-mounted directory. However, I didn't have the time to run the test suite multiple times.

Then I used iozone-results-comparator to visualize the results. (I had to make a minor fix to the code so it would run inside a virtualenv, and install all the missing dependencies.)

Raw IOzone output, data visualization and the modified tools are available in the aws_disk_benchmark_w_iozone.tar.bz2 file (size 51M).

Graphics

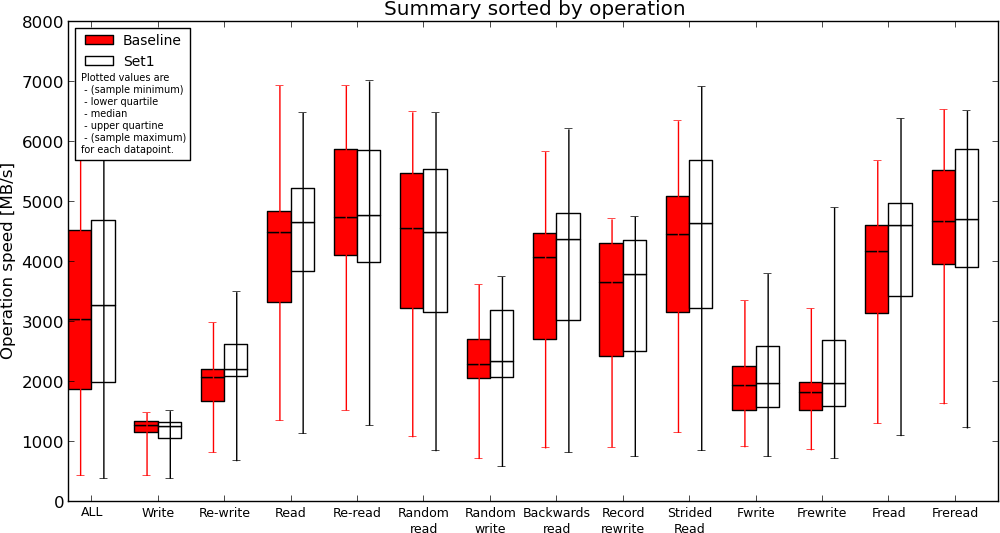

EBS without journal (Baseline) vs. Instance Storage without journal (Set1)

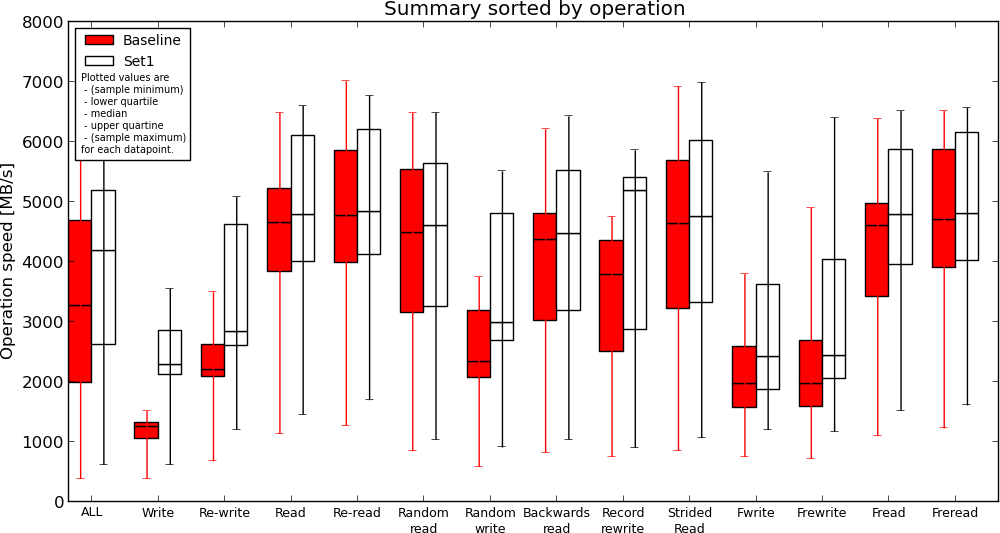

Instance Storage without journal (Baseline) vs. Ramfs (Set1)

Results

- The ext4 journal has no effect on reads but slows down writes to disk. This is expected.

- Instance storage is faster than EBS, but not by much. If I understand the results correctly, read performance is similar in some cases.

- Ramfs is definitely the fastest, but its read performance is not two-fold (or more) better than instance storage, as I had expected.

Conclusion

Instance storage appears to be faster (and this is expected), but I'm still not sure whether my application will gain any speed, or how much, if it is migrated to read from instance storage (or ramfs) instead of EBS. I will be performing more real-world tests next time, by comparing execution times for some of my largest SQL queries.

If you have other ideas on how to adequately measure I/O performance in the AWS cloud, please use the comments below.
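A query-timing comparison like that could be sketched roughly as follows. This is an assumption about how such a test might look, not the test I actually ran: `time_query` is a hypothetical helper, and an in-memory SQLite database with a made-up table stands in for MySQL so the example runs anywhere. The helper only uses the standard Python DB-API cursor calls, so the same function should work with a MySQL driver connection.

```python
import sqlite3
import time
from statistics import median


def time_query(connection, sql, repeats=5):
    """Run `sql` `repeats` times and return the median wall-clock time in seconds."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        cursor = connection.cursor()
        cursor.execute(sql)
        cursor.fetchall()  # force the whole result set to be read
        cursor.close()
        timings.append(time.perf_counter() - start)
    return median(timings)


# Stand-in database and table (hypothetical); replace with a real connection
# to the database stored on the disk under test.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (id INTEGER PRIMARY KEY, value INTEGER)")
conn.executemany("INSERT INTO t (value) VALUES (?)", ((i % 100,) for i in range(10000)))
conn.commit()

elapsed = time_query(conn, "SELECT value, COUNT(*) FROM t GROUP BY value")
print(f"median query time: {elapsed * 1000:.2f} ms")
```

Repeated runs of the same query are likely to be served from the database's own caches and the Linux buffer cache rather than from disk, so a fair comparison between EBS and instance storage would need to start each measurement with cold caches.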
