Performance test: Amazon ElastiCache vs Amazon S3

Which Django cache backend is faster? Amazon ElastiCache or Amazon S3?

I've previously written about using Django's cache to keep state between HTTP requests. In the demo described there I used django-s3-cache. Now that it is time to move to production, I decided to measure the performance difference between the two cache options available on Amazon Web Services.

Update 2013-07-01: my initial test may have been invalid because I had not configured ElastiCache access properly. I saw no errors, but today I discovered the same issue on another system, which was failing to store cache keys without reporting any errors either. I've re-run the tests; the updated times are shown below.

Test infrastructure

- One Amazon S3 bucket, located in US Standard (aka US East) region;

- One Amazon ElastiCache cluster with one Small Cache Node (cache.m1.small) with Moderate I/O capacity;

- One Amazon ElastiCache cluster with one Large Cache Node (cache.m1.large) with High I/O capacity;

- Update: I've tested both the python-memcached and pylibmc client libraries for Django;

- Update: The test is executed from an EC2 node in the us-east-1a availability zone;

- Update: The cache clusters are in the us-east-1a availability zone.

Test Scenario

The test platform is Django. I've created a skeleton project with only the CACHES settings defined and the necessary dependencies installed. A file called test.py holds the test cases, which use the standard timeit module. The object stored in the cache is very small: it holds phone/address identifiers and a couple of user-made selections.
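For reference, a CACHES setting for this kind of comparison might look roughly like the sketch below. The alias names (`pymc`, `libmc`, `libmc_large`) and the endpoint host names are hypothetical, and the `default` entry uses Django's local-memory backend only as a stand-in: in the actual test it pointed at django-s3-cache, whose settings come from that package's documentation and are not reproduced here. `MemcachedCache` (python-memcached) and `PyLibMCCache` (pylibmc) are Django's two memcached backends of that era; `MemcachedCache` was later removed in Django 4.1.

```python
# settings.py fragment (sketch). Alias names and host names are hypothetical.
CACHES = {
    # Stand-in only: the original test used django-s3-cache as 'default'.
    'default': {
        'BACKEND': 'django.core.cache.backends.locmem.LocMemCache',
    },
    # Small ElastiCache node accessed through python-memcached.
    'pymc': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': 'small-node.example.cache.amazonaws.com:11211',
    },
    # The same small node accessed through pylibmc.
    'libmc': {
        'BACKEND': 'django.core.cache.backends.memcached.PyLibMCCache',
        'LOCATION': 'small-node.example.cache.amazonaws.com:11211',
    },
    # Large node accessed through pylibmc.
    'libmc_large': {
        'BACKEND': 'django.core.cache.backends.memcached.PyLibMCCache',
        'LOCATION': 'large-node.example.cache.amazonaws.com:11211',
    },
}
```

Each memcached timer would then obtain its backend with `cache.get_cache('pymc')` and so on, in the same way the S3 timers use `'default'`.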

The code looks like this:

```python
import timeit

# Store the same small object under 1000 integer keys (0..999).
s3_set = timeit.Timer(
"""
for i in range(1000):
    my_cache.set(i, MyObject)
""",
"""
from django.core import cache
# get_cache() is the Django 1.x API of the time; newer versions use
# django.core.cache.caches['default'] instead.
my_cache = cache.get_cache('default')
MyObject = {
    'from' : '359123456789',
    'address' : '6afce9f7-acff-49c5-9fbe-14e238f73190',
    'hour' : '12:30',
    'weight' : 5,
    'type' : 1,
}
"""
)

# Read the same 1000 keys back.
s3_get = timeit.Timer(
"""
for i in range(1000):
    MyObject = my_cache.get(i)
""",
"""
from django.core import cache
my_cache = cache.get_cache('default')
"""
)
```

Tests were executed from the Django shell on an EC2 instance in the us-east-1a availability zone. The ElastiCache nodes were freshly created or rebooted before test execution, and the S3 bucket was empty.

```
$ ./manage.py shell
Python 2.6.8 (unknown, Mar 14 2013, 09:31:22)
[GCC 4.6.2 20111027 (Red Hat 4.6.2-2)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
(InteractiveConsole)
>>> from test import *
>>> s3_set.repeat(repeat=3, number=1)
[68.089607000350952, 70.806712865829468, 72.49261999130249]
>>> s3_get.repeat(repeat=3, number=1)
[43.778793096542358, 43.054368019104004, 36.19232702255249]
>>> pymc_set.repeat(repeat=3, number=1)
[0.40637087821960449, 0.3568730354309082, 0.35815882682800293]
>>> pymc_get.repeat(repeat=3, number=1)
[0.35759496688842773, 0.35180497169494629, 0.39198613166809082]
>>> libmc_set.repeat(repeat=3, number=1)
[0.3902890682220459, 0.30157709121704102, 0.30596804618835449]
>>> libmc_get.repeat(repeat=3, number=1)
[0.28874802589416504, 0.30520200729370117, 0.29050207138061523]
>>> libmc_large_set.repeat(repeat=3, number=1)
[1.0291709899902344, 0.31709098815917969, 0.32010698318481445]
>>> libmc_large_get.repeat(repeat=3, number=1)
[0.2957158088684082, 0.29067802429199219, 0.29692888259887695]
```

Results

As expected, ElastiCache is much faster than S3: the best runs above work out to roughly 0.3 to 0.4 ms per operation on ElastiCache versus 36 to 68 ms on S3. However, the difference between the two ElastiCache node types is subtle, so I will stay with the smallest node to minimize costs. pylibmc is also a bit faster than the pure-Python python-memcached client.
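The raw `repeat()` timings are easier to compare as per-call latencies. The sketch below only re-uses the numbers printed in the shell session (it does not re-run the benchmark), takes the best of the three runs, as the timeit documentation suggests for `repeat()`, and divides by the 1000 calls made in each run.

```python
# Per-operation latency from the timeit results above.
# Each run performs 1000 set() or get() calls, so seconds per run
# divided by 1000 gives seconds per call.
OPS_PER_RUN = 1000

results = {
    's3_set': [68.089607000350952, 70.806712865829468, 72.49261999130249],
    's3_get': [43.778793096542358, 43.054368019104004, 36.19232702255249],
    'pymc_set': [0.40637087821960449, 0.3568730354309082, 0.35815882682800293],
    'pymc_get': [0.35759496688842773, 0.35180497169494629, 0.39198613166809082],
    'libmc_set': [0.3902890682220459, 0.30157709121704102, 0.30596804618835449],
    'libmc_get': [0.28874802589416504, 0.30520200729370117, 0.29050207138061523],
    'libmc_large_set': [1.0291709899902344, 0.31709098815917969, 0.32010698318481445],
    'libmc_large_get': [0.2957158088684082, 0.29067802429199219, 0.29692888259887695],
}

def best_ms_per_op(times, ops=OPS_PER_RUN):
    """Best (minimum) run time converted to milliseconds per operation."""
    return min(times) / ops * 1000.0

for name, times in sorted(results.items()):
    print('%-16s %8.3f ms/op' % (name, best_ms_per_op(times)))

# Speed-up of the small node (pylibmc) over S3, using the best runs.
print('set speed-up: %.0fx' % (min(results['s3_set']) / min(results['libmc_set'])))
print('get speed-up: %.0fx' % (min(results['s3_get']) / min(results['libmc_get'])))
```

Using the minimum rather than the mean keeps one-off outliers, such as the 1.03 s first run of `libmc_large_set`, from skewing the comparison.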

Depending on your object size or how many set/get operations you perform per second, you may need a larger node. Just test it!

It surprised me how slow django-s3-cache is. The invalid test showed django-s3-cache to be 100x slower, but the new results are better. A 10x decrease in performance sounds about right for a filesystem-backed cache.

A quick look at the code of the two backends shows some differences. The first one I noticed is that for every cache key django-s3-cache creates a SHA1 hash, which is used as the storage file name. This was modeled after the filesystem backend, but I think the design is wrong: the memcached backends don't do this.
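To illustrate the difference: a filesystem-style backend turns each cache key into a hash and uses the hex digest as the file (object) name, whereas the memcached backends send the key itself after Django's usual prefix/version decoration. In the sketch below, `s3_object_name` and `memcached_key` are hypothetical helpers, not django-s3-cache's actual code, and the key format only mimics Django's default key function (`prefix:version:key`) with an empty prefix.

```python
import hashlib

def memcached_key(key, prefix='', version=1):
    """Mimic Django's default key function: 'prefix:version:key'."""
    return '%s:%s:%s' % (prefix, version, key)

def s3_object_name(key, prefix='', version=1):
    """Hypothetical: hash the full key and use the SHA1 hex digest as the
    object name, the way filesystem-style cache backends name their files."""
    full_key = memcached_key(key, prefix, version)
    return hashlib.sha1(full_key.encode('utf-8')).hexdigest()

print(memcached_key(42))    # → :1:42 (sent to memcached as-is)
print(s3_object_name(42))   # 40 hex characters, one extra SHA1 per call
```

Hashing does buy fixed-length names that are always safe to use as object keys, but it costs one hash computation on every `get()` and `set()`, a step the memcached backends skip.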

Another is that django-s3-cache timestamps all objects and uses pickle to serialize them. I wonder whether it could write them directly as binary blobs instead. There is definitely lots of room for improvement in django-s3-cache, and I will let you know my findings once I get to it.
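The serialization overhead can be sketched as follows. All three functions are hypothetical and not taken from django-s3-cache: `pack_with_expiry` pickles an expiry timestamp ahead of the pickled value, similar in spirit to Django's file-based cache, while `pack_raw` stores only the pickled value. A cache holding arbitrary Python objects still needs some serialization; the raw variant merely drops the timestamp bookkeeping, so expiry would have to be handled elsewhere.

```python
import io
import pickle
import time

def pack_with_expiry(value, timeout=300):
    """Hypothetical: pickle an expiry timestamp, then the value."""
    buf = io.BytesIO()
    pickle.dump(time.time() + timeout, buf, pickle.HIGHEST_PROTOCOL)
    pickle.dump(value, buf, pickle.HIGHEST_PROTOCOL)
    return buf.getvalue()

def unpack_with_expiry(blob):
    """Return the stored value, or None if its expiry time has passed."""
    buf = io.BytesIO(blob)
    expiry = pickle.load(buf)
    if expiry < time.time():
        return None
    return pickle.load(buf)

def pack_raw(value):
    """Hypothetical: only the pickled value, no timestamp bookkeeping."""
    return pickle.dumps(value, pickle.HIGHEST_PROTOCOL)

obj = {'from': '359123456789', 'hour': '12:30', 'weight': 5, 'type': 1}
print(len(pack_with_expiry(obj)), len(pack_raw(obj)))
print(unpack_with_expiry(pack_with_expiry(obj)) == obj)   # → True
```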


Performance Test: Amazon EBS vs. Instance Storage, Pt.1

I'm exploring ways to speed up my cloud database, so I've run some basic tests against the storage options available to Amazon EC2 instances. The instance was an m1.large with High I/O performance and two additional disks of the same size:

- /dev/xvdb - type EBS

- /dev/xvdc - type instance storage

Both are Xen para-virtual disks. The difference is that EBS is persistent across reboots while instance storage is ephemeral.

hdparm

For a quick test I used hdparm. The manual says:

```
-T   Perform timings of cache reads for benchmark and comparison purposes.
     This displays the speed of reading directly from the Linux buffer cache
     without disk access. This measurement is essentially an indication of
     the throughput of the processor, cache, and memory of the system under test.

-t   Perform timings of device reads for benchmark and comparison purposes.
     This displays the speed of reading through the buffer cache to the disk
     without any prior caching of data. This measurement is an indication of how
     fast the drive can sustain sequential data reads under Linux, without any
     filesystem overhead.
```

The results of 3 runs of hdparm are shown below:

```
# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:
 Timing cached reads:   11984 MB in  1.98 seconds = 6038.36 MB/sec
 Timing buffered disk reads: 158 MB in  3.01 seconds =  52.52 MB/sec

/dev/xvdc:
 Timing cached reads:   11988 MB in  1.98 seconds = 6040.01 MB/sec
 Timing buffered disk reads: 1810 MB in  3.00 seconds = 603.12 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:
 Timing cached reads:   11892 MB in  1.98 seconds = 5991.51 MB/sec
 Timing buffered disk reads: 172 MB in  3.00 seconds =  57.33 MB/sec

/dev/xvdc:
 Timing cached reads:   12056 MB in  1.98 seconds = 6075.29 MB/sec
 Timing buffered disk reads: 1972 MB in  3.00 seconds = 657.11 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:
 Timing cached reads:   11994 MB in  1.98 seconds = 6042.39 MB/sec
 Timing buffered disk reads: 254 MB in  3.02 seconds =  84.14 MB/sec

/dev/xvdc:
 Timing cached reads:   11890 MB in  1.99 seconds = 5989.70 MB/sec
 Timing buffered disk reads: 1962 MB in  3.00 seconds = 653.65 MB/sec
```

Result: sequential reads from instance storage are about 10x faster than from EBS on average.
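The 10x figure can be checked by averaging the "buffered disk reads" lines per device. The sketch below parses an excerpt of the hdparm output above (the cached-read lines are left out because they measure memory, not the disk).

```python
import re

# Excerpt of the hdparm output above: device headers and the
# "buffered disk reads" lines, which measure sequential disk reads.
OUTPUT = """
/dev/xvdb:
 Timing buffered disk reads: 158 MB in 3.01 seconds = 52.52 MB/sec
/dev/xvdc:
 Timing buffered disk reads: 1810 MB in 3.00 seconds = 603.12 MB/sec
/dev/xvdb:
 Timing buffered disk reads: 172 MB in 3.00 seconds = 57.33 MB/sec
/dev/xvdc:
 Timing buffered disk reads: 1972 MB in 3.00 seconds = 657.11 MB/sec
/dev/xvdb:
 Timing buffered disk reads: 254 MB in 3.02 seconds = 84.14 MB/sec
/dev/xvdc:
 Timing buffered disk reads: 1962 MB in 3.00 seconds = 653.65 MB/sec
"""

def buffered_read_rates(text):
    """Map each device to the list of its buffered-read rates in MB/sec."""
    rates = {}
    device = None
    for line in text.splitlines():
        line = line.strip()
        if line.startswith('/dev/') and line.endswith(':'):
            device = line[:-1]
        m = re.search(r'buffered disk reads:.*=\s*([\d.]+) MB/sec', line)
        if m and device:
            rates.setdefault(device, []).append(float(m.group(1)))
    return rates

rates = buffered_read_rates(OUTPUT)
avg = {dev: sum(v) / len(v) for dev, v in rates.items()}
for dev in sorted(avg):
    print('%s: %.2f MB/sec average' % (dev, avg[dev]))
print('instance storage / EBS: %.1fx' % (avg['/dev/xvdc'] / avg['/dev/xvdb']))
```

The ratio comes out just under 10x, driven mostly by EBS's low and variable throughput (52 to 84 MB/sec across the three runs).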

IOzone

I'm running MySQL, and sequential data reads are probably an overly idealistic scenario. So I found another benchmark suite, called IOzone. I used version 3-414, built from the official SRPM.

IOzone performs multiple tests. I'm interested in read/re-read, random-read/write, read-backwards and stride-read.

For this round of testing I used an ext4 filesystem, with and without a journal, on both types of disk. I also experimented with running IOzone inside a ramfs-mounted directory. However, I didn't have time to run the test suite multiple times.
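For anyone reproducing this, the tests of interest map onto IOzone's `-i` test numbers (0 = write/rewrite, 1 = read/re-read, 2 = random-read/write, 3 = read-backwards, 5 = stride-read). Test 0 is included because IOzone needs it to create the file the other tests read. The exact options used for the runs in this post are not stated, so the sketch below is only a starting point: it builds a command line in automatic mode with an Excel-compatible report, and the mount point and report file name are hypothetical.

```python
# IOzone test numbers for the tests discussed above.
IOZONE_TESTS = {
    'write/rewrite': 0,        # required: creates the test file
    'read/re-read': 1,
    'random-read/write': 2,
    'read-backwards': 3,
    'stride-read': 5,
}

def iozone_command(test_file, report):
    """Build an IOzone command line (automatic mode, Excel report)."""
    cmd = ['iozone', '-a', '-R', '-b', report, '-f', test_file]
    for number in sorted(IOZONE_TESTS.values()):
        cmd += ['-i', str(number)]
    return cmd

# Hypothetical paths: an ext4 filesystem mounted from /dev/xvdc.
cmd = iozone_command('/mnt/xvdc/iozone.tmp', 'xvdc-nojournal.xls')
print(' '.join(cmd))
```

An ext4 filesystem without a journal can be created with `mkfs.ext4 -O ^has_journal` on the target device before mounting it.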

Then I used iozone-results-comparator to visualize the results. (I had to make a minor fix to its code so it would run inside a virtualenv, and install all the missing dependencies.)

Raw IOzone output, data visualization and the modified tools are available in the aws_disk_benchmark_w_iozone.tar.bz2 file (size 51M).

Graphics

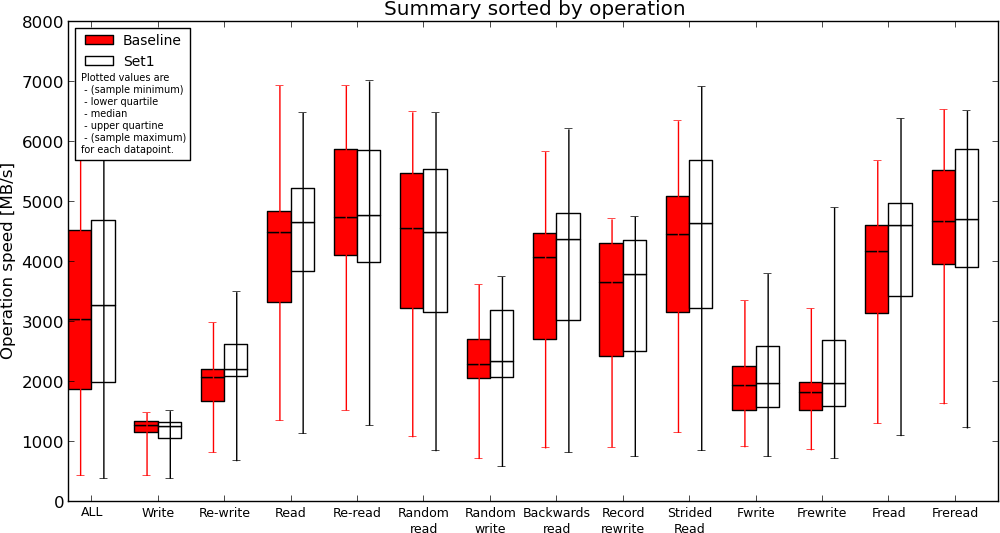

EBS without journal (Baseline) vs. Instance Storage without journal (Set1)

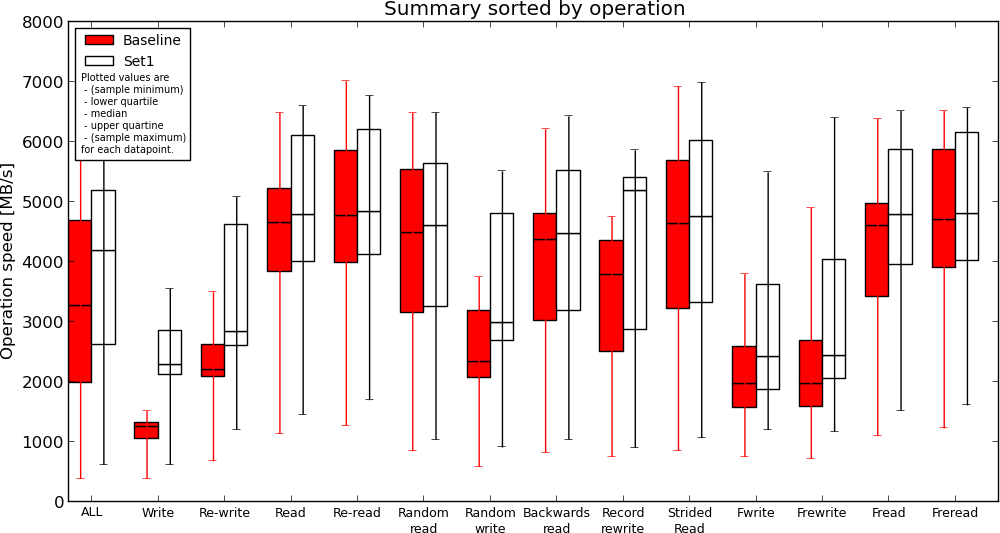

Instance Storage without journal (Baseline) vs. Ramfs (Set1)

Results

- The ext4 journal has no effect on reads but slows down writes to disk. This is expected;

- Instance storage is faster than EBS, but not by much. If I understand the results correctly, read performance is similar in some cases;

- Ramfs is definitely the fastest, but its read performance is not two-fold (or more) that of instance storage, as I had expected.

Conclusion

Instance storage appears to be faster (as expected), but I'm still not sure whether my application would gain any speed, or how much, if it were migrated to read from instance storage (or ramfs) instead of EBS. Next time I will perform more real-world tests by comparing execution times for some of my largest SQL queries.

If you have other ideas how to adequately measure I/O performance in the AWS cloud, please use the comments below.


Performance test of MD5, SHA1, SHA256 and SHA512

A few months ago I wrote django-s3-cache, an Amazon Simple Storage Service (S3) cache backend for Django which uses hashed file names. django-s3-cache uses SHA1 instead of MD5 because SHA1 appeared to be faster at the time. I recall that my testing wasn't very robust, so I did another round.

Test Data

The file urls.txt contains 10000 unique paths from the dif.io website and looks like this:

```
/updates/Django-1.3.1/Django-1.3.4/7858/
/updates/delayed_paperclip-2.4.5.2 c23a537/delayed_paperclip-2.4.5.2/8085/
/updates/libv8-3.3.10.4 x86_64-darwin-10/libv8-3.3.10.4/8087/
/updates/Data::Compare-1.22/Data::Compare-Type/8313/
/updates/Fabric-1.4.0/Fabric-1.4.4/8652/
```

Test Automation

I used the standard timeit module in Python.

```python
#!/usr/bin/python
import timeit

# Hash every URL path once; uncomment one line to pick the algorithm.
t = timeit.Timer(
"""
import hashlib
for line in url_paths:
    h = hashlib.md5(line).hexdigest()
    # h = hashlib.sha1(line).hexdigest()
    # h = hashlib.sha256(line).hexdigest()
    # h = hashlib.sha512(line).hexdigest()
""",
"""
url_paths = []
f = open('urls.txt', 'r')
for l in f.readlines():
    url_paths.append(l)
f.close()
"""
)

# Python 2 syntax: run the statement 1000 times, and repeat that 3 times.
print t.repeat(repeat=3, number=1000)
```

Test Results

The main statement hashes all 10000 entries one by one. This statement is executed 1000 times in a loop, which is repeated 3 times. I have Python 2.6.6 on my system, and the system was rebooted after every test run. Execution times in seconds are shown below.

MD5 10.275190830230713, 10.155328989028931, 10.250311136245728

SHA1 11.985718965530396, 11.976419925689697, 11.86873197555542

SHA256 16.662450075149536, 21.551337003707886, 17.016510963439941

SHA512 18.339390993118286, 18.11187481880188, 18.085782051086426

Looks like I was wrong the first time! MD5 is faster, but not by much. I will stick with SHA1 for the time being.
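To put "not by much" in numbers: each timed statement hashes 10000 paths and runs 1000 times, so every figure above covers 10,000,000 hash calls. The sketch below divides the best run by that count to get an approximate per-call cost (which also includes loop and `hexdigest()` overhead); the timings are copied from the results above.

```python
CALLS_PER_RUN = 10000 * 1000  # 10000 paths x number=1000

results = {
    'MD5': [10.275190830230713, 10.155328989028931, 10.250311136245728],
    'SHA1': [11.985718965530396, 11.976419925689697, 11.86873197555542],
    'SHA256': [16.662450075149536, 21.551337003707886, 17.016510963439941],
    'SHA512': [18.339390993118286, 18.11187481880188, 18.085782051086426],
}

# Compare every algorithm's best run against MD5's best run.
baseline = min(results['MD5'])
for name in ('MD5', 'SHA1', 'SHA256', 'SHA512'):
    best = min(results[name])
    print('%-6s %.3f us/hash, %.2fx MD5' % (name, best / CALLS_PER_RUN * 1e6, best / baseline))
```

By this measure SHA1 costs roughly 17% more than MD5 per call. The SHA256 runs vary more than the others (16.7 to 21.6 seconds), which is another reason to compare best runs rather than means.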


As always I’d love to hear your thoughts and feedback. Please use the comment form below.

Python 2.7 vs. 3.6 and BLAKE2

UPDATE: added on June 9th 2017

At the request of my reader refi64, I've re-run this test on different versions of Python and included a few more hash functions. The test data is the same; the test script was slightly modified for Python 3:

```python
import timeit

# Uncomment exactly one hash function per run.
# blake2b/blake2s are only available in hashlib from Python 3.6.
print (timeit.repeat(
"""
import hashlib
for line in url_paths:
    # h = hashlib.md5(line).hexdigest()
    # h = hashlib.sha1(line).hexdigest()
    # h = hashlib.sha256(line).hexdigest()
    # h = hashlib.sha512(line).hexdigest()
    # h = hashlib.blake2b(line).hexdigest()
    h = hashlib.blake2s(line).hexdigest()
""",
"""
# hashlib needs bytes in Python 3, so encode each line up front.
url_paths = [l.encode('utf8') for l in open('urls.txt', 'r').readlines()]
""",
repeat=3, number=1000))
```

The test was repeated 3 times for each hash function, and the best time was taken into account. It was performed on a recent Fedora 26 system. The results are as follows:

Python 2.7.13

MD5 [13.94771409034729, 13.931367874145508, 13.908519983291626]

SHA1 [15.20741891860962, 15.241390943527222, 15.198163986206055]

SHA256 [17.22162389755249, 17.229840993881226, 17.23402190208435]

SHA512 [21.557533979415894, 21.51376700401306, 21.522911071777344]

Python 3.6.1

MD5 [11.770181038000146, 11.778772834999927, 11.774679265000032]

SHA1 [11.5838599839999, 11.580340686999989, 11.585769942999832]

SHA256 [14.836309305999976, 14.847088003999943, 14.834776135999846]

SHA512 [19.820048629999746, 19.77282728099999, 19.778471210000134]

BLAKE2b [12.665497404000234, 12.668979115000184, 12.667314543999964]

BLAKE2s [11.024885618000098, 11.117366972000127, 10.966767880999669]

- Python 3.6 is faster than Python 2.7 for every function tested

- Under Python 3.6, SHA1 is a bit faster than MD5; maybe there has been some optimization

- BLAKE2b is faster than SHA256 and SHA512

- BLAKE2s is the fastest of all functions
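These observations follow from taking the best of the three runs for each function. A small sketch that does the same with the Python 3.6.1 numbers above, sorted fastest first:

```python
py36 = {
    'MD5': [11.770181038000146, 11.778772834999927, 11.774679265000032],
    'SHA1': [11.5838599839999, 11.580340686999989, 11.585769942999832],
    'SHA256': [14.836309305999976, 14.847088003999943, 14.834776135999846],
    'SHA512': [19.820048629999746, 19.77282728099999, 19.778471210000134],
    'BLAKE2b': [12.665497404000234, 12.668979115000184, 12.667314543999964],
    'BLAKE2s': [11.024885618000098, 11.117366972000127, 10.966767880999669],
}

# Best (minimum) time per hash function, fastest first.
ranking = sorted(py36, key=lambda name: min(py36[name]))
for name in ranking:
    print('%-8s %.3f s' % (name, min(py36[name])))
```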

Note: BLAKE2b is optimized for 64-bit platforms like mine, and I thought it would be faster than BLAKE2s (optimized for 8- to 32-bit platforms), but that's not the case. I'm not sure why, though. If you know, please let me know in the comments below!
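One hypothesis worth checking, not tested in this post, is message length: the URL paths are short, and BLAKE2s works on 64-byte blocks with 10 rounds while BLAKE2b works on 128-byte blocks with 12 rounds, so for short inputs BLAKE2s's smaller fixed cost per call may win, while BLAKE2b's 64-bit design might only pay off on longer messages. The sketch below times both functions across a few input sizes; the sizes and iteration count are arbitrary, and it needs Python 3.6 or later.

```python
import hashlib
import timeit

def time_blake2(sizes=(16, 64, 256, 4096), number=20000):
    """Return {size: (blake2b_seconds, blake2s_seconds)} for `number`
    hashes of a `size`-byte message. Sizes and count are arbitrary."""
    results = {}
    for size in sizes:
        data = b'x' * size
        tb = timeit.timeit(lambda: hashlib.blake2b(data).hexdigest(), number=number)
        ts = timeit.timeit(lambda: hashlib.blake2s(data).hexdigest(), number=number)
        results[size] = (tb, ts)
    return results

if __name__ == '__main__':
    for size, (tb, ts) in sorted(time_blake2().items()):
        print('%5d bytes  blake2b %.3f s  blake2s %.3f s  b/s ratio %.2f'
              % (size, tb, ts, tb / ts))
```

If the ratio drops below 1 as the size grows, input length would be a plausible explanation for the result above.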
