Idempotent Django Email Sender with Amazon SQS and Memcache

Recently I wrote about my problem with duplicate Amazon SQS messages causing multiple emails for Difio. After considering several options and feedback from @Answers4AWS I wrote a small decorator to fix this.

It uses the cache backend to prevent the task from executing twice during the specified time frame. The code is available at https://djangosnippets.org/snippets/3010/.

As stated on Twitter you should use Memcache (or ElastiCache) for this.

If using Amazon S3 with my

django-s3-cache don't use the

us-east-1 region because it is eventually consistent.

The solution is fast and simple on the development side and uses my existing cache infrastructure so it doesn't cost anything more!

There is still a race condition between marking the message as processed and the second check but nevertheless this should minimize the possibility of receiving duplicate emails to an accepted level. Only time will tell though!

There are comments.

Bug in Python URLGrabber/cURL on Fedora and Amazon Linux

Accidentally I have discovered a bug for Python's URLGrabber module which has to do with change in behavior in libcurl.

>>> from urlgrabber.grabber import URLGrabber

>>> g = URLGrabber(reget=None)

>>> g.urlgrab('https://s3.amazonaws.com/production.s3.rubygems.org/gems/columnize-0.3.6.gem', '/tmp/columnize.gem')

Traceback (most recent call last):

File "<console>", line 1, in <module>

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 976, in urlgrab

return self._retry(opts, retryfunc, url, filename)

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 880, in _retry

r = apply(func, (opts,) + args, {})

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 962, in retryfunc

fo = PyCurlFileObject(url, filename, opts)

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1056, in __init__

self._do_open()

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1307, in _do_open

self._set_opts()

File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1161, in _set_opts

self.curl_obj.setopt(pycurl.SSL_VERIFYHOST, opts.ssl_verify_host)

error: (43, '')

>>>

The code above works fine with curl-7.27 or older while it breaks with curl-7.29 and newer. As explained by Zdenek Pavlas the reason is an internal change in libcurl which doesn't accept a value of 1 anymore!

The bug is reproducible with a newer libcurl version and a vanilla urlgrabber==3.9.1 from PyPI (e.g. inside a virtualenv). The latest python-urlgrabber RPM packages in both Fedora and Amazon Linux already have the fix.

I have tested the patch proposed by Zdenek and it works for me. I still have no idea why there aren't any updates released on PyPI though!

There are comments.

This Week: Python Testing, Chris DiBona on Open Source and OpenShift ENV Variables

Here is a random collection of links I came across this week which appear interesting to me but I don't have time to blog about in details.

Making a Multi-branch Test Server for Python Apps

If you are wondering how to test different feature branches of your Python application but don't have the resources to create separate test servers this is for you: http://depressedoptimism.com/blog/2013/10/8/making-a-multi-branch-test-server!

Kudos to the python-django-bulgaria Google group for finding this link!

OpenSource.com Interview with Chris DiBona

Just read it at http://opensource.com/business/13/10/interview-chris-dibona.

I particularly like the part where he called open source "brutal".

You once called open source “brutal”. What did you mean by that?

...

I think that it is because open source projects are able to only work with the productive people and ignore everyone else. That behavior can come across as very harsh or exclusionary, and that's because it is that: brutally harsh and exclusionary of anyone who isn't contributing.

...

So, I guess what I'm saying is that survival of the fittest as practiced in the open source world is a pretty brutal mechanism, but it works very very well for producing quality software. Boy is it hard on newcomers though...

OpenShift Finally Introduces Environment Variables

Yes! Finally!

rhc set-env VARIABLE1=VALUE1 -a myapp

No need for my work around anymore! I will give the new feature a go very soon.

Read more about it at the OpenShift blog: https://www.openshift.com/blogs/taking-advantage-of-environment-variables-in-openshift-php-apps.

Have you found anything interesting this week? Please share in the comments below! Thanks!

There are comments.

Linux and Python Tools To Compare Images

How to compare two images in Python? A tricky question with quite a few answers. Since my needs are simple, my solution is simpler!

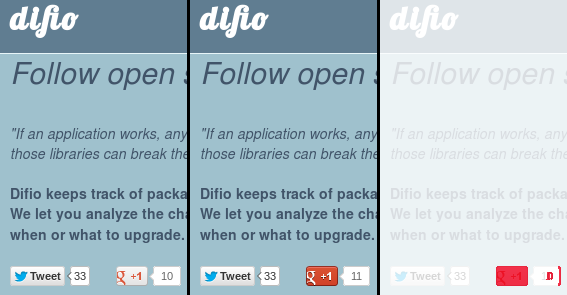

dif.io homepage before and after it got a G+1.

dif.io homepage before and after it got a G+1.

ImageMagic is magic

If you haven't heard of ImageMagic then you've been

living in a cave on a deserted island! The suite contains the compare command

which mathematically and visually annotates the difference between two images.

The third image above was produced with:

$ compare difio_10.png difio_11.png difio_diff.png

Differences are displayed in red (default) and the original image is seen in the

background. As shown, the Google +1 button and count has changed between the two

images. compare is a nice tool for manual inspection and debugging.

It works well in this case because the images are lossless PNGs and are regions of

screen shots where most objects are the same.

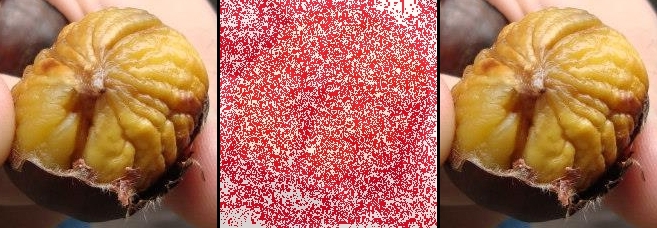

Chestnuts I had in Rome. 100% to 99% quality reduction.

Chestnuts I had in Rome. 100% to 99% quality reduction.

As seen on the second image set only 1% of JPEG quality change leads to many small differences in the image, which are invisible to the naked eye.

Python Imaging Library aka PIL

PIL is another powerful tool for image manipulation. I googled around and found some answers to my original questions here. The proposed solution is to calculate RMS of the two images and compare that with some threshold to establish the level of certainty that two images are identical.

Simple solution

I've been working on a script lately which needs to know what is displayed on the screen and recognize some of the objects. Calculating image similarity is quite complex but comparing if two images are exactly identical is not. Given my environment and the fact that I'm comparing screen shots where only few areas changed (see first image above for example) led to the following solution:

- Take a screen shot;

- Crop a particular area of the image which needs to be examined;

- Compare to a baseline image of the same area created manually;

- Don't use RMS, use the image histogram only to speed up calculation.

I've prepared the baseline images with GIMP and tested couple of scenarios

using compare. Here's how it looks in code:

from PIL import Image

from dogtail.utils import screenshot

baseline_histogram = Image.open('/home/atodorov/baseline.png').histogram()

img = Image.open(screenshot())

region = img.crop((860, 300, 950, 320))

print region.histogram() == baseline_histogram

Results

The presented solution was easy to program, works fast and reliably for my use case. In fact after several iterations I've added a second baseline image to account for some unidentified noise which appears randomly in the first region. As far as I can tell the two checks combined are 100% accurate.

Field of application

I'm working on QA automation where this comes handy. However you may try some lame CAPTCHA recognition by comparing regions to a pre-defined baseline. Let me know if you come up with a cool idea or actually used this in code.

I'd love to hear about interesting projects which didn't get too complicated because of image recognition.

There are comments.

How to Deploy Python Hotfix on RedHat OpenShift Cloud

In this article I will show you how to deploy hotfix versions for Python packages on the RedHat OpenShift PaaS cloud.

Background

You are already running a Python application on your OpenShift instance. You are using some 3rd party dependencies when you find a bug in one of them. You go forward, fix the bug and submit a pull request. You don't want to wait for upstream to release a new version but rather build a hotfix package yourself and deploy to production immediately.

Solution

There are two basic approaches to solving this problem:

- Include the hotfix package source code in your application, i.e. add it to your git tree or;

- Build the hotfix separately and deploy as a dependency. Don't include it in your git tree, just add a requirement on the hotfix version.

I will talk about the later. The tricky part here is to instruct the cloud environment to use your package (including the proper location) and not upstream or their local mirror.

Python applications hosted at OpenShift don't support

requirements.txt which can point to various package sources and even install

packages directly from GitHub. They support setup.py which fetches packages

from http://pypi.python.org but it is flexible enough to support other locations.

Building the hotfix

First of all we'd like to build a hotfix package. This will be the upstream version that we are currently using plus the patch for our critical issue:

$ wget https://pypi.python.org/packages/source/p/python-magic/python-magic-0.4.3.tar.gz

$ tar -xzvf python-magic-0.4.3.tar.gz

$ cd python-magic-0.4.3

$ curl https://github.com/ahupp/python-magic/pull/31.patch | patch

Verify the patch has been applied correctly and then modify setup.py to

increase the version string. In this case I will set it to version='0.4.3.1'.

Then build the new package using python setup.py sdist and upload it to a web server.

Deploying to OpenShift

Modify setup.py and specify the hotfix version. Because this version is not on PyPI

and will not be on OpenShift's local mirror you need to provide the location where it can

be found. This is done with the dependency_links parameter to setup(). Here's how it looks:

diff --git a/setup.py b/setup.py

index c6e837c..2daa2a9 100644

--- a/setup.py

+++ b/setup.py

@@ -6,5 +6,6 @@ setup(name='YourAppName',

author='Your Name',

author_email='example@example.com',

url='http://www.python.org/sigs/distutils-sig/',

- install_requires=['python-magic==0.4.3'],

+ dependency_links=['https://s3.amazonaws.com/atodorov/blog/python-magic-0.4.3.1.tar.gz'],

+ install_requires=['python-magic==0.4.3.1'],

)

Now just git push to OpenShift and observe the console output:

remote: Processing dependencies for YourAppName==1.0

remote: Searching for python-magic==0.4.3.1

remote: Best match: python-magic 0.4.3.1

remote: Downloading https://s3.amazonaws.com/atodorov/blog/python-magic-0.4.3.1.tar.gz

remote: Processing python-magic-0.4.3.1.tar.gz

remote: Running python-magic-0.4.3.1/setup.py -q bdist_egg --dist-dir /tmp/easy_install-ZRVMBg/python-magic-0.4.3.1/egg-dist-tmp-R_Nxie

remote: zip_safe flag not set; analyzing archive contents...

remote: Removing python-magic 0.4.3 from easy-install.pth file

remote: Adding python-magic 0.4.3.1 to easy-install.pth file

Congratulations! Your hotfix package has just been deployed.

This approach should work for other cloud providers and other programming languages as well. Let me know if you have any experience with that.

There are comments.

Using Django built-in template tags and filters in code

In case you are wondering how to use Django's built-in template tags and filters in your source code, not inside a template here is how:

>>> from django.template.defaultfilters import *

>>> filesizeformat(1024)

u'1.0 KB'

>>> filesizeformat(1020)

u'1020 bytes'

>>> filesizeformat(102412354)

u'97.7 MB'

>>>

All built-ins live in pythonX.Y/site-packages/django/template/defaultfilters.py.

There are comments.

Performance test of MD5, SHA1, SHA256 and SHA512

A few months ago I wrote

django-s3-cache.

This is Amazon Simple Storage Service (S3) cache backend for Django

which uses hashed file names.

django-s3-cache uses sha1 instead of md5 which appeared to be

faster at the time. I recall that my testing wasn't very robust so I did another

round.

Test Data

The file urls.txt contains 10000 unique paths from the dif.io website and looks like this:

/updates/Django-1.3.1/Django-1.3.4/7858/

/updates/delayed_paperclip-2.4.5.2 c23a537/delayed_paperclip-2.4.5.2/8085/

/updates/libv8-3.3.10.4 x86_64-darwin-10/libv8-3.3.10.4/8087/

/updates/Data::Compare-1.22/Data::Compare-Type/8313/

/updates/Fabric-1.4.0/Fabric-1.4.4/8652/

Test Automation

I used the standard timeit module in Python.

#!/usr/bin/python

import timeit

t = timeit.Timer(

"""

import hashlib

for line in url_paths:

h = hashlib.md5(line).hexdigest()

# h = hashlib.sha1(line).hexdigest()

# h = hashlib.sha256(line).hexdigest()

# h = hashlib.sha512(line).hexdigest()

"""

,

"""

url_paths = []

f = open('urls.txt', 'r')

for l in f.readlines():

url_paths.append(l)

f.close()

"""

)

print t.repeat(repeat=3, number=1000)

Test Results

The main statement hashes all 10000 entries one by one. This statement is executed 1000 times in a loop, which is repeated 3 times. I have Python 2.6.6 on my system. After every test run the system was rebooted. Execution time in seconds is available below.

MD5 10.275190830230713, 10.155328989028931, 10.250311136245728

SHA1 11.985718965530396, 11.976419925689697, 11.86873197555542

SHA256 16.662450075149536, 21.551337003707886, 17.016510963439941

SHA512 18.339390993118286, 18.11187481880188, 18.085782051086426

Looks like I was wrong the first time! MD5 is still faster but not that much. I will stick with SHA1 for the time being.

If you are interested in Performance Testing checkout the

performance testing books on Amazon.

As always I’d love to hear your thoughts and feedback. Please use the comment form below.

Python 2.7 vs. 3.6 and BLAKE2

UPDATE: added on June 9th 2017

After request from my reader refi64 I've tested this again between different versions of Python and included a few more hash functions. The test data is the same, the test script was slightly modified for Python 3:

import timeit

print (timeit.repeat(

"""

import hashlib

for line in url_paths:

# h = hashlib.md5(line).hexdigest()

# h = hashlib.sha1(line).hexdigest()

# h = hashlib.sha256(line).hexdigest()

# h = hashlib.sha512(line).hexdigest()

# h = hashlib.blake2b(line).hexdigest()

h = hashlib.blake2s(line).hexdigest()

"""

,

"""

url_paths = [l.encode('utf8') for l in open('urls.txt', 'r').readlines()]

""",

repeat=3, number=1000))

Test was repeated 3 times for each hash function and the best time was taken into account. The test was performed on a recent Fedora 26 system. The results are as follows:

Python 2.7.13

MD5 [13.94771409034729, 13.931367874145508, 13.908519983291626]

SHA1 [15.20741891860962, 15.241390943527222, 15.198163986206055]

SHA256 [17.22162389755249, 17.229840993881226, 17.23402190208435]

SHA512 [21.557533979415894, 21.51376700401306, 21.522911071777344]

Python 3.6.1

MD5 [11.770181038000146, 11.778772834999927, 11.774679265000032]

SHA1 [11.5838599839999, 11.580340686999989, 11.585769942999832]

SHA256 [14.836309305999976, 14.847088003999943, 14.834776135999846]

SHA512 [19.820048629999746, 19.77282728099999, 19.778471210000134]

BLAKE2b [12.665497404000234, 12.668979115000184, 12.667314543999964]

BLAKE2s [11.024885618000098, 11.117366972000127, 10.966767880999669]

- Python 3 is faster than Python 2

- SHA1 is a bit faster than MD5, maybe there's been some optimization

- BLAKE2b is faster than SHA256 and SHA512

- BLAKE2s is the fastest of all functions

Note: BLAKE2b is optimized for 64-bit platforms, like mine and I thought it will be faster than BLAKE2s (optimized for 8- to 32-bit platforms) but that's not the case. I'm not sure why is that though. If you do, please let me know in the comments below!

There are comments.

Page 2 / 2