Duplicate Amazon SQS Messages Cause Multiple Emails

Beware if using Amazon Simple Queue Service to send email messages! Sometime SQS messages are duplicated which results in multiple copies of the messages being sent. This happened today at Difio and is really annoying to users. In this post I will explain why there is no easy way of fixing it.

Q: Can a deleted message be received again?

Yes, under rare circumstances you might receive a previously deleted message again. This can occur in the rare situation in which a DeleteMessage operation doesn't delete all copies of a message because one of the servers in the distributed Amazon SQS system isn't available at the time of the deletion. That message copy can then be delivered again. You should design your application so that no errors or inconsistencies occur if you receive a deleted message again.

Amazon FAQ

In my case the cron scheduler logs say:

>>> <AsyncResult: a9e5a73a-4d4a-4995-a91c-90295e27100a>

While on the worker nodes the logs say:

[2013-12-06 10:13:06,229: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]

[2013-12-06 10:18:09,456: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]

This clearly shows the same message (see the UUID) has been processed twice! This resulted in hundreds of duplicate emails :(.

Why This Is Hard To Fix

There are two basic approaches to solve this issue:

- Check some log files or database for previous record of the message having been processed;

- Use idempotent operations that if you process the message again, you get the same results, and that those results don't create duplicate files/records.

The problem with checking for duplicate messages is:

- There is a race condition between marking the message as processed and the second check;

- You need to use some sort of locking mechanism to safe-guard against the race condition;

- In the event of an eventual consistency of the log/DB you can't guarantee that the previous attempt will show up and so can't guarantee that you won't process the message twice.

All of the above don't seem to work well for distributed applications not to mention Difio processes millions of messages per month, per node and the logs are quite big.

The second option is to have control of the Message-Id or some other email header so that the second message will be discarded either at the server (Amazon SES in my case) or at the receiving MUA. I like this better but I don't think it is technically possible with the current environment. Need to check though.

I've asked AWS support to look into this thread and hopefully they will have some more hints. If you have any other ideas please post in the comments! Thanks!

There are comments.

Email Logging for Django on RedHat OpenShift with Amazon SES

Sending email in the cloud can be tricky. IPs of cloud providers are blacklisted because of frequent abuse. For that reason I use Amazon SES as my email backend. Here is how to configure Django to send emails to site admins when something goes wrong.

# Valid addresses only.

ADMINS = (

('Alexander Todorov', 'atodorov@example.com'),

)

LOGGING = {

'version': 1,

'disable_existing_loggers': False,

'handlers': {

'mail_admins': {

'level': 'ERROR',

'class': 'django.utils.log.AdminEmailHandler'

}

},

'loggers': {

'django.request': {

'handlers': ['mail_admins'],

'level': 'ERROR',

'propagate': True,

},

}

}

# Used as the From: address when reporting errors to admins

# Needs to be verified in Amazon SES as a valid sender

SERVER_EMAIL = 'django@example.com'

# Amazon Simple Email Service settings

AWS_SES_ACCESS_KEY_ID = 'xxxxxxxxxxxx'

AWS_SES_SECRET_ACCESS_KEY = 'xxxxxxxx'

EMAIL_BACKEND = 'django_ses.SESBackend'

You also need the django-ses dependency.

See http://docs.djangoproject.com/en/dev/topics/logging for more details on how to customize your logging configuration.

I am using this configuration successfully at RedHat's OpenShift PaaS environment. Other users have reported it works for them too. Should work with any other PaaS provider.

There are comments.

Click Tracking without MailChimp

Here is a standard notification message that users at Difio receive. It is plain text, no HTML crap, short and URLs are clean and descriptive. As the project lead developer I wanted to track when people click on these links and visit the website but also keep existing functionality.

Standard approach

A pretty common approach when sending huge volumes of email is to use an external service, such as MailChimp. This is one of many email marketing services which comes with a lot of features. The most important to me was analytics and reports.

The downside is that MailChimp (and I guess others) use HTML formatted emails extensively. I don't like that and I'm sure my users will not like it as well. They are all developers. Not to mention that MailChimp is much more expensive than Amazon SES which I use currently. No MailChimp for me!

Another common approach, used by Feedburner by the way, is to use shortened URLs which redirect to the original ones and measure clicks in between. I also didn't like this for two reasons: 1) the shortened URLs look ugly and they are not at all descriptive and 2) I need to generate them automatically and maintain all the mappings. Why bother ?

How I did it?

So I needed something which will do a redirect to a predefined URL, measure how many redirects were there (essentially clicks on the link) and look nice. The solution is very simple, if you have not recognized it by now from the picture above.

I opted for a custom redirect engine, which will add tracking information to the destination URL so I can track it in Google Analytics.

Previous URLs were of the form http://www.dif.io/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/.

I've added the humble /daily/? prefix before the URL path so it becomes

http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/

Now /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/ becomes a query string parameter which

the /daily/index.html page uses as its destination. Before doing the redirect

a script adds tracking parameters so that Google Analytics will properly

report this visit. Here is the code:

<html>

<head>

<script type="text/javascript">

var uri = window.location.toString();

var question = uri.indexOf("?");

var param = uri.substring(question + 1, uri.length)

if (question > 0) {

window.location.href = param + '?utm_source=email&utm_medium=email&utm_campaign=Daily_Notification';

}

</script>

</head>

<body></body>

</html>

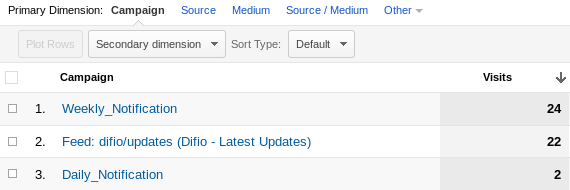

Previously Google Analytics was reporting these visits as direct hits while now it lists them under campaigns like so:

Because all visitors of Difio use JavaScript enabled browsers I combined this approach with another one, to remove query string with JavaScript and present clean URLs to the visitor.

Why JavaScript?

You may be asking why the hell I am using JavaScript and not Apache's wonderful mod_rewrite module? This is because the destination URLs are hosted in Amazon S3 and I'm planning to integrate with Amazon CloudFront. Both of them don't support .htaccess rules nor anything else similar to mod_rewrite.

As always I'd love to hear your thoughts and feedback. Please use the comment form below.

There are comments.