How to create auxiliary build jobs in Travis-CI matrix

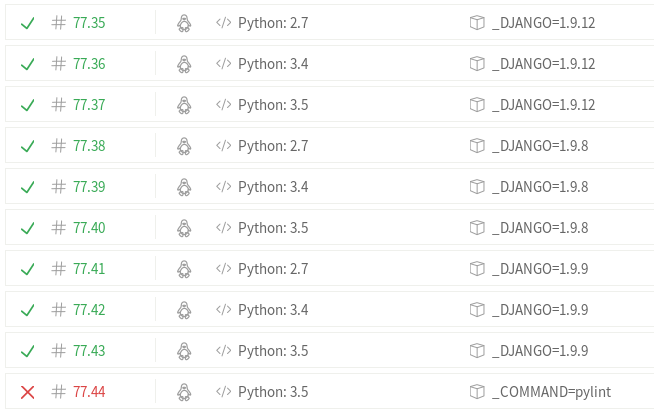

In Travis-CI when you combine the three main configuration options of Runtime (language), Environment and Exclusions/Inclusions you get a build matrix of all possible combinations! For example, for django-chartit the matrix includes 43 build jobs, spread across various Python and Django versions. For reference see Build #75.

For django-chartit I wanted to have an additional build job which would execute pylint. I wanted the job to be independent because currently pylint produces lots of errors and warnings. Having an independent job instead of integrating pylint together with all jobs makes it easier to see if any of the functional tests failed.

Using the inclusion functionality of Travis-CI I was able to define an auxiliary build job. The trick is to provide sane environment defaults for all build jobs (regular and auxiliary ones) so you don't have to expand your environment section! In this case the change looks like this:

diff --git a/.travis.yml b/.travis.yml
index 67f656d..9b669f9 100644
--- a/.travis.yml
+++ b/.travis.yml
@@ -2,6 +2,8 @@ after_success:
 - coveralls
 before_install:
 - pip install coveralls
+- if [ -z "$_COMMAND" ]; then export _COMMAND=coverage; fi
+- if [ -z "$_DJANGO" ]; then export _DJANGO=1.10.4; fi
 env:
 - !!python/unicode '_DJANGO=1.10'
 - !!python/unicode '_DJANGO=1.10.2'
@@ -41,6 +43,10 @@ matrix:
     python: 3.3
   - env: _DJANGO=1.10.4
     python: 3.3
+  include:
+  - env: _COMMAND=pylint
+    python: 3.5
+
 notifications:
   email:
     on_failure: change
@@ -50,4 +56,4 @@ python:
 - 3.3
 - 3.4
 - 3.5
-script: make coverage
+script: make $_COMMAND

For more info take a look at commit b22eda7 and Build #77. Note Job #77.44!
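The effect of the change can be sketched in a few lines of Python. This is only an illustration of the idea, not how Travis-CI is implemented: the version lists below are shortened, hypothetical stand-ins for the real .travis.yml, and `expand_matrix` and `effective_env` are names made up for this example. Only the two defaults are taken from the diff above.

```python
from itertools import product

# Hypothetical, shortened inputs; the real .travis.yml lists many more versions.
PYTHONS = ["3.4", "3.5"]
ENVS = [{"_DJANGO": "1.10"}, {"_DJANGO": "1.10.4"}]
EXCLUDE = [{"python": "3.4", "env": {"_DJANGO": "1.10"}}]
INCLUDE = [{"python": "3.5", "env": {"_COMMAND": "pylint"}}]

# Same defaults as the two `if [ -z ... ]` lines added to before_install.
DEFAULTS = {"_COMMAND": "coverage", "_DJANGO": "1.10.4"}


def expand_matrix(pythons, envs, exclude, include):
    """Cross product of runtimes and environments, minus exclusions, plus inclusions."""
    jobs = [{"python": p, "env": dict(e)} for p, e in product(pythons, envs)]
    jobs = [job for job in jobs if job not in exclude]
    jobs.extend({"python": j["python"], "env": dict(j["env"])} for j in include)
    return jobs


def effective_env(job, defaults=DEFAULTS):
    """Fill in every variable the job did not set itself."""
    env = dict(defaults)
    env.update(job["env"])
    return env


if __name__ == "__main__":
    for job in expand_matrix(PYTHONS, ENVS, EXCLUDE, INCLUDE):
        env = effective_env(job)
        print(job["python"], "Django", env["_DJANGO"], "-> make", env["_COMMAND"])
```

The regular jobs only set `_DJANGO` and fall back to `make coverage`, while the included job only sets `_COMMAND` and falls back to the default Django version, so neither kind of job has to spell out both variables.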

Thanks for reading and happy testing!


Overridden let() causes segfault with RSpec

Last week Anton asked me to take a look at one of his RSpec test suites. He was able to consistently reproduce a segfault which looked like this:

/home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:113: [BUG] vm_call_cfunc - cfp consistency error

ruby 2.3.2p217 (2016-11-15 revision 56796) [x86_64-linux]

-- Control frame information -----------------------------------------------

c:0013 p:---- s:0048 e:000047 CFUNC :map

c:0012 p:0011 s:0045 e:000044 BLOCK /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:113

c:0011 p:0035 s:0043 e:000042 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/configuration.rb:1835

c:0010 p:0011 s:0040 e:000039 BLOCK /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:112

c:0009 p:0018 s:0037 e:000036 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/reporter.rb:77

c:0008 p:0022 s:0033 e:000032 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:111

c:0007 p:0025 s:0028 e:000027 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:87

c:0006 p:0085 s:0023 e:000022 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:71

c:0005 p:0026 s:0016 e:000015 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:45

c:0004 p:0025 s:0012 e:000011 TOP /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/exe/rspec:4 [FINISH]

c:0003 p:---- s:0010 e:000009 CFUNC :load

c:0002 p:0136 s:0006 E:001e10 EVAL /home/atodorov/.rbenv/versions/2.3.2/bin/rspec:22 [FINISH]

c:0001 p:0000 s:0002 E:0000a0 (none) [FINISH]

Googling for vm_call_cfunc - cfp consistency error yields Ruby #10460. Comments on the bug, and particularly this one, point towards the error:

> Ruby is trying to be nice about reporting the error; but in the end, your code is still broken if it overflows stack.

Somewhere in the test suite was a piece of code that was overflowing the stack. It was somewhere along the lines of

describe '#active_client_for_user' do
  context 'matching an existing user' do
    it_behaves_like 'manager authentication' do
      include_examples 'active client for user with existing user'
    end
  end
end

Considering the examples in the bug I started looking for patterns where a variable was defined and later redefined, possibly circling back to the previous definition. Expanding the shared examples by hand transformed the code into

[expanded code listing, lines 1-13, missing from this copy]

Line 5 overrode line 3. Because of lazy evaluation, line 4 was executed first and the call path became 4-5-4-5-4-5 ... NOTE: I think we need a warning about that in RuboCop, see RuboCop #3769. The fix, however, is a no-brainer:

- let(:user) { create(:user, :manager) }
- let!(:api_user_authentication) { create(:user_authentication, user: user) }
+ let(:manager) { create(:user, :manager) }
+ let!(:api_user_authentication) { create(:user_authentication, user: manager) }
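The same kind of loop can be reproduced outside of RSpec. Below is a minimal Python sketch of lazily evaluated, memoized definitions where a later definition overrides an earlier one. `LazyScope` is a toy stand-in made up for this example, not RSpec's implementation, and the shape of the overriding definition is an assumption based on the description above. In Python the loop ends with a RecursionError instead of a crash.

```python
class LazyScope:
    """Toy stand-in for let(): named, lazily evaluated, memoized blocks."""

    def __init__(self):
        self._blocks = {}
        self._cache = {}

    def let(self, name, block):
        # A later definition with the same name silently replaces the earlier one.
        self._blocks[name] = block

    def get(self, name):
        if name not in self._cache:
            self._cache[name] = self._blocks[name](self)
        return self._cache[name]


def broken_scope():
    scope = LazyScope()
    scope.let("user", lambda s: "manager")
    scope.let("api_user_authentication", lambda s: {"user": s.get("user")})
    # Assumed shape of the override: "user" is redefined via the authentication.
    scope.let("user", lambda s: s.get("api_user_authentication")["user"])
    return scope


def fixed_scope():
    scope = LazyScope()
    # The fix: the first definition gets its own name, so nothing is overridden.
    scope.let("manager", lambda s: "manager")
    scope.let("api_user_authentication", lambda s: {"user": s.get("manager")})
    scope.let("user", lambda s: s.get("api_user_authentication")["user"])
    return scope


if __name__ == "__main__":
    try:
        broken_scope().get("api_user_authentication")
    except RecursionError:
        print("broken: the two definitions call each other forever")
    print("fixed:", fixed_scope().get("user"))
```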

Thanks for reading and happy testing.


Highlights from ISTA and GTAC 2016

Another two weeks have passed and I'm blogging about another 2 conferences. This year both Innovations in Software Technologies and Automation and Google Test Automation Conference happened on the same day. I was attending ISTA in Sofia during the day and watching the live stream of GTAC during the evenings. Here are some of the things that resonated with me:

- How can I build my software in order to make this a perfect day for the user?

- People are not the problem which causes bad software to exist. When designing software, focus on what people need, not on what technology is forcing them to do;

- You need to have blind trust in the people you work with because projects always look like they are not going to work until the very end!

- It's good to have a diverse set of characters in the company and not homogenize people;

- Team performance grows over time. Effective teams minimize time waste during bad periods. They (have and) extract value from conflicts!

- One-on-one meetings are usually like status reports, which is bad. Both parties should bring their own issues to the table;

- To grow an effective team, members need to do things together. For example pair programming, writing test scenarios, etc;

- When teams don't take actions after retrospective meetings it is usually a sign of missing foundational trust;

- QA engineers need to be present at every step of the software development life-cycle! This is something I teach my students and it has been confirmed by many friends in the industry;

- Agile is all about data, even when it comes to testing and quality. We need to decompose and measure iteratively;

- Agile is also about really skillful people. One way to boost your skills is to adopt the T-shaped specialist model;

- In agile, iterative work and continuous delivery are king, so QA engineers need to focus even more on visualization (I will also add documentation), refactoring and code reviews;

- Agile teams need to give opportunities to team members for taking risk and taking ownership of their actions in the gray zone (e.g. actions for which it isn't clear who should be doing them).

In the brave new world of micro services end to end testing is no more! We test each level in isolation but keep stable contracts/APIs between levels. That way we can reduce the test burden and still guarantee an acceptable level of risk. This change in software architecture (monolithic vs. micro) leads to change in technologies (one framework/language vs. what's best for the task) which in turn leads to changes in testing approach and testing tools. This ultimately leads to changing people on the team because they now need different skills than when they were hired! This circles back to the T-shaped specialist model and the fact that QA should be integrated in every step of the way! Thanks to Denitsa Evtimova and Lyudmila Labova for this wisdom and the quote pictured above.

Aneta Petkova talked about monitoring of test results, which is a topic very close to my work. Imagine you have your automated test suite but still get failures occasionally. Are these bugs, or did something else break? If they are bugs and you are waiting for them to be fixed, do you execute the tests against the still broken build or wait? If you do, what additional info do you get from these executions vs. how much time do you spend figuring out "oh, that's still not fixed" or "geez, here's another hidden bug in the code"?

Her team has modified their test execution framework (what I'd usually call a test runner or even test lab) to have knowledge about issues in JIRA and skip some tests when no meaningful information can be extracted from them. I have to point out that this approach may require a lot of maintenance in environments where you have failures due to infrastructure issues. This idea connects very nicely with the general idea behind this year's GTAC - don't run tests if you don't need to aka smart test execution!

Boris Prikhodskiy shared a very simple rule. Don't execute tests

- which have a 100% pass rate;

- during the last month;

- and have been executed at least 100 times before that!

This is what Unity does for their numerous topic branches and it reduces test time by 60-70 percent. All of the test suite is still executed against their trunk branch and PR merge queue branches!
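The rule is simple enough to express as a predicate over a test's execution history. The sketch below is my reading of it, not Unity's code: the function name, the 30-day window for "the last month" and the data layout are all assumptions, and so is the decision not to skip tests which have no runs at all inside the window.

```python
from datetime import datetime, timedelta


def can_skip(runs, now, window=timedelta(days=30), min_prior_runs=100):
    """Decide whether a test may be skipped on a topic branch.

    runs is a list of (timestamp, passed) tuples for a single test.
    """
    cutoff = now - window
    recent = [passed for ts, passed in runs if ts >= cutoff]
    prior = [passed for ts, passed in runs if ts < cutoff]
    # Assumption: a test with no runs inside the window is never skipped.
    return bool(recent) and all(recent) and len(prior) >= min_prior_runs


if __name__ == "__main__":
    now = datetime(2016, 12, 1)
    history = [(now - timedelta(days=31 + i), True) for i in range(100)]
    history += [(now - timedelta(days=i), True) for i in range(1, 11)]
    print("stable test:", can_skip(history, now))
    failed = history + [(now - timedelta(days=2), False)]
    print("failed two days ago:", can_skip(failed, now))
```

A single recent failure, or too little history before the window, keeps the test in the run.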

At GTAC there were several presentations about speeding up test execution time. Emanuil Slavov was very practical but the most important thing he said was that a fast test suite is the result of many conscious actions which introduced small improvements over time. His team had assigned themselves the task to iteratively improve their test suite performance and at every step of the way they analyzed the existing bottlenecks and experimented with possible solutions.

The steps in particular are (on a single machine):

- Execute tests in dedicated environment;

- Start with empty database, not used by anything else; This also leads to adjustments in your test suite architecture and DB setup procedures;

- Simulate and stub external dependencies like 3rd party services;

- Move to containers but beware of slow disk I/O;

- Run database in memory not on disk because it is a temporary DB anyway;

- Don't clean test data, just trash the entire DB once you're done;

- Execute tests in parallel which should be the last thing to do!

- Equalize workload between parallel threads for optimal performance;

- Upgrade the hardware (RAM, CPU) aka vertical scaling;

- Add horizontal scaling (probably with a messaging layer);
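Equalizing the workload between parallel workers is the one step in this list which is easy to show in code. The talk did not prescribe an algorithm, so the sketch below swaps in a common greedy approach: sort the tests by their last known duration and always hand the next one to the least loaded worker. The function name and the sample durations are made up for illustration.

```python
import heapq


def balance(durations, workers):
    """Greedy longest-first assignment of tests to the least loaded worker.

    durations maps a test name to its last known run time in seconds.
    Returns one (total_seconds, [test names]) tuple per worker.
    """
    # Heap entries are (load so far, worker index, assigned tests); the unique
    # index breaks ties so the lists are never compared.
    heap = [(0.0, index, []) for index in range(workers)]
    heapq.heapify(heap)
    for name, seconds in sorted(durations.items(), key=lambda item: -item[1]):
        total, index, names = heapq.heappop(heap)
        names.append(name)
        heapq.heappush(heap, (total + seconds, index, names))
    return [(total, names) for total, _, names in sorted(heap, key=lambda w: w[1])]


if __name__ == "__main__":
    timings = {"test_a": 8, "test_b": 7, "test_c": 6, "test_d": 5, "test_e": 4}
    for total, names in balance(timings, workers=2):
        print(total, names)
```

This is not guaranteed to be optimal, but it is cheap to compute and can be re-run with fresh timings after every build.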

John Micco and Atif Memon talked about flaky tests at Google:

- 84% of the transitions from PASS to FAIL are flakes;

- Almost 16% of their 3.5 million tests have some level of flakiness;

- Flaky failures frequently block and delay releases;

- Google spends between 2% and 16% of their CI compute resources re-running flaky tests;

- Flakiness insertion speed is comparable to flakiness removal speed!

- The optimal setting is 2 persons modifying the same source file at the same time. This leads to minimal chance of breaking stuff;

- Fix or delete flaky tests because you don't get meaningful value out of them.

So Google wants to skip a test before it is executed if historical data shows that the test has attributes of flakiness. The research they talk about utilizes tons of data collected from Google's CI environment, which was the most interesting fact for me. Indeed, if we use data to decide which features to build for our customers then why not use data to govern the process of testing? In addition to the video you should read John's post Flaky Tests at Google and How We Mitigate Them.

At the end I'd like to finish with Rahul Gopinath's Code Coverage is a Strong Predictor of Test suite Effectiveness in the Real World. He basically said that code coverage metrics as we know them today are still the best practical indicator of how good a test suite is. He argues that mutation testing is slow and only provides an additional 4% to a well designed test suite. This is absolutely the opposite of what Laura Inozemtseva presented last year in her Coverage is Not Strongly Correlated with Test Suite Effectiveness lightning talk. Rahul also made a point about sample size in the two research papers and I had the impression he's saying Laura didn't do proper academic research.

I'm a heavy contributor to Cosmic Ray, the mutation testing tool for Python and also use mutation testing in my daily job so this is a very interesting topic indeed. I've asked fellow tool authors to have a look at both presentations and share their opinions. I also have an idea about a practical experiment to see if full branch coverage and full mutation coverage will be able to find a known bug in a piece of software I wrote. I will be writing about this experiment next week so stay tuned.

Thanks for reading and happy testing!


Women In Open Source

It's been 2 weeks since OpenFest 2016 and I promised to blog about what happened during the Women in Open Source presentation, which is the only talk I attended.

The presenters were Jona Azizaj, whom I met at FOSDEM earlier this year, Suela Palushi and Kristi Progri, all 3 from Albania. I went to OpenFest specifically to meet them and listen to their presentation.

They started by explaining their background and telling us more about their respective communities, Fedora Women, WoMoz, GNOME Outreach and the Open Labs hackerspace in Tirana. The girls gave some stats on how many women there are in the larger FOSS community and what some newcomers' first impressions could be.

Did you know that in 2002 1.1% of all FOSS participants were female, while in 2013 that was 11%? A 10x increase, but of them only 1.5% are developers.

The presentation was a nice overview of different opportunities to get involved in open source geared towards women. I specifically asked how they first came to join open source and the girls responded. In general they've had a good and welcoming community around them which made it natural to join and thrive.

Now comes the sad part. Instead of welcoming and supporting these girls for standing up to talk about their experiences, the audience did the opposite. In particular Maya Milusheva from Plushie Games made a very passionate claim against the topic of women in tech. It went like this:

- I am a woman

- I am a good developer

- I am a mother

- I am a CEO of a successful IT company

- when I hire I want the best people for my company and they are men

- women simply don't have the required tech skills/level of expertise

- the whole talk about women in open source/diversity is bullshit

- girls need to sit down on their asses and read more, code more, etc.

In terms of success I think I can compare to Maya. I also have a small child, which I regularly take to conferences with me (the badges above). I also have an IT company which generates a comparable amount of income. I also want to hire the best employees for any given project I'm working on. Sometimes that has happened to be a woman, sometimes not. The point here however was not about hiring more women per se. It was about giving opportunities in the communities and letting people grow for themselves.

**Yes, she has a point but there's something WRONG in coming to listen to a presenter just to tell them they are full of shit! It's very arrogant shouting around and arguing a point about hiring when in fact the entire presentation was not about hiring! It is totally unacceptable to have The NY Times writing about the apps you develop and to behave like an asshole at public events at the same time!**

I've been there, the crowd telling me I'm full of shit when I've been presenting about new technologies. I've been there being told that my ideas will not work in this or that way, while in fact the very idea of trying and considering a completely different technological approach was what counted. And finally I've been there years later when the same ideas and technologies have become mainstream and the same crowd was now talking about them!

After Maya there was another person who grabbed the microphone and continued to talk nonsense. Unfortunately he didn't state his name and I don't know the guy in person. What he said was along the lines of: little boys play with robots, little girls play with dolls. They like it this way and that's why girls don't get involved in the technical field. Also, if a girl plays with robots she will be called a tomboy and people will generally have a negative attitude towards her.

It's absolutely clear this guy has no idea what he's talking about. Everyone who has small children around them will agree that they are born with equal mental capacity. It is up to the environment, parents, teachers, etc. to shape this capacity in a positive way. I've seen children who taught themselves to speak English from YouTube and children the same age barely speaking Bulgarian. I've seen children who are curious about the world and how it works and children who can't wipe their own noses. It's not because they like it that way, it's because of their parents and the environment they live in.

Finally I'd like to respond to this guy (I was specifically motioned at the conference not to respond) with this:

I have a 5 year old girl. She likes robots as much as she likes dolls. She works with Linux and is lucky enough to have one of the two OLPC laptops in Bulgaria. She plays SuperTux and has already found a bug in it (I've reported it). She's been to several Linux and IT conferences as you can see from the picture above. She likes being taken to hackathons and learning about inspiring stuff that students are doing.

Are you telling me that because we have the wrong idea that women can't be good at technology, she can't become a successful engineer? Are you telling me to basically scratch the next 10 years of her life and tell her she can't become what she wants? Because if you do I say FUCK OFF!

Even I as a parent don't have the power to tell my child what they can do or not do, what they can accomplish or not. My job is to show them the various possibilities that exist and guide and support them along the way they want to go! This is what we as a society also need to do for everyone else!

To wrap up I will tell you about a psychological experiment we devised with Jona and Suela. I proposed to find a male and a female student in Tirana and have them pose online as somebody from the opposite sex, fake accounts and all. They proposed having the same person act as both male and female for the purposes of evening out the tech skills difference. The goal is to see how the tech community reacts to their contributions and try to measure how much their gender being known affects their performance! I hope the girls will find a way to perform this experiment together with the university Psychology department and share the results with us.

Btw I will be visiting OSCAL'17 to check up on that so see you in Albania!


Updated MacBook Air Drivers for RHEL 7.3

Today I rebuilt the wifi and backlight drivers for MacBook Air against the upcoming Red Hat Enterprise Linux 7.3 kernel. wl-kmod again needed a small patch before it could be compiled. mba6x_bl has been updated to the latest upstream and compiled without errors. The current RPM versions are:

akmod-wl-6.30.223.248-9.el7.x86_64.rpm

kmod-wl-3.10.0-513.el7.x86_64-6.30.223.248-9.el7.x86_64.rpm

kmod-wl-6.30.223.248-9.el7.x86_64.rpm

wl-kmod-debuginfo-6.30.223.248-9.el7.x86_64.rpm

kmod-mba6x_bl-20161018.d05c125-1.el7.x86_64.rpm

kmod-mba6x_bl-3.10.0-513.el7.x86_64-20161018.d05c125-1.el7.x86_64.rpm

mba6x_bl-common-20161018.d05c125-1.el7.x86_64.rpm

and they seem to work fine for me. Let me know if you have any issues after RHEL 7.3 comes out officially.

PS: The bcwc_pcie driver for the video camera appears to be ready for general use, despite some issues. No promises here but I'll try to compile that one as well and provide it in my MacBook Air RHEL 7 repository.

PS2: Sometime after Sept 14th I have probably upgraded my system and now it can't detect external displays if the display is not plugged in during boot. I'm seeing the following:

# cat /sys/class/drm/card0-DP-1/enabled

disabled

which appears to be the same issue reported on the ArchLinux forum. I'm in a hurry to resolve this and any help is welcome.


PhantomJS 2.1.1 in Ubuntu different from upstream

For some time now I've been hitting PhantomJS #12506 with the latest 2.1.1 version. The problem is supposedly fixed in 2.1.0 but this is not always the case. If you use a .deb package from the latest Ubuntu then the problem still exists, see Ubuntu #1605628.

It turns out the root cause of this, and probably other problems, is the way PhantomJS packages are built. Ubuntu builds the package against their stock Qt5WebKit libraries which leads to

$ ldd usr/lib/phantomjs/phantomjs | grep -i qt

libQt5WebKitWidgets.so.5 => /lib64/libQt5WebKitWidgets.so.5 (0x00007f5173ebf000)

libQt5PrintSupport.so.5 => /lib64/libQt5PrintSupport.so.5 (0x00007f5173e4d000)

libQt5Widgets.so.5 => /lib64/libQt5Widgets.so.5 (0x00007f51737b6000)

libQt5WebKit.so.5 => /lib64/libQt5WebKit.so.5 (0x00007f5171342000)

libQt5Gui.so.5 => /lib64/libQt5Gui.so.5 (0x00007f5170df8000)

libQt5Network.so.5 => /lib64/libQt5Network.so.5 (0x00007f5170c9a000)

libQt5Core.so.5 => /lib64/libQt5Core.so.5 (0x00007f517080d000)

libQt5Sensors.so.5 => /lib64/libQt5Sensors.so.5 (0x00007f516b218000)

libQt5Positioning.so.5 => /lib64/libQt5Positioning.so.5 (0x00007f516b1d7000)

libQt5OpenGL.so.5 => /lib64/libQt5OpenGL.so.5 (0x00007f516b17c000)

libQt5Sql.so.5 => /lib64/libQt5Sql.so.5 (0x00007f516b136000)

libQt5Quick.so.5 => /lib64/libQt5Quick.so.5 (0x00007f5169dad000)

libQt5Qml.so.5 => /lib64/libQt5Qml.so.5 (0x00007f5169999000)

libQt5WebChannel.so.5 => /lib64/libQt5WebChannel.so.5 (0x00007f5169978000)

While building from the upstream sources gives

$ ldd /tmp/bin/phantomjs | grep -i qt

If you take a closer look at PhantomJS's sources you will notice there are 3 git submodules in their repository - 3rdparty, qtbase and qtwebkit. Then in their build.py you can clearly see that this local fork of QtWebKit is built first, then the phantomjs binary is built against it.

The problem is that these custom forks include additional patches to make WebKit suitable for Phantom's needs. And these patches are not available in the stock WebKit library that Ubuntu uses.

> Yes, that's correct. We need additional functionality that vanilla QtWebKit doesn't have. That's why we use custom version.
>
> Vitaly Slobodin, PhantomJS

At the moment of this writing Vitaly's qtwebkit fork is 28 commits ahead and 39 commits behind qt:dev. I'm surprised Ubuntu's PhantomJS even works.

The solution IMO is to bundle the additional sources into the src.deb package and use the same building procedure as upstream.
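The ldd comparison above is easy to script if you want to check which kind of build you have. Here is a small sketch which only parses the text that ldd prints. The function name is made up for this example and how you obtain the output is up to you, e.g. `subprocess.run(["ldd", path], capture_output=True, text=True).stdout`.

```python
import re

# Matches lines such as:
#   libQt5WebKit.so.5 => /lib64/libQt5WebKit.so.5 (0x00007f5171342000)
LDD_LINE = re.compile(r"^\s*(\S+)\s+=>\s+(\S+)")


def shared_qt_libs(ldd_output):
    """Return {library: path} for every Qt library the binary links dynamically."""
    libs = {}
    for line in ldd_output.splitlines():
        match = LDD_LINE.match(line)
        if match and "qt" in match.group(1).lower():
            libs[match.group(1)] = match.group(2)
    return libs
```

An empty result corresponds to the upstream build shown above, where the grep finds nothing. A non-empty one means the binary depends on the distribution's shared Qt libraries, as the Ubuntu package does.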


Testing the 8-bit computer Puldin

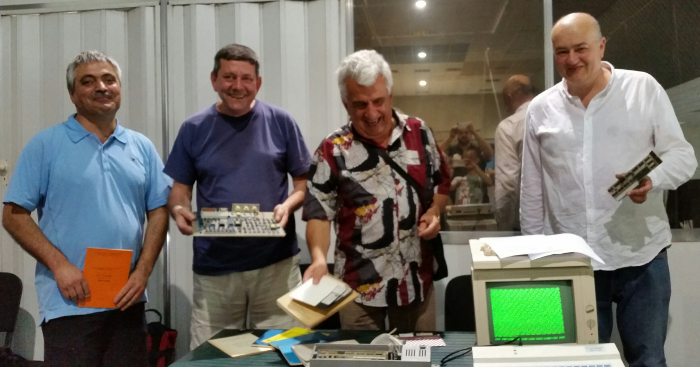

Last weekend I visited TuxCon in Plovdiv and was very happy to meet and talk to some of the creators of the Puldin computer! On the picture above are (left to right) Dimitar Georgiev - wrote the text editor, Ivo Nenov - BIOS, DOS and core OS developer, Nedyalko Todorov - director of the vendor company and Orlin Shopov - BIOS, DOS, compiler and core OS developer.

Puldin is 100% pure Bulgarian development, while the “Pravetz” brand was a copy of Apple ][ (Pravetz 8A, 8C, 8M), Oric (Pravetz 8D) and IBM-PC (Pravetz 16). The Puldin computers were built from scratch, both hardware and software, and were produced in Plovdiv in the late 80s and early 90s. 50000 pieces were made, at least 35000 of them were exported to Russia and paid for. A typical configuration in a Russian class room consisted of several Puldin computers and a single Pravetz 16. According to Russian sources the last usage of these computers was in 2003, serving as Linux terminals and being maintained without any support from the vendor (b/c it ceased to exist).

One of the main objectives of Puldin was full compatibility with IBM-PC. At the time IBM had been releasing extensive documentation about how their software and hardware works, which was used by Puldin's creators as their software specs. Despite the IBM-PC using a faster CPU the Puldin 601 had a comparable performance due to aggressive software and compiler optimizations.

Testing-wise the guys used to compare Puldin's functionality with that of IBM-PC. It was a hard requirement to have full compatibility on the file storage layer, which means floppy disks written on Puldin had to be readable on IBM-PC and vice versa. Same goes for programs compiled on Puldin - they had to execute on IBM-PC.

Everything of course was tested manually and on top of that all the software had to be burned to ROM before you could do anything with it. As you can imagine the testing process was quite slow and painful compared to today's standards. I asked the guys if they'd happened to find a bug in IBM-PC which wasn't present in their code but they couldn't remember.

What was interesting for me on the hardware side was the fact that you can plug the computer directly into a cheap TV set and that it was one of the first computers which could operate on 12V DC, powered directly from a car battery.

There was also a fully functional Pravetz 8 with an additional VGA port to connect it to the LCD monitor as well as an SD card reader wired to function as a floppy disk reader (the small black dot behind the joystick).

For those who missed it (and understand Bulgarian) I have a video recording on YouTube. For more info about the history and the hardware please check out the Olimex post on Puldin (in English). For more info on Puldin and Pravetz please visit pyldin.info (in Russian) and pravetz8.com (in Bulgarian) using Google translate if need be.


Testing Data Structures in Pykickstart

When designing automated test cases we often think either about increasing coverage or in terms of testing more use-cases aka behavior scenarios. Both are valid approaches to improve testing and both of them seem to focus around execution control flow (or business logic). However program behavior is sometimes controlled via the contents of its data structures and this is something which is rarely tested.

In this comment Brian C. Lane and Vratislav Podzimek from Red Hat are talking about a data structure which maps Fedora versions to particular implementations of kickstart commands. For example:

class RHEL7Handler(BaseHandler):
    version = RHEL7

    commandMap = {
        "auth": commands.authconfig.FC3_Authconfig,
        "authconfig": commands.authconfig.FC3_Authconfig,
        "autopart": commands.autopart.F20_AutoPart,
        "autostep": commands.autostep.FC3_AutoStep,
        "bootloader": commands.bootloader.RHEL7_Bootloader,
    }

In their particular case the Fedora 21 logvol implementation introduced the --profile parameter but in Fedora 22 and Fedora 23 the logvol command mapped to the Fedora 20 implementation and the --profile parameter wasn't available. This is an unexpected change in program behavior even though the logvol.py and handlers/f22.py files have 99% and 100% code coverage.

This morning I did some coding and created an automated test for this problem. The test iterates over all command maps. For each command in the map (that is data structure member) we load the module which provides all possible implementations for that command. In the loaded module we search for implementations which have newer versions than what is in the map, but not newer than the current Fedora version under test. With a little bit of pruning the current list of offenses is

ERROR: In `handlers/devel.py` the "fcoe" command maps to "F13_Fcoe" while in `pykickstart.commands.fcoe` there is newer implementation: "RHEL7_Fcoe".

ERROR: In `handlers/devel.py` "FcoeData" maps to "F13_FcoeData" while in `pykickstart.commands.fcoe` there is newer implementation: "RHEL7_FcoeData".

ERROR: In `handlers/devel.py` the "user" command maps to "F19_User" while in `pykickstart.commands.user` there is newer implementation: "F24_User".

ERROR: In `handlers/f24.py` the "user" command maps to "F19_User" while in `pykickstart.commands.user` there is newer implementation: "F24_User".

ERROR: In `handlers/f22.py` the "logvol" command maps to "F20_LogVol" while in `pykickstart.commands.logvol` there is newer implementation: "F21_LogVol".

ERROR: In `handlers/f22.py` "LogVolData" maps to "F20_LogVolData" while in `pykickstart.commands.logvol` there is newer implementation: "F21_LogVolData".

ERROR: In `handlers/f18.py` the "network" command maps to "F16_Network" while in `pykickstart.commands.network` there is newer implementation: "F18_Network".

The first two are possibly false positives or related to the naming conventions used in this module. However the rest appear to be legitimate problems. The user command introduced the --groups parameter in Fedora 24 (devel is Fedora 25 currently) but the parser will fail to recognize this parameter. The logvol problem is recognized as well since it was never patched. And the Fedora 18 network command implements a new property called hostname which has probably never been available to be used.
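The core of such a check fits in a few lines. The sketch below is a simplified, self-contained illustration and not the actual test from the pull request: it works on plain strings instead of loading pykickstart modules, and the `VERSIONS` list, the helper names and the sample data are assumptions made up for the example.

```python
# Hypothetical release order; the real project has many more versions.
VERSIONS = ["FC3", "F19", "F20", "F21", "F22", "F23", "F24"]


def version_index(impl_name):
    """'F21_LogVol' -> position of 'F21' in VERSIONS."""
    return VERSIONS.index(impl_name.split("_", 1)[0])


def find_stale_mappings(handler_version, command_map, available):
    """Report commands mapped to an older implementation than the newest
    one that is not newer than the handler itself."""
    limit = VERSIONS.index(handler_version)
    offenses = []
    for command, mapped in sorted(command_map.items()):
        newer = [name for name in available.get(command, [])
                 if version_index(mapped) < version_index(name) <= limit]
        if newer:
            offenses.append((command, mapped, max(newer, key=version_index)))
    return offenses


if __name__ == "__main__":
    f22_map = {"logvol": "F20_LogVol", "user": "F19_User"}
    implementations = {
        "logvol": ["FC3_LogVol", "F20_LogVol", "F21_LogVol"],
        "user": ["F19_User", "F24_User"],
    }
    for command, mapped, newest in find_stale_mappings("F22", f22_map, implementations):
        print('ERROR: "%s" maps to "%s" but "%s" exists' % (command, mapped, newest))
```

With this sample data only logvol is reported for the F22 handler. F24_User is ignored there because it is newer than the version under test.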

You can follow my current work in PR #91 and happy testing your data structures.


Don't Upgrade Galaxy S5 to Android 6.0

Samsung is shipping out buggy software like a boss, no doubt about it. I've written a bit about their bugs previously. However I didn't expect them to release Android 6.0.1 and render my Galaxy S5 completely useless with respect to the feature I use the most.

Tell me the weather for Brussels

So on Monday I let Android upgrade to 6.0.1, only to be completely surprised that the lockscreen shows the weather report for Brussels, while I'm based in Sofia. I've checked AccuWeather (I did go to Brussels earlier this year) but it displayed only Sofia and Thessaloniki. To get rid of this widget go to Settings -> Lockscreen -> Additional information and turn it off!

I think this weather report comes from GPS/Location based data, which I have turned off by default but did use a while back. After turning the widget off and back on it didn't appear on the lockscreen. I suspect they fall back to showing the last known good value when data is missing instead of handling the error properly.

Apps are gone

Some of my installed apps are missing now. So far I've noticed that the Gallery and S Health icons have disappeared from my homescreen. I think S Health came from Samsung's app store but still they shouldn't have removed it silently. Now I wonder what happened to my data.

I don't see why Gallery was removed though. The only way to view pictures is to use the camera app preview functionality which is kind of gross.

Grayscale in powersafe mode is gone

The killer feature on these higher end Galaxy devices is the Powersafe mode and Ultra Powersafe mode. I use them a lot and by default have my phone in Powersafe mode with grayscale colors enabled. It is easier on the eyes and also saves your battery.

NOTE: grayscale colors don't affect some displays but these devices use AMOLED screens which need different amounts of power to display different colors. More black means less power required!

After the upgrade grayscale is no more. There's not even an on/off switch.

I've managed to find a workaround though. First you need to enable developer mode by tapping 7 times on About device -> Build number. Then go to Settings -> Developer options, look for the Hardware Accelerated Rendering section and select Simulate Color Space -> Monochromacy! This is a bit of an ugly hack and doesn't have the convenience of turning colors on/off by tapping the quick Powersafe mode button at the top of the screen!

It looks like Samsung either didn't think this upgrade through or didn't test it well enough. In my line of work (installation and upgrade testing) I've rarely seen such a big blunder. Thanks for reading and happy testing!


How To Hire Software Testers, Pt. 3

In previous posts (links below) I have described my process of interviewing QA candidates. Today I'm quoting an excerpt from the book Mission: My IT career (Bulgarian only) by Ivaylo Hristov, one of Komfo's co-founders.

He writes

Probably the most important personal trait of a QA engineer is to be able to think outside given boundaries and prejudices (about software that is). When necessary, to be non-conventional and apply different approaches to the problems being solved. This will help them find defects which nobody else will notice.

Most often errors/mistakes in software development are made due to wrong expectations or wrong assumptions. Very often this happens because developers hope their software will be used in one particular way (as it was designed to) or that a particular set of data will be returned.

Thus the skill to think outside the box is the most important skill we (as employers) are looking to find in a QA candidate. At job interviews you can expect to be given tasks and questions which examine those skills.

How would you test a pen?

This is Ivaylo's favorite question for QA candidates. He's looking for attention to details and knowing when to stop testing. Some of the possible answers related to core functionality are

- Does the pen write in the correct color

- Does the color fade over time

- Does the pen operate normally at various temperatures? What temperature intervals would you choose for testing

- Does the pen operate normally at various atmospheric pressures

- When writing, does the pen leave excessive ink

- When writing, do you get a continuous line or not

- What pressure does the user need to apply in order to write a continuous line

- What surfaces can the pen write on? What surfaces would you test

- Are you able to write on a piece of paper if there is something soft underneath

- What is the maximum inclination angle at which the pen is able to write without problems

- Does the ink dry fast

- If we spill different liquids onto a sheet of paper, on which we had written something, does the ink stay intact or smear

- Can you use pencil rubber to erase the ink? What else would you test

- How long can you write before you run out of ink

- How thick is the ink line

Then Ivaylo gives a few more non-obvious answers

- Verify that all labels on the pen/ink cartridge are correctly spelled and how durable they are (try to erase them)

- Strength test - what is the maximum height you can drop the pen from without breaking it

- Verify that dimensions are correct

- Test if the pen keeps writing after not being used for some time (how long)

- Testing individual pen components under different temperature and atmospheric conditions

- Verify that materials used to make the pen are safe, e.g. when you put the pen in your mouth

When should you stop? According to the book there can be between 50 and 100 test cases for a single pen, maybe more. It's not a good sign if you stop at the first 3!

If you want to know what skills are revealed via these questions please read my other posts on the topic:

Thanks for reading and happy testing!


DEVit Conf 2016

It's been another busy week after DEVit conf took place in Thessaloniki. Here are my impressions.

Pre-conference

TechMinistry is Thessaloniki's hacker space which is hosted at a central location, near major shopping streets. I've attended an Open Source Wednesday meeting. From the event description I thought that there was going to be a discussion about getting involved with Firefox. However that was not the case. Once people started coming in they formed organic groups and started discussing various topics on their own.

I was also shown their 3D printer which IMO is the most precise of the 3D printers I've seen so far. Imagine what it would be like to click Print, sometime in the future, and have your online orders appear on your desk overnight. That would be quite cool!

I've met with Christos Bacharakis, a Mozilla representative for Greece, who gave me some goodies for my students at HackBulgaria!

On Thursday I spent the day merging pull requests for MrSenko/pelican-octopress-theme and attended the DEVit Speakers dinner at Massalia. Food and drinks were very good and I even found a new recipe for mushrooms with ouzo, of which I think I had a bit too many :).

I was also told that "a full stack developer is a developer who can introduce a bug to every layer of the software stack". I can't agree more!

DEVit

The conference day started with a huge delay due to long queues for registration. The first talk I attended, and the best one IMO, was Need It Robust? Make It Fragile! by Yegor Bugayenko (watch the video). There he talked about two different approaches to writing software: fail safe vs. fail fast.

He argues that when software is designed to fail fast bugs are discovered earlier in the development cycle/software lifetime and thus are easier to fix, making the whole system more robust and more stable. On the other hand when software is designed to hide failures and tries to recover auto-magically the same problems remain hidden for longer and when they are finally discovered they are harder to fix. This is mostly due to the fact that the original error condition is hidden and manifested in a different way which makes it harder to debug.

Yegor gave several examples, all of which are valid code, which he considers bad practice. For example imagine we have a function that accepts a filename as parameter:

    import os

    def read_file_fail_safe(fname):
        if not os.path.exists(fname):
            return -1
        # read the file, do something else
        ...
        return bytes_read

    def read_file_fail_fast(fname):
        if not os.path.exists(fname):
            raise Exception('File does not exist')
        # read the file, do something else
        ...
        return bytes_read

In the first example read_file_fail_safe returns -1 on error. The trouble is that whoever is calling this method may not check for errors, thus corrupting the flow of the program further down the line. You may also want to collect metrics and update your database with the number of bytes processed - an unchecked -1 will totally skew your metrics. C programmers out there will quickly remember at least one case when they didn't check the return code for errors!

The second example read_file_fail_fast will raise an exception the moment it encounters a problem. It's not its fault that the file doesn't exist and there's nothing it can do about it, nor is it its job to do anything about it. The exception will surface back to the caller, who is notified about the problem and can take appropriate actions to resolve it.
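To make the difference concrete, here is a minimal sketch of a caller which sums up the bytes processed without checking for errors. The two reader functions follow the snippet above (I've used the more specific FileNotFoundError instead of a bare Exception); the total_bytes helper and the file names are made up for illustration.

```python
import os
import tempfile


def read_file_fail_safe(fname):
    """Return the number of bytes read, or -1 if the file is missing."""
    if not os.path.exists(fname):
        return -1
    with open(fname, "rb") as f:
        return len(f.read())


def read_file_fail_fast(fname):
    """Return the number of bytes read, raise if the file is missing."""
    if not os.path.exists(fname):
        raise FileNotFoundError(fname)
    with open(fname, "rb") as f:
        return len(f.read())


def total_bytes(reader, fnames):
    """Hypothetical metrics helper which does not check for errors."""
    return sum(reader(name) for name in fnames)


with tempfile.TemporaryDirectory() as tmp:
    existing = os.path.join(tmp, "data.bin")
    with open(existing, "wb") as f:
        f.write(b"x" * 10)
    missing = os.path.join(tmp, "missing.bin")

    # fail safe: the error code is silently folded into the metric
    skewed_total = total_bytes(read_file_fail_safe, [existing, missing])

    # fail fast: the caller finds out immediately
    try:
        total_bytes(read_file_fail_fast, [existing, missing])
        fail_fast_raised = False
    except FileNotFoundError:
        fail_fast_raised = True
```

With the fail safe reader the total quietly ends up one byte short, while the fail fast reader stops the computation at the missing file.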

Yegor was also unhappy that many books teach fail safe and even IDEs (for Java) generate fail safe boiler-plate code (need to check this)! Indeed I was the one who asked the first question: are there any tools to detect fail safe code patterns? It turns out there aren't (for the majority of cases that is). If you happen to know such a tool please post a link in the comments below.
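I don't know of a ready-made tool, but for Python a crude check is not hard to imagine. The sketch below is only a heuristic of my own, not something from the talk: it uses the standard ast module to flag `return -1` and explicit `return None` statements, and it cannot tell an error code from a legitimate value. The function name and the sample source are made up.

```python
import ast


def find_sentinel_returns(source):
    """Return line numbers of `return -1` and `return None` statements.

    Heuristic only: it cannot tell an error code from a legitimate value.
    """
    suspicious = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Return) or node.value is None:
            continue  # not a return, or a bare `return`
        value = node.value
        # -1 is parsed as a unary minus applied to the constant 1
        is_minus_one = (
            isinstance(value, ast.UnaryOp)
            and isinstance(value.op, ast.USub)
            and isinstance(value.operand, ast.Constant)
            and value.operand.value == 1
        )
        is_none = isinstance(value, ast.Constant) and value.value is None
        if is_minus_one or is_none:
            suspicious.append(node.lineno)
    return sorted(suspicious)


SAMPLE = '''
def read_file(fname):
    if not fname:
        return -1
    return len(fname)
'''

found = find_sentinel_returns(SAMPLE)
```

A real detector would need to know much more about the code base, e.g. which return values callers actually check.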

I was a bit disappointed by the rest of the talks. They were all high-level overviews IMO and didn't go deep technically. Last year was better. I also wanted to attend the GitHub Patchwork workshop but judging by the agenda it was aimed at users who are just starting with git and GitHub (which I'm not).

The closing session of the day was "Real time front-end alchemy, or: capturing, playing, altering and encoding video and audio streams, without servers or plugins!" by Soledad Penades from Mozilla. There she gave a demo about the latest and greatest in terms of audio and video capturing, recording and mixing natively in the browser. This is definitely very cool for apps in the audio/video space but I can also imagine an application for us software testers.

Depending on computational and memory requirements you should be able to record everything the user does in their browser (while on your website) and send it back home when they want to report an error or contact support. Definitely better than screenshots and having to go back and forth until the exact steps to reproduce are established.


Mismatch in Pyparted Interfaces

Last week my co-worker Marek Hruscak, from Red Hat, found an interesting case of mismatch between the two interfaces provided by pyparted. In this article I'm going to give an example, using simplified code and explain what is happening. From pyparted's documentation we learn the following

pyparted is a set of native Python bindings for libparted. libparted is the library portion of the GNU parted project. With pyparted, you can write applications that interact with disk partition tables and filesystems.

The Python bindings are implemented in two layers. Since libparted itself is written in C without any real implementation of objects, a simple 1:1 mapping of externally accessible libparted functions was written. This mapping is provided in the _ped Python module. You can use that module if you want to, but it's really just meant for the larger parted module.

- _ped: libparted Python bindings, direct 1:1 function mapping

- parted: Native Python code building on _ped, complete with classes, exceptions, and advanced functionality.

The two interfaces are the _ped and parted modules. As a user I expect them to behave exactly the same but they don't. For example some partition properties are read-only in libparted and _ped but not in parted. This is the mismatch I'm talking about.

Consider the following tests (also available on GitHub)

diff --git a/tests/baseclass.py b/tests/baseclass.py

index 4f48b87..30ffc11 100644

--- a/tests/baseclass.py

+++ b/tests/baseclass.py

@@ -168,6 +168,12 @@ class RequiresPartition(RequiresDisk):

self._part = _ped.Partition(disk=self._disk, type=_ped.PARTITION_NORMAL,

self._part = _ped.Partition(disk=self._disk, type=_ped.PARTITION_NORMAL,

start=0, end=100, fs_type=_ped.file_system_type_get("ext2"))

+ geom = parted.Geometry(self.device, start=100, length=100)

+ fs = parted.FileSystem(type='ext2', geometry=geom)

+ self.part = parted.Partition(disk=self.disk, type=parted.PARTITION_NORMAL,

+ geometry=geom, fs=fs)

+

+

# Base class for any test case that requires a hash table of all

# _ped.DiskType objects available

class RequiresDiskTypes(unittest.TestCase):

diff --git a/tests/test__ped_partition.py b/tests/test__ped_partition.py

index 7ef049a..26449b4 100755

--- a/tests/test__ped_partition.py

+++ b/tests/test__ped_partition.py

@@ -62,8 +62,10 @@ class PartitionGetSetTestCase(RequiresPartition):

self.assertRaises(exn, setattr, self._part, "num", 1)

self.assertRaises(exn, setattr, self._part, "fs_type",

_ped.file_system_type_get("fat32"))

- self.assertRaises(exn, setattr, self._part, "geom",

- _ped.Geometry(self._device, 10, 20))

+ with self.assertRaises((AttributeError, TypeError)):

+# setattr(self._part, "geom", _ped.Geometry(self._device, 10, 20))

+ self._part.geom = _ped.Geometry(self._device, 10, 20)

+

self.assertRaises(exn, setattr, self._part, "disk", self._disk)

# Check that values have the right type.

diff --git a/tests/test_parted_partition.py b/tests/test_parted_partition.py

index 0a406a0..8d8d0fd 100755

--- a/tests/test_parted_partition.py

+++ b/tests/test_parted_partition.py

@@ -23,7 +23,7 @@

import parted

import unittest

-from tests.baseclass import RequiresDisk

+from tests.baseclass import RequiresDisk, RequiresPartition

# One class per method, multiple tests per class. For these simple methods,

# that seems like good organization. More complicated methods may require

@@ -34,11 +34,11 @@ class PartitionNewTestCase(unittest.TestCase):

# TODO

self.fail("Unimplemented test case.")

-@unittest.skip("Unimplemented test case.")

-class PartitionGetSetTestCase(unittest.TestCase):

+class PartitionGetSetTestCase(RequiresPartition):

def runTest(self):

- # TODO

- self.fail("Unimplemented test case.")

+ with self.assertRaises((AttributeError, TypeError)):

+ #setattr(self.part, "geometry", parted.Geometry(self.device, start=10, length=20))

+ self.part.geometry = parted.Geometry(self.device, start=10, length=20)

@unittest.skip("Unimplemented test case.")

class PartitionGetFlagTestCase(unittest.TestCase):

The test in test__ped_partition.py works without problems; I've modified it for visual reference only. It was also the inspiration behind the test in test_parted_partition.py. However the second test fails with

======================================================================

FAIL: runTest (tests.test_parted_partition.PartitionGetSetTestCase)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/tmp/pyparted/tests/test_parted_partition.py", line 41, in runTest

self.part.geometry = parted.Geometry(self.device, start=10, length=20)

AssertionError: (<type 'exceptions.AttributeError'>, <type 'exceptions.TypeError'>) not raised

----------------------------------------------------------------------

Now it's clear that something isn't quite the same between the two interfaces.

If we look at src/parted/partition.py we see the following snippet

    fileSystem = property(lambda s: s._fileSystem, lambda s, v: setattr(s, "_fileSystem", v))
    geometry = property(lambda s: s._geometry, lambda s, v: setattr(s, "_geometry", v))
    system = property(lambda s: s.__writeOnly("system"), lambda s, v: s.__partition.set_system(v))
    type = property(lambda s: s.__partition.type, lambda s, v: setattr(s.__partition, "type", v))

The geometry property is indeed read-write but the system property is write-only. git blame leads us to the interesting commit 2fc0ee2b, which changes definitions for quite a few properties and removes the _readOnly method which raises an exception. Even more interesting is the fact that the Partition.geometry property hasn't been changed. If you look closer you will notice that the deleted definition and the new one are exactly the same. Looks like the problem existed even before this change.

Digging down even further we find commit 7599aa1 which is the very first implementation of the parted module. There you can see the _readOnly method and some properties like path and disk correctly marked as such but geometry isn't.
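The mechanics are easy to reproduce outside of pyparted. The sketch below uses a toy Partition class of my own (it is NOT the real parted.Partition, and the values are made up) to show that a property defined with only a getter raises AttributeError on assignment, while one defined with a getter and a setter silently accepts the new value.

```python
import unittest


class Partition:
    """Toy stand-in, NOT the real parted.Partition class."""

    def __init__(self, geometry, path):
        self._geometry = geometry
        self._path = path

    # getter and setter: assignment silently succeeds
    geometry = property(lambda s: s._geometry,
                        lambda s, v: setattr(s, "_geometry", v))
    # getter only: assignment raises AttributeError
    path = property(lambda s: s._path)


class PartitionReadOnlyTestCase(unittest.TestCase):
    def setUp(self):
        self.part = Partition(geometry=(0, 100), path="/dev/sda1")

    def test_path_is_read_only(self):
        with self.assertRaises(AttributeError):
            self.part.path = "/dev/sdb1"

    def test_geometry_is_writable(self):
        # documents the mismatch: no exception is raised here
        self.part.geometry = (10, 20)
        self.assertEqual(self.part.geometry, (10, 20))


suite = unittest.defaultTestLoader.loadTestsFromTestCase(PartitionReadOnlyTestCase)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

A test like test_path_is_read_only, written for every property that is supposed to be read-only, is what would have caught the geometry case early.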

Shortly after this commit the first test was added (4b9de0e) and a bit later the second, empty test class, was added (c85a5e6). This only goes to show that every piece of software needs appropriate QA coverage, which pyparted was kind of lacking (and I'm trying to change that).

The reason this bug went unnoticed for so long is the limited exposure of pyparted. To my knowledge anaconda, the Fedora installer, is its biggest (if not single) consumer and maybe it uses only the _ped interface (I didn't check) or it doesn't try to do silly things like setting a value to a read-only property.

**The lesson from this story is to test all of your interfaces and also make sure they are behaving in exactly the same manner!**

Thanks for reading and happy testing!


How To Hire Software Testers, Pt. 2

In my previous post I have described the process I follow when interviewing candidates for a QA position. The first question is designed to expose the applicant's way of thinking. My second question is designed to examine their technical understanding and to a lesser extent their way of thinking.

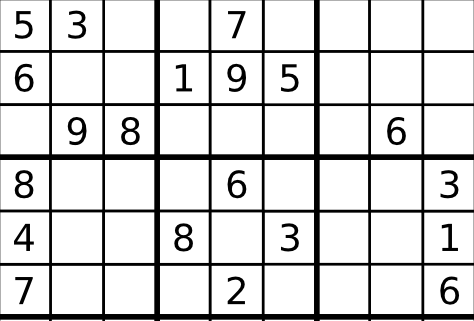

How do You Test a Sudoku Solving Function

You have an implementation of a sudoku solver function with the following pseudocode:

func Sudoku(Array[2]) {

...

return Array[2]

}

- The function solves a sudoku puzzle;

- Input parameter is a two-dimensional array with the known numbers (from 1 to 9) in the Sudoku grid;

- The output is a two-dimensional array with the numbers from the solved puzzle.

You have 10 minutes to write down a list of all test cases you can think of!

Behind The Scenes

One set of possible tests is to examine the input and figure out if the function has been passed valid data. In the real world programs interact with each other, they are not alone. Sometimes it happens that a valid output from one program isn't a valid input for the next one. Also we have malicious users who will try to break the program.

If a person manages to test for this case then I know they have a bit more clue about how software is used in the real world. This also touches a bit on white-box testing, where the tester has full info about the software under test. In this example the implementation is intentionally left blank.

OTOH I've seen answers where the applicant blindly assumes that the input is 1-9, because the spec says so, and excludes the entire input testing from their scope. I classify this answer as immediate failure, because a tester should never assume anything and test to verify their initial conditions are indeed as stated in the documentation.
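As an illustration of what input testing can look like, here is a minimal sketch. The validate_grid function is hypothetical (the question doesn't give an implementation) and it assumes unknown cells are passed as None and that floats and strings are rejected, which a real implementation may decide differently. The interesting part is the choice of test data: a handful of boundary values and wrong shapes.

```python
def validate_grid(grid):
    """Hypothetical input check: 9x9 list of lists, cells are None or ints 1..9."""
    if not isinstance(grid, list) or len(grid) != 9:
        raise ValueError("grid must have 9 rows")
    for row in grid:
        if not isinstance(row, list) or len(row) != 9:
            raise ValueError("every row must have 9 cells")
        for cell in row:
            if cell is None:
                continue  # unknown number
            # bool is a subclass of int, reject it explicitly
            if isinstance(cell, bool) or not isinstance(cell, int):
                raise ValueError("cell must be an integer or None")
            if not 1 <= cell <= 9:
                raise ValueError("cell out of range 1..9")
    return True


def grid_with(value):
    """Empty grid with a single cell set to `value`."""
    grid = [[None] * 9 for _ in range(9)]
    grid[0][0] = value
    return grid


def is_rejected(grid):
    """True if validate_grid refuses the input."""
    try:
        validate_grid(grid)
    except ValueError:
        return True
    return False


# values on and around the boundaries of the valid range
accepted = [value for value in (1, 9, None) if not is_rejected(grid_with(value))]
rejected = [value for value in (0, 10, -1, "1", 1.0) if is_rejected(grid_with(value))]
# one-dimensional and three-dimensional input
wrong_shapes = [is_rejected([1, 2, 3]), is_rejected([[[1]]])]
```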

Another set of possible tests is to verify the correct work of the function. That is to verify the proposed Sudoku solution is indeed following the rules of the game. This is what we usually refer to as black-box testing. The tester doesn't know how the SUT works internally, they only know the input data and the expected output.

If a person fails to describe at least one such test case they have essentially failed the question. What is the point of a SUT which doesn't crash (suppose that all previous tests passed) but doesn't produce the desired correct result?
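A black-box check of the result doesn't need to know anything about the solver. The sketch below is one possible way to write it; both helper names are my own, the grid is assumed to be a 9x9 list of lists with None for unknown numbers, and the sample grid is generated from a simple shifting pattern rather than taken from a real puzzle.

```python
def is_valid_solution(grid):
    """Check that a 9x9 grid is completely filled and follows the Sudoku rules."""
    expected = set(range(1, 10))
    rows = [set(row) for row in grid]
    cols = [set(col) for col in zip(*grid)]
    boxes = [
        {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
        for br in (0, 3, 6)
        for bc in (0, 3, 6)
    ]
    return all(group == expected for group in rows + cols + boxes)


def keeps_givens(puzzle, solution):
    """Check that the solver did not overwrite any of the known numbers."""
    return all(
        given is None or given == solved
        for puzzle_row, solution_row in zip(puzzle, solution)
        for given, solved in zip(puzzle_row, solution_row)
    )


# a known valid grid built from a shifting pattern
solved = [[(3 * (r % 3) + r // 3 + c) % 9 + 1 for c in range(9)] for r in range(9)]

# swapping two cells in a row keeps the row valid but breaks two columns
broken = [row[:] for row in solved]
broken[0][0], broken[0][1] = broken[0][1], broken[0][0]

# a puzzle with a single unknown cell
puzzle = [row[:] for row in solved]
puzzle[4][4] = None
```

Both checks are needed: a solver could return a perfectly valid grid which has nothing to do with the puzzle it was given.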

Then there are test cases related to the environment in which this Sudoku solver function operates. This is where I examine the creativity of the person, their familiarity with other platforms and to some extent their thinking out of the box. Is the Sudoku solver iterative or recursive? What if we're on an embedded system and recursion is too heavy for it? How much power does the function require, how fast does it work, etc.

A person that provides at least one answer in this category has bonus points over the others who didn't. IMO it is very important for a tester to have experience with various platforms and environments because this helps them see edge cases which others will not be able to see. I also consider a strong plus if the person shows they can operate outside their comfort zone.

If we have time I may ask the applicant to write the tests using a programming language they know. This is to verify their coding and automation skills.

OTOH having the tests as code will show me how much the person knows about testing vs. coding. I've seen solutions where people write a for loop, looping over all numbers from 1 to 100 and testing if they are a valid input to Sudoku(). Obviously this is pointless and they failed the test.

Last but not least, the question asks for testing a particular Sudoku solver implementation. I expect the answers to be designed around the given function. However I've seen answers designed around a Sudoku solver website or described as intermediate states in an interactive Sudoku game (e.g. wrong answers shown in red). I consider these invalid because the question is to test a particular given function, not anything Sudoku related. If you do this in real-life that means you are not testing the SUT directly but maybe touching it indirectly (at best). This is not what a QA job is about.

What Are The Correct Answers

Here are some of the possible tests.

- Test with single dimensional input array - we expect an error;

- Test with 3 dimensional input array - we expect an error;

- Then proceed testing with 2 dimensional array;

- Test with number less than 1 (usually 0) - expect error;

- Test with number greater than 9 (usually 10) - expect error;

- Test how the function handles non-numerical data - chars & symbols (essentially the same thing for our function);

- Test with strings which actually represent a number, e.g. "1";

- Test with floating point numbers, e.g. 1.0, 2.0, 3.0 - may or may not work depending on how the code is written;

- If floating point numbers are accepted, then test with a different locale. Is "1.0" the same as "1,0";

- Test with null, nil, None (whatever the language supports) - this should be a valid value for unknown numbers and not cause a crash;

- Test if the function validates that the provided input follows the Sudoku rules by passing it duplicate numbers in one row, column or square. It should produce an error;

- Test if the input data contains the minimum number of givens, 17 for a general Sudoku, so that a solution can be found. Otherwise the function may go into an endless loop;

- Verify the proposed solution conforms to Sudoku rules;

- Test with a fully solved puzzle as input - output should be exactly the same;

- If on mobile, measure battery consumption for 1 minute of operation. I've seen a game which uses 1% battery power for 1 minute of game play;

- Test for buffer overflows;

- Test for speed of execution (performance);

- Test performance on single and multiple (core) CPUs - depending on the language and how the function is written this may produce a difference or not;

I'm sure I'm missing something so please use the comments below to tell me your suggestions.

Thanks for reading and happy testing!


How To Hire Software Testers, Pt. 1

Many people have asked me how I make sure a person who applies for a QA/software tester position is a good fit. On the opposite side people have asked online how to give correct answers to test related questions at job interviews. I have two general questions to help me decide if a person knows about testing and if they are a good fit for the team or not.

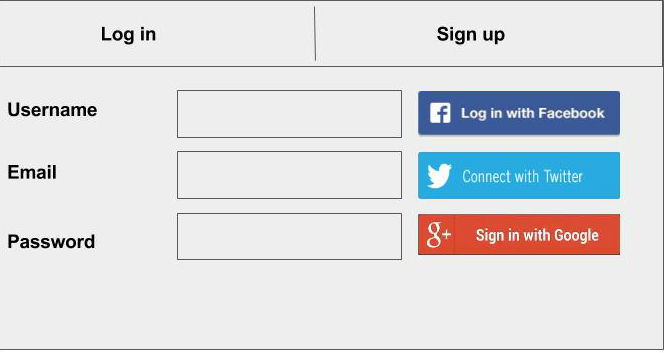

How do You Test a Login Form

You are given the login form above and the following constraints:

- Log in is possible with username and password or through the social networks;

- After successful registration an email with the following content is sent to the user:

Helo and welcome to atodorov.org! Click _here_ to confirm your emeil address.

You have 10 minutes to write down a list of all test cases you can think of!

Behind The Scenes

The question looks trivial but isn't as easy to answer as you may think. If you haven't spent the last 20 years of your life in a cave, chances are that you will give technically correct answers but this is not the only thing I'm looking for.

The question is designed to simulate a real-world scenario, where the QA person is given a piece of software, or requirements document and tasked with creating a test plan for it. The question is intentionally vague because that's how the real world works; most often testers don't have all the requirements and specifications available beforehand.

The time constraint, especially when the interview is performed in person, simulates work under pressure - get the job done as soon as possible.

While I review the answers I'm trying to figure out how the person thinks, not how much they know about technology. I'm trying to figure out what their strong areas are and where they need to improve. IMO being able to think as a tester and having attention to details, being able to easily spot corner cases and look at the problem from different angles is much more important than technical knowledge in a particular domain.

As long as a person is suited to think like a tester they can learn to apply their critical thinking to any software under test and use various testing techniques to discover or safeguard against problems.

- A person that answers quickly and intuitively is better than a person who takes a long time to figure out what to test. I can see they are active thinkers and can work without micro-management and hand-holding.

- A person that goes on and on describing different test cases is better than one who limits themselves to the most obvious cases. I can see they have an exploratory passion, which is the key to finding many bugs and making the software better;

- A person that goes to explore the system in breadth is better than one who keeps banging on the same test case with more and more variations. I can see they are noticing the various aspects of the software (e.g. social login, email confirmation, etc) but also, to some extent, not investing all of their resources (the remaining time to answer) into a single direction. Also in real-world testing, testing the crap out of something is useful up to a point. Afterwards we don't really see any significant value from additional testing efforts.

- A person that is quick to figure out one or two corner cases is better than a person who can't. This tells me they are thinking about what goes on under the hood and trying to predict unpredictable behavior - for example what happens if you try to register with already registered username or email?

- A person that asks questions in order to minimize uncertainty and vagueness is better than the one who doesn't. In the real world if the tester doesn't know something they have to ask. Quite often even developers and product managers don't know the answer. Then how are we developing software if we don't know what it is supposed to do?

- If given more time (written interview), a person that organizes their answers into steps (1, 2, 3) is a bit better than one who simply throws at you random answers without context. Similar thought applies to people who write down their test pre-conditions before writing down scenarios. From this I can see that the person is well organized and will have no trouble writing detailed test cases, with pre-conditions, steps to execute and expected results. This is what QAs do. Also we have the, sometimes tedious, task of organizing all test results into a test case management system (aka test book) for further reference.

- The question intentionally includes some mistakes. In this example 2 spelling errors in the email text. Whoever manages to spot them and tell me about it is better than others who don't spot the errors or assume that's how it is. QA's job is to always question everything and never blindly trust that the state of the system is the way it is. Also simple errors like typos can be embarrassing or generate unnecessary support calls.

- Bravo if you tested not only the outgoing email but also social login. This shows attention to details, not to mention social is 1/3rd of our example system. It also shows that QA's job doesn't end with testing the core, perceived functionality of the system. QA tests everything, even interactions with external systems if that is necessary.

What Are The Correct Answers

I will document some of the possible answers as I recall them from memory. I will update the list with other interesting answers given by students who applied to my QA and Automation 101 course, answering this very same question.

- Test if users can register using valid username, email and password;

- Test if SUT gives an error message when email or password (or username) format doesn't follow a particular format (e.g. no special symbols);

- After registration, test that the user can login successfully;

- Depending on requirements test if the user can login before they have confirmed their email address;

- Test that upon registration a confirmation email is actually sent;

- Spell-check the email text;

- Test if the click here piece of text is a hyperlink;

- Verify that when clicked, the hyperlink successfully confirms the email/activates the account (depending on what confirmed/activated means per requirements);

- Test what happens if the link is clicked a second time;

- Test what happens if the link is clicked after 24 or 48 hrs;

- Test that the social network icons, actually link to the desired SN and not someplace else;

- Test if new user accounts can be created via all specified social networks;

- Test what happens if there is an existing user, who registered with a password, and they (or somebody else) try to register via social with an account that has the same email address, aka account hijacking;

- Same as previous test but try to register a new user, using email address that was previously used with social login;

- Test what happens if users forget their password - intentionally we don't have the '[] Forgot my password' checkbox. This is both usability feature and missing requirements;

- Test for simple SQL injections like Bobby Tables. btw I was given this image as an answer which scored high on the geek-o-meter;

- Test for XSS - Tweetdeck didn't;

- Test if non-activated/non-confirmed usernames expire after some time and can be used again;

- Test the tab order of the fields - something I haven't done in 15 or more years but still valid and I've seen sites getting it wrong quite often;

- When trying to login test what happens when username/password is wrong or empty;

- Test if email is required for login - this isn't clear from the requirements so it is a valid answer. Better answer is to clarify that;

- Test if username/email or password is case sensitive. Valid test and indeed I recently saw a problem where upon registration users entered their emails using some capital letters but they were lower-cased before saving to the DB. Later this broke a piece of code which forgot to apply the lowercase on the input data. The code was handling account reactivation;

- Test if the password field shows the actual password or not. I haven't seen this in person but I'm certain there is some site which maybe used CSS and nice images instead of the default ugly password field and that didn't work on all browsers;

- Test if you can copy&paste the masked password, probably trying to steal somebody else's password. Last time I saw this was on early Windows 95 with the modem connection dialog. Very briefly it allowed you to copy the text from the field and paste it into Notepad to reveal the actual password;

- If we're on mobile (intentionally not specified) test for buffer overflows; Actually test that everywhere and see what happens;

- Test if the social network buttons use the same action verb. In the example we have Log in, Connect and Sign in. This is sort of usability testing and helping have a unified look and feel of the product;

- Test which of the Log in and Sign up tabs is active at the moment. The example is intentionally left to look like a wireframe but it is important for the user to easily tell where they are. Otherwise they'll call support or even worse, simply give up on us;

- Test if all static files (images) will load if they are deployed onto CDN. Not surprisingly I've seen this bug;

- In case we have a "[] Remember me" checkbox, test if it actually remembers the user credentials. Yesterday I saw this same functionality not working on a specialized desktop app in the corner case where you supply a different connection endpoint (server) instead of the ones already provided. The user defined value is accepted but not saved automatically;

- Test if the "Remember me" functionality actually saves your last credentials or only the first ones you provided. There is a similar bug in Grajdanite, where once you enter a wrong email, it is remembered and every time the form is pre-filled with the previous value (which is wrong). I'm yet to report it though;

- Cross-browser testing - hmm, login and registration should work on all browsers you say. It's not browser dependent, is it? Well yeah, login isn't browser dependent unless we did something stupid like pre-handling the form submit via non-cross-platform JavaScript or even accidentally doing so;

- Test with Unicode characters, especially non-Latin ones. Unicode has been around for many years but quite a few apps still haven't learned how to deal with Unicode text properly.
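
To make the Unicode item a bit more concrete, here is a minimal Ruby sketch. It assumes a hypothetical `same_login?` helper which is not part of any real login form. The point is that the same visible user name can be stored as different sequences of code points, so a naive comparison may fail unless both sides are normalized first.

```ruby
# Hypothetical helper, for illustration only: compares two user names
# after normalizing both of them to the same Unicode form (NFC).
def same_login?(typed, stored)
  typed.unicode_normalize(:nfc) == stored.unicode_normalize(:nfc)
end

composed   = "Jos\u00E9"   # "é" as a single code point
decomposed = "Jose\u0301"  # "e" followed by a combining acute accent

puts composed == decomposed             # naive comparison
puts same_login?(composed, decomposed)  # comparison after normalization
```

A test for a real form would feed both variants through the actual registration and login steps; the helper only shows what kind of mismatch to look for.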

I'm certain there are more answers and I will update the list as I figure them out. You can always post in the comments and tell me something I've missed.

How to Pass The Job Interview

This is a question I often see on Quora: "I have a job interview tomorrow. How do I test a login form (or whatever)?"

If this section is what you're after I suspect you are a junior or wanna-be software tester. As you've seen the interviewer isn't really interested in what you know already, at least not as much. We're interested in getting to know how you think in the course of 30-60 minutes.

If you ever find yourself being asked a similar question just start thinking and answering and don't stop. Vocalize your thoughts, even if you don't know what will happen when testing a certain condition. Then keep going on and on. Look at the problem from all angles, explain how you'd test various aspects and features of the SUT. Then move on to the next bit. Always think about what you may have forgotten and revisit your answers - this is what real QAs do - learn from mistakes. Ask questions, don't ever assume anything. If something is unclear, ask for clarification. For example I've seen a person who doesn't use social networks and didn't know how social login/registration worked. They did well by asking me to describe how that works.

Your goal is to make the interviewer ask you to stop answering. Then tell them a few more answers.

However beware of cheating. You may cheat a little bit by saying you will test this and that or design scenarios you have no clue about. Maybe you read them in my blog or elsewhere. If the interviewer knows their job (which they should) they will instantly ask you another question to verify what you say. Don't forget the interviewer is probably an experienced tester and validating assumptions is what they do every day.

For example, if you told me something about security testing or SQL injection or XSS I will ask you to explain that in more detail. If you mentioned only one of them, say SQL injection, but forgot about XSS, I will ask you about the other one. This will immediately tell me if you have a clue what you are talking about.

Feel free to send me suggestions and answers in the comments below. You can find the second part of this post at How do you test a Sudoku solving function.

Thanks for reading and happy testing!


Beware of Double Stubs in RSpec

I've been refactoring some RSpec tests and encountered a method which has been stubbed out twice in a row. This of course led to problems when I tried to delete some of the code which I deemed unnecessary. Using Treehouse's burger example I've recreated my use-case. Comments are in the code below:

class Burger
  attr_reader :options

  def initialize(options={})
    @options = options
  end

  def apply_ketchup(number=0)
    @ketchup = @options[:ketchup]

    # the number is passed from the tests below to make it easier to
    # monitor execution of this method.
    printf "Ketchup applied %d times\n", number
  end

  def apply_mayo_and_ketchup(number=0)
    @options[:mayo] = true
    apply_ketchup(number)
  end

  def has_ketchup_on_it?
    @ketchup
  end
end

describe Burger do
  describe "#apply_mayo_and_ketchup" do
    context "with ketchup and single stubs" do
      let(:burger) { Burger.new(:ketchup => true) }

      it "1: sets the mayo flag to true, ketchup is nil" do
        # this line stubs-out the apply_ketchup method
        # and @ketchup will remain nil b/c the original
        # method is not executed at all
        expect(burger).to receive(:apply_ketchup)

        burger.apply_mayo_and_ketchup(1)

        expect(burger.options[:mayo]).to eq(true)
        expect(burger.has_ketchup_on_it?).to be(nil)
      end

      it "2: sets the mayo and ketchup flags to true" do
        # this line stubs-out the apply_ketchup method
        # but in the end calls the non-stubbed out version as well
        # so that has_ketchup_on_it? will return true !
        expect(burger).to receive(:apply_ketchup).and_call_original

        burger.apply_mayo_and_ketchup(2)

        expect(burger.options[:mayo]).to eq(true)
        expect(burger.has_ketchup_on_it?).to eq(true)
      end
    end

    context "with ketchup and double stubs" do
      let(:burger) { Burger.new(:ketchup => true) }

      before {
        # this line creates a stub for the apply_ketchup method
        allow(burger).to receive(:apply_ketchup)
      }

      it "3: sets the mayo flag to true, ketchup is nil" do
        # this line creates a second stub for the fake apply_ketchup method
        # @ketchup will remain nil b/c the original method which sets its value
        # isn't actually executed. we may as well comment out this line and
        # this will not affect the test at all
        expect(burger).to receive(:apply_ketchup)

        burger.apply_mayo_and_ketchup(3)

        expect(burger.options[:mayo]).to eq(true)
        expect(burger.has_ketchup_on_it?).to be(nil)
      end

      it "4: sets the mayo and ketchup flags to true" do
        # this line creates a second stub for the fake apply_ketchup method.
        # .and_call_original will traverse up the stubs and call the original
        # method. If we don't want to assert that the method has been called
        # or we don't care about its parameters, but only the end result
        # that system state has been changed then this line is redundant!
        # Don't stub & call the original, just call the original method, right?
        # Commenting out this line will cause a failure due to the first stub
        # in before() above. The first stub will execute and @ketchup will remain
        # nil! To set things straight also comment out the allow() line in
        # before()!
        expect(burger).to receive(:apply_ketchup).and_call_original

        burger.apply_mayo_and_ketchup(4)

        expect(burger.options[:mayo]).to eq(true)
        expect(burger.has_ketchup_on_it?).to eq(true)
      end
    end
  end
end

When I see a .and_call_original method after a stub I immediately delete it because in most cases it isn't necessary. Why stub out something just to call it again later? See my comments above. Also the expect to receive && do action sequence is a bit counterintuitive. I prefer the do action & assert results sequence instead.

The problem here comes from the fact that RSpec has very flexible syntax for creating stubs which makes it very easy to abuse them, especially when you have no idea what you're doing. If you write tests with RSpec please make a note of this and try to avoid this mistake.
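
The "do action & assert results" ordering mentioned above can be sketched without any stubs at all. The snippet below is plain Ruby rather than RSpec, so it runs on its own; the Burger class is a trimmed-down copy of the one from the example (no number argument, no printf) and the raise lines only stand in for the expect() calls a real spec would use.

```ruby
# Trimmed-down copy of the Burger class from the example above,
# without the printf call and the number argument.
class Burger
  attr_reader :options

  def initialize(options = {})
    @options = options
  end

  def apply_ketchup
    @ketchup = @options[:ketchup]
  end

  def apply_mayo_and_ketchup
    @options[:mayo] = true
    apply_ketchup
  end

  def has_ketchup_on_it?
    @ketchup
  end
end

# 1. do the action using the real methods, nothing is stubbed out
burger = Burger.new(:ketchup => true)
burger.apply_mayo_and_ketchup

# 2. assert on the resulting state
raise "mayo flag not set" unless burger.options[:mayo] == true
raise "ketchup flag not set" unless burger.has_ketchup_on_it? == true
puts "burger state is as expected"
```

This only covers the case where we care about the end state. If the test really has to prove that apply_ketchup was called, or with which parameters, a message expectation is still the right tool.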

If you'd like to learn more about stubs see Bad Stub Design in DNF.


Hello World QA Challenge

Recently I've been asked on Quora "Do simple programs like hello world have any bugs?" In particular, if the computer hardware and OS are healthy, will there be any bugs in a simple hello-world program?

I'm challenging you to tell me what kinds of bugs you have seen which would easily apply to a very simple program! Below are some I was able to think of.

Localization

Once we add a requirement for our system to work in an environment which supports multiple languages and input methods, not supporting them immediately becomes a bug, although the SUT still functions correctly. For example, if using a French locale I would expect the program to print "Bonjour le monde". Same for German, Spanish, Italian, etc. It becomes even trickier with languages using a non-Latin script, like Bulgarian and Japanese. Depending on your environment you may not be able to display non-Latin script at all. See also How do you test fonts!
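
As a rough illustration, here is what a locale-aware hello world might look like in Ruby. The GREETINGS table and the greeting() helper are made up for this sketch; a real program would more likely use gettext or a similar message catalog. Even this tiny version has new things to test: an unknown language, a missing LANG variable and output in a non-Latin script.

```ruby
# encoding: utf-8

# Hypothetical translation table, hard-coded only for this example.
GREETINGS = {
  "en" => "Hello world",
  "fr" => "Bonjour le monde",
  "bg" => "Здравей, свят",
  "ja" => "こんにちは世界",
}.freeze

# Picks a greeting from a POSIX-style locale string such as "fr_FR.UTF-8".
# Falls back to English when the language is unknown or not set at all.
def greeting(locale)
  language = locale.to_s.split(/[_.@]/).first.to_s.downcase
  GREETINGS.fetch(language, GREETINGS["en"])
end

puts greeting(ENV["LANG"])
```

Whether the Bulgarian and Japanese lines show up correctly still depends on the terminal and the installed fonts, which is exactly the kind of environment problem described above.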

Packaging and distribution

This is an entire class of problems not directly related to the SUT but to the way it is packaged and distributed to its target customers. For a Linux system it makes sense to have RPM or DEB packages. Dependency resolution and proper installation and upgrade of these packages need to be tested and ensured.

A famous example of a high impact packaging bug is Django #19858. During an urgent security release it was discovered that the source package was shipping byte-compiled *.pyc files made with a newer version of Python (2.7). Even worse, there were byte-compiled files without the corresponding source files. Since it was a security release, everyone rushed to upgrade immediately. Everyone who had Python 2.6 saw their website produce ImportError: Bad magic number and crash immediately after the upgrade!

NOTE: byte-compiled files between different versions of Python are incompatible!
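
A release check for this kind of problem can be quite small. The sketch below assumes we already have the list of paths inside the source package; pyc_problems() is a hypothetical helper and the file list is made up, it is not the contents of the actual Django release.

```ruby
# Hypothetical release check: given the list of paths inside a source
# package, report the byte-compiled files found in it and the ones which
# have no matching .py source file next to them.
def pyc_problems(paths)
  compiled = paths.select { |path| path.end_with?(".pyc") }
  orphans  = compiled.reject { |path| paths.include?(path.sub(/\.pyc\z/, ".py")) }
  { :compiled => compiled, :orphans => orphans }
end

# Made-up listing, only for the example.
listing = [
  "pkg/__init__.py",
  "pkg/__init__.pyc",
  "pkg/leftover.pyc",
]

report = pyc_problems(listing)
puts "byte-compiled files: #{report[:compiled].join(', ')}"
puts "without source:      #{report[:orphans].join(', ')}"
```

For a source package both lists should probably be empty, so a build job could simply fail when either of them is not.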

Another one is django-facebook #262 in which version 4.3.0 suddenly grew from 200KiB to 23MiB in size, shipping a ton of extra JPEG images.

Portability

There are so many different portability issues which may affect an otherwise working program. You only need to add a requirement to build/execute on another OS or CPU architecture - for example aarch64 (64-bit ARM). This resulted in hundreds of bugs reported by Dennis Gilmore, for example RHBZ #926850 which is also related to packaging and the build chain.

Then we have the possibility of big endian vs. little endian issues, especially if we run on a Power 8 CPU which supports both modes.

Another one could be 16-bit vs. 32-bit vs. 64-bit memory addressing. For example, on the IBM mainframe (s390) the most significant bit of the address was reserved to easily support applications expecting 24-bit addressing, as well as to sidestep a problem with extending two instructions to handle 32-bit unsigned addresses, which made the address space 31 bits wide!
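
To see what an endianness bug looks like, here is a small Ruby sketch using Array#pack and String#unpack. The value and the hex_bytes() helper are arbitrary choices for the example. The same 32-bit number is written in both byte orders and then read back with the wrong one.

```ruby
# Small helper for the example: prints a binary string as hex bytes.
def hex_bytes(str)
  str.bytes.map { |byte| format("%02x", byte) }.join(" ")
end

value = 0x01020304

# "N" packs a 32-bit unsigned integer in big endian (network) byte order,
# "V" packs the same integer in little endian byte order.
big    = [value].pack("N")
little = [value].pack("V")

puts hex_bytes(big)     # 01 02 03 04
puts hex_bytes(little)  # 04 03 02 01

# Reading the bytes back with the wrong byte order does not raise an
# error, it silently produces a different number.
puts format("0x%08x", big.unpack("V").first)  # 0x04030201
```

Nothing crashes here, which is why such bugs tend to show up only when data written on one architecture is read on another.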

Performance

Not all processors are created equal! Intel (x86_64), ARM and PowerPC all have different instruction sets and numbers of registers. Depending on what sort of calculations you perform, one of the architectures may be more suitable than the others.

Typos

It is not uncommon to mistype even common words like "hello" and "world" and I've rarely seen QAs and developers running spell checkers on all of their source strings. We do this for documentation and occasionally for man pages but for the actual program output or widget labels - almost never.
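
Checking user-visible strings doesn't have to be complicated. Below is a minimal Ruby sketch, assuming a hypothetical misspelled() helper and a two-word dictionary; a real check would load a proper word list or call an external spell checker instead.

```ruby
# Tiny word list for the example only.
KNOWN_WORDS = %w[hello world].freeze

# Returns the words from the given user-visible strings which are not in
# the dictionary. The comparison is case-insensitive.
def misspelled(strings, dictionary = KNOWN_WORDS)
  strings.flat_map { |text| text.downcase.scan(/[a-z]+/) }
         .uniq
         .reject { |word| dictionary.include?(word) }
end

puts misspelled(["Hello, wrold!"]).inspect
```

The harder part in practice is collecting the strings in the first place, for example from translation catalogs or widget definitions.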

Challenge

I find the original question very interesting and a good mental exercise for IT professionals. I will be going through Bugzilla to find examples which illustrate the above points and even more possible problems with a program as simple as hello world and will update this blog accordingly!

Tell me what kinds of bugs you have seen which would easily apply to a very simple program! It's best if you can post links to public bugs and/or a detailed explanation. Thanks!


QA Switch from Waterfall to BDD

For the last two weeks I've been experimenting with Behavior-Driven Development (BDD) in order to find out what it takes for the Quality Assurance department to switch from using the Waterfall method to BDD. Here are my initial observations and thoughts for further investigation.

Background

Developing an entire Linux distribution (or any large product for that matter) is a very complicated task. Traditionally QA has been involved in writing the test plans for the proposed technology updates, then executing and maintaining them during the entire product life-cycle, reporting and verifying tons of bugs along the way. From the point of view of the entire product the process is very close to the traditional waterfall development method. I will be using the term waterfall to describe the old way of doing things and BDD for the new one. In particular I'm referring to the process of analyzing the proposed feature set for the next major version of the product (e.g. Fedora) and designing the necessary test plan documents and test cases.

To get an idea of where QA joins the process see the Fedora 24 Change set. When the planning phase starts we are given these "feature pages" from which QA needs to distill test plans and test cases. The challenges with the waterfall model are that QA joins the planning process rather late and there is not enough time to iron out all the necessary details. Add to this the fact that feature pages are often incomplete and vaguely described, and sometimes looking for the right answers is the hardest part of the job.

QA and BDD

Right now I'm focusing on using the Gherkin Given-When-Then language to prepare feature descriptions and test scenarios from the above feature pages. You can follow my work on GitHub and I will be using it for the examples below. Also see examples from my co-workers 1, 2.