Automatic Upstream Dependency Testing

Ever since RHEL 7.2 python-libs broke s3cmd I've been pondering an age-old problem: how do I know if my software works with the latest upstream dependencies? How can I proactively monitor for new versions and add them to my test matrix?

Mixing together my previous experience with Difio and monitoring upstream sources, and Forbes Lindesay's GitHub Automation talk at DEVit Conf, I came up with a plan:

- Make an application which will execute when new upstream version is available;

- Automatically update .travis.yml for the projects I'm interested in;

- Let Travis-CI execute my test suite for all available upstream versions;

- Profit!

How Does It Work

First we need to monitor upstream! RubyGems.org has a nice webhooks interface; you can even trigger on individual packages. PyPI however doesn't have anything like this :(. My solution is to run a cron job every hour and parse their RSS stream for newly released packages. This worked previously for Difio, so I reused one function from that code.
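The parsing step can be tried offline. Below is a minimal Python 3 sketch, assuming the feed items carry a "name version" title and a pubDate in the format used by the full script further down. The sample feed, the function name find_releases and the dates are made up for illustration; only FOO 0.3.2 echoes the example in this post.

```python
from datetime import datetime
from xml.dom.minidom import parseString

# Made-up sample feed, shaped like the items the monitor looks at.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>sample feed</title>
    <item>
      <title>FOO 0.3.2</title>
      <pubDate>26 Nov 2015 10:00:00 GMT</pubDate>
    </item>
    <item>
      <title>unrelated-package 1.0</title>
      <pubDate>26 Nov 2015 10:05:00 GMT</pubDate>
    </item>
  </channel>
</rss>"""


def find_releases(rss_text, watched):
    """Return (name, version, released_on) for every watched package in the feed."""
    releases = []
    dom = parseString(rss_text)
    for item in dom.getElementsByTagName("item"):
        title = item.getElementsByTagName("title")[0].firstChild.data
        pub_date = item.getElementsByTagName("pubDate")[0].firstChild.data
        # The title is "<name> <version>"; split on the last space only.
        name, version = title.strip().rsplit(" ", 1)
        if name in watched:
            released_on = datetime.strptime(pub_date.strip(), "%d %b %Y %H:%M:%S GMT")
            releases.append((name, version, released_on))
    return releases


if __name__ == "__main__":
    for release in find_releases(SAMPLE_RSS, {"FOO"}):
        print(release)
```

Keeping the parser separate from the HTTP call makes it easy to test against a saved copy of the feed.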

After finding anything we're interested in comes the hard part: automatically updating .travis.yml using the GitHub API. I've described this in more detail here. This time I've slightly modified the code to update only when needed and accept more parameters so it can be reused.

Travis-CI has a clean interface to specify environment variables, and defining several of them creates a test matrix. This is exactly what I'm doing. .travis.yml is updated with a new ENV setting, which determines the upstream package version. After the commit a new build is triggered which includes the expanded test matrix.

Example

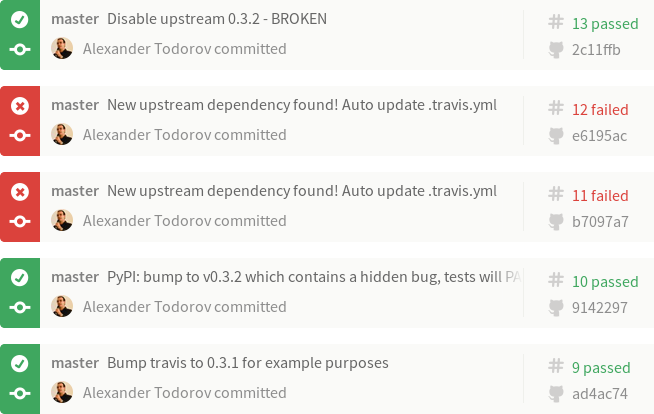

Imagine that our Project 2501 depends on FOO version 0.3.1. The build log illustrates what happened:

- Build #9 is what we've tested with FOO-0.3.1 and released to production. Test result is PASS!

- Build #10 - meanwhile upstream releases FOO-0.3.2 which causes our project to break. We're not aware of this and continue developing new features while all test results still PASS! When our customers upgrade their systems Project 2501 will break! Tests didn't catch it because the test matrix wasn't updated. Please ignore the actual commit message in the example! I've used the same repository for the dummy dependency package.

- Build #11 - the monitoring solution finds FOO-0.3.2 and updates the test matrix automatically. The build immediately breaks! More precisely the test with version 0.3.2 fails!

- Build #12 - we've alerted FOO.org about their problem and they've released FOO-0.3.3. Our monitor has found that and updated the test matrix. However the 0.3.2 test job still fails!

- Build #13 - we decide to work around the 0.3.2 failure or simply handle the error gracefully. In this example I've removed version 0.3.2 from the test matrix to simulate that. In reality I wouldn't touch .travis.yml but instead update my application and tests to check for that particular version. All test results are PASS again!

Btw Build #11 above was triggered manually (./monitor.py) while Build #12 came from OpenShift, my hosting environment.

At present I have this monitoring enabled for my new Markdown extensions and will also add it to django-s3-cache once it migrates to Travis-CI (it uses drone.io now).

Enough Talk, Show me the Code

#!/usr/bin/env python

import os
import sys
import json
import base64
import httplib
from pprint import pprint
from datetime import datetime
from xml.dom.minidom import parseString

def get_url(url, post_data = None):
    # GitHub requires a valid UA string
    headers = {
        'User-Agent' : 'Mozilla/5.0 (X11; Linux x86_64; rv:10.0.5) Gecko/20120601 Firefox/10.0.5',
    }

    # shortcut for GitHub API calls
    if url.find("://") == -1:
        url = "https://api.github.com%s" % url

    if url.find('api.github.com') > -1:
        if not os.environ.has_key("GITHUB_TOKEN"):
            raise Exception("Set the GITHUB_TOKEN variable")
        else:
            headers.update({
                'Authorization': 'token %s' % os.environ['GITHUB_TOKEN']
            })

    (proto, host_path) = url.split('//')
    (host_port, path) = host_path.split('/', 1)
    path = '/' + path

    if url.startswith('https'):
        conn = httplib.HTTPSConnection(host_port)
    else:
        conn = httplib.HTTPConnection(host_port)

    method = 'GET'
    if post_data:
        method = 'POST'
        post_data = json.dumps(post_data)

    conn.request(method, path, body=post_data, headers=headers)
    response = conn.getresponse()

    if (response.status == 404):
        raise Exception("404 - %s not found" % url)

    result = response.read().decode('UTF-8', 'replace')
    try:
        return json.loads(result)
    except ValueError:
        # not a JSON response
        return result

def post_url(url, data):
    return get_url(url, data)

def monitor_rss(config):
    """
    Scan the PyPI RSS feeds to look for new packages.
    If name is found in config then execute the specified callback.

    @config is a dict with keys matching package names and values
    are lists of dicts
        {
            'cb' : a_callback,
            'args' : dict
        }
    """
    rss = get_url("https://pypi.python.org/pypi?:action=rss")
    dom = parseString(rss)
    for item in dom.getElementsByTagName("item"):
        try:
            title = item.getElementsByTagName("title")[0]
            pub_date = item.getElementsByTagName("pubDate")[0]

            (name, version) = title.firstChild.wholeText.split(" ")
            released_on = datetime.strptime(pub_date.firstChild.wholeText, '%d %b %Y %H:%M:%S GMT')

            if name in config.keys():
                print name, version, "found in config"
                for cfg in config[name]:
                    try:
                        args = cfg['args']
                        args.update({
                            'name' : name,
                            'version' : version,
                            'released_on' : released_on
                        })

                        # execute the call back
                        cfg['cb'](**args)
                    except Exception, e:
                        print e
                        continue
        except Exception, e:
            print e
            continue

def update_travis(data, new_version):
    travis = data.rstrip()
    new_ver_line = " - VERSION=%s" % new_version
    if travis.find(new_ver_line) == -1:
        travis += "\n" + new_ver_line + "\n"
    return travis

def update_github(**kwargs):
    """
    Update GitHub via API
    """
    GITHUB_REPO = kwargs.get('GITHUB_REPO')
    GITHUB_BRANCH = kwargs.get('GITHUB_BRANCH')
    GITHUB_FILE = kwargs.get('GITHUB_FILE')

    # step 1: Get a reference to HEAD
    data = get_url("/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH))
    HEAD = {
        'sha' : data['object']['sha'],
        'url' : data['object']['url'],
    }

    # step 2: Grab the commit that HEAD points to
    data = get_url(HEAD['url'])
    # remove what we don't need for clarity
    for key in data.keys():
        if key not in ['sha', 'tree']:
            del data[key]
    HEAD['commit'] = data

    # step 4: Get a hold of the tree that the commit points to
    data = get_url(HEAD['commit']['tree']['url'])
    HEAD['tree'] = { 'sha' : data['sha'] }

    # intermediate step: get the latest content from GitHub and make an updated version
    for obj in data['tree']:
        if obj['path'] == GITHUB_FILE:
            data = get_url(obj['url']) # get the blob from the tree
            data = base64.b64decode(data['content'])
            break

    old_travis = data.rstrip()
    new_travis = update_travis(old_travis, kwargs.get('version'))

    # bail out if nothing changed
    if new_travis == old_travis:
        print "new == old, bailing out", kwargs
        return

    ####
    #### WARNING WRITE OPERATIONS BELOW
    ####

    # step 3: Post your new file to the server
    data = post_url(
        "/repos/%s/git/blobs" % GITHUB_REPO,
        {
            'content' : new_travis,
            'encoding' : 'utf-8'
        }
    )
    HEAD['UPDATE'] = { 'sha' : data['sha'] }

    # step 5: Create a tree containing your new file
    data = post_url(
        "/repos/%s/git/trees" % GITHUB_REPO,
        {
            "base_tree": HEAD['tree']['sha'],
            "tree": [{
                "path": GITHUB_FILE,
                "mode": "100644",
                "type": "blob",
                "sha": HEAD['UPDATE']['sha']
            }]
        }
    )
    HEAD['UPDATE']['tree'] = { 'sha' : data['sha'] }

    # step 6: Create a new commit
    data = post_url(
        "/repos/%s/git/commits" % GITHUB_REPO,
        {
            "message": "New upstream dependency found! Auto update .travis.yml",
            "parents": [HEAD['commit']['sha']],
            "tree": HEAD['UPDATE']['tree']['sha']
        }
    )
    HEAD['UPDATE']['commit'] = { 'sha' : data['sha'] }

    # step 7: Update HEAD, but don't force it!
    data = post_url(
        "/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH),
        {
            "sha": HEAD['UPDATE']['commit']['sha']
        }
    )

    if data.has_key('object'): # PASS
        pass
    else: # FAIL
        print data['message']

if __name__ == "__main__":
    config = {
        "atodorov-test" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/bztest',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            }
        ],
        "Markdown" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-Bugzilla-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-Code-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-BlockQuote-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
        ],
    }

    # check the RSS to see if we have something new
    monitor_rss(config)


Hosting Multiple Python WSGI Scripts on OpenShift

With OpenShift you can host WSGI Python applications. By default the Python cartridge comes with a simple WSGI app and the following directory layout:

./

./.openshift/

./requirements.txt

./setup.py

./wsgi.py

I wanted to add my GitHub Bugzilla Hook in a subdirectory (git submodule actually) and simply reserve a URL which will be served by this app. My intention is also to add other small scripts to the same cartridge in order to better utilize the available resources.

Using WSGIScriptAlias inside .htaccess DOESN'T WORK! OpenShift errors out when WSGIScriptAlias is present. I suspect this to be a known limitation and I have an open support case with Red Hat to confirm this.

My workaround is to configure the URL paths from the wsgi.py file in the root directory. For example:

diff --git a/wsgi.py b/wsgi.py
index c443581..20e2bf5 100644
--- a/wsgi.py
+++ b/wsgi.py
@@ -12,7 +12,12 @@ except IOError:
 # line, it's possible required libraries won't be in your searchable path
 #
+from github_bugzilla_hook import wsgi as ghbzh
+
 def application(environ, start_response):
+    # custom paths
+    if environ['PATH_INFO'] == '/github-bugzilla-hook/':
+        return ghbzh.application(environ, start_response)
     ctype = 'text/plain'
     if environ['PATH_INFO'] == '/health':

This does the job and is almost the same as configuring the path in .htaccess.
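When more than one script needs its own URL, the same idea can be expressed as a small routing table instead of a chain of if statements. This is only a sketch under my own assumptions: hook_app and default_app are hypothetical stand-ins for the real applications (for example ghbzh.application), and ROUTES is a name I made up.

```python
from wsgiref.util import setup_testing_defaults


def hook_app(environ, start_response):
    # Hypothetical stand-in for a mounted sub-application.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hook"]


def default_app(environ, start_response):
    # Hypothetical stand-in for the cartridge's original application.
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"default"]


# Exact PATH_INFO values mapped to the WSGI callable that serves them.
ROUTES = {
    "/github-bugzilla-hook/": hook_app,
}


def application(environ, start_response):
    handler = ROUTES.get(environ.get("PATH_INFO", ""), default_app)
    return handler(environ, start_response)


def call(path):
    """Invoke the dispatcher with a fake request and return (status, body)."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    statuses = []
    body = application(environ, lambda status, headers: statuses.append(status))
    return statuses[0], b"".join(body)


if __name__ == "__main__":
    print(call("/github-bugzilla-hook/"))
    print(call("/health"))
```

Adding another script then means adding one entry to ROUTES.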

I hope it helps you!


This Week: Python Testing, Chris DiBona on Open Source and OpenShift ENV Variables

Here is a random collection of links I came across this week which appear interesting to me but which I don't have time to blog about in detail.

Making a Multi-branch Test Server for Python Apps

If you are wondering how to test different feature branches of your Python application but don't have the resources to create separate test servers this is for you: http://depressedoptimism.com/blog/2013/10/8/making-a-multi-branch-test-server!

Kudos to the python-django-bulgaria Google group for finding this link!

OpenSource.com Interview with Chris DiBona

Just read it at http://opensource.com/business/13/10/interview-chris-dibona.

I particularly like the part where he called open source "brutal".

You once called open source “brutal”. What did you mean by that?

...

I think that it is because open source projects are able to only work with the productive people and ignore everyone else. That behavior can come across as very harsh or exclusionary, and that's because it is that: brutally harsh and exclusionary of anyone who isn't contributing.

...

So, I guess what I'm saying is that survival of the fittest as practiced in the open source world is a pretty brutal mechanism, but it works very very well for producing quality software. Boy is it hard on newcomers though...

OpenShift Finally Introduces Environment Variables

Yes! Finally!

rhc set-env VARIABLE1=VALUE1 -a myapp

No need for my workaround anymore! I will give the new feature a go very soon.

Read more about it at the OpenShift blog: https://www.openshift.com/blogs/taking-advantage-of-environment-variables-in-openshift-php-apps.

Have you found anything interesting this week? Please share in the comments below! Thanks!


Tip: Setting Secure ENV variables on Red Hat OpenShift

OpenShift is still missing the client side tools to set environment variables without exposing the values in source code but there is a way to do it. Here is how.

First ssh into your application and navigate to the $OPENSHIFT_DATA_DIR.

Create a file to define your environment.

$ rhc ssh -a difio

Password: ***

[difio-otb.rhcloud.com 51d32a854382ecf7a9000116]\> cd $OPENSHIFT_DATA_DIR

[difio-otb.rhcloud.com data]\> vi myenv.sh

[difio-otb.rhcloud.com data]\> cat myenv.sh

#!/bin/bash

export MYENV="hello"

[difio-otb.rhcloud.com data]\> chmod a+x myenv.sh

[difio-otb.rhcloud.com data]\> exit

Connection to difio-otb.rhcloud.com closed.

Now modify your code and git push to OpenShift. Then ssh into the app once again to verify that your configuration is still in place.

[atodorov@redbull difio]$ rhc ssh -a difio

Password: ***

[difio-otb.rhcloud.com 51d32a854382ecf7a9000116]\> cd $OPENSHIFT_DATA_DIR

[difio-otb.rhcloud.com data]\> ls -l

total 4

-rwxr-xr-x. 1 51d32a854382ecf7a9000116 51d32a854382ecf7a9000116 34 8 jul 14,33 myenv.sh

[difio-otb.rhcloud.com data]\>

Use the defined variables as you wish.
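From a Python application one way to pick these values up is to read the file at startup. The sketch below is an assumption on my part, not something OpenShift provides: load_env_file is a hypothetical helper that understands only plain `export NAME="value"` lines and does no shell expansion.

```python
import os
import re

# Matches lines such as: export MYENV="hello"
_EXPORT_RE = re.compile(r'^\s*export\s+([A-Za-z_][A-Za-z0-9_]*)=(.*)$')


def load_env_file(path, environ=None):
    """Copy simple `export NAME=value` lines from path into environ."""
    if environ is None:
        environ = os.environ
    loaded = {}
    with open(path) as handle:
        for line in handle:
            match = _EXPORT_RE.match(line.rstrip("\n"))
            if not match:
                continue  # skips the shebang, comments and blank lines
            name, value = match.group(1), match.group(2).strip()
            # Drop one pair of matching surrounding quotes.
            if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
                value = value[1:-1]
            environ[name] = value
            loaded[name] = value
    return loaded
```

In wsgi.py this could be called as load_env_file(os.path.join(os.environ["OPENSHIFT_DATA_DIR"], "myenv.sh")) before the settings are imported.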


How to Deploy Python Hotfix on RedHat OpenShift Cloud

In this article I will show you how to deploy hotfix versions for Python packages on the RedHat OpenShift PaaS cloud.

Background

You are already running a Python application on your OpenShift instance. You are using some 3rd party dependencies when you find a bug in one of them. You go forward, fix the bug and submit a pull request. You don't want to wait for upstream to release a new version but rather build a hotfix package yourself and deploy to production immediately.

Solution

There are two basic approaches to solving this problem:

- Include the hotfix package source code in your application, i.e. add it to your git tree or;

- Build the hotfix separately and deploy as a dependency. Don't include it in your git tree, just add a requirement on the hotfix version.

I will talk about the latter. The tricky part here is to instruct the cloud environment to use your package (including the proper location) and not upstream or their local mirror.

Python applications hosted at OpenShift don't support requirements.txt, which can point to various package sources and even install packages directly from GitHub. They support setup.py, which fetches packages from http://pypi.python.org but is flexible enough to support other locations.

Building the hotfix

First of all we'd like to build a hotfix package. This will be the upstream version that we are currently using plus the patch for our critical issue:

$ wget https://pypi.python.org/packages/source/p/python-magic/python-magic-0.4.3.tar.gz

$ tar -xzvf python-magic-0.4.3.tar.gz

$ cd python-magic-0.4.3

$ curl https://github.com/ahupp/python-magic/pull/31.patch | patch

Verify the patch has been applied correctly and then modify setup.py to increase the version string. In this case I will set it to version='0.4.3.1'. Then build the new package using python setup.py sdist and upload it to a web server.

Deploying to OpenShift

Modify setup.py and specify the hotfix version. Because this version is not on PyPI and will not be on OpenShift's local mirror you need to provide the location where it can be found. This is done with the dependency_links parameter to setup(). Here's how it looks:

diff --git a/setup.py b/setup.py
index c6e837c..2daa2a9 100644
--- a/setup.py
+++ b/setup.py
@@ -6,5 +6,6 @@ setup(name='YourAppName',
       author='Your Name',
       author_email='example@example.com',
       url='http://www.python.org/sigs/distutils-sig/',
-      install_requires=['python-magic==0.4.3'],
+      dependency_links=['https://s3.amazonaws.com/atodorov/blog/python-magic-0.4.3.1.tar.gz'],
+      install_requires=['python-magic==0.4.3.1'],
      )

Now just git push to OpenShift and observe the console output:

remote: Processing dependencies for YourAppName==1.0

remote: Searching for python-magic==0.4.3.1

remote: Best match: python-magic 0.4.3.1

remote: Downloading https://s3.amazonaws.com/atodorov/blog/python-magic-0.4.3.1.tar.gz

remote: Processing python-magic-0.4.3.1.tar.gz

remote: Running python-magic-0.4.3.1/setup.py -q bdist_egg --dist-dir /tmp/easy_install-ZRVMBg/python-magic-0.4.3.1/egg-dist-tmp-R_Nxie

remote: zip_safe flag not set; analyzing archive contents...

remote: Removing python-magic 0.4.3 from easy-install.pth file

remote: Adding python-magic 0.4.3.1 to easy-install.pth file

Congratulations! Your hotfix package has just been deployed.

This approach should work for other cloud providers and other programming languages as well. Let me know if you have any experience with that.


Email Logging for Django on RedHat OpenShift with Amazon SES

Sending email in the cloud can be tricky. IPs of cloud providers are blacklisted because of frequent abuse. For that reason I use Amazon SES as my email backend. Here is how to configure Django to send emails to site admins when something goes wrong.

# Valid addresses only.
ADMINS = (
    ('Alexander Todorov', 'atodorov@example.com'),
)

LOGGING = {
    'version': 1,
    'disable_existing_loggers': False,
    'handlers': {
        'mail_admins': {
            'level': 'ERROR',
            'class': 'django.utils.log.AdminEmailHandler'
        }
    },
    'loggers': {
        'django.request': {
            'handlers': ['mail_admins'],
            'level': 'ERROR',
            'propagate': True,
        },
    }
}

# Used as the From: address when reporting errors to admins
# Needs to be verified in Amazon SES as a valid sender
SERVER_EMAIL = 'django@example.com'

# Amazon Simple Email Service settings
AWS_SES_ACCESS_KEY_ID = 'xxxxxxxxxxxx'
AWS_SES_SECRET_ACCESS_KEY = 'xxxxxxxx'
EMAIL_BACKEND = 'django_ses.SESBackend'

You also need the django-ses dependency.

See http://docs.djangoproject.com/en/dev/topics/logging for more details on how to customize your logging configuration.

I am using this configuration successfully at RedHat's OpenShift PaaS environment. Other users have reported it works for them too. It should work with any other PaaS provider.


Using OpenShift as Amazon CloudFront Origin Server

It's been several months since the start of Difio and I have started migrating various parts of the platform to a CDN. The first to go are static files like CSS, JavaScript, images and such. In this article I will show you how to get started with Amazon CloudFront and OpenShift. It is very easy once you understand how it works.

Why CloudFront and OpenShift

Amazon CloudFront is cheap and easy to set up with virtually no maintenance. The most important feature is that it can fetch content from any public website. Integrating it together with OpenShift gives some nice benefits:

- All static assets are managed with Git and stored in the same place where the application code and HTML is - easy to develop and deploy;

- No need for external service to host the static files;

- CloudFront will be serving the files so network load on OpenShift is minimal;

- Easy to manage versioned URLs because HTML and static assets are in the same repo - more on this later;

Object expiration

CloudFront will cache your objects for a certain period and then expire them. Frequently used objects are expired less often. Depending on the content you may want to update the cache more or less frequently. In my case CSS and JavaScript files change rarely so I wanted to tell CloudFront to not expire the files quickly. I did this by telling Apache to send a custom value for the Expires header.

$ curl http://d71ktrt2emu2j.cloudfront.net/static/v1/css/style.css -D headers.txt

$ cat headers.txt

HTTP/1.0 200 OK

Date: Mon, 16 Apr 2012 19:02:16 GMT

Server: Apache/2.2.15 (Red Hat)

Last-Modified: Mon, 16 Apr 2012 19:00:33 GMT

ETag: "120577-1b2d-4bdd06fc6f640"

Accept-Ranges: bytes

Content-Length: 6957

Cache-Control: max-age=31536000

Expires: Tue, 16 Apr 2013 19:02:16 GMT

Content-Type: text/css

Strict-Transport-Security: max-age=15768000, includeSubDomains

Age: 73090

X-Cache: Hit from cloudfront

X-Amz-Cf-Id: X558vcEOsQkVQn5V9fbrWNTdo543v8VStxdb7LXIcUWAIbLKuIvp-w==,e8Dipk5FSNej3e0Y7c5ro-9mmn7OK8kWfbaRGwi1ww8ihwVzSab24A==

Via: 1.0 d6343f267c91f2f0e78ef0a7d0b7921d.cloudfront.net (CloudFront)

Connection: close

All headers before Strict-Transport-Security come from the origin server.

Versioning

Sometimes however you need to update the files and force CloudFront to update the content. The recommended way to do this is to use URL versioning and update the path to the files which changed. This will force CloudFront to cache and serve the content under the new path while keeping the old content available until it expires. This way your visitors will not be viewing your site with the new CSS and old JavaScript.

There are many ways to do this and there are some nice frameworks as well. For Python there is webassets. I don't have many static files so I opted for no additional dependencies. Instead I will be updating the versions by hand.

What comes to mind is using mod_rewrite to redirect the versioned URLs back to non-versioned ones. However there's a catch. If you do this CloudFront will cache the redirect itself, not the content. The next time visitors hit CloudFront they will receive the cached redirect and follow it back to your origin server, which defeats the purpose of having a CDN.

To do it properly you have to rewrite the URLs but still return a 200 response code and the content which needs to be cached. This is done with mod_proxy:

RewriteEngine on

RewriteRule ^VERSION-(\d+)/(.*)$ http://%{ENV:OPENSHIFT_INTERNAL_IP}:%{ENV:OPENSHIFT_INTERNAL_PORT}/static/$2 [P,L]

This .htaccess trick doesn't work on OpenShift though. mod_proxy is not enabled at the moment. See bug 812389 for more info.

Luckily I was able to use symlinks to point to the content. Here's how it looks:

$ pwd

/home/atodorov/difio/wsgi/static

$ cat .htaccess

ExpiresActive On

ExpiresDefault "access plus 1 year"

$ ls -l

drwxrwxr-x. 6 atodorov atodorov 4096 16 Apr 21,31 o

lrwxrwxrwx. 1 atodorov atodorov 1 16 Apr 21,47 v1 -> o

settings.py:

STATIC_URL = '//d71ktrt2emu2j.cloudfront.net/static/v1/'

HTML template:

<link type="text/css" rel="stylesheet" media="screen" href="{{ STATIC_URL }}css/style.css" />
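Since I update versions by hand, bumping one comes down to adding a symlink and changing STATIC_URL. A minimal sketch of those two steps in Python follows, assuming the layout shown above (a real directory named o and links named v1, v2 and so on next to it). The helper names add_version_link and static_url are hypothetical.

```python
import os


def add_version_link(static_dir, version, target="o"):
    """Create static_dir/v<version> -> target unless the link already exists."""
    link_path = os.path.join(static_dir, "v%d" % version)
    if not os.path.islink(link_path):
        # A relative target keeps the link valid wherever the repo is checked out.
        os.symlink(target, link_path)
    return link_path


def static_url(cdn_host, version):
    """Build the value for STATIC_URL for the given CDN host and version."""
    return "//%s/static/v%d/" % (cdn_host, version)
```

Both v1 and v2 point at the same directory, so old URLs keep working until CloudFront expires them.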

How to implement it

First you need to split all CSS and JavaScript from your HTML if you haven't done so already.

Then place everything under your git repo so that OpenShift will serve the files. For Python applications place the files under wsgi/static/ directory in your git repo.

Point all of your HTML templates to the static location on OpenShift and test if everything works as expected. This is best done if you're using some sort of template language and store the location in a single variable which you can change later. Difio uses Django and the STATIC_URL variable of course.

Create your CloudFront distribution - don't use Amazon S3, instead configure a custom origin server. Write down your CloudFront URL. It will be something like 1234xyz.cloudfront.net.

Every time a request hits CloudFront it will check if the object is present in the cache. If not present CloudFront will fetch the object from the origin server and populate the cache. Then the object is sent to the user.

Update your templates to point to the new cloudfront.net URL and redeploy your website!
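The cache behaviour described in the steps above can be modelled in a few lines. This is a toy model for illustration only, not how CloudFront is implemented: it ignores expiration entirely, and the class name PullThroughCache is made up.

```python
class PullThroughCache:
    """Toy pull-through cache: fetch from the origin on a miss, then serve from memory."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin
        self._objects = {}
        self.origin_hits = 0

    def get(self, path):
        if path not in self._objects:
            # Cache miss: ask the origin server and remember the answer.
            self.origin_hits += 1
            self._objects[path] = self._fetch(path)
        return self._objects[path]


if __name__ == "__main__":
    origin = {"/static/v1/css/style.css": b"body {}"}
    cache = PullThroughCache(origin.__getitem__)
    cache.get("/static/v1/css/style.css")
    cache.get("/static/v1/css/style.css")
    print(cache.origin_hits)
```

The model also shows why versioned URLs work: a new path is simply a new cache key, so the first request for it goes back to the origin.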


OpenShift Cron Takes Over Celerybeat

Celery is an asynchronous task queue/job queue based on distributed message passing. You can define tasks as Python functions, execute them in the background and in a periodic fashion. Difio uses Celery for virtually everything. Some of the tasks are scheduled after some event takes place (like user pressed a button) or scheduled periodically.

Celery provides several components of which celerybeat is the periodic task scheduler. When combined with Django it gives you a very nice admin interface which allows periodic tasks to be added to the scheduler.

Why change

Difio has relied on celerybeat for a couple of months. Back then, when Difio launched, there was no cron support for OpenShift so running celerybeat sounded reasonable. It used to run on a dedicated virtual server and for most of the time that was fine.

There were a number of issues which Difio faced during its first months:

- celerybeat would sometimes die due to no free memory on the virtual instance. When that happened no new tasks were scheduled and data was left unprocessed. On top of that, a higher memory instance and the processing power which comes with it cost extra money.

- Difio is split into several components which need to have the same code base locally - the most important are database settings and the periodic tasks code. On at least one occasion celerybeat failed to start because of buggy task code. The offending code was fixed in the application server on OpenShift but not properly synced to the celerybeat instance. Keeping code in sync is a priority for distributed projects which rely on Celery.

- Celery and django-celery seem to be updated quite often. This poses a significant risk of ending up with different versions on the scheduler, worker nodes and the app server. This will bring the whole application to a halt if at some point a backward incompatible change is introduced and not properly tested and updated. Keeping infrastructure components in sync can be a big challenge and I try to minimize this effort as much as possible.

- Having to navigate to the admin pages every time I add a new task or want to change the execution frequency doesn't feel very natural for a console user like myself and IMHO is less productive. For the record I primarily use mcedit. I wanted to have something closer to the write, commit and push workflow.

The take over

It's been some time since OpenShift introduced the cron cartridge and I decided to give it a try.

The first thing I did is to write a simple script which can execute any task from the difio.tasks module by piping it to the Django shell (a Python shell actually).

#!/bin/bash
#
# Copyright (c) 2012, Alexander Todorov <atodorov@nospam.otb.bg>
#
# This script is symlinked to from the hourly/minutely, etc. directories
#
# SYNOPSIS
#
# ./run_celery_task cron_search_dates
#
# OR
#
# ln -s run_celery_task cron_search_dates
# ./cron_search_dates
#

TASK_NAME=$1
[ -z "$TASK_NAME" ] && TASK_NAME=$(basename $0)

if [ -n "$OPENSHIFT_APP_DIR" ]; then
    source $OPENSHIFT_APP_DIR/virtenv/bin/activate
    export PYTHON_EGG_CACHE=$OPENSHIFT_DATA_DIR/.python-eggs
    REPO_DIR=$OPENSHIFT_REPO_DIR
else
    REPO_DIR=$(dirname $0)"/../../.."
fi

echo "import difio.tasks; difio.tasks.$TASK_NAME.delay()" | $REPO_DIR/wsgi/difio/manage.py shell

This is a multicall script which allows symlinks with different names to point to it. Thus to add a new task to cron I just need to make a symlink to the script from one of the hourly/, minutely/, daily/, etc. directories under cron/.

The script accepts a parameter as well, which allows me to execute it locally for debugging purposes or to schedule some tasks out of band. This is how it looks on the file system:

$ ls -l .openshift/cron/hourly/

some_task_name -> ../tasks/run_celery_task

another_task -> ../tasks/run_celery_task
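The multicall trick itself is not specific to bash. Here is a minimal Python sketch of the same name resolution, assuming the same rule as the script above: an explicit argument wins, otherwise the name the script was invoked under is used. The function name resolve_task_name is hypothetical.

```python
import os
import sys


def resolve_task_name(argv):
    """Return the task name from argv[1], falling back to the invoked file name."""
    if len(argv) > 1 and argv[1]:
        return argv[1]
    # Invoked through a symlink: argv[0] carries the symlink's name.
    return os.path.basename(argv[0])


if __name__ == "__main__":
    print(resolve_task_name(sys.argv))
```

Called as ./run_celery_task cron_search_dates it resolves to the argument, while a symlink named some_task_name resolves to its own name.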

After having done these preparations I only had to embed the cron cartridge and git push to OpenShift:

rhc-ctl-app -a difio -e add-cron-1.4 && git push

What's next

At present OpenShift can schedule your jobs every minute, hour, day, week or month and does so using the run-parts script. You can't schedule a script to execute at 4:30 every Monday or every 45 minutes for example. See rhbz #803485 if you want to follow the progress. Luckily Difio doesn't use this sort of job scheduling for the moment.

Difio has been scheduling periodic tasks from OpenShift cron for a few days already. It seems to work reliably and with no issues. One less component to maintain and worry about. More time to write code.


Tip: How to Get to the OpenShift Shell

I wanted to examine the Perl environment on OpenShift and got tired of making snapshots, unzipping the archive and poking through the files. I wanted a shell. Here's how to get one.

- Get the application info first

$ rhc-domain-info
Password:

Application Info
================
myapp
    Framework: perl-5.10
    Creation: 2012-03-08T13:34:46-04:00
    UUID: 8946b976ad284cf5b2401caf736186bd
    Git URL: ssh://8946b976ad284cf5b2401caf736186bd@myapp-mydomain.rhcloud.com/~/git/myapp.git/
    Public URL: http://myapp-mydomain.rhcloud.com/
    Embedded: None

- The Git URL has your username and host

- Now just ssh into the application

$ ssh 8946b976ad284cf5b2401caf736186bd@myapp-mydomain.rhcloud.com

    Welcome to OpenShift shell

    This shell will assist you in managing OpenShift applications.

    !!! IMPORTANT !!! IMPORTANT !!! IMPORTANT !!!
    Shell access is quite powerful and it is possible for you to
    accidentally damage your application. Proceed with care!
    If worse comes to worst, destroy your application with 'rhc app destroy'
    and recreate it
    !!! IMPORTANT !!! IMPORTANT !!! IMPORTANT !!!

    Type "help" for more info.

[myapp-mydomain.rhcloud.com ~]\>

Voila!


How to Update Dependencies on OpenShift

If you are already running some cool application on OpenShift it could be the case that you have to update some of the packages installed as dependencies. Here is an example for an application using the python-2.6 cartridge.

Pull latest upstream packages

The simplest method is to update everything to the latest upstream versions.

- Backup! Backup! Backup!

rhc-snapshot -a mycoolapp
mv mycoolapp.tar.gz mycoolapp-backup-before-update.tar.gz

- If you haven't specified any particular version in setup.py it will look like this:

...
install_requires=[
    'difio-openshift-python',
    'MySQL-python',
    'Markdown',
],
...

- To update simply push to OpenShift instructing it to rebuild your virtualenv:

cd mycoolapp/
touch .openshift/markers/force_clean_build
git add .openshift/markers/force_clean_build
git commit -m "update to latest upstream"
git push

Voila! The environment hosting your application is rebuilt from scratch.

Keeping some packages unchanged

Suppose that before the update you have Markdown-2.0.1 and you want to keep it!

This is easily solved by adding a versioned dependency to setup.py:

- 'Markdown',

+ 'Markdown==2.0.1',

If you do that OpenShift will install the same Markdown version when rebuilding your application. Everything else will use the latest available versions.

Note: after the update it's recommended that you remove the .openshift/markers/force_clean_build file. This will speed up the push/build process and will not surprise you with unwanted changes.

Update only selected packages

Unless your application is really simple or you have tested the updates, I suspect that you want to update only selected packages. This can be done without rebuilding the whole virtualenv. Use versioned dependencies in setup.py:

- 'Markdown==2.0.1',

- 'django-countries',

+ 'Markdown>=2.1',

+ 'django-countries>=1.1.2',

No need for force_clean_build this time. Just

git commit && git push

At the time of writing my application was using Markdown-2.0.1 and django-countries-1.0.5. Then it updated to Markdown-2.1.1 and django-countries-1.1.2, which also happened to be the latest versions.

Note: this will not work without force_clean_build

- 'django-countries==1.0.5',

+ 'django-countries',
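Why the last change does nothing without a clean build can be seen with a simplified model of the check: a package is only touched when the installed version no longer satisfies the requirement. The sketch below is my own simplification, not the actual setuptools logic. It handles only plain dotted numeric versions with the == and >= operators, and the function names are made up.

```python
def parse_version(text):
    """Turn '2.0.1' into (2, 0, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def is_satisfied(installed_version, requirement):
    """Return True when the installed version already meets the requirement."""
    for operator in ("==", ">="):
        if operator in requirement:
            wanted = parse_version(requirement.split(operator, 1)[1])
            have = parse_version(installed_version)
            return have == wanted if operator == "==" else have >= wanted
    # Unversioned requirement: whatever is installed is good enough.
    return True


if __name__ == "__main__":
    print(is_satisfied("2.0.1", "Markdown>=2.1"))       # not satisfied, so it gets updated
    print(is_satisfied("1.0.5", "django-countries"))    # satisfied, so nothing happens
```

With 'Markdown>=2.1' the installed 2.0.1 fails the check and is replaced, while an unversioned 'django-countries' is already satisfied by 1.0.5 and stays as it is.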

Warning

OpenShift uses a local mirror of the Python Package Index. It seems to be updated every 24 hours or so. Keep this in mind if you want to update to a package that was just released. It will not work! See How to Deploy Python Hotfix on OpenShift if you wish to work around this limitation.


Spinning-up a Development Instance on OpenShift

Difio is hosted on OpenShift. During development I often need to spin-up another copy of Difio to use for testing and development. With OpenShift this is easy and fast. Here's how:

- Create another application on OpenShift. This will be your development instance.

rhc-create-app -a myappdevel -t python-2.6

- Find out the git URL for the production application:

$ rhc-user-info

Application Info
================
myapp
    Framework: python-2.6
    Creation: 2012-02-10T12:39:53-05:00
    UUID: 723f0331e17041e8b34228f87a6cf1f5
    Git URL: ssh://723f0331e17041e8b34228f87a6cf1f5@myapp-mydomain.rhcloud.com/~/git/myapp.git/
    Public URL: http://myapp-mydomain.rhcloud.com/

- Push the current code base from the production instance to devel instance:

cd myappdevel
git remote add production -m master ssh://723f0331e17041e8b34228f87a6cf1f5@myapp-mydomain.rhcloud.com/~/git/myapp.git/
git pull -s recursive -X theirs production master
git push

- Now your myappdevel is the same as your production instance. You will probably want to modify your database connection settings at this point and start adding new features.
