Learn Python & Selenium Automation in 8 weeks

A couple of months ago I conducted a practical, instructor-led training in Python and Selenium automation for manual testers. You can find the materials on GitHub.

The training consists of several basic modules and practical homework assignments. The modules explain

- The basic structure of a Python program and functions

- Commonly used data types

- If statements and (for) loops

- Classes and objects

- The Python unit testing framework and its assertions

- High-level introduction to Selenium with Python

- High-level introduction to the Page Objects design pattern

- Writing automated tests for real world scenarios without any help from the instructor.

Every module is intended to be taken in the course of 1 week and begins with links to preparatory materials and lots of reading. Then I help the students understand the basics and explain with more examples, often writing code as we go along. At the end there is the homework assignment for which I expect a solution presented by the end of the week so I can comment and code-review it.

All assignments which require the student to implement functionality, not tests, are paired with a test suite, which the student should use to validate their solution.

What worked well

Despite everything I've written below, I had 2 students (from a group of 8) who showed very good progress. One of them was the absolute star, actively participating in every class and doing almost all homework assignments on time, pretty much without errors. I think she'd had some previous training or experience though. She was in the USA and the training was done remotely via Google Hangouts.

The other student was in Sofia and the training was done in person. He is not on the same level as the US student but is the best from the Bulgarian team. IMO he lacks a little bit of motivation. He "cheated" a bit on some tasks by providing non-standard, easier solutions, and completed most of his assignments. After the first Selenium session he started creating small scripts to extract results from football sites or as helpers to be applied in the daily job. The interesting fact for me was that he created his programs as unittest.TestCase classes. I guess because this was the way he knew how to run them!?!

There were a few other students who had had some prior experience with programming but weren't very active in class, so I can't tell how their careers will progress. If they put some more effort into it I'm sure they can turn out to have decent programming skills.

What didn't work well

Starting from the beginning, most students failed to read the preparatory materials. Some of the students did read a little bit, others didn't read at all. When they came prepared I had the feeling the sessions progressed more smoothly. I also had students joining late in the process, who for the most part didn't participate in the training at all. I'd like to avoid that in the future if possible.

Sometimes students complained about lack of example code, although Dive into Python includes tons of examples. I've resorted to sending them the example.py files which I produced during class.

The practical part of the training was mostly myself programming on a big TV screen in front of everyone else. Several times one of the students took my place. There wasn't much active participation on their part and unfortunately they didn't want to bring personal laptops to the training (or maybe weren't allowed)! We did have a company-provided laptop though.

When practicing functions and arithmetic operations the students struggled with basic maths like breaking down a number into its digits or vice versa, working with Fibonacci sequences and the like. In some cases they cheated by converting to/from strings and then iterating over them. Some also hard-coded the first few numbers of the Fibonacci sequence and returned them directly. Maybe an on-the-spot explanation of the underlying maths would have been helpful but honestly I was surprised by this. Somebody please explain or give me some advice here!
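For reference, here is a minimal sketch of the purely arithmetic approach, without converting to strings or hard-coding values. The function names are made up for illustration and are not taken from the training materials.

```python
def to_digits(number):
    """Break a non-negative integer into its digits using only arithmetic."""
    if number == 0:
        return [0]
    digits = []
    while number > 0:
        # divmod() gives the quotient and the remainder in one step,
        # the remainder of division by 10 is the last digit
        number, last = divmod(number, 10)
        digits.append(last)
    digits.reverse()  # digits were collected right-to-left
    return digits


def from_digits(digits):
    """Combine a list of digits back into a single integer."""
    number = 0
    for digit in digits:
        number = number * 10 + digit
    return number


def fibonacci(count):
    """Return the first `count` Fibonacci numbers, starting from 1, 1."""
    numbers = []
    current, following = 1, 1
    for _ in range(count):
        numbers.append(current)
        current, following = following, current + following
    return numbers
```

The key idea in all three functions is the same: keep a running value and update it on every pass through the loop.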

I am completely missing examples of the datetime and timedelta classes, which turned out to be very handy in the practical Selenium tasks, and we had to go over them on the fly.
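A short sketch of the kind of example that would fit here. The date is arbitrary and chosen only so that the results are repeatable; the variable names are illustrative.

```python
from datetime import datetime, timedelta

# A fixed moment keeps the example repeatable;
# in a real test this would typically be datetime.now()
start = datetime(2017, 4, 10, 9, 30)

# timedelta represents a duration and can be added to or
# subtracted from a datetime
one_week_later = start + timedelta(days=7)
ninety_minutes_earlier = start - timedelta(hours=1, minutes=30)

# subtracting two datetime objects produces a timedelta
elapsed = one_week_later - start

# strftime() formats a datetime as text, e.g. to type it into a date field
as_text = one_week_later.strftime("%Y-%m-%d")
```

In a Selenium test this is typically what you need to compute a date relative to today and enter it into a form.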

The OOP assignments went mostly undone, not to mention one of them had bonus tasks which are easily solved using recursion. I think we could skip some of the OOP practice (not sure how safe that is) because I really need classes only for constructing the tests and we don't do anything fancy there.

The Page Object design pattern is also OOP based and I think that went somewhat well, granted that we are only passing values around and performing some actions. I neither set constraints nor provided guidance on what the classes should look like and which methods go where. Maybe I should have made it easier.

Anyway, given that Page Objects is being replaced by Screenplay pattern, I think we can safely stick to the all-in-one functional based Selenium tests. Maybe utilize helper functions for repeated tasks (like login). Indeed this is what I was using last year with Rspec & Capybara!

What students didn't understand

Right until the end I had people who had trouble understanding function signatures, function instances and calling/executing a function. The same goes for returning a value from a function vs. printing the (same) value on screen or assigning it to a global variable (e.g. FIB_NUMBERS).

In the same category falls using method parameters vs. using global variables (which happened to have the same value), using the parameters as arguments to another function inside the body of the current function, using class attributes (e.g. self.name) to store and pass values around vs. local variables in methods vs. method parameters which have the same names.
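The difference between printing, storing into a global variable and returning can be shown side by side. This is only a sketch: FIB_NUMBERS is the global name mentioned above, while the three function names are invented for the comparison.

```python
FIB_NUMBERS = []  # global variable, visible to the whole module


def fib_print(count):
    """Prints the numbers on screen; the caller gets None back."""
    current, following = 1, 1
    for _ in range(count):
        print(current)
        current, following = following, current + following


def fib_global(count):
    """Stores the numbers in the global list; the caller still gets None."""
    current, following = 1, 1
    for _ in range(count):
        FIB_NUMBERS.append(current)
        current, following = following, current + following


def fib_return(count):
    """Builds a local list and hands it back to the caller."""
    numbers = []  # local variable, exists only during this call
    current, following = 1, 1
    for _ in range(count):
        numbers.append(current)
        current, following = following, current + following
    return numbers


printed = fib_print(3)    # the values appear on screen only
stored = fib_global(3)    # FIB_NUMBERS is filled as a side effect
returned = fib_return(3)  # the value comes back and can be used further
```

Only the last variant gives the caller something to work with, which is also what makes it easy to test with an assertion.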

I think there was some confusion about lists, dictionaries and tuples but we did practice mostly with list structures so I don't have enough information.

I have the impression that object oriented programming (classes and instances, we didn't go into inheritance) is generally confusing to beginners with zero programming experience. The classical way to explain it is by using some abstractions like animal -> dog -> a particular dog breed -> a particular pet. OOP was explained to me in a similar way back in school so these kinds of abstractions are very natural for me. I have no idea if my explanation sucks or students are having a hard time wrapping their heads around the abstraction. I'd love to hear some feedback from other instructors on this one.

I think there is some confusion between a class (a definition of behavior) and an instance/object of this class (something which exists in memory). This may also explain the difficulty remembering or figuring out what self points to and why we need to use it inside method bodies.
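A tiny sketch may help to make the distinction concrete. The Dog class and the pet names are hypothetical, following the animal -> dog abstraction mentioned earlier.

```python
class Dog:
    """The class is only a definition: what every dog knows and can do."""

    def __init__(self, name):
        # `name` is a parameter and disappears when __init__ returns;
        # `self.name` is stored on the particular instance being created
        self.name = name

    def speak(self):
        # `self` is whichever instance the method was called on
        return "%s says woof" % self.name


# two separate objects in memory, created from the same class
rex = Dog("Rex")
lassie = Dog("Lassie")

# calling a method on an instance passes that instance as `self`,
# so these two calls are equivalent
same_thing = Dog.speak(rex) == rex.speak()
```

Writing the call as Dog.speak(rex) is not something you would do in practice, but it shows where the value of self comes from.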

For unittest.TestCase we didn't do lots of practice, which is my fault. The homework assignments ask the students to go back to solutions of previous modules and implement more tests for them. Next time I should provide a module (possibly with non-obvious bugs) and ask them to write a comprehensive test suite for it.

Because of the missing practice there was some confusion/misunderstanding about the setUpClass/tearDownClass and the setUp/tearDown methods. Also add to the mix that the former are decorated with @classmethod while the latter are not. "To be safe" students always defined both as class methods! I have since corrected the training materials but we didn't have good examples (nor did we practice) explaining the difference between setUpClass (executed once, aka before suite) and setUp (possibly executed multiple times, aka before each test method).

On the Selenium side I think it is mostly practice which students lack, not understanding. The entire Selenium framework (any web test framework for that matter) boils down to

- Load a page

- Find element(s)

- Click or hover (that one was tricky) element

- Get element's attribute value or text

- Wait for the proper page to load (or worst case AJAX calls)

IMO finding the correct element on the page is on par with waiting (which also relies on locating elements) and took 80% of the time we spent working with Selenium.

Thanks for reading and don't forget to comment and give me your feedback!

Image source: https://www.udemy.com/selenium-webdriver-with-python/


Quality Assurance According 2 Einstein

Quality Assurance According 2 Einstein is a talk which introduces several different ideas about how we need to think and approach software testing. It touches on subjects like mutation testing, pairwise testing, automatic test execution, smart test non-execution, using tests as monitoring tools and team/process organization.

Because testing is more thinking than writing I have chosen a different format for this presentation. It contains only slides with famous quotes from one of the greatest thinkers of our time - Albert Einstein!

This blog post includes the accompanying links and references only! It is my first iteration on the topic so expect it to be unclear and incomplete, use your imagination! I will continue working and presenting on the same topic in the next few months so you can expect updates from time to time. In the meantime I am happy to discuss with you down in the comments.

IMAGINATION IS MORE IMPORTANT THAN KNOWLEDGE.

- Hello World bug challenge

- Testing a Sudoku

- https://github.com/weldr/welder-web/pull/56

- https://github.com/weldr/welder-web/pull/59

THE FASTER YOU GO, THE SHORTER YOU ARE.

- Using Statistics to Predict Which Tests to Run

- The framework that knows its bugs

- Testing Red Hat Enterprise Linux the Microsoft way

- Automatic dependency testing with Strazar

- Automatic cargo update, test and pull request

IF THE FACTS DON'T FIT THE THEORY, CHANGE THE FACTS.

- Coverage is Not Strongly Correlated with Test Suite Effectiveness

- Code Coverage is a Strong Predictor of Test Suite Effectiveness

- Mutation testing vs. coverage, Pt. 1

- Mutation testing vs. coverage, Pt. 2

- There are 101 coverage metrics according to Cem Kaner. Which ones are you measuring and what conclusions are you making out of these metrics?

THE WHOLE OF SCIENCE IS NOTHING MORE THAN A REFINEMENT OF EVERYDAY THINKING.

- How to find 1000 bugs in 30 minutes

- How we found a million style and grammar errors in the English Wikipedia

- Simple Testing Can Prevent Most Critical Failures

- Need it robust, make it fragile

- btw it's me who asks the first question at the end :)

INSANITY - DOING THE SAME THING OVER AND OVER AND EXPECTING DIFFERENT RESULTS.

This principle can be applied to any team/process within the organization. The above link is a reference to a nice book which was recommended to me, but the gist of it is that we always need to analyze, ask questions and change if we want to achieve great results. A practical example of what is possible if you follow this principle is the talk Accelerate Automation Tests From 3 Hours to 3 Minutes.

THE ONLY REASON FOR TIME IS SO THAT EVERYTHING DOESN'T HAPPEN AT ONCE.

The topic here is "using tests as a monitoring tool". This is something I started a while back, helping a prominent startup with their production testing, but my involvement ended very soon after the framework was deployed live so I don't have lots of insight.

In the first few days this technique identified some unexpected behaviors, for example a 3rd party service which was updating very often. Once it was even broken for a few hours - something nobody had information about.

Since then I've heard about 2 more companies using similar techniques to continuously validate that production software continues to work without having a physical person to verify it. In the event of failures there are alerts which are dealt with accordingly.

NO PROBLEM CAN BE SOLVED FROM THE SAME LEVEL OF CONSCIOUSNESS THAT CREATED IT.

That much must be obvious to us quality engineers. What about the future however?

I don't have anything more concrete here. Just looking towards what is coming next!

DO NOT WORRY ABOUT YOUR DIFFICULTIES IN MATHEMATICS. I CAN ASSURE YOU MINE ARE STILL GREATER.

Thanks for reading and happy testing!


Automatic cargo update & pull requests for Rust projects

If you follow my blog you are aware that I use automated tools to do some boring tasks instead of me. For example they can detect when new versions of dependencies I'm using are available and then schedule testing against them on the fly.

One of these tools is Strazar which I use heavily for my Django based packages. Example: django-s3-cache build job.

Recently I've made a slightly different proof-of-concept for a Rust project. Because rustc and various dependencies (called crates) are updated very often we didn't want to expand the test matrix like Strazar does. Instead we wanted to always build & test against the latest crates versions and if that passes create a pull request for the update (in Cargo.lock). All of this unattended of course!

To start, create a cron job in Travis CI which will execute once per day and call your test script. The script looks like this:

    #!/bin/bash

    if [ -z "$GITHUB_TOKEN" ]; then
        echo "GITHUB_TOKEN is not defined"
        exit 1
    fi

    BRANCH_NAME="automated_cargo_update"

    git checkout -b $BRANCH_NAME
    cargo update && cargo test

    DIFF=`git diff`

    # NOTE: we don't really check the result from testing here. Only that
    # something has been changed, e.g. Cargo.lock
    if [ -n "$DIFF" ]; then
        # configure git authorship
        git config --global user.email "atodorov@MrSenko.com"
        git config --global user.name "Alexander Todorov"

        # add a remote with read/write permissions!
        # use token authentication instead of password
        git remote add authenticated https://atodorov:$GITHUB_TOKEN@github.com/atodorov/bdcs-api-rs.git

        # commit the changes to Cargo.lock
        git commit -a -m "Auto-update cargo crates"

        # push the changes so that PR API has something to compare against
        git push authenticated $BRANCH_NAME

        # finally create the PR
        curl -X POST -H "Content-Type: application/json" -H "Authorization: token $GITHUB_TOKEN" \
             --data '{"title":"Auto-update cargo crates","head":"automated_cargo_update","base":"master", "body":"@atodorov review"}' \
             https://api.github.com/repos/atodorov/bdcs-api-rs/pulls
    fi

A few notes here:

- You need to define a secret GITHUB_TOKEN variable for authentication;

- The script doesn't force push, but in practice that may be useful (e.g. updating the PR);

- The script doesn't have any error handling;

- If PR is still open GitHub will tell us about it but we ignore the result here;

- DON'T paste this into your Makefile because the GITHUB_TOKEN variable will be expanded into the logs and your secrets go away! Always call the script from your Makefile to avoid revealing secrets.

- I am using topic branches because this is a POC. Switch to master and maybe move all URLs as variables at the top of the script!

- I run this cron build against a fork of the project because the team doesn't feel comfortable having automated commits/pushes. I also create the pull requests against my own fork. You will have to adjust the targets if you want your PR to go to the original repository.

Here is the PR which was created by this script: https://github.com/atodorov/bdcs-api-rs/pull/5

Notice that it includes previous commits b/c they have not been merged to the master branch!

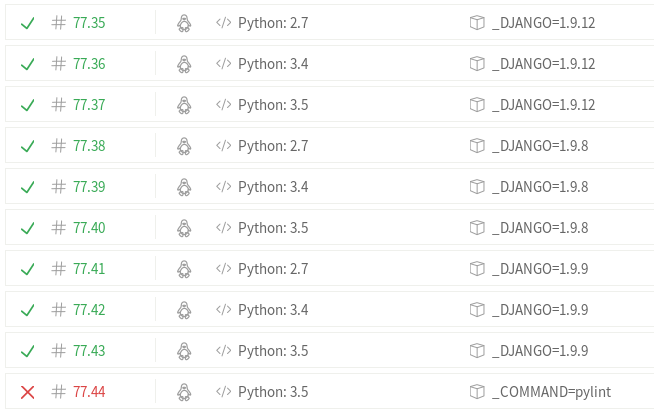

Here's the test job (#77) which generated this PR: https://travis-ci.org/atodorov/bdcs-api-rs/builds/219274916

Here's a test job (#87) which bails out miserably because the PR already exists: https://travis-ci.org/atodorov/bdcs-api-rs/builds/220954269

This post is part of my Quality Assurance According to Einstein series - a detailed description of useful techniques I will be presenting very soon.

Thanks for reading and happy testing!


Testing Red Hat Enterprise Linux the Microsoft way

Pairwise (a.k.a. all-pairs) testing is an effective test case generation technique that is based on the observation that most faults are caused by interactions of at most two factors! Pairwise-generated test suites cover all combinations of two and are therefore much smaller than exhaustive ones, yet still very effective in finding defects. This technique has been pioneered by Microsoft in testing their products. For an example please see their GitHub repo!

I heard about pairwise testing from Niels Sander Christensen last year at QA Challenge Accepted 2.0 and I immediately knew where it would fit into my test matrix.

This article describes an experiment made during the Red Hat Enterprise Linux 6.9 installation testing campaign. The experiment covers generating a test plan (referred to as the Pairwise Test Plan) based on the pairwise test strategy and some heuristics. The goal was to reduce the number of test cases which needed to be executed and still maintain good test coverage (in terms of breadth of testing) and also maintain low risk for the product.

Product background

For RHEL 6.9 there are 9 different product variants, each comprising a particular package set and CPU architecture:

- Server i386

- Server x86_64

- Server ppc64 (IBM Power)

- Server s390x (IBM mainframe)

- Workstation i386

- Workstation x86_64

- Client i386

- Client x86_64

- ComputeNode x86_64

Traditional testing activities are classified as Tier #1, Tier #2 and Tier #3

- Tier #1 - basic form of installation testing. Executed for all arch/variants on all builds, including nightly builds. This group includes the most common installation methods and configurations. If Tier #1 fails the product is considered unfit for customers and for further testing, which blocks the release!

- Tier #2 and #3 - includes additional installation configurations and/or functionality which is deemed important. These are still fairly common scenarios but not the most frequently used ones. If some of the Tier#2 and #3 test cases fail they will not block the release.

This experiment focuses only on Tier #2 and #3 test cases because they generate the largest test matrix! This experiment is related only to installation testing of RHEL. This broadly means "Can the customer install RHEL via the Anaconda installer and boot into the installed system". I do not test functionality of the system after reboot!

I have theorized that from the point of view of installation testing RHEL is mostly a platform independent product!

Individual product variants rarely exhibit differences in their functional behavior because they are compiled from the same code base! If a feature is present it should work the same on all variants. The main differences between variants are:

- What software has been packaged as part of the variant (e.g. base package set and add-on repos);

- Whether or not a particular feature is officially supported, e.g. iBFT on Client variants. Support is usually provided via including the respective packages in the variant package set and declaring SLA for it.

These differences may lead to problems with resolving dependencies and missing packages but historically haven't shown significant tendency to cause functional failures e.g. using NFS as installation source working on Server but not on Client.

The main component being tested, Anaconda - the installer, is also mostly platform independent. In a previous experiment I had collected code coverage data from Anaconda while performing installation with the same kickstart (or same manual options) on various architectures. The coverage report supports the claim that Anaconda is platform independent! See Anaconda & coverage.py - Pt.3 - coverage-diff, section Kickstart vs. Kickstart!

Testing approach

The traditional pairwise approach focuses on features whose functionality is controlled via parameters. For example: RAID level, encryption cipher, etc. I have taken this definition one level up and applied it to the entire product! Now functionality is also controlled by variant and CPU architecture! This allows me to reduce the number of total test cases in the test matrix but still execute all of them at least once!

The initial implementation used a simple script, built with the Ruby pairwise gem, that:

- Copies verbatim all test cases which are applicable for a single product variant, for example s390x Server or ppc64 Server! There's nothing we can do to reduce these from combinatorial point of view!

- Then we have the group of test cases with input parameters. For example:

      storage / iBFT / No authentication / Network init script
      storage / iBFT / CHAP authentication / Network Manager
      storage / iBFT / Reverse CHAP authentication / Network Manager

  In this example the test is storage / iBFT and the parameters are

  - Authentication type

    - None

    - CHAP

    - Reverse CHAP

  - Network management type

    - SysV init

    - NetworkManager

  For test cases in this group I also consider the CPU architecture and OS variant as part of the input parameters and combine them using pairwise. Usually this results in around 50% reduction of test efforts compared to testing against all product variants!

- Last we have the group of test cases which don't depend on any input parameters, for example partitioning / swap on LVM. They are grouped together (wrt their applicable variants) and each test case is executed only once against a randomly chosen product variant! This is my own heuristic based on the fact that the product is platform independent!

  NOTE: You may think that for these test cases the product variant is their input parameter. If we consider this to be the case then we'll not get any reduction because of how pairwise generation works (the 2 parameters with the largest number of possible values determine the maximum size of the test matrix). In this case the 9 product variants is the largest set of values!
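The random-variant heuristic for the last group can be sketched in a few lines of Python. This is an illustration only, not the Ruby script used in the experiment; the `pick_variant` helper, the `test_cases` mapping and the seed are assumptions made for the sketch.

```python
import random

# the 9 product variants listed in the product background section
VARIANTS = [
    "Server i386", "Server x86_64", "Server ppc64", "Server s390x",
    "Workstation i386", "Workstation x86_64",
    "Client i386", "Client x86_64", "ComputeNode x86_64",
]


def pick_variant(applicable_variants, rng=random):
    """Choose a single variant at random for a test case without parameters."""
    return rng.choice(applicable_variants)


# hypothetical mapping: test case name -> variants it is applicable to
test_cases = {"partitioning / swap on LVM": VARIANTS}

# a fixed seed is used only to make the sketch repeatable
rng = random.Random(2017)
plan = {name: pick_variant(variants, rng)
        for name, variants in test_cases.items()}
```

Each test case ends up in the plan exactly once, paired with one of the variants it is applicable to.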

For this experiment pairwise_spec.rb only produced the list of test scenarios (test cases) to be executed! It doesn't schedule test execution and it doesn't update the test case management system with actual results. It just tells you what to do! Obviously this script will need to integrate with other systems and processes as defined by the organization!

Example results:

RHEL 6.9 Tier #2 and #3 testing

Test case w/o parameters can't be reduced via pairwise

x86_64 Server - partitioning / swap on LVM

x86_64 Workstation - partitioning / swap on LVM

x86_64 Client - partitioning / swap on LVM

x86_64 ComputeNode - partitioning / swap on LVM

i386 Server - partitioning / swap on LVM

i386 Workstation - partitioning / swap on LVM

i386 Client - partitioning / swap on LVM

ppc64 Server - partitioning / swap on LVM

s390x Server - partitioning / swap on LVM

Test case(s) with parameters can be reduced by pairwise

x86_64 Server - rescue mode / LVM / plain

x86_64 ComputeNode - rescue mode / RAID / encrypted

x86_64 Client - rescue mode / RAID / plain

x86_64 Workstation - rescue mode / LVM / encrypted

x86_64 Server - rescue mode / RAID / encrypted

x86_64 Workstation - rescue mode / RAID / plain

x86_64 Client - rescue mode / LVM / encrypted

x86_64 ComputeNode - rescue mode / LVM / plain

i386 Server - rescue mode / LVM / plain

i386 Client - rescue mode / RAID / encrypted

i386 Workstation - rescue mode / RAID / plain

i386 Workstation - rescue mode / LVM / encrypted

i386 Server - rescue mode / RAID / encrypted

i386 Workstation - rescue mode / RAID / encrypted

i386 Client - rescue mode / LVM / plain

ppc64 Server - rescue mode / LVM / plain

s390x Server - rescue mode / RAID / encrypted

s390x Server - rescue mode / RAID / plain

s390x Server - rescue mode / LVM / encrypted

ppc64 Server - rescue mode / RAID / encrypted

Finished in 0.00602 seconds (files took 0.10734 seconds to load)

29 examples, 0 failures

In this example there are 9 (variants) * 2 (partitioning type) * 2 (encryption type) == 36 total combinations! As you can see pairwise reduced them to 20! Also notice that if you don't take CPU arch and variant into account you are left with 2 (partitioning type) * 2 (encryption type) == 4 combinations for each product variant and they can't be reduced on their own!
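To get a feeling for how the reduction works, here is a naive greedy all-pairs generator in Python. It is a sketch under a few assumptions: it is not the algorithm used by the Ruby pairwise gem, it treats the 9 product variants as a single parameter, and the function names are invented. Its output may therefore differ in size and content from the results shown above.

```python
from itertools import combinations, product

PARAMETERS = {
    "variant": [
        "Server i386", "Server x86_64", "Server ppc64", "Server s390x",
        "Workstation i386", "Workstation x86_64",
        "Client i386", "Client x86_64", "ComputeNode x86_64",
    ],
    "partitioning": ["LVM", "RAID"],
    "encryption": ["plain", "encrypted"],
}


def pairs_of(row):
    """All pairs of (position, value) which a single combination covers."""
    return set(combinations(enumerate(row), 2))


def all_pairs(parameters):
    """Greedily select rows until every pair of values is covered."""
    rows = list(product(*parameters.values()))

    uncovered = set()
    for row in rows:
        uncovered |= pairs_of(row)

    selected = []
    while uncovered:
        # take the row which covers the most pairs not seen so far
        best = max(rows, key=lambda row: len(pairs_of(row) & uncovered))
        selected.append(best)
        uncovered -= pairs_of(best)
    return selected


full_matrix = list(product(*PARAMETERS.values()))
plan = all_pairs(PARAMETERS)

print(len(full_matrix), "combinations in the full matrix")
print(len(plan), "combinations in the pairwise plan")
```

Every pair of values (variant and partitioning, variant and encryption, partitioning and encryption) still appears in at least one selected row, which is exactly the guarantee pairwise testing gives you.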

Acceptance criteria

I evaluated all bugs which were found by executing the test cases from the pairwise test plan and compared them to the list of all bugs found by the team. This tells me how good my pairwise test plan was compared to the regular one, "good" meaning:

- How many bugs would I find if I don't execute the full test matrix

- How many critical bugs would I miss if I don't execute the full test matrix

Results:

- Pairwise found 14 new bugs;

- 23 bugs were first found by regular test plan

  - some by test cases not included in this experiment;

  - pairwise totally missed 4 bugs!

Pairwise test plan missed 3 critical regressions due to:

- Poor planning of pairwise test activity. There was a regression in one of the latest builds and that particular test was simply not executed!

- Human factor aka me not being careful enough and not following the process diligently. I waived a test due to infrastructure issues while there was a bug which stayed undiscovered! I should have tried harder to retest this scenario after fixing my infrastructure!

- An architecture and networking specific regression which wasn't tested on multiple levels and is a very narrow corner case. Can be mitigated with more testing upstream, more automation and better understanding of the hidden test requirements (e.g. IPv4 vs IPv6), for all of which pairwise can help (analysis and more available resources).

All of the missed regressions could have been missed by regular test plan as well, however the risk of missing them in pairwise is higher b/c of the reduced test matrix and the fact that you may not execute exactly the same test scenario for quite a long time. OTOH the risk can be mitigated with more automation b/c we now have more free resources.

IMO pairwise test plan did a good job and didn't introduce "dramatic" changes in risk level for the product!

Summary

- 65% reduction in test matrix;

- Only 1/3rd of team engineers needed;

  - keep arch experts around though;

- 2/3rd of team engineers could be free for automation and to create even more test cases;

- Test run execution completion rate is comparable to regular test plan

  - average execution completion for pairwise test plan was 76%!

  - average execution completion for regular test plan was 85%!

- New bugs found:

  - 30% by Pairwise Test Plan

  - 30% by Tier #1 test cases (good job here)

  - 30% by exploratory testing

- Risk of missing regressions or critical bugs exists (I did miss 3) but can be mitigated;

- Clearly exposes the need of constant review, analysis and improvement of existing test cases;

- Exposes hidden parameters in test scenarios and some hidden relationships;

- Patterns and other optimization techniques observed

Patterns observed:

- Many new test case combinations found, which I had to describe in Nitrate; The longer you use pairwise the fewer new combinations (aka undocumented scenarios) are discovered. The first 3 test runs discovered most of the missing combinations!

- Found quite a few test cases with hidden parameters, for example swap / recommended which calculates the recommended size of swap partition based on 4 different ranges in which the actual RAM size fits! These ranges became parameters to the test case;

- Can combine (2, 3, etc) independent test cases together and consider them as parameters so we can apply pairwise against the combination. This will create new scenarios, broaden the test matrix but not result in significant increase in execution time. I didn't try this because it was not the focus of the experiment;

- Found some redundant/duplicate test cases - test plans need to be constantly analyzed and maintained you know;

- Automated scheduling and tools integration is critical. This needs to be working perfectly in order to capitalize on the newly freed resources;

- Testing on s390x was sub-optimal (mostly my own inexperience with the platform) so for specialized environments we still want to keep the experts around;

- 1 engineer (me) was able to largely keep up with schedule with the rest of the team!

  - experiment was conducted during the course of several months

  - I have tried to adhere to all milestones and deadlines and mostly succeeded

I have also discovered ideas for new test execution optimization techniques which need to be evaluated and measured further:

- Use a common set-up step for multiple test cases across variants, e.g.

  - install a RAID system then;

  - perform 3 rescue mode tests (same test case, different variants)

- Pipeline test cases so that the result of one case is the setup for the next, e.g.

  - install a RAID system and test for correctness of the installation;

  - perform rescue mode test;

  - damage one of the RAID partitions while still in rescue mode;

  - test installation with damaged disks - it should not crash!

These techniques can be used stand-alone or in combination with other optimization techniques and tooling available to the team. They are specific to my particular kind of testing so beware of your surroundings before you try them out!

Thanks for reading and happy testing!

Cover image copyright: cio-today.com


Mutation Testing vs. Coverage, Pt. 2

In a previous post I showed an example of real world bugs which we were not able to detect despite having 100% mutation and test coverage. I am going to show you another example here.

This example comes from one of my training courses. The task is to write a class which represents a bank account with methods to deposit, withdraw and transfer money. The solution looks like this:

    class BankAccount(object):
        def __init__(self, name, balance):
            self.name = name
            self._balance = balance
            self._history = []

        def deposit(self, amount):
            if amount <= 0:
                raise ValueError('Deposit amount must be positive!')

            self._balance += amount

        def withdraw(self, amount):
            if amount <= 0:
                raise ValueError('Withdraw amount must be positive!')

            if amount <= self._balance:
                self._balance -= amount
                return True
            else:
                self._history.append("Withdraw for %d failed" % amount)

            return False

        def transfer_to(self, other_account, how_much):
            self.withdraw(how_much)
            other_account.deposit(how_much)

Notice that if withdrawal is not possible then the function returns False. The tests look like this:

    import unittest
    from solution import BankAccount


    class TestBankAccount(unittest.TestCase):
        def setUp(self):
            self.account = BankAccount("Rado", 0)

        def test_deposit_positive_amount(self):
            self.account.deposit(1)
            self.assertEqual(self.account._balance, 1)

        def test_deposit_negative_amount(self):
            with self.assertRaises(ValueError):
                self.account.deposit(-100)

        def test_deposit_zero_amount(self):
            with self.assertRaises(ValueError):
                self.account.deposit(0)

        def test_withdraw_positive_amount(self):
            self.account.deposit(100)
            result = self.account.withdraw(1)
            self.assertTrue(result)
            self.assertEqual(self.account._balance, 99)

        def test_withdraw_maximum_amount(self):
            self.account.deposit(100)
            result = self.account.withdraw(100)
            self.assertTrue(result)
            self.assertEqual(self.account._balance, 0)

        def test_withdraw_from_empty_account(self):
            result = self.account.withdraw(50)
            self.assertIsNotNone(result)
            self.assertFalse(result)
            assert "Withdraw for 50 failed" in self.account._history

        def test_withdraw_non_positive_amount(self):
            with self.assertRaises(ValueError):
                self.account.withdraw(0)

            with self.assertRaises(ValueError):
                self.account.withdraw(-1)

        def test_transfer_negative_amount(self):
            account_1 = BankAccount('For testing', 100)
            account_2 = BankAccount('In dollars', 10)

            with self.assertRaises(ValueError):
                account_1.transfer_to(account_2, -50)

            self.assertEqual(account_1._balance, 100)
            self.assertEqual(account_2._balance, 10)

        def test_transfer_positive_amount(self):
            account_1 = BankAccount('For testing', 100)
            account_2 = BankAccount('In dollars', 10)

            account_1.transfer_to(account_2, 50)

            self.assertEqual(account_1._balance, 50)
            self.assertEqual(account_2._balance, 60)


    if __name__ == '__main__':
        unittest.main()

Try the following commands to verify that you have 100% coverage:

coverage run test.py
coverage report
cosmic-ray run --test-runner nose --baseline 10 example.json bank.py -- test.py
cosmic-ray report example.json

Can you tell where the bug is? How about I try to transfer more money than is available from one account to the other:

def test_transfer_more_than_available_balance(self):
    account_1 = BankAccount('For testing', 100)
    account_2 = BankAccount('In dollars', 10)

    # transfer more than available
    account_1.transfer_to(account_2, 150)

    self.assertEqual(account_1._balance, 100)
    self.assertEqual(account_2._balance, 10)

If you execute the above test it will fail:

FAIL: test_transfer_more_than_available_balance (__main__.TestBankAccount)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "./test.py", line 79, in test_transfer_more_than_available_balance
    self.assertEqual(account_2._balance, 10)
AssertionError: 160 != 10
----------------------------------------------------------------------

The problem is that when self.withdraw(how_much) fails transfer_to() ignores the result and tries to deposit the money into the other account! A better implementation would be:

def transfer_to(self, other_account, how_much):
    if self.withdraw(how_much):
        other_account.deposit(how_much)
    else:
        raise Exception('Transfer failed!')
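With this implementation the overdraft test from earlier has to change as well, because transfer_to() now raises instead of returning silently. Here is a minimal sketch of how that could look, using a trimmed-down copy of the class. The constructor signature is inferred from the tests and the name attribute is an assumption, since neither is shown in full here.

```python
import unittest


class BankAccount:
    """Trimmed-down copy of the class above, enough for the transfer test."""

    def __init__(self, name, balance):
        self.name = name  # assumed attribute, inferred from the tests
        self._balance = balance
        self._history = []

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError('Deposit amount must be positive!')
        self._balance += amount

    def withdraw(self, amount):
        if amount <= 0:
            raise ValueError('Withdraw amount must be positive!')
        if amount <= self._balance:
            self._balance -= amount
            return True
        self._history.append("Withdraw for %d failed" % amount)
        return False

    def transfer_to(self, other_account, how_much):
        # Deposit only when the withdrawal actually succeeded.
        if self.withdraw(how_much):
            other_account.deposit(how_much)
        else:
            raise Exception('Transfer failed!')


class TestTransfer(unittest.TestCase):
    def test_transfer_more_than_available_balance(self):
        account_1 = BankAccount('For testing', 100)
        account_2 = BankAccount('In dollars', 10)

        # The failed transfer is now reported with an exception ...
        with self.assertRaises(Exception):
            account_1.transfer_to(account_2, 150)

        # ... and neither balance has changed.
        self.assertEqual(account_1._balance, 100)
        self.assertEqual(account_2._balance, 10)
```

Saved to a file, this can be run with python -m unittest. A dedicated exception class would be easier to assert on than the bare Exception, but the sketch keeps the article's choice.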

In my earlier article the bugs were caused by the external environment, and tools/metrics like code coverage and mutation score are not affected by it. In fact the jinja-ab example falls into the category of data coverage testing.

The current example, on the other hand, ignores the return value of the withdraw() function and that's why it fails when we add the appropriate test.

NOTE: some mutation testing tools support a mutation which removes or modifies the return value. Cosmic Ray doesn't support this at the moment (I should add it). Even if it did, that would not help us find the bug because we would kill the mutation using the test_withdraw...() test methods, which already assert on the return value!
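To make the note more concrete, here is a hand-written sketch of what a return value mutation of withdraw() could look like. This is not output from Cosmic Ray or any other tool; the Account and MutantAccount classes and the helper function are hypothetical stand-ins for BankAccount and its tests.

```python
class Account:
    """Hypothetical, minimal stand-in for BankAccount."""

    def __init__(self, balance):
        self._balance = balance

    def withdraw(self, amount):
        if amount <= self._balance:
            self._balance -= amount
            return True
        return False


class MutantAccount(Account):
    """Hand-written mutant: the success path returns False instead of True."""

    def withdraw(self, amount):
        if amount <= self._balance:
            self._balance -= amount
            return False  # mutated return value
        return False


def withdraw_test_passes(account_class):
    """Same checks as test_withdraw_positive_amount, as a plain function."""
    account = account_class(100)
    result = account.withdraw(1)
    return result is True and account._balance == 99


print(withdraw_test_passes(Account))        # True: the original code passes
print(withdraw_test_passes(MutantAccount))  # False: the mutant is killed
```

The mutant is killed by the withdraw test alone, so it never gets the chance to point at transfer_to().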

Thanks for reading and happy testing!


Circo loco 2017

Due to popular demand I'm sharing my plans for the upcoming conference season. Here is a list of events I plan to visit and speak at (hopefully). The list will be updated throughout the year so please subscribe to the comments section to receive a notification when that happens! I'm open to meeting new people so ping me for a beer if you are attending some of these events!

02 February - Hack Belgium Pre-Event Workshops, Brussels

Note: added on Jan 12th

Last year I had an amazing time visiting an Elixir & Erlang workshop so I'm about to repeat the experience. I will be visiting a workshop organized by HackBelgium and keep you posted with the results.

03 February - Git Merge, Brussels

Note: added on Jan 12th

Git Merge is organized by GitHub and will be held in Brussels this year. I will be visiting only the conference track and hopefully giving a lightning talk titled Automatic upstream dependency testing with GitHub API! That and the afterparty of course!

04-05 February - FOSDEM, Brussels

FOSDEM is the largest free and open source gathering in Europe, which I have been visiting since 2009 (IIRC). You can check out some of my reports about FOSDEM 2014, Day 1, FOSDEM 2014, Day 2 and FOSDEM 2016.

I will present my Mutants, tests and zombies talk at the Testing & Automation devroom on Sunday.

UPDATE: Video recording is available here

I will be in Brussels between February 1st and 5th to explore the local start-up scene and get to meet with the Python community so ping me if you are around.

18 March - QA Challenge Accepted 3.0, Sofia

QA: Challenge Accepted is a specialized QA conference in Sofia and most of the sessions are in Bulgarian. I visited last year and it was great. I even proposed a challenge of my own.

The CFP is still open but I am quite confident that my talk Testing Red Hat Enterprise Linux the Microsoft way will be approved. It will describe a large scale experiment with pairwise testing, which btw I learned about at QA: Challenge Accepted 2.0 :).

UPDATE: I will be also on the jury for QA of the year award.

UPDATE: I did a lightning talk about my test case management system (in Bulgarian).

07-08 April - Bulgaria Web Summit, Sofia

I have been a moderator at Bulgaria Web Summit for several years and this year is no exception. This is one of the strongest events held in Sofia and is in English. Last year over 60% of the attendees were from abroad so you are welcome!

I'm not going to speak at this event but will record as much of it as possible. UPDATE: Check out the recordings on my YouTube channel!

10-12 May - Romanian Testing Conference, Cluj-Napoca

RTC'17 is a new event I found in neighboring Romania. The topic this year is Thriving and remaining relevant in Quality Assurance. My talk, if it gets accepted, is titled Quality Assistance in the Brave New World and in it I'll share some experiences and visions for the QA profession.

As it turned out I know a few people living in Cluj so I'll be arriving one day earlier on May 9th to meet the locals.

13-14 May - OSCAL, Tirana

Open Source Conference Albania is the largest OSS event in the country. I'm on a roll here to explore the IT scene in the Balkans. Due to travel constraints my availability will be limited to the conference venue only but I've booked a hotel across the street :).

I will be meeting a few friends in Tirana and hearing about the progress of a psychological experiment we devised with Jona Azizaj and Suela Palushi.

Talk-wise I'm hoping to get the chance to introduce mutation testing and even host a workshop on the topic.

UPDATE: Here is the video recording from OSCAL. The quality is very poor though.

20-21 May - DEVit, Thessaloniki

This is the 3rd edition of DEVit, the 360° web development conference of Northern Greece. I've been a regular visitor since the beginning and this year I've proposed a session on mutation testing. Because the Thessaloniki community seems more interested in Ruby and Rails my goal is to share more examples from my Ruby work and compare how that is different from the Python world. There is once again an opportunity for a workshop.

So far I've been the only Bulgarian to visit DEVit and am also locally known as "The guy who Kosta & Kosta met in Sofia"! Check out my impressions from DEVit'15 and DEVit'16 if you are still wondering whether to attend or not! I strongly recommend it!

UPDATE: I am still the only Bulgarian visiting DEVit!

22 May - Dev.bg, Sofia

I've hosted a session titled Quality Assurance According to Einstein for the local QA community in Sofia. Video (in Bulgarian) and links are available!

UPDATE: added post-mortem.

01-02 June - Shift Developer Conference, Split

Shift appears to be a very big event in Croatia. My attendance is still unconfirmed due to lots of traveling before that and the general trouble of traveling efficiently in the Balkans. However I have a proposal submitted and waiting for approval. This time it is my Mutation Testing in Patterns, which is a journal of different code patterns found during mutation testing. I have not yet presented it to the public but will blog about it sometime soon so stay tuned.

UPDATE: this one is a no-go!

03-04 June - TuxCon, Plovdiv

TuxCon is held in Plovdiv around the beginning of July. I'm usually presenting some lightning talks there and use the opportunity to meet with friends and peers outside Sofia and catch up with news from the local community. The conference is in Bulgarian with the exception of the occasional foreign speaker. If you understand Bulgarian I recommend the story of Puldin - a Bulgarian computer from the 80s.

UPDATE: I will be opening the conference with QA According to Einstein

17-18 June - How Camp, Varna

How Camp is the little brother of Bulgaria Web Summit and is always held outside of Sofia. This year it will be in Varna, Bulgaria. I will be there of course and depending on the crowd may talk about some software testing patterns.

UPDATE: it looks like this is also a no-go but stay tuned for the upcoming Macedonia Web Summit and Albania Web Summit where yours truly will probably be a moderator!

25-29 September - SuSE CON, Prague

Yeah, this is the conference organized by SuSE. I'm definitely not afraid to visit the competition. I even hope I could teach them something. More details are still TBA because this event is very close to/overlapping with the next two.

28-29 September - SEETEST, Sofia

South East European Software Testing Conference is, AFAIK, an international event which is hosted in a major city on the Balkans. Last year it was held in Bucharest with previous years held in Sofia.

In my view this is the most formal event, especially related to software testing, I'm about to visit. Nevertheless I like to hear about new ideas and some research in the field of QA so this is a good opportunity.

UPDATE: I have submitted a new talk titled If the facts don't fit the theory, change the facts!

30 September-1 October - HackConf, Sofia

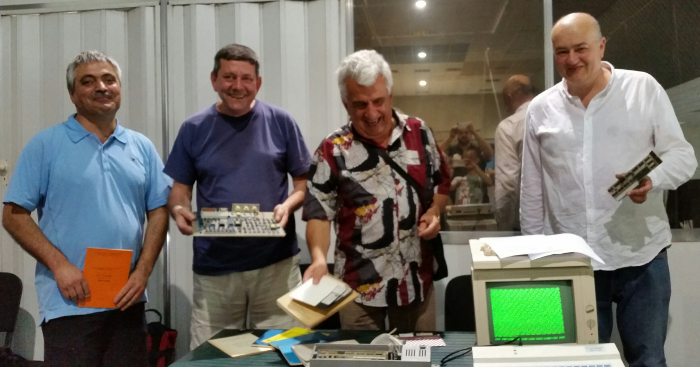

HackConf is one of the largest conferences in Bulgaria, gathering over 1000 people each year. I am strongly affiliated with the people who organize it and even had the opportunity to host the opening session last year. The picture above is from this event.

The audience is still very young and inexperienced but the presenters are above average. The organizers' goal is for HackConf to become the strongest technical conference in the country and also to serve as a sort of inspiration for young IT professionals.

UPDATE: I have submitted both a talk proposal and a workshop proposal. For the workshop I intend to teach children Python and Selenium Automation in 8 hours. I've also been helping the organizers with bringing some very cool speakers from abroad!

October - IT Weekend, Bulgaria

IT Weekend is organized by Petar Sabev, the same person who's behind QA: Challenge Accepted. It is an informal gathering of engineers with the intent to share some news and then discuss and share problems and experiences. Check out my reviews of IT Weekend #1 and IT Weekend #3.

The topics revolve around QA, leadership and management but the format is open and the intention is to broaden the topics covered. The event is held outside Sofia at a SPA hotel and makes for a very nice retreat. I don't have a topic but I'm definitely going if time allows. I will probably make something up if we have QA slots available :).

October - Software Freedom Kosova, Prishtina

Software Freedom Kosova is one of the oldest conferences about free and open source software in the region. This is part of my goal to explore the IT communities on the Balkans. Kosovo sounds a bit strange to visit but I did recognize a few names on the speaker list of previous years.

The CFP is not open yet but I'm planning to make a presentation. Also if weather allows I'm planning a road trip on my motorbike :).

UPDATE: I've met with some of the FLOSSK members in Tirana at OSCAL and they seem to be more busy with running the hacker space in Prishtina so the conference is nearly a no-go.

14-15 November - Google Test Automation Conference, London

GTAC 2017 will be held in London. Both speakers and attendees are pre-approved and my goal is to be the first Red Hatter and second Bulgarian to speak at GTAC.

The previous two years saw talks about mutation testing vs. coverage and their respective use to determine the quality of a test suite, with both parties arguing against each other. Since I'm working in both of these fields and have at least two practical examples I'm trying to gather more information and present my findings to the world.

UPDATE: I've also submitted my Testing Red Hat Enterprise Linux the Microsoft way

16-17 November - ISTA Con, Sofia

Innovations in Software Technologies and Automation started as a QA conference several years ago but it has broadened the range of acceptable topics to include development, devops and agile. I was at the first two editions and then didn't attend for a while until last year when I really liked it. The event is entirely in English with lots of foreign speakers.

Recently I've been working on something I call "Regression Test Monitoring" and my intention is to present this at ISTA 2017 so stay tuned.

UPDATE: I didn't manage to collect enough information on the Regression Test Monitoring topic but have made two other proposals.

Thanks for reading and see you around!


QA & Automation 101 Retrospective

At the beginning of this year I hosted the first QA related course at HackBulgaria. This is a long overdue post about how the course went, what worked well and what didn't. Brace yourself because it is going to be a long one.

The idea behind a QA course has been lurking in both RadoRado's (from HackBulgaria) and my heads for quite a while. We had been discussing it for at least a year before we actually started. One day Rado told me he'd found a sponsor and we had the go-ahead for the course and that's how it all started!

The first issue was that we weren't prepared to start at a moment's notice. I literally had two weeks to prepare the curriculum and initial interview questions. Next we opened the application form and left it open until the last possible moment. I was reviewing candidate answers hours before the course started, which was another mistake we made!

On the positive side, I hosted a Q&A session on YouTube answering general questions about the profession and the course itself. This live stream helped popularize the course.

At the start we had 30 people and around 13 of them managed to "graduate" till the final lesson. The biggest portion of students dropped out after the first 5 lessons of Java crash course! Each lesson was around 4 hours with 20-30 minutes break in the middle.

With respect to the criteria find a first job or find a new/better job I consider the training successful. To my knowledge all students have found better jobs, many of them as software testers!

On the practical side of things students managed to find and report 11 interesting bugs against Fedora. Mind you that these were all found in the wild: fedora-infrastructure #5323, RHBZ #1339701, RHBZ #1339709, RHBZ #1339713, RHBZ #1339719, RHBZ #1339731, RHBZ #1339739, RHBZ #1339742, RHBZ #1339746, RHBZ #1340541, RHBZ #1340891.

Then students also made a few pull requests on GitHub (3 that I know of): commons-math #38, commons-csv #12, commons-email #1.

Lesson format

For reference most lessons were a mix of a short presentation about theory and best practices followed by discussions and, where appropriate, practical sessions with technology or projects. The exercises were designed on purpose for individual work, work in pairs or work in small groups (4-5).

By request from the sponsors I've tried to keep a detailed record of each student's performance and personality traits as much as I was able to observe them. I really enjoyed keeping such a journal but didn't share this info with my students which I consider a negative issue. I think knowing where your strong and weak areas are would help you become a better expert in your field!

Feedback from students

- There was little time (to work on the practical examples I guess);

- Not having particular practical tasks was a problem. For example we didn't have tasks of the sort do "X" then "Y";

- They needed more time and more attention to be given to them;

- Installing different pieces of software and tools took a lot of time and frustration. It was also quite problematic sometimes depending on whether they used Linux, Windows or Mac OS X;

- Working with Eclipse IDE was horrible. Nobody knew what to do and the interface wasn't newbie friendly. Also it took quite a lot of time to install dependencies and/or import projects to run in Eclipse;

- There were a few problems with Selenium and its different versions being used.

I have to point out that while these are valid concerns and major issues students were at least partially guilty for the last 3 of them. It was my impression that most of them didn't prepare at home, didn't read the next lesson and didn't install prerequisite tools and software!

5x Java Crash Course

We've started with a Java crash course as requested by our sponsors which was extended to 5 instead of the original 3 sessions. RadoRado was teaching Java fundamentals while I was assisting him with comments.

On the good side, Rado explains very well and in great detail. He also writes code to demonstrate what he teaches and while doing so uses only the knowledge he's presented so far. For example if there's repeating logic/functionality he would just write it twice instead of refactoring it into a separate function with parameters (assuming the students have not learned about functions yet). I think this made it easier to understand the concepts being taught.

Another positive thing we did was me going behind Rado's computer and modifying some of the code while he was explaining something on screen. If you take the above example and have two methods which print out salutations, e.g. "Good morning, Alex", I would go and modify one of them to include "Mr." while leaving the other unchanged. This introduced a change in behavior which ultimately resulted in a bug! This was a nice practical way to demonstrate how some classes of bugs get introduced in reality. We did only a few of these behind-the-computer changes and I definitely liked them! They were all ad-hoc, not planned for.
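The effect of such a change can be sketched in a few lines. The example is in Python rather than Java and the function names are made up for illustration; this is not the code Rado wrote in class.

```python
def morning_greeting(name):
    # Modified copy: now includes the title.
    return "Good morning, Mr. " + name


def evening_greeting(name):
    # Untouched copy of the same logic: the title is missing.
    return "Good evening, " + name


def greetings_are_consistent(name):
    """True when both greetings address the person the same way."""
    morning = morning_greeting(name).split(", ", 1)[1]
    evening = evening_greeting(name).split(", ", 1)[1]
    return morning == evening


print(greetings_are_consistent("Alex"))  # False: the two copies have diverged
```

Had the salutation lived in a single shared function, the change would have applied to both greetings at once.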

On the negative side Java seems hard to learn and after these 5 lessons half of the students dropped out. Maybe part of the reason is they didn't expect to start a QA course with lessons about programming. But that also means they didn't pay enough attention to the curriculum, which was announced in advance!

Lesson 01 - QA Fundamentals

I had made a point to assign time constraints to each exercise in the lessons. While that mostly worked in the first few lessons, where there is more theory, overall we didn't keep to the schedule and ran over time.

Explaining testing theory (based on ISTQB fundamentals) took longer than I expected. It was also evident that we needed more written examples of what different test analysis techniques are (e.g. boundary value analysis). Here Petar Sabev helped me deliver a few very nice examples.
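As an illustration of the kind of written example that would have helped, here is a minimal sketch of boundary value analysis. The rule under test (a valid age is between 18 and 65 inclusive) and both function names are invented for this sketch and are not part of the course materials.

```python
def is_valid_age(age):
    """Hypothetical rule under test: ages 18 to 65 inclusive are valid."""
    return 18 <= age <= 65


def boundary_values(low, high):
    """Values just outside, on, and just inside each boundary."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


# Six inputs exercise both edges of the valid range.
for age in boundary_values(18, 65):
    print(age, is_valid_age(age))
```

Only 17 and 66 should be rejected. An off-by-one mistake such as writing < instead of <= shows up at exactly these values.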

One of the exercises was "when to stop testing" with an example of a Sudoku solving function and different environments in which this code operates, e.g. browser, mobile, etc. Students appeared to have a hard time understanding what a "runtime environment" is and defining relevant tests based on that! I believe most of the students, due to lack of knowledge and experience, were also having a hard time grasping the concept of non-functional testing.

A positive thing was that students started explaining to one another and giving examples for bugs they've seen outside the course.

Lesson 02 - Software Development Lifecycle

This lesson was designed as a role-playing game to demonstrate the most common software development methodologies, waterfall and agile, and discuss the QA role in both of them. The format by itself is very hard to conduct successfully and this was my first time ever doing this. I had also never taken part in such games until then, only heard about them.

During the waterfall exercise it was harder for the students to follow the game constraints and not exchange information with one another because they were sitting at the same table.

On the positive side all groups came up with unique ideas about software features and how they wanted to develop them. Timewise we managed to do very well. On the negative side, I was the client for all groups and didn't manage to pay enough attention to everyone, which btw is what clients usually do in real life.

Lesson 03 - Bug Tracking

This lesson was a practical exercise in writing bug reports and figuring out what information needs to be present in a good bug report. Btw this is something I always ask junior members at job interviews.

First we started with working in pairs to define what a good bug report is without actually knowing what that means. Students found it hard to brainstorm together and most of them worked alone during this exercise.

Next students had to write bug reports for some example bugs, which I've explained briefly on purpose and perform peer reviews of their bugs. Reviews took a long time to complete but overall students had a good idea of what information to include in a bug report.

Then, after learning from their mistakes and hearing what others had done, they learned about some good practices and were tasked to rewrite their bug reports using the new knowledge. I really like the approach of letting students make some mistakes and then showing them the easier/better way of doing things. This is also on par with Ivan Nemytchenko's methodology of letting his interns learn from their mistakes.

All bug reports can be found in the students' repositories, which are forked from the curriculum. Check out https://github.com/HackBulgaria/QA-and-Automation-101/network.

I should have really asked everyone to file bugs under the curriculum repository so it is easier for me to track them. On the other hand I wanted each student to start building their own public profile to show potential employers.

Lesson 04 - Test Case Management

This lesson started with an exercise asking students to create accounts for Red Hat's OpenShift cloud platform in the form of a test scenario. The scenario intentionally left out some details. The idea was that missing information introduces inconsistencies during testing, which demonstrates how the same steps get performed slightly differently.

We had some trouble explaining exactly "how did you test" because most inexperienced people would not remember all details about where they clicked, did they use the mouse or the keyboard, was the tab order correct, etc. Regardless, students managed to receive different results and discover that some email providers were not supported.

The homework assignment was to create test plans and test cases in Nitrate at https://nitrate-hackbg.rhcloud.com/. Unfortunately the system appears to be down ATM and I don't have time to investigate why. This piece of infrastructure was put together in 2 hours and I'm surprised it lasted without issues during the entire course.

2x Introduction to Linux

This was a crash course in Linux fundamentals and an exercise with the most common commands and text editors in the console. Most of the students were not prepared with virtual machines. We also used a cloud provider to give students a remote shell but the provider API was failing and we had to deploy docker containers manually. Overall infrastructure was a big problem but we somehow managed. Another problem was with ssh clients on Windows which generated keys in a format that our cloud provider couldn't understand.

Wrt commands and exercises students did well and managed to execute most of them on their own. That's very good for people who've never seen a terminal in their lives (more or less).

Lesson 05 - Testing Fedora 24(25) Changes

Once again nobody was prepared with a virtual machine with Fedora and students were installing software as we went. Because of that we didn't manage to conduct the lesson properly and had to repeat it in the next session.

Rawhide being the bleeding edge of Fedora means it is full of bugs. Well I couldn't keep up with everyone and explain workarounds or how to install/upgrade Fedora. That was a major setback. It also became evident that you can't move quickly if you have no idea what to do and no instructions about it either.

Lesson 05 - Again

Once prepared with the latest and greatest from Rawhide the task was to analyze the proposed feature changes (on the Fedora wiki) and create test plans and design test cases for said changes. Then execute the tests in search for bugs. This is where some of the bugs above came from. The rest were found during upgrades.

This lesson was team work (4-5 students) but the results were mixed. IMO Fedora changes are quite hard to grasp, especially if you lack domain knowledge and broader knowledge about the structure and operation of a Linux distribution. I don't think most teams were able to clearly understand their chosen features and successfully create good plans/scenarios for them. On the other hand, in real life software projects you don't necessarily understand the domain any better or know what to do. I've been in situations where whole features have been defined by a single sentence and requested to be tested by QA.

One of the teams didn't manage to install Fedora (IIRC they didn't have laptops capable of running a VM) and were not able to conduct the exercise.

Being able to find real life bugs, some of them serious, and getting traction in Bugzilla is the most positive effect of this lesson. I personally wanted to have more output (e.g. more bugs, more cases defined, etc) but taking into account the blocking factors and setbacks I think this is a good initial result.

Lesson 06 - Unit Testing and Continuous Integration

Here we had a few examples of bad stubs and mocks which were not received very well. The topic is hard in itself and wasn't very well explained with practical examples.

Another negative thing is that students took a lot of time to fiddle around with Eclipse, they were mistyping commands in the terminal and generally not paying enough attention to instructions. This caused the exercises to go slowly.

We had an exercise which asks the student to write a new test for a non-existing method, then implement the method and make sure all the tests pass. You guessed it, this is Test Driven Development. IIRC one of the students was having a hard time with that exercise so I popped up my editor on the large screen and started typing what she told me, then re-running the tests and asking her to show me the errors I'd made and tell me how to correct them. The exercise was received very well and was fun to do.
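The same test-first cycle can be sketched in Python with unittest (the course itself used Java and JUnit). The Stack class and its peek() method are hypothetical and are not taken from the course exercise.

```python
import unittest


class Stack:
    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    # Step 2: added only after the test below had been written and had
    # failed with AttributeError, because peek() did not exist yet.
    def peek(self):
        return self._items[-1]


class TestStack(unittest.TestCase):
    # Step 1: written first, against a method that did not exist.
    def test_peek_returns_last_pushed_item(self):
        stack = Stack()
        stack.push(1)
        stack.push(2)
        self.assertEqual(stack.peek(), 2)
```

Deleting peek() and running python -m unittest on the file reproduces the failing first step. Putting the method back turns the test green again.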

Due to lack of time we had to go over TravisCI very quickly. The other bad thing about TravisCI is that it requires git/GitHub and the students were generally inexperienced with that. Both GitHub for Windows and Mac OS suck big time IMO. What you need is the console. However none of the students had any practical experience with git or knew how to commit code and push branches to GitHub. Git fundamentals however are a separate lesson or two by themselves, which we didn't do.

Lesson 07 - Writing JUnit tests for Apache Commons

Excluding the problems with Eclipse and the GitHub desktop client and missing instructions for Windows the hardest part of this lesson was actually selecting a component to work on, understanding what the code does and actually writing meaningful tests. On top of that most students were not very proficient programmers and Java was completely new to them.

Despite having 3 pull requests on GitHub I consider this lesson to be a failure.

Lesson 08 - Integration Testing with Selenium

This lesson starts with an example of what a flaky test is. At the moment I don't think this lesson is the best place for that example. To make things even more difficult the example is in Python (because that was the easiest way for me to write it) instead of Java. Students had problems installing Python on Windows just to make this example work. They also lacked the knowledge of how to execute a script in the terminal.

One of the students proposed a better flaky example utilizing dates and times and executing it during various hours of the day. I have yet to code this and prepare an environment in which it would be executed. Btw recently I've seen similar behavior caused by inconsistent timezone usage in Ruby which resulted in an unexpected time offset a little after midnight :).
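One possible shape for such a time-dependent example is sketched below. This is not the student's actual proposal and the function names are made up. The point is only that a check which reads the wall clock gives different results depending on when it runs.

```python
from datetime import datetime


def greeting(now=None):
    """Return a greeting that depends on the hour of the day."""
    if now is None:
        now = datetime.now()  # hidden dependency on the wall clock
    return "Good morning" if now.hour < 12 else "Good afternoon"


def flaky_check():
    """Flaky: passes before noon, fails for the rest of the day."""
    return greeting() == "Good morning"


def stable_check():
    """Stable: the time is passed in, so the result never changes."""
    morning = datetime(2017, 1, 1, 9, 0)
    afternoon = datetime(2017, 1, 1, 15, 0)
    return (greeting(morning) == "Good morning"
            and greeting(afternoon) == "Good afternoon")
```

Calling flaky_check() at different hours of the day shows the flakiness, while stable_check() gives the same answer on every run.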

Once again I have to point out that students came generally unprepared for the lesson and haven't installed prerequisite software and programming languages. This is becoming a trend and needs to be split out into a preparation session, possibly with a check list.

On the Selenium side, starting with Selenium IDE, it was a bit unclear how to use it and what needs to be done. This is another negative trend, where students were missing clear instructions what they are expected to do. At the end we did resort to live demo using Selenium IDE so they can at least get some idea about it.

Lesson 09 and 10 - Writing Selenium tests for Mozilla Add-ons website

IMO these two lessons are the biggest disaster of the entire course. Python & virtualenv on Windows was a total no go but on Linux things weren't much easier because students had no idea what a virtualenv is.

Practice wise they haven't managed to read all the bugs on the Mozilla bug tracker and had a very hard time selecting bugs to write tests for. Not to mention that many of the reported bugs were administrative tasks to create or remove add-on categories. There weren't many functional related bugs to write tests for.

The product under test was also hard to understand and most students were seeing it for the first time, let alone getting to know the devel and testing environments that Mozilla provides. Mozilla's test suite being in Python is just another issue to make contribution harder because we've never actually studied Python.

Between the two lessons there were students who'd missed the Selenium introduction lesson and were having an even harder time figuring things out. I didn't have the time to explain and go back to the previous lesson for them. Maybe an attendance policy is needed for dependent lessons.

Before the course started I've talked to some guys at Mozilla's IRC channel and they agreed to help but at the end we didn't even engage with them. At this point I'm skeptical that mentoring over IRC would have worked anyway.

Lesson 11 - Introduction to Performance Testing

This was a more theoretical lesson with fewer practical examples and exercises. I have provided some blog posts of mine related to the topic of performance testing but in general they are related to software that I've used which isn't generally known to a less experienced audience (Celery, Twisted). These blog posts IMO were hard to put into perspective and didn't serve a good purpose as examples.

The practical part of the lesson was a discussion with the goal of creating a performance testing strategy for GitHub's infrastructure. It was me who was doing most of the talking because students had no experience working on an infrastructure as large as GitHub's and didn't know what components might be there, how they might be organized (load balancers, fail overs, etc) and what needs to be tested.

There was also a more practical example to create a performance test in Java for one of the classes found in commons-codec/src/main/java/org/apache/commons/codec/digest. Again the main difficulty here was working fluently with Eclipse, getting the projects to build/run and knowing how the software under test was supposed to work and be executed.
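For a rough idea of what such a performance test involves, here is a sketch in Python using hashlib and timeit as a stand-in for the Java classes from commons-codec. The choice of SHA-256, the payload size and the function names are all assumptions made for illustration.

```python
import hashlib
import timeit


def sha256_hex(data):
    """Operation under test: hash a byte string and return the hex digest."""
    return hashlib.sha256(data).hexdigest()


def best_time(data, number=1000, repeat=3):
    """Best total time in seconds for `number` calls, over `repeat` runs."""
    timings = timeit.repeat(lambda: sha256_hex(data),
                            number=number, repeat=repeat)
    return min(timings)


payload = b"x" * 1024  # arbitrary 1 KiB input, chosen for illustration
elapsed = best_time(payload)
print("1000 SHA-256 digests of 1 KiB took %.6f seconds" % elapsed)
```

Taking the minimum of several runs reduces the noise from whatever else the machine is doing. A real test would also compare the number against an agreed threshold or an earlier baseline.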

Lesson 12 - How to find 1000 bugs in 30 minutes

This was a more relaxed lesson with examples of simple types of bugs found on a large scale. Most examples came from my blog and experiments I've made against Fedora.

While amusing and fun I don't think all of the students understood me and kept their attention. Part of that is because Fedora tends to focus on low level stuff and my examples were not necessarily easy to understand.

Feedback from the sponsor

Experian Bulgaria was the exclusive sponsor for this course. At the end of the summer Rado and I met with them to discuss the results of the training. Here's what they said:

- They consider the overall technical knowledge to be weak. What they need are people with basic knowledge in the fields of object oriented programming, databases, operating systems and networking. These are skills candidates need in order to work for Experian. It must be noted that most of them were not taught in this course!

- English language proficiency for all students is low on average;

- User-level experience with Linux was fine but students lacked deeper knowledge about the operating system. Once again, something we didn't teach;

- The programming languages favored at Experian are Java and Ruby and students had poor knowledge of them. They also had weak understanding of OOP principles;

- According to Experian, students were more ingrained with the development mindset and were trying their luck in the QA profession instead of being genuinely interested in the field. While this is generally the case, I have to point out that as a sponsor Experian did a poor job of promoting their company and the QA profession as a whole. What isn't known to the general public is their big in-house QA community, which could have served as a source of inspiration for the students!

- Low motivation and lack of fundamental knowledge from university are other traits interviewers at Experian have identified. They argued for a stricter acceptance process and a requirement of at least 2 years of university education in computer science.

On the topic of testing knowledge candidates did mostly OK, however we don't have enough information about this. Also, the hiring process at Experian is more focused on the broader knowledge areas listed above, so a substantial improvement in the testing knowledge of candidates doesn't give them much of a head start.

While to my knowledge they didn't hire anyone, a few people received an offer but declined for various personal reasons. I view this as poor performance on our side, but Experian thinks otherwise and is willing to sponsor another round of training.

Summary

Here is a list of all the things that could be improved:

- Take time to develop the curriculum and have it pass QA review by other experienced testers (in particular those who also teach students);

- Make the application process harder to include people with broader IT knowledge;

- Allow time to review all applicants;

- Find a co-trainer and additional mentors for some of the exercises;

- Minimize the number of students accepted in the course so we can handle everyone with more care;

- Give homework assignments and examine them before each lesson;

- Collect and provide performance review at the end of the course;

- Minimize the technology stack and tools used;

- Host a technology preparation session at the beginning, paired with a checklist of all the things that need to be done. Very likely make the chosen technology stack a hard requirement to avoid setbacks later in the schedule;

- We need more fundamental lessons like a practical git tutorial, a practical Linux tutorial, databases, networking, etc.

- Same goes for Java or any other programming language. Each of these technologies easily makes a course by itself. Possibly include technology skill assessment in the application form and reject candidates who don't meet the minimum level. This is to ensure the group is able to move at the same pace.

- We need to improve the infrastructure used during the course, especially exercise bug tracking and test case management systems;

- Students need clear instructions for every exercise - what is required from them, what the expected result is and what they need to be doing, etc;

- Provide more examples for just about everything. Also make the examples easier to understand/simple with the harder examples left for further reading;

- Provide easier projects to work on. This means applications whose domain is easier to understand and closer to the experience the students might have. Projects also need clear instructions on how to join and how to contribute tests.

- The same application/software needs to be used for manual bug finding, unit test writing and integration testing with Selenium. This will minimize both context and technology switches and allow students to view the various testing activities in the context of a single piece of software under test;

- While using open source projects for the purposes listed above sounds great, the reality is that they are hard to join and will not move according to our schedule. Maybe less focus on open source per se and more focus on a particular application under test. Instructors can then proxy all the tests forward to the upstream community;

- Focus more on practice and less on exotic topics like performance testing and large scale bug finding;

- Seek more active participation from sponsors!

If you have suggestions please comment below, especially if you can tell me how to implement them in practice.

Thanks for reading and happy testing!

4 Quick Wins to Manage the Cost of Software Testing

Every activity in software development has a cost and a value. Getting cost to trend down while increasing value, is the ultimate goal.

This is the introduction of an e-book called 4 Quick Wins to Manage the Cost of Software Testing. It was sent to me by Ivan Fingarov a couple of months ago. I've only just managed to read it and here's a quick summary. I urge everyone to download the original copy and give it a read.

The paper focuses on several practices which organizations can apply immediately in order to become more efficient and transparent in their software testing. While larger organizations (e.g. enterprises) have most of these practices already in place, smaller companies (up to 50-100 engineering staff) may not be familiar with them and will reap the most benefit from implementing them. Even though I work for a large enterprise I find this guide useful when considered at the individual team level!

The first chapter focuses on Tactics to minimize cost: Process, Tools, Bug System Mining and Eliminating Handoffs.

In Process the goal is to minimize the burden of documenting the test process (aka testing artifacts), allow for better transparency and visibility outside the QA group and streamline the decision making process of what to test and when to stop testing, how much has been tested, what the risk is, etc. The authors propose testing core functionality paired with emerging risk areas based on new feature development. They propose making a list of these, sorting that list by perceived risk/priority and testing as much as possible. Indeed this is very similar to the method I've used at Red Hat when designing testing for new features and new major releases of Red Hat Enterprise Linux. I've seen a similar method in place at several start-ups as well, although in a small organization the primary driver for this method is the lack of sufficient test resources.
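The "sort by perceived risk and test as much as possible" idea can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `Area` class, the 1 to 5 risk scale, the effort estimates and the sample data are all hypothetical and do not come from the e-book.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    risk: int            # perceived risk, 1 (low) to 5 (high); assumed scale
    effort_hours: float  # estimated time needed to test the area


def plan_testing(areas, budget_hours):
    """Walk the areas from highest to lowest risk and stop at the
    first one that no longer fits into the remaining time budget."""
    planned = []
    remaining = budget_hours
    for area in sorted(areas, key=lambda a: a.risk, reverse=True):
        if area.effort_hours > remaining:
            break
        planned.append(area)
        remaining -= area.effort_hours
    return planned


if __name__ == "__main__":
    # Made-up example data
    areas = [
        Area("settings page", risk=2, effort_hours=3),
        Area("login", risk=5, effort_hours=4),
        Area("new export feature", risk=4, effort_hours=6),
    ]
    for area in plan_testing(areas, budget_hours=10):
        print(area.name)
```

Whatever did not make it into the plan is the visible, documented answer to "what was not tested and why", which is the transparency the chapter is after.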

Tools proposes the use of test case management systems to ease the documentation burden. I've used TestLink and Nitrate. Of the two, Nitrate has more features but is currently unmaintained, with me being the largest contributor on GitHub. Of the paid variants I've used Polarion, which I generally dislike. Polarion is most suitable for large organizations because it gives lots of opportunities for tracking and reporting. For small organizations it is overkill.

Bug System Mining is a technique which involves regularly scanning the bug tracker and searching for patterns. This is useful for finding bug types which appear frequently and generally point to a flaw in the software development process. The fix for these flaws is usually a change in policy/workflow which eliminates the source of the errors. I'm a fan of this technique when I join an existing project and need to assess its current state. I've done this when consulting for a few start-ups, including Jitsi Meet (acquired by Atlassian). However, I'm not doing bug mining on a regular basis, which I consider a drawback, and I really should start doing it!

For example at one project I found lots of bugs reported against translations, e.g. missing translations, text overflowing the visible screen area or not playing well with existing design, chosen language/style not fitting well with the product domain, etc.

The root cause of the problem was how the software in question was localized. The translators were given a file of English strings, which they would translate and return in a spreadsheet. Developers would copy and paste the translated strings into localization files and integrate them with the software. Then QA would usually inspect all the pages and report the above issues. The solution was to remove devel and QA from the translation process and implement a web-based translation management system with live preview, so that translators can keep track of what is left to translate and can visually inspect their work immediately after a string is translated. Thus translators are given more context for their work but also the responsibility to produce good quality translations.

Another example I've seen is a large number of bugs which look like follow-up or nice-to-have features for partially implemented functionality. The root cause of this problem turned out to be that devel was jumping straight to implementation without taking the time to brainstorm and consult with QE and product owners, not taking into account corner cases and minor issues which would have easily been raised by skillful testers. This process led to several iterations until the said functionality was considered initially implemented.
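A first pass at the bug mining described above can be as simple as counting bugs per category and looking at what dominates. The sketch below assumes the bugs have already been exported from the tracker as dictionaries with a `category` field. That field name, the `frequent_categories` helper, the 40% threshold and the sample data are assumptions made for illustration; real trackers will need their own export and labeling step.

```python
from collections import Counter


def frequent_categories(bugs, min_share=0.2):
    """Return (category, count) pairs for every category that accounts
    for at least min_share of all bugs, most frequent first."""
    counts = Counter(bug["category"] for bug in bugs)
    total = sum(counts.values())
    return [
        (category, count)
        for category, count in counts.most_common()
        if count / total >= min_share
    ]


if __name__ == "__main__":
    # Made-up export from a bug tracker
    bugs = [
        {"id": 1, "category": "translation"},
        {"id": 2, "category": "translation"},
        {"id": 3, "category": "ui"},
        {"id": 4, "category": "translation"},
        {"id": 5, "category": "crash"},
    ]
    for category, count in frequent_categories(bugs, min_share=0.4):
        print(category, count)
```

A category that keeps showing up at the top is a hint to go and look for a process problem, as in the two examples above, rather than a conclusion by itself.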

Eliminating Handoffs proposes the use of cross-functional teams to reduce idle time and reduce the back-and-forth communication which happens when a bug is found, reported, evaluated and considered for a fix, fixed by devel and finally deployed for testing. This method argues that including testers early in the process and pairing them with the devel team will produce faster bug fixes and reduce communication burden.

While I generally agree with that statement, it's worth noting that cross-functional teams perform really well when all team members have relatively equal skill level on the horizontal scale and strong experience on the vertical scale (think T-shaped specialist). Cross-functional teams don't work well when you have developers who aren't well versed in the testing domain and/or testers who are not well versed in programming or the broader OS/computer science fundamentals domain. In my opinion you need highly experienced engineers for a good cross-functional team.

In the chapter Collaboration the paper focuses on pairing, building the right thing and faster feedback loops for developers. This overlaps with earlier proposals for cross-functional teams and QA bringing value by asking the "what if" questions. The chapter specifically talks about the Three Amigos meeting between PM, devel and QA where they discuss a feature proposal from all angles and finally come to a conclusion about what the feature should look like. I'm a strong supporter of this technique and have been working with it in one form or another during my entire career. This also touches on the notion that testers need to move into the Quality Assistance business and be proactive during the software development process, which is something I'm hoping to talk about at the Romanian Testing Conference next year!

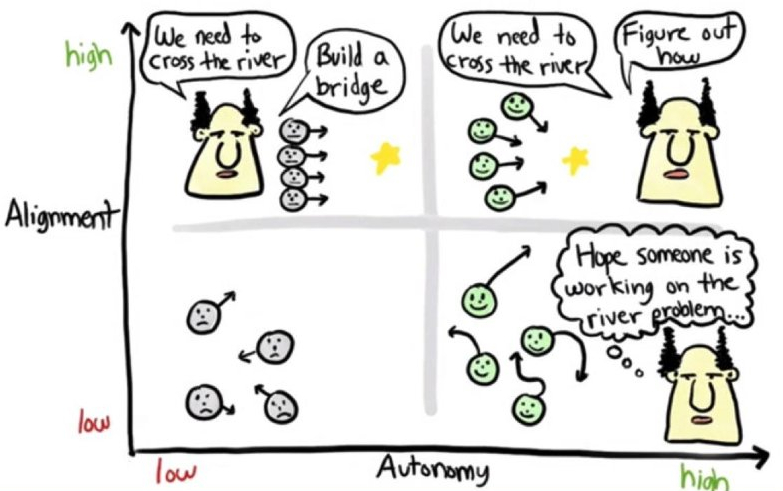

Finally the book talks about Skills Development and makes the distinction between Centers of Excellence (CoE) and Communities of Practice (CoP). Both the book and I are supporters of the CoP approach. This is a bottom-up approach which is open for everyone to join and harnesses the team's creative abilities. It also takes into account that different teams use different methods and tools and that "one size doesn't fit all"!

Skilled teams find important bugs faster, discover innovative solutions to hard testing problems and know how to communicate their value. Sometimes, a few super testers can replace an army of average testers.

While I consider myself to be a "super tester" with thousands of bugs reported, there is a very important note to make here. Communities of Practice are successful when their members are self-focused on skill development! In my view, and to some extent in the communities I've worked with, everyone should strive to constantly improve their skills but also exercise peer pressure on their co-workers not to fall behind. This has been confirmed by other folks in the QA industry and I've heard it many times when talking to friends from other companies.

Thanks for reading and happy testing!