How I Created a Website In Two Days Without Coding

This is a simple story about a website I helped create without using any programming at all. It took me two days because of the images and the logo design, which I commissioned from a friend.

The website is obuvki41plus.com, a re-seller business my spouse runs. It specializes in elegant ladies' shoes in large sizes, European size 41 and up (hard to find in Bulgaria), hence the name.

Required Functionality

- Display a catalog of items for sale with detailed information about each item;

- Make it possible for people to comment and share the items;

- Very basic shopping cart which stores the selected items and then redirects to a page with order instructions. Actual order is made via phone for several reasons which I will explain in another post;

- Add a feedback/contact form;

- Look nice on mobile devices.

Technology

- The website is static: all pages are simple HTML, hosted in Amazon S3;

- Comments are provided by Facebook's Comments Box plug-in;

- Social media buttons and tracking are provided by AddThis;

- Visitor analytics are standard, from Google Analytics;

- Template is from GitHub Pages with slight modifications; it works on mobile too;

- Logo is custom designed by my friend Polina Valerieva;

- Feedback/contact form is by UserVoice;

- Shopping cart is by simpleCart(js). I've created a simple animation effect when pressing the "ADD TO CART" link to visually alert the user. This is done with jQuery.

I could have used some JavaScript templating engine like Handlebars but at the time I didn't know about it and I prefer not to write JavaScript if possible :).

Colophon

Eventually I did some coding after the initial release. I've transformed the website into a Django-based site which is exported as static HTML.

This helps me with faster deployment and management as everything is stored in git, allows template inheritance and also makes the site ready for more functionality if required.

Performance test: Amazon ElastiCache vs Amazon S3

Which Django cache backend is faster, Amazon ElastiCache or Amazon S3?

Previously I mentioned using Django's cache to keep state between HTTP requests. In the demo described there I was using django-s3-cache. It is time to move to production, so I decided to measure the performance difference between the two cache options available at Amazon Web Services.

Update 2013-07-01: my initial test may have been false since I had not configured ElastiCache access properly. I saw no errors but discovered the issue today on another system which was failing to store the cache keys but didn't show any errors either. I've re-run the tests and updated times are shown below.

Test infrastructure

- One Amazon S3 bucket, located in US Standard (aka US East) region;

- One Amazon ElastiCache cluster with one Small Cache Node (cache.m1.small) with Moderate I/O capacity;

- One Amazon ElastiCache cluster with one Large Cache Node (cache.m1.large) with High I/O Capacity;

- Update: I've tested both the python-memcached and pylibmc client libraries for Django;

- Update: Test is executed from an EC2 node in the us-east-1a availability zone;

- Update: Cache clusters are in the us-east-1a availability zone.

Test Scenario

The test platform is Django. I've created a skeleton project with only the CACHES settings defined and the necessary dependencies installed. A file called test.py holds the test cases, which use the standard timeit module. The object stored in the cache is very small: it holds phone and address identifiers and a couple of user-made selections.

The code looks like this:

    import timeit

    s3_set = timeit.Timer(
    """
    for i in range(1000):
        my_cache.set(i, MyObject)
    """,
    """
    from django.core import cache

    my_cache = cache.get_cache('default')

    MyObject = {
        'from' : '359123456789',
        'address' : '6afce9f7-acff-49c5-9fbe-14e238f73190',
        'hour' : '12:30',
        'weight' : 5,
        'type' : 1,
    }
    """
    )

    s3_get = timeit.Timer(
    """
    for i in range(1000):
        MyObject = my_cache.get(i)
    """,
    """
    from django.core import cache

    my_cache = cache.get_cache('default')
    """
    )
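The pymc_* and libmc_* timers used in the session are not listed in the post; presumably they follow the same pattern with a different CACHES entry. The sketch below shows that pattern in a form that runs without Django or AWS. `DictCache` is a hypothetical in-memory stand-in for a cache backend, and the function names are illustrative only. (On recent Django versions `cache.get_cache()` is no longer available and `django.core.cache.caches['default']` is used instead.)

```python
import timeit


class DictCache:
    """Hypothetical in-memory stand-in for a Django cache backend."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value

    def get(self, key, default=None):
        return self._data.get(key, default)


my_cache = DictCache()
my_object = {"from": "359123456789", "hour": "12:30", "weight": 5, "type": 1}


def set_many(count=1000):
    # Same workload as the post: consecutive writes of one small object.
    for i in range(count):
        my_cache.set(i, my_object)


def get_many(count=1000):
    # Same workload as the post: consecutive reads of the keys written above.
    return [my_cache.get(i) for i in range(count)]


# timeit.Timer accepts a callable as well as a source string.
dict_set = timeit.Timer(set_many)
dict_get = timeit.Timer(get_many)

set_times = dict_set.repeat(repeat=3, number=1)
get_times = dict_get.repeat(repeat=3, number=1)
print("set:", min(set_times), "get:", min(get_times))
```

Swapping `DictCache` for a real backend keeps the timing code unchanged, which makes it easy to compare several backends under the same workload.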

Tests were executed from the Django shell on an EC2 instance in the us-east-1a availability zone. ElastiCache nodes were freshly created or rebooted before test execution. The S3 bucket had no objects.

    $ ./manage.py shell
    Python 2.6.8 (unknown, Mar 14 2013, 09:31:22)
    [GCC 4.6.2 20111027 (Red Hat 4.6.2-2)] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    (InteractiveConsole)
    >>> from test import *
    >>>
    >>> s3_set.repeat(repeat=3, number=1)
    [68.089607000350952, 70.806712865829468, 72.49261999130249]
    >>>
    >>> s3_get.repeat(repeat=3, number=1)
    [43.778793096542358, 43.054368019104004, 36.19232702255249]
    >>>
    >>> pymc_set.repeat(repeat=3, number=1)
    [0.40637087821960449, 0.3568730354309082, 0.35815882682800293]
    >>>
    >>> pymc_get.repeat(repeat=3, number=1)
    [0.35759496688842773, 0.35180497169494629, 0.39198613166809082]
    >>>
    >>> libmc_set.repeat(repeat=3, number=1)
    [0.3902890682220459, 0.30157709121704102, 0.30596804618835449]
    >>>
    >>> libmc_get.repeat(repeat=3, number=1)
    [0.28874802589416504, 0.30520200729370117, 0.29050207138061523]
    >>>
    >>> libmc_large_set.repeat(repeat=3, number=1)
    [1.0291709899902344, 0.31709098815917969, 0.32010698318481445]
    >>>
    >>> libmc_large_get.repeat(repeat=3, number=1)
    [0.2957158088684082, 0.29067802429199219, 0.29692888259887695]
    >>>

Results

As expected ElastiCache is much faster (10x) compared to S3. However the difference between the two ElastiCache node types is subtle. I will stay with the smallest possible node to minimize costs. Also as seen, pylibmc is a bit faster compared to the pure Python implementation.

Depending on the size of your objects or how many set/get operations you perform per second, you may need to go with the larger nodes. Just test it!

It surprised me how slow django-s3-cache is. The false test showed django-s3-cache to be 100x slower, but the new results are better. A 10x decrease in performance sounds about right for a filesystem-backed cache.
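Ratios can be derived from the timings printed in the session above. The sketch below copies those numbers verbatim and compares the median of each run; the helper name `ratio` is illustrative. For the figures as listed, the S3 backend comes out well over 100 times slower than memcached, which is more than the 10x quoted in the text, so it is worth re-checking against a fresh run of your own.

```python
from statistics import median

# Timings in seconds per 1000 operations, copied from the shell session.
timings = {
    "s3_set": [68.089607000350952, 70.806712865829468, 72.49261999130249],
    "s3_get": [43.778793096542358, 43.054368019104004, 36.19232702255249],
    "pymc_set": [0.40637087821960449, 0.3568730354309082, 0.35815882682800293],
    "pymc_get": [0.35759496688842773, 0.35180497169494629, 0.39198613166809082],
    "libmc_set": [0.3902890682220459, 0.30157709121704102, 0.30596804618835449],
    "libmc_get": [0.28874802589416504, 0.30520200729370117, 0.29050207138061523],
}

medians = {name: median(values) for name, values in timings.items()}


def ratio(slow, fast):
    """How many times slower `slow` is than `fast`, based on medians."""
    return medians[slow] / medians[fast]


for slow, fast in [
    ("s3_set", "pymc_set"),
    ("s3_get", "pymc_get"),
    ("pymc_set", "libmc_set"),
    ("pymc_get", "libmc_get"),
]:
    print("{0} vs {1}: {2:.1f}x".format(slow, fast, ratio(slow, fast)))
```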

A quick look at the code of the two backends shows some differences. The one I immediately see is that for every cache key django-s3-cache creates a SHA-1 hash which is used as the storage file name. This was modeled after the filesystem backend, but I think the design is wrong: the memcached backends don't do this.
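To make the hashing step concrete, here is a minimal sketch of turning a cache key into a storage name with SHA-1. It is not the code from django-s3-cache; the function name `key_to_filename` and the `cache/` prefix are assumptions for illustration.

```python
import hashlib


def key_to_filename(key, prefix="cache/"):
    """Map an arbitrary cache key to a fixed-length storage name.

    Hypothetical helper: hashes the key with SHA-1 and uses the
    40-character hex digest as the object name.
    """
    digest = hashlib.sha1(str(key).encode("utf-8")).hexdigest()
    return prefix + digest


print(key_to_filename("order:42"))
print(key_to_filename(7))
```

The hash gives every key a safe, fixed-length name at the cost of one digest computation per operation, and the stored objects can no longer be identified by looking at their names.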

Another one is that django-s3-cache time-stamps all objects and uses pickle to serialize them. I wonder if it can't just write them as binary blobs directly. There's definitely lots of room for improvement of django-s3-cache. I will let you know my findings once I get to it.
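The time-stamp plus pickle scheme can be sketched as follows. This is an illustration of the general idea, not the backend's actual code: the names `pack` and `unpack` and the tuple layout are assumptions.

```python
import pickle
import time


def pack(value, timeout=300, now=None):
    """Serialize a value together with its absolute expiry time."""
    now = time.time() if now is None else now
    return pickle.dumps((now + timeout, value), pickle.HIGHEST_PROTOCOL)


def unpack(blob, now=None):
    """Return the stored value, or None once the entry has expired."""
    now = time.time() if now is None else now
    # Only unpickle data you wrote yourself; pickle is unsafe on untrusted input.
    expires_at, value = pickle.loads(blob)
    return value if now < expires_at else None


blob = pack({"hour": "12:30", "weight": 5}, timeout=60, now=1000.0)
print(unpack(blob, now=1030.0))
print(unpack(blob, now=1061.0))
```

Writing raw bytes instead would save the pickling step, but the expiry time would then have to be kept somewhere else, for example in object metadata.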

What Runs Your Start-up - Useful at Night

Useful at Night is a mobile guide for nightlife empowering real time discovery of cool locations, allowing nightlife players to identify opinion leaders. Through geo-location and data aggregation capabilities, the application allows useful exploration of cities, places and parties.

Evelin Velev was kind enough to share what technologies his team uses to run their start-up.

Main Technologies

Main technologies used are Node.js, HTML 5 and NoSQL.

Back-end application servers are written in Node.js and hosted at Heroku, coupled with RedisToGo for caching and CouchDB served by Cloudant for storage.

Their mobile front-end supports both iOS and Android platforms and is built using HTML5 and a homemade UI framework called RAPID. There are some native parts developed in Objective-C and Java respectively.

In addition, Useful at Night uses MongoDB for metrics data with a custom metrics solution written in Node.js; Amazon S3 for storing different assets; and a custom storage solution called Divan (a simple CouchDB-like store).

Why Not Something Else?

We chose Node.js for our application servers, because it enables us to build efficient distributed systems while sharing significant amounts of code between client and server. Things get really interesting when you couple Node.js with Redis for data structure sharing and message passing, as the two technologies play very well together.

We chose CouchDB as our main back-end because it is the most schema-less data-store that supports secondary indexing. Once you get fluent with its map-reduce views, you can compute an index out of practically anything. For comparison, even MongoDB requires that you design your documents as to enable certain indexing patterns. Put otherwise, we'd say CouchDB is a data-store that enables truly lean engineering - we have never had to re-bake or migrate our data since day one, while we're constantly experimenting with new ways to index, aggregate and query it.

We chose HTML5 as our front-end technology, because it's cross-platform and because we believe it's ... almost ready. Things are still really problematic on Android, but iOS boasts a gorgeous web presentation platform, and Windows 8 is also joining the game with a very good web engine. Obviously we're constantly running into issues and limitations, mostly related to the unfortunate fact that in spite of some recent developments, a web app is still mostly single threaded. However, we're getting there, and we're proud to say we're running a pretty graphically complex hybrid app with near-native GUI performance on the iPhone 4S and above.

Want More Info?

If you'd like to hear more from Useful at Night please comment below. I will ask them to follow this thread and reply to your questions.

How Large Are My MySQL Tables

Image CC-BY-SA, Michael Mandiberg

I found two good blog posts about investigating MySQL internals: Researching your MySQL table sizes and Finding out largest tables on MySQL Server. Running their queries against my site Difio showed:

    mysql> SELECT CONCAT(table_schema, '.', table_name),
        ->        CONCAT(ROUND(table_rows / 1000000, 2), 'M') rows,
        ->        CONCAT(ROUND(data_length / ( 1024 * 1024 * 1024 ), 2), 'G') DATA,
        ->        CONCAT(ROUND(index_length / ( 1024 * 1024 * 1024 ), 2), 'G') idx,
        ->        CONCAT(ROUND(( data_length + index_length ) / ( 1024 * 1024 * 1024 ), 2), 'G') total_size,
        ->        ROUND(index_length / data_length, 2) idxfrac
        -> FROM information_schema.TABLES
        -> ORDER BY data_length + index_length DESC;
    +---------------------------------------+-------+-------+-------+------------+---------+
    | CONCAT(table_schema, '.', table_name) | rows  | DATA  | idx   | total_size | idxfrac |
    +---------------------------------------+-------+-------+-------+------------+---------+
    | difio.difio_advisory                  | 0.04M | 3.17G | 0.00G | 3.17G      |    0.00 |
    +---------------------------------------+-------+-------+-------+------------+---------+

The table of interest is difio_advisory, which had 5 longtext fields. Those fields were not used for filtering or indexing the rest of the information. They were just storage fields, a 'nice' side effect of using Django's ORM.

I have migrated the data to Amazon S3 and stored it in JSON format there. After dropping these fields the table was considerably smaller:

    +---------------------------------------+-------+-------+-------+------------+---------+
    | CONCAT(table_schema, '.', table_name) | rows  | DATA  | idx   | total_size | idxfrac |
    +---------------------------------------+-------+-------+-------+------------+---------+
    | difio.difio_advisory                  | 0.01M | 0.00G | 0.00G | 0.00G      |    0.90 |
    +---------------------------------------+-------+-------+-------+------------+---------+

For those interested I'm using django-storages on the back-end to save the data in S3 when generated. On the front-end I'm using dojo.xhrGet to load the information directly into the browser.
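The split between what stays in MySQL and what goes to S3 as JSON can be sketched roughly like this. The post does not list the five longtext columns or the S3 key layout, so the field names, `split_record` and `object_key` below are all hypothetical, and the actual upload through django-storages is left out.

```python
import json

# Hypothetical column names; the post does not name the longtext fields.
STORAGE_FIELDS = ("description", "changelog")


def split_record(record, storage_fields=STORAGE_FIELDS):
    """Return (slim_row, json_blob): bulky text moves into the JSON blob."""
    blob = {name: record.get(name, "") for name in storage_fields}
    slim = {k: v for k, v in record.items() if k not in storage_fields}
    return slim, json.dumps(blob, sort_keys=True)


def object_key(record_id):
    """Hypothetical S3 key layout: one JSON object per table row."""
    return "advisory/{0}.json".format(record_id)


row = {"id": 11765, "status": 1, "description": "long text", "changelog": "more text"}
slim, blob = split_record(row)
print(object_key(row["id"]), slim, blob)
```

The browser can then fetch the JSON object by its key and render it, while the database row keeps only the columns used for filtering.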

I'd love to hear your feedback in the comments section below. Let me know what you found for your own databases. Were there any issues? How did you deal with them?

Click Tracking without MailChimp

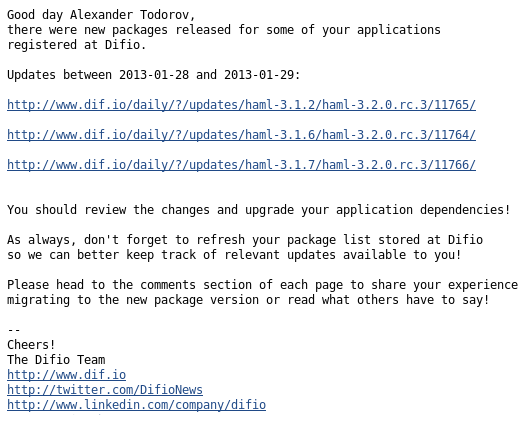

Here is a standard notification message that users at Difio receive. It is plain text, no HTML crap, short and URLs are clean and descriptive. As the project lead developer I wanted to track when people click on these links and visit the website but also keep existing functionality.

Standard approach

A pretty common approach when sending huge volumes of email is to use an external service, such as MailChimp. This is one of many email marketing services which come with a lot of features. The most important to me were analytics and reports.

The downside is that MailChimp (and I guess others) use HTML formatted emails extensively. I don't like that and I'm sure my users will not like it either. They are all developers. Not to mention that MailChimp is much more expensive than Amazon SES, which I use currently. No MailChimp for me!

Another common approach, used by Feedburner by the way, is to use shortened URLs which redirect to the original ones and measure clicks in between. I also didn't like this for two reasons: 1) the shortened URLs look ugly and they are not at all descriptive and 2) I need to generate them automatically and maintain all the mappings. Why bother?

How I did it

So I needed something which does a redirect to a predefined URL, measures how many redirects there were (essentially clicks on the link) and looks nice. The solution is very simple, if you have not recognized it by now from the picture above.

I opted for a custom redirect engine, which will add tracking information to the destination URL so I can track it in Google Analytics.

Previous URLs were of the form http://www.dif.io/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/.

I've added the humble /daily/? prefix before the URL path so it becomes

http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/

Now /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/ becomes a query string parameter which the /daily/index.html page uses as its destination. Before doing the redirect a script adds tracking parameters so that Google Analytics will properly report this visit. Here is the code:

    <html>
    <head>
    <script type="text/javascript">
        var uri = window.location.toString();
        // Everything after the first "?" is the destination path.
        var question = uri.indexOf("?");
        var param = uri.substring(question + 1, uri.length);

        if (question > 0) {
            // Append the campaign parameters and redirect.
            window.location.href = param + '?utm_source=email&utm_medium=email&utm_campaign=Daily_Notification';
        }
    </script>
    </head>
    <body></body>
    </html>
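The same URL rewriting can be expressed in Python, which is handy for checking the logic outside a browser. This is a sketch, not part of the site: the function name `tracked_destination` is made up, and unlike the script above it merges the tracking parameters into an existing query string and returns None when there is no destination.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Campaign parameters taken from the redirect page.
UTM_PARAMS = [
    ("utm_source", "email"),
    ("utm_medium", "email"),
    ("utm_campaign", "Daily_Notification"),
]


def tracked_destination(url):
    """Return the destination after the first "?" with tracking appended.

    Hypothetical helper mirroring the redirect page; returns None when
    the URL carries no destination.
    """
    _, separator, destination = url.partition("?")
    if not separator or not destination:
        return None
    parts = urlsplit(destination)
    # Keep any query string the destination already has, then add tracking.
    query = parse_qsl(parts.query, keep_blank_values=True) + UTM_PARAMS
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tracked_destination(
    "http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/"
))
```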

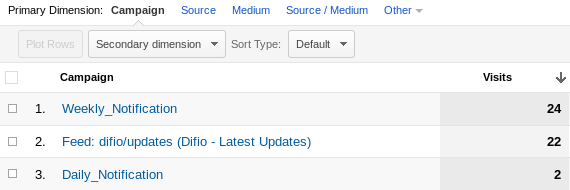

Previously Google Analytics was reporting these visits as direct hits while now it lists them under campaigns like so:

Because all visitors of Difio use JavaScript-enabled browsers, I combined this approach with another one, which removes the query string with JavaScript and presents clean URLs to the visitor.

Why JavaScript?

You may be asking why the hell I am using JavaScript and not Apache's wonderful mod_rewrite module. This is because the destination URLs are hosted in Amazon S3 and I'm planning to integrate with Amazon CloudFront. Neither of them supports .htaccess rules or anything else similar to mod_rewrite.

As always I'd love to hear your thoughts and feedback. Please use the comment form below.
