Pedometer Bug in Samsung Gear Fit Smartwatch

Image source Pocketnow

Recently I've been playing around with a Samsung Gear Fit, and while the hardware seems good I'm a bit disappointed with the software side.

There is at least one clearly visible bug: the pedometer counts calories twice when it is on and exercise mode is started.

How to test:

- Start the Pedometer app and record any initial readings;

- Walk a fixed distance and at the end record all readings;

- Now go back to the Exercise app and select a Walking exercise from the menu. Tap Start;

- Walk back the same distance/road as before. At the end of the journey stop the walking exercise and record all readings.

Expected results:

At the end of the trip I expect to see roughly the same calories burned for both directions.

Actual results:

The return trip counted twice as many calories compared to the forward trip. Here's some actual data to prove it:

+--------------------------+----------+----------------+---------+-------------+---------+
|                          | Initial  | Forward trip   |         | Return trip |         |
|                          | Readings | Pedometer only | Delta   | Pedometer & | Delta   |
|                          |          |                |         | Exercise    |         |
+--------------------------+----------+----------------+---------+-------------+---------+
| Total Steps              | 14409 st | 14798 st       | 389 st  | 15246 st    | 448 st  |
+--------------------------+----------+----------------+---------+-------------+---------+
| Total Distance           | 12,19 km | 12,52 km       | 0,33 km | 12,90 km    | 0,38 km |
+--------------------------+----------+----------------+---------+-------------+---------+
| Cal burned via Pedometer | 731 Cal  | 751 Cal        | 20 Cal  | 772 Cal     | 21 Cal  |
+--------------------------+----------+----------------+---------+-------------+---------+
| Cal burned via Exercise  | 439 Cal  | 439 Cal        | 0 Cal   | 460 Cal     | 21 Cal  |
+--------------------------+----------+----------------+---------+-------------+---------+
| Total calories burned    | 1170 Cal | 1190 Cal       | 20 Cal  | 1232 Cal    | 42 Cal  |
+--------------------------+----------+----------------+---------+-------------+---------+

Note: Data values above were taken from Samsung's S Health app, which is easier to work with than the Gear Fit itself.
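Here is a quick Python check of the deltas in the table. It uses only the readings copied from the table. Normalizing by steps matters because the return trip was slightly longer:

```python
# Before/after readings copied from the table above.
forward = {"steps": (14409, 14798), "pedometer": (731, 751), "exercise": (439, 439)}
backward = {"steps": (14798, 15246), "pedometer": (751, 772), "exercise": (439, 460)}


def deltas(trip):
    """Difference between the reading at the end and at the start of a trip."""
    return {key: end - start for key, (start, end) in trip.items()}


fwd, back = deltas(forward), deltas(backward)
fwd_cal = fwd["pedometer"] + fwd["exercise"]     # 20 Cal
back_cal = back["pedometer"] + back["exercise"]  # 42 Cal

# The return trip was a bit longer, so compare calories per step.
per_step_ratio = (back_cal / back["steps"]) / (fwd_cal / fwd["steps"])

print(f"forward: {fwd_cal} Cal over {fwd['steps']} steps")
print(f"return:  {back_cal} Cal over {back['steps']} steps")
print(f"calories per step, return vs forward: {per_step_ratio:.2f}x")  # 1.82x
```

Even per step, the return trip burned about 1.8 times as many calories. The extra 21 Cal from the Exercise app equals what the Pedometer alone recorded for the same walk.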

The problem is that both apps access the sensor simultaneously without being aware of each other. In theory it should be relatively easy to block one app while the other is running, but that may not be so easy to implement on a platform as limited as the Gear Fit.
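I don't know how the Gear Fit firmware is structured, so the following is only a Python sketch of the idea. Exactly one app owns the step sensor at a time, and the Exercise app takes over while a workout runs. CalorieLedger and the app names are made up for illustration:

```python
import threading


class CalorieLedger:
    """Hypothetical arbiter: only the app that currently owns the step
    sensor may credit calories, so each step is counted once."""

    def __init__(self, default_owner="pedometer"):
        self._lock = threading.Lock()
        self._default_owner = default_owner
        self._owner = default_owner
        self.total = 0

    def start_exercise(self, app="exercise"):
        with self._lock:
            self._owner = app  # the exercise app takes over the sensor

    def stop_exercise(self):
        with self._lock:
            self._owner = self._default_owner  # hand it back to the pedometer

    def credit(self, app, calories):
        """Add calories if `app` owns the sensor; return whether they counted."""
        with self._lock:
            if app != self._owner:
                return False
            self.total += calories
            return True


ledger = CalorieLedger()
ledger.credit("pedometer", 20)   # forward trip, pedometer only
ledger.start_exercise()
ledger.credit("pedometer", 21)   # ignored: the exercise app owns the sensor
ledger.credit("exercise", 21)    # counted once
ledger.stop_exercise()
print(ledger.total)  # 41
```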

2 Barcode Related Bugs in MyFitnessPal

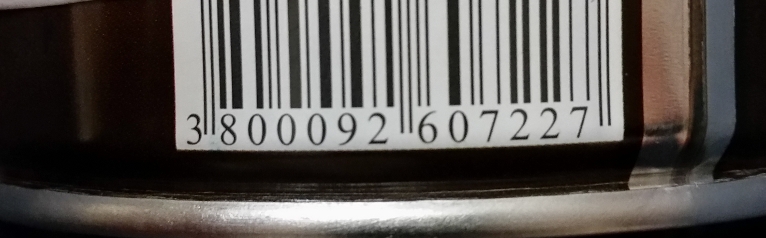

Did you know that the popular MyFitnessPal application can't scan barcodes printed on curved surfaces? The above barcode fails to scan because it is printed on a metal can full of roasted almonds :). In contrast, Barcode Scanner from the ZXing Team reads it just fine. My bet is that MyFitnessPal uses a less advanced barcode scanning library. Judging from the visual clues in the app, the issue is between the 6 and the 0, where the white space is wider.

Despite being a bit blurry this second barcode is printed on a flat surface and is understood by both MyFitnessPal and "ZXing Barcode Scanner".

NOTE: I get the same results regardless of whether I scan the actual barcode printed on the packaging, a picture on a mobile device screen or these two images on the laptop screen.

MyFitnessPal also has problems with duplicate barcodes! Barcodes are not unique and many producers use the same code for multiple products. I've seen this in the case of two different varieties of salami from the same manufacturer on the good end and two different products produced across the world (eggs and popcorn) on the extreme end.

Once users scan a barcode and establish that the existing information is not correct, they can Create a Food and update the calories database. This is then synced back to the MyFitnessPal servers and overrides any existing information. When the same barcode is scanned a second time, only the new DB entry is visible.

How to reproduce:

- Scan an existing barcode and enter it into the MFP database if it is not already there;

- Scan the same barcode one more time and pretend the information is not correct;

- Click the Create a Food button and fill in the fields. For example, use a different food name to distinguish between the two database entries. Save!

- From another device with a different account (to verify the information in the DB), scan the same barcode again.

Actual results: The last entered information is shown.

Expected results: User is shown both DB records and can select between them.
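I don't know how MyFitnessPal stores foods, so this is only a Python sketch of the expected behaviour: keep a list of entries per barcode instead of a single one. The class names, fields, sample barcode and calorie values are all made up:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FoodEntry:
    name: str
    calories_per_100g: int
    created_by: str


class BarcodeIndex:
    """Hypothetical store that keeps every entry per barcode instead of
    letting the newest one overwrite the rest."""

    def __init__(self):
        self._entries = defaultdict(list)

    def add(self, barcode, entry):
        if entry not in self._entries[barcode]:  # skip exact duplicates
            self._entries[barcode].append(entry)

    def lookup(self, barcode):
        # Return all candidates so the user can pick the right product.
        return list(self._entries.get(barcode, []))


index = BarcodeIndex()
index.add("0000000000000", FoodEntry("Salami, classic", 450, "user_a"))
index.add("0000000000000", FoodEntry("Salami, spicy", 470, "user_b"))
for entry in index.lookup("0000000000000"):
    print(entry.name, entry.calories_per_100g)
```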

Endless Loop Bug in Candy Crush Saga Level 80

Happy New Year everyone. During the holidays I discovered several interesting bugs, which will be revealed on this blog, starting today with a bug in the popular game Candy Crush Saga.

In level 80 one teleport is still open, but the chocolates are blocking the rest. The game has ended, but candies keep flowing through the teleport and the level doesn't exit. My guess is that the game logic is missing a check for whether it will go into an endless loop.

This bug seems to be generic for the entire game. It also pops up on level 137 in the Owl part of the game (recorded by somebody else).
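One common way to add such a check, sketched in Python: remember every board state seen after the level has ended, and stop as soon as one repeats. This assumes the board can be reduced to a hashable value and that the cascade is deterministic. I have no idea how Candy Crush actually represents it. The function name and the step limit are my own choices:

```python
def run_until_stable(state, step, is_finished, max_steps=10_000):
    """Advance a game state until it finishes, repeats, or hits a step limit.

    `state` must be hashable (e.g. a tuple describing the board)."""
    seen = set()
    for _ in range(max_steps):
        if is_finished(state):
            return state, "finished"
        if state in seen:
            return state, "loop detected"  # same board again: it will never end
        seen.add(state)
        state = step(state)
    return state, "step limit reached"  # safety net for very long cascades


# Toy model: a candy cycles through three cells via an open teleport and never clears.
print(run_until_stable(0, lambda cell: (cell + 1) % 3, lambda cell: False))
# (0, 'loop detected')
```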

BlackBerry Z10 is Killing My WiFi Router

A few days ago I resurrected my BlackBerry Z10, only to find out that it kills my WiFi router shortly after connecting to the network. It looks like many people are having the same problem with BlackBerry, but most forum threads don't offer a meaningful solution, so I did some tests.

Everything works fine when WiFi mode is set to either 11bgn mixed or 11n only and WiFi security is disabled.

When using WPA2/Personal security mode and AES encryption the problem occurs regardless of which WiFi mode is used. There is another type of encryption called TKIP but the device itself warns that this is not supported by the 802.11n specification (all my devices use it anyway).

So to recap: the BlackBerry Z10 causes my TP-Link router to die when using WPA2/Personal security with AES encryption. Switching to an open network with MAC address filtering works fine!

I haven't had the time to upgrade the firmware of this router and see if the problem persists. Most likely I'll just go ahead and flash it with OpenWRT.

Speed Comparison of Web Proxies Written in Python Twisted and Go

After I figured out that Celery is rather slow, I moved on to test another part of my environment: a web proxy server. The test here compares two proxy implementations, one written in Python with Twisted and the other in Go. The backend is a simple web server written in Go, which is probably the fastest thing when it comes to serving HTML.

The test content is a snapshot of the front page of this blog taken a few days ago. The system is a standard Lenovo X220 laptop with an Intel Core i7 CPU with 4 cores. The measurement instrument is the popular wrk tool with a custom Lua script that redirects the requests through the proxy.

All tests were repeated several times; only the best results are shown here.

I waited between the tests for all open TCP ports to close.

I also observed the number of open ports (i.e. sockets) using netstat.
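On Linux, netstat gets its TCP socket list from /proc/net/tcp and /proc/net/tcp6. The same per-state counts, which are handy for watching TIME_WAIT pile up, can therefore be collected with a few lines of Python. This is a minimal, Linux-only sketch. The state codes are the kernel's TCP state numbers in hex, and the function names are my own:

```python
from collections import Counter

# The "st" column of /proc/net/tcp holds the kernel's TCP state number in hex.
TCP_STATES = {
    "01": "ESTABLISHED", "02": "SYN_SENT", "03": "SYN_RECV",
    "04": "FIN_WAIT1", "05": "FIN_WAIT2", "06": "TIME_WAIT",
    "07": "CLOSE", "08": "CLOSE_WAIT", "09": "LAST_ACK",
    "0A": "LISTEN", "0B": "CLOSING",
}


def count_tcp_states(proc_net_tcp_text):
    """Count sockets per state in the text of /proc/net/tcp(6)."""
    counts = Counter()
    for line in proc_net_tcp_text.splitlines()[1:]:  # first line is a header
        fields = line.split()
        if len(fields) > 3:
            code = fields[3].upper()
            counts[TCP_STATES.get(code, code)] += 1
    return counts


def read_tcp_state_counts(paths=("/proc/net/tcp", "/proc/net/tcp6")):
    total = Counter()
    for path in paths:
        try:
            with open(path) as f:
                total += count_tcp_states(f.read())
        except FileNotFoundError:  # e.g. IPv6 disabled, or not Linux
            pass
    return total


if __name__ == "__main__":
    print(read_tcp_state_counts().most_common())
```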

Baseline

Using wrk against the web server in Go yields around 30000 requests per second with an average of 2000 TCP ports in use:

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:8000/atodorov.html
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   304.43ms  518.27ms   1.47s    82.69%
    Req/Sec     1.72k     2.45k   17.63k    88.70%
  1016810 requests in 29.97s, 34.73GB read
  Non-2xx or 3xx responses: 685544
Requests/sec:  33928.41
Transfer/sec:      1.16GB

Python Twisted

The Twisted implementation performs at a little over 1000 reqs/sec, with TCP port usage averaging between 20000 and 30000:

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:8080
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   335.53ms  117.26ms 707.57ms   64.77%
    Req/Sec   104.14     72.19   335.00     55.94%
  40449 requests in 30.02s, 3.67GB read
  Socket errors: connect 0, read 0, write 0, timeout 8542
  Non-2xx or 3xx responses: 5382
Requests/sec:   1347.55
Transfer/sec:    125.12MB

Go proxy

First I ran several 30-second tests, and performance was around 8000 req/sec with around 20000 ports used (most of them remain in TIME_WAIT state for a while).

Then I modified proxy.go to make use of all available CPUs on the system and let the test run for 5 minutes.

$ ./wrk -c1000 -t20 -d300s http://127.0.0.1:9090 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
Running 5m test @ http://127.0.0.1:9090
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   137.22ms  437.95ms   4.45s    97.55%
    Req/Sec   669.54    198.52     1.71k    76.40%
  3423108 requests in 5.00m, 58.27GB read
  Socket errors: connect 0, read 26, write 181, timeout 24268
  Non-2xx or 3xx responses: 2870522
Requests/sec:  11404.19
Transfer/sec:    198.78MB

Performance peaked at 10000 req/sec. TCP port usage initially rose to around 30000, but rapidly dropped and stayed around 3000. Both webserver.go and proxy.go were printing the following messages on the console:

2014/11/18 21:53:06 http: Accept error: accept tcp [::]:9090: too many open files; retrying in 1s

Conclusion

There's no doubt that Go is blazingly fast compared to Python, and I'm most likely going to use it further in my experiments. Still, I didn't expect a 3x difference in performance between the web server and the proxy.

Another thing that worries me is the huge number of open TCP ports, which then drops and stays constant over time. I'm also worried by the "too many open files" errors from both the web server and the proxy (maybe a per-process limit on open file descriptors).
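A "too many open files" error is EMFILE: the process has hit its RLIMIT_NOFILE, and every socket counts as an open file. For the Go binaries, the usual fix is to raise the limit in the shell with ulimit -n before starting them. The same system calls are available from Python through the standard resource module (Unix only). Here is a minimal sketch; the function name is my own:

```python
import resource


def raise_open_files_limit(target=None):
    """Raise this process's soft RLIMIT_NOFILE towards the hard limit.

    Returns the (soft, hard) pair in effect afterwards."""
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if target is None:
        target = hard
    if hard != resource.RLIM_INFINITY:
        target = min(target, hard)  # unprivileged processes can't exceed the hard limit
    if (soft != resource.RLIM_INFINITY and target != resource.RLIM_INFINITY
            and target > soft):
        resource.setrlimit(resource.RLIMIT_NOFILE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_NOFILE)


if __name__ == "__main__":
    print("open files limit (soft, hard):", raise_open_files_limit())
```

The limit applies per process and is inherited by children. Raising it in the shell that launches webserver.go and proxy.go therefore affects both servers.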

At the moment I don't know the internal workings of wrk, Go itself or the goproxy library well enough to conclude whether this is a bad thing or expected. I'm eager to hear what others think in the comments. Thanks!

Update 2015-01-27

I have retested with PyPy, but on a different system, so I'm giving all the test results on it as well. /proc/cpuinfo says we have 16 x Intel(R) Xeon(R) CPU E5-2450L 0 @ 1.80GHz CPUs.

Baseline - Go server:

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:8000/atodorov.html
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    15.57ms   20.38ms 238.93ms   98.11%
    Req/Sec     3.55k     1.32k   15.91k    82.49%
  1980738 requests in 30.00s, 174.53GB read
  Socket errors: connect 0, read 0, write 0, timeout 602
  Non-2xx or 3xx responses: 60331
Requests/sec:  66022.87
Transfer/sec:      5.82GB

Go proxy (30 sec):

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:9090 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:9090
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency    68.93ms  718.98ms  12.60s    99.58%
    Req/Sec     1.61k   784.01     4.83k    62.50%
  942757 requests in 30.00s, 32.16GB read
  Socket errors: connect 0, read 26, write 0, timeout 3050
  Non-2xx or 3xx responses: 589940
Requests/sec:  31425.47
Transfer/sec:      1.07GB

Python proxy with Twisted==14.0.2 and pypy-2.2.1-2.el7.x86_64:

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:8080
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   858.75ms    1.47s    6.00s    88.09%
    Req/Sec   146.39    104.83   341.00     54.18%
  85645 requests in 30.00s, 853.54MB read
  Socket errors: connect 0, read 289, write 0, timeout 3297
  Non-2xx or 3xx responses: 76567
Requests/sec:   2854.45
Transfer/sec:     28.45MB

Update 2015-01-27-2

Python proxy with Twisted==14.0.2 and python-2.7.5-16.el7.x86_64:

$ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
Running 30s test @ http://127.0.0.1:8080
  20 threads and 1000 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency   739.64ms    1.58s   14.22s    96.18%
    Req/Sec    84.43     36.61   157.00     67.79%
  49173 requests in 30.01s, 701.77MB read
  Socket errors: connect 0, read 240, write 0, timeout 2463
  Non-2xx or 3xx responses: 41683
Requests/sec:   1638.38
Transfer/sec:     23.38MB

As seen, the Go proxy is slower than the Go server by a factor of 2. The Python proxy is slower than the Go server by a factor of more than 20 under PyPy and about 40 under CPython. These results are similar to the previous ones, so I don't think PyPy makes any significant difference.
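The ratios are easy to recompute from the Requests/sec lines of the 2015-01-27 runs. This short Python snippet uses only those four numbers:

```python
# Requests/sec as reported by wrk in the 2015-01-27 runs above.
results = {
    "Go server": 66022.87,
    "Go proxy": 31425.47,
    "Twisted proxy on PyPy": 2854.45,
    "Twisted proxy on CPython": 1638.38,
}
baseline = results["Go server"]
for name, rps in results.items():
    print(f"{name:26s} {rps:>10,.2f} req/s  {baseline / rps:5.1f}x slower than the Go server")
```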

Proxy Support for wrk HTTP Benchmarking Tool

A few times recently I've seen people using an HTTP benchmarking tool called wrk, so I decided to give it a try. It is a very cool instrument, but it didn't fit my use case perfectly. What I needed was to redirect the connection through a web proxy and measure how much the proxy slows things down compared to hitting the web server directly with wrk. In other words: how fast is the proxy server?

How does a proxy work

I've examined the source code of two proxies (one in Python and another one in Go) and what happens is this:

- The proxy server starts listening on a TCP port

- A client (e.g. the browser) sends the request using an absolute URL (GET http://example.com/about.html)

- Instead of connecting directly to the web server behind example.com the client connects to the proxy

- The proxy server connects to example.com directly, reads the response and delivers it back to the client.
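The steps above can be sketched in Python with only the standard library. This is a minimal illustration, not the code of either proxy I examined. It handles GET only, has no error handling, and buffers the whole response in memory. The handler name, the run() helper and the port are my own choices:

```python
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from urllib.parse import urlsplit

# An opener with an empty ProxyHandler connects to the origin server directly,
# ignoring any http_proxy environment variables.
_DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class ForwardProxyHandler(BaseHTTPRequestHandler):
    """Minimal forward proxy: GET only, no error handling, response buffered."""

    def do_GET(self):
        # A client talking to a proxy sends the absolute URL in the request line,
        # e.g. "GET http://example.com/about.html HTTP/1.1".
        if not urlsplit(self.path).scheme:
            self.send_error(400, "absolute URL required")
            return
        # The proxy connects to the origin server itself and reads the response...
        with _DIRECT.open(self.path, timeout=10) as upstream:
            status = upstream.status
            content_type = upstream.headers.get("Content-Type", "application/octet-stream")
            body = upstream.read()
        # ...and delivers it back to the client.
        self.send_response(status)
        self.send_header("Content-Type", content_type)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(host="127.0.0.1", port=8080):
    ThreadingHTTPServer((host, port), ForwardProxyHandler).serve_forever()
```

Once started with run(), it can be tried with any client that supports HTTP proxies, e.g. curl -x http://127.0.0.1:8080 http://example.com/.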

Proxy in wrk

Luckily wrk supports the execution of Lua scripts so we can make a simple script like this:

init = function(args)
   target_url = args[1] -- proxy needs absolute URL
end

request = function()
   return wrk.format("GET", target_url)
end

Then update your command line to something like this: ./wrk [options] http://proxy:port -s proxy.lua -- http://example.com/about.html

This makes wrk connect to the proxy server but issue GET requests for the URL given after --.

Depending on how your proxy works you may need to add the Host: example.com header as well.

Now let's do some testing.

HackFMI SMS Delivery Powered by Twilio

Ten days ago the regular HackFMI competition was held. This year the organizers tried sending SMS notifications to all participants, powered by Twilio both in terms of infrastructure and cost.

Twilio provided a $20 upgrade code, valid for the next 6 months, for all new accounts. This was officially announced at the opening ceremony of the competition (although at the last possible moment); however, no teams decided to incorporate SMS/Voice into their games. I'm a little disappointed by this.

In terms of software a simple Django app was used. It is by no means production ready but does the job and was quick to write.

A little over 300 messages were sent with number distribution as follows:

- Mtel - 166

- Globul - 86

- Vivacom - 50

The total price for Mtel and Globul messages is roughly the same because sending SMS to Globul via Twilio is twice as expensive. The total sums up to about $25. The HackFMI team used two accounts to send the messages: one using the $20 upgrade code provided by Twilio, and the second one was mine.

Speeding Up Celery Backends, Part 3

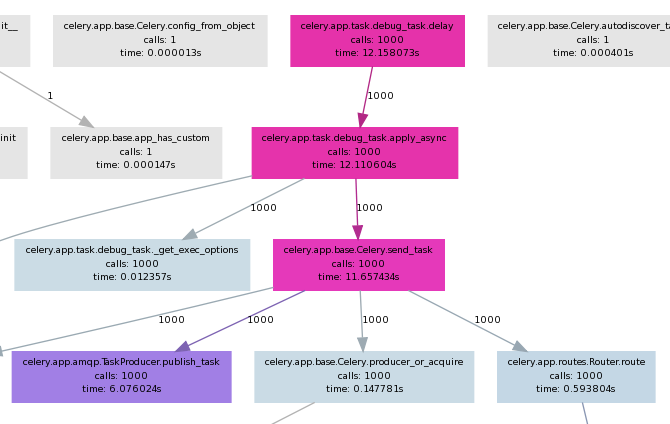

In the second part of this article we've seen how slow Celery actually is. Now let's explore what happens inside and see if we can't speed things up.

I've used pycallgraph to create call graph visualizations of my application. It has the nice feature of also showing execution time and using different colors for fast and slow operations.

Full command line is:

pycallgraph -v --stdlib --include ... graphviz -o calls.png -- ./manage.py celery_load_test

where --include limits the graph to particular Python module(s).

General findings

- The first four calls are where most of the time is spent, as seen in the picture.

- Most of the slowdown seems to come from Celery itself, not the underlying messaging transport Kombu (not shown in the picture).

- celery.app.amqp.TaskProducer.publish_task takes half of the execution time of celery.app.base.Celery.send_task.

- celery.app.task.Task.delay directly executes .apply_async and can be skipped if one rewrites the code.

More findings

In celery.app.base.Celery.send_task there is this block of code:

349        with self.producer_or_acquire(producer) as P:
350            self.backend.on_task_call(P, task_id)
351            task_id = P.publish_task(
352                name, args, kwargs, countdown=countdown, eta=eta,
353                task_id=task_id, expires=expires,
354                callbacks=maybe_list(link), errbacks=maybe_list(link_error),
355                reply_to=reply_to or self.oid, **options
356            )

producer is always None because delay() doesn't pass it as an argument.

I tried passing it explicitly to apply_async() like so:

from djapp.celery import *
# app = debug_task._get_app()  # if not defined in djapp.celery
producer = app.amqp.producer_pool.acquire(block=True)
debug_task.apply_async(producer=producer)

However, this doesn't speed anything up. If we replace the above code block like this:

349 with producer as P:

it blows up on the second iteration because producer and its channel are already None!?!

If you are unfamiliar with the with statement in Python, please read this article. In short, the with statement is a compact way of writing try/finally. The underlying kombu.messaging.Producer class does a self.release() on exit of the with statement.

I also tried removing the with statement and using producer directly, with limited success. While it was not released (was not None) on subsequent iterations, memory usage grew much more and there wasn't any performance boost.
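To illustrate the point about with and try/finally, here is a toy Python class whose __exit__() releases it, loosely mimicking the behaviour described above. ToyProducer is made up for illustration and is not Kombu's Producer:

```python
class ToyProducer:
    """Made-up stand-in: its context manager releases it on exit."""

    def __init__(self):
        self.channel = object()  # pretend this is an open AMQP channel

    def publish(self, message):
        if self.channel is None:
            raise RuntimeError("producer already released")
        return f"published {message!r}"

    def release(self):
        self.channel = None

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.release()  # runs on every exit, like a finally block
        return False    # do not swallow exceptions


producer = ToyProducer()
with producer as p:
    p.publish("task 1")

# The with statement above is roughly equivalent to this try/finally:
producer2 = ToyProducer()
p = producer2.__enter__()
try:
    p.publish("task 1")
finally:
    producer2.__exit__(None, None, None)

# Reusing a released producer fails, like the second iteration above.
try:
    with producer as p:
        p.publish("task 2")
except RuntimeError as err:
    print(err)  # producer already released
```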

Conclusion

The with statement is used throughout both Celery and Kombu, and I'm not at all sure if there's a mechanism for keep-alive connections. My time is limited and I probably won't spend any more time on this problem soon.

Since my use case involves task producers and consumers on localhost, I'll try to work around the current limitations by using Kombu directly (see this gist) with a transport that uses either a UNIX domain socket or a named pipe (FIFO) file.

Speeding up Celery Backends, Part 2

In the first part of this post I looked at a few celery backends and discovered they didn't meet my needs. Why is the Celery stack slow? How slow is it actually?

How slow is Celery in practice

- Queue: 500 000 msg/sec

- Kombu: 14 000 msg/sec

- Celery: 2 000 msg/sec

Detailed test description

There are three main components of the Celery stack:

- Celery itself

- Kombu which handles the transport layer

- Python Queue()'s underlying everything

Running the Queue and Kombu tests for 1 000 000 messages, I got the following results:

- Raw Python Queue: Msgs per sec: 500 000

- Raw Kombu without Celery, where kombu/utils/__init__.py:uuid() is set to return 0:

  - with json serializer: Msgs per sec: 5 988

  - with pickle serializer: Msgs per sec: 12 820

  - with the custom mem_serializer from part 1: Msgs per sec: 14 492

Note: when the test is executed with 100K messages, mem_serializer yields 25 000 msg/sec; beyond that, the performance saturates. I've observed similar behavior with raw Python Queue()'s. I saw some cache buffers being managed internally to avoid OOM exceptions. This is probably the main reason performance becomes saturated over a longer execution.

- Using celery_load_test.py modified to loop 1 000 000 times I got 1908.0 tasks created per sec.

Another interesting thing worth outlining: in the kombu test there are these lines:

with producers[connection].acquire(block=True) as producer:
    for j in range(1000000):

If we swap them, the performance drops down to 3875 msg/sec, which is comparable with the Celery results. Indeed, inside Celery there's the same with producer.acquire(block=True) construct, which is executed every time a new task is published. Next I will be looking into this to figure out exactly where the slowness comes from.
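The effect of swapping the two lines can be reproduced without Kombu. The sketch below uses a made-up ToyPool, which is a queue.LifoQueue of "producers" where each producer is just a queue.Queue. It times acquiring once versus acquiring per message. Absolute numbers depend on the machine and are not comparable to the Kombu figures above:

```python
import queue
import time
from contextlib import contextmanager


class ToyPool:
    """Made-up stand-in for a producer pool; each "producer" is a queue.Queue."""

    def __init__(self, size=1):
        self._free = queue.LifoQueue()
        for _ in range(size):
            self._free.put(queue.Queue())

    @contextmanager
    def acquire(self, block=True):
        producer = self._free.get(block=block)  # take a producer from the pool
        try:
            yield producer
        finally:
            self._free.put(producer)  # and always give it back


def acquire_once(pool, n):
    # Acquire outside the loop, as in the original kombu test.
    with pool.acquire(block=True) as producer:
        for j in range(n):
            producer.put(j)


def acquire_per_message(pool, n):
    # Swapped order: one acquire/release per message, as Celery does per task.
    for j in range(n):
        with pool.acquire(block=True) as producer:
            producer.put(j)


def messages_per_second(publish, n=100_000):
    pool = ToyPool()
    start = time.perf_counter()
    publish(pool, n)
    return n / (time.perf_counter() - start)


for publish in (acquire_once, acquire_per_message):
    print(f"{publish.__name__:20s} {messages_per_second(publish):>12,.0f} msg/sec")
```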

Speeding up Celery Backends, Part 1

I'm working on an application which fires a lot of Celery tasks - the more the better! Unfortunately Celery backends seem to be rather slow :(. Using the celery_load_test.py command for Django I was able to capture some metrics:

- Amazon SQS backend: 2 or 3 tasks/sec

- Filesystem backend: 2000 - 2500 tasks/sec

- Memory backend: around 3000 tasks/sec

Not bad, but I need on the order of 10000 tasks created per sec! The other noticeable thing is that the memory backend isn't much faster than the filesystem one! NB: all of these backends actually come from the kombu package.

Why is Celery slow?

Using celery_load_test.py together with cProfile, I was able to pinpoint some problematic areas:

- kombu/transport/virtual/__init__.py: class Channel.basic_publish() - does self.encode_body() into a base64 encoded string. Fixed with a custom transport backend I called fastmemory, which redefines the body_encoding property:

      @cached_property
      def body_encoding(self):
          return None

- Celery uses json or pickle (or other) serializers to serialize the data. While json yields between 2000-3000 tasks/sec, pickle does around 3500 tasks/sec. Replacing it with a custom serializer that just returns the objects (since we read/write from/to memory) yields about 4000 tasks/sec tops:

      from kombu.serialization import register

      def loads(s):
          return s

      def dumps(s):
          return s

      register('mem_serializer', dumps, loads,
               content_type='application/x-memory',
               content_encoding='binary')

- kombu/utils/__init__.py: def uuid() - generates random unique identifiers, which is a slow operation. Replacing it with return "00000000" boosts performance to 7000 tasks/sec.

It's clear that a constant UUID is not of any practical use, but it serves well to illustrate how much this function affects performance.

Note: Subsequent executions of celery_load_test seem to report degraded performance even with the most optimized transport backend. I'm not sure why this is. One possibility is the random UUID usage in other parts of the Celery/Kombu stack, which drains entropy on the system so that generating more random numbers becomes slower. If you know better, please tell me!

I will be looking for a better understanding of these IDs in Celery and hope to be able to produce a faster uuid() function. Then I'll be exploring the transport stack even more in order to reach the goal of 10000 tasks/sec. If you have any suggestions or pointers please share them in the comments.

Performance Profiling in Python with cProfile

This is a quick reference on profiling Python applications with cProfile:

$ python -m cProfile -s time application.py

The -s time option sorts the output by internal execution time:

         9072842 function calls (8882140 primitive calls) in 9.830 CPU seconds

   Ordered by: internal time

   ncalls  tottime  percall  cumtime  percall filename:lineno(function)
    61868    0.575    0.000    0.861    0.000 abstract.py:28(__init__)
    41250    0.527    0.000    0.660    0.000 uuid.py:101(__init__)
    61863    0.405    0.000    1.054    0.000 abstract.py:40(as_dict)
    41243    0.343    0.000    1.131    0.000 __init__.py:143(uuid4)
   577388    0.338    0.000    0.649    0.000 abstract.py:46(<genexpr>)
    20622    0.289    0.000    8.824    0.000 base.py:331(send_task)
    61907    0.232    0.000    0.477    0.000 datastructures.py:467(__getitem__)
    20622    0.225    0.000    9.298    0.000 task.py:455(apply_async)
    61863    0.218    0.000    2.502    0.000 abstract.py:52(__copy__)
    20621    0.208    0.000    4.766    0.000 amqp.py:208(publish_task)
   462640    0.193    0.000    0.247    0.000 {isinstance}
   515525    0.162    0.000    0.193    0.000 abstract.py:41(f)
    41246    0.153    0.000    0.633    0.000 entity.py:143(__init__)

In the example above (an actual application), the first line is kombu's abstract.py: class Object(object).__init__() and the second one is Python's uuid.py: class UUID().__init__().
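The same report can be produced from inside a program with the standard cProfile and pstats modules. This is handy when only one function is interesting. Here is a minimal sketch; workload() is a placeholder for your own code:

```python
import cProfile
import io
import pstats


def profile_call(func, *args, limit=10, **kwargs):
    """Profile one call and return (result, report), sorted like `-s time`."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args, **kwargs)
    buf = io.StringIO()
    stats = pstats.Stats(profiler, stream=buf)
    stats.sort_stats(pstats.SortKey.TIME).print_stats(limit)  # top `limit` rows
    return result, buf.getvalue()


def workload(n):
    # Placeholder standing in for "application.py".
    return sum(len(str(i)) for i in range(n))


if __name__ == "__main__":
    result, report = profile_call(workload, 100_000)
    print(report)
```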

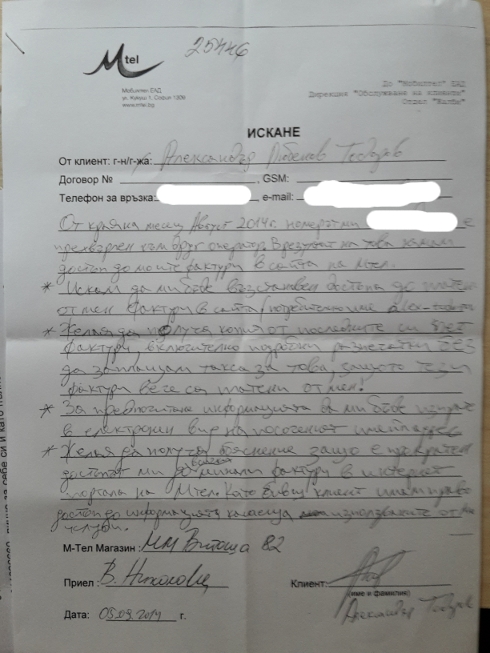

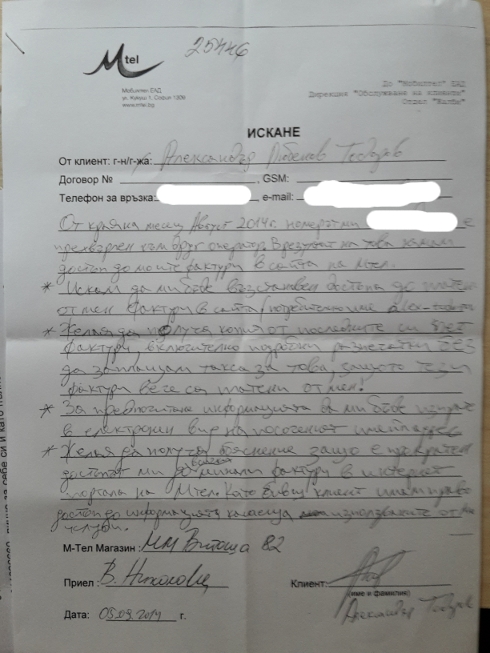

How Mtel, the Biggest Mobile Operator in Bulgaria, Fucked-Up Their Online Invoice System

Prompted by Rado's post about Mtel not fixing a business customer's Internet connection, I want to tell you a story about how this big company totally fucked up their online invoice system. I'll also give you a few more hints about how stupidity reigns supreme even in big companies, especially in Bulgaria.

I've never received a paper invoice for my phone bill and used the website to access any information about my bills, call durations, etc. The problem is that I ported my number to another operator.

Porting a number away from Mtel automatically cuts access to your previous invoices online

The problem in technical terms

This is a tech blog so let's be technical. Users can register at Mtel's website via username and password. If they are customers then they can add their numbers to their profile. Once the number is verified (via SMS code), the user has access to additional services one of which is online invoices and monthly usage reports.

Migrating to another operator "erases" your number from the system, but this is not actually true. All the information is still there, because they can give me printouts if I pay all the extra fees, and simply because no business will voluntarily erase its database records.

The genius who designed the if-number-migrated-then-delete-from-user-profile-and-cut-off-access business process/software implementation is the biggest idiot in the world.
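I don't know how Mtel's system is built, so here is only a hypothetical Python sketch of what I'd expect instead. Porting a number out records an end date on the user's holding of that number rather than deleting it. Access to an invoice then depends on whether the user held the number during that invoice's period. All class and field names are invented:

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class NumberHolding:
    """Hypothetical record: which user held a phone number, and when."""
    user_id: int
    number: str
    held_from: date
    held_until: Optional[date] = None  # None while the user is still a customer


@dataclass
class Invoice:
    number: str
    period_start: date
    period_end: date


def can_view_invoice(holding: NumberHolding, invoice: Invoice) -> bool:
    # Porting out only sets held_until; the holding is never deleted, so
    # invoices from the time the user held the number stay visible.
    if invoice.number != holding.number:
        return False
    if invoice.period_end < holding.held_from:
        return False  # issued before this user had the number
    if holding.held_until is not None and invoice.period_start > holding.held_until:
        return False  # issued to whoever got the number afterwards
    return True
```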

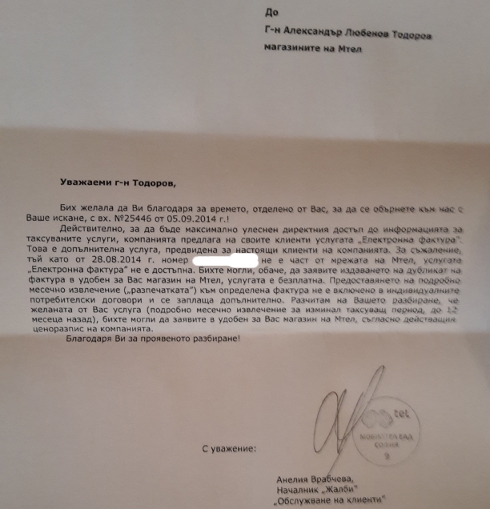

How Mtel handled my complaint

My request for explanation (see below) was answered with a nice letter, basically telling me to piss off. None of my questions were answered. To make things worse they want to charge extra fees for detailed print outs.

What I've asked for:

- To be granted access back to all of my invoices online. Not only did I pay for them, but this is personal information;

- To be given copies of my last 5 invoices, preferably via email;

- To be given explanation why my online access to previous invoices was cut off;

- Mtel to stop doing this and allow access to online invoices for every one of their past and current customers;

I've also told them I'm available to help them fix their system if they don't have the resources to do so (hint, hint: it's an if-then condition or something very close I bet).

A copy of my complaint and the response to it (in Bulgarian only):

Shutdown Your Startup in 7 Steps

A month ago one of my startups, Difio, stopped working forever. This is the story of how to go about shutting down a working web service, not about why it came to this.

Step #1: Disable new registrations

You obviously need to make sure new customers arriving at your web site will not sign up, only to find out later that the service is shutting down.

Disable whatever sign-on/registration system you have in place, but let currently registered users log in as they wish.

Step #2: Disable payments

Difio had paying customers, just not enough of them, and it was based on a subscription model which was renewed automatically without any interaction from the customer.

The first thing I did was to disable all payments for the service. This was quite easy (just a few lines commented out) because Difio used an external payment processor.

The next thing was to go through all subscriptions that were still active and cancel them. This prevented the payment processor from automatically charging the customers the next time their subscription renewal was due.

All subscriptions were charged in advance and, when canceled, were still considered active (due to expire at some later date). Because of this, Difio had to keep operating for at least one month after all subscriptions had been canceled.

Step #3: Notify all customers that you are shutting down

I scheduled this to happen right after the last subscription was canceled. An email to everyone who registered to the website and a blog post should work for most startups. See ours here.

Make sure to provide a gratis period if necessary. Difio had a gratis period of one month after the shutdown announcement.

Step #4: Disable all external triggers

Difio was a complex piece of software which relied on external triggers like web hooks and repetitive tasks executed by cron.

Disabling these will prevent external services or hosting providers from getting errors about your URLs not being accessible. It is just polite to do so.

You may want to keep these operational during the gratis period before the physical shutdown, or disable them straight away. In Difio's case they were left operational because there were customers who had paid in advance and relied on this functionality.

Step #5: Prepare your 'Service Disabled' banner

You will probably want to let people know why something isn't working as it used to (or as expected). A simple page explaining that you're going to shut down should be enough.

Difio required the user to be logged in to see most of the website. This made it very easy to redirect everything to the same page. A few more places were linking to public URLs which were manually rewritten to point to the same 'Service Disabled' page.

It is the same page used previously to redirect new registrations to.
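Difio's actual redirect isn't shown here. As a framework-neutral sketch, a small WSGI middleware can send every request to the 'Service Disabled' page, except requests for that page itself and for static files. The URL paths and the class name are assumptions:

```python
SERVICE_DISABLED_PATH = "/service-disabled/"                # hypothetical URL
ALLOWED_PREFIXES = (SERVICE_DISABLED_PATH, "/static/")      # still served normally


class ServiceDisabledMiddleware:
    """Hypothetical WSGI middleware: redirect everything else to one page."""

    def __init__(self, app, target=SERVICE_DISABLED_PATH, allowed=ALLOWED_PREFIXES):
        self.app = app
        self.target = target
        self.allowed = allowed

    def __call__(self, environ, start_response):
        path = environ.get("PATH_INFO", "/")
        if path.startswith(self.allowed):  # str.startswith accepts a tuple
            return self.app(environ, start_response)
        start_response("302 Found", [("Location", self.target), ("Content-Length", "0")])
        return [b""]
```

Wrapping the existing WSGI application object once (application = ServiceDisabledMiddleware(application)) covers both logged-in pages and public URLs without touching individual views.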

Step #6: Terminate all processing resources

Difio used both AWS EC2 instances and an OpenShift PaaS instance to do its processing. Simply terminating all of them was enough. The only thing left is a couple of static HTML pages behind the domain.

Step #7: Database archival

The last thing you need to do is archive your database. Although the startup is already out of business, you have gathered additional information which may come in handy at a later time.

Difio didn't collect any personal information about its users except email addresses, and didn't store financial information either. This made it safe to just make a backup of the database and leave it lurking around on disk.

However beware if you have collected personal and/or financial information from your customers. You may want to erase/anonymize some of it before doing your backups and probably safeguard them from unauthorized access.
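Difio didn't need this, but here is a sketch of the idea using Python's built-in sqlite3 module. It copies the live database with Connection.backup() and then replaces email addresses in the copy with salted SHA-256 digests. The users(id, email) table is an assumption. Note that a salted hash is pseudonymization rather than true anonymization: anyone holding the salt can confirm a guessed address. Dropping the column entirely is the stronger option:

```python
import hashlib
import sqlite3


def archive_pseudonymized(src: sqlite3.Connection, dest: sqlite3.Connection, salt: bytes) -> None:
    """Copy database `src` into `dest`, then replace e-mail addresses in the
    copy with salted SHA-256 digests. Assumes a hypothetical users(id, email) table."""
    src.backup(dest)  # full online copy; the original database is not modified
    rows = dest.execute("SELECT id, email FROM users").fetchall()
    for user_id, email in rows:
        normalized = email.strip().lower().encode("utf-8")
        digest = hashlib.sha256(salt + normalized).hexdigest()
        dest.execute("UPDATE users SET email = ? WHERE id = ?", (digest, user_id))
    dest.commit()
```

In practice, dest would be a file connection such as sqlite3.connect("archive.sqlite3"), and the salt would be stored separately from the archive.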

That's it, your startup is officially dead now! Let me know if I've missed something in the comments below.

Traction: A Startup Guide to Getting Customers

Many entrepreneurs who build great products simply don't have a good distribution strategy.

Marc Andreessen, venture capitalist

Traction: A Startup Guide to Getting Customers introduces startup founders and employees to the "Bullseye Framework," a five-step process successful companies use to get traction.

This framework helps founders find the marketing channel that will be key to unlocking the next stage of growth.

Too often, startups building a product struggle with traction once they launch. This struggle has startups trying random tactics - some ads, a blog post or two - in an unstructured way that leads to failure. Traction shows readers how to systematically approach marketing, and covers how successful businesses have grown through each of the following 19 channels:

- Viral Marketing

- Public Relations (PR)

- Unconventional PR

- Search Engine Marketing (SEM)

- Social and Display Ads

- Offline Ads

- Search Engine Optimization (SEO)

- Content Marketing

- Email Marketing

- Engineering as Marketing

- Target Market Blogs

- Business Development (BD)

- Sales

- Affiliate Programs

- Existing Platforms

- Trade Shows

- Offline Events

- Speaking Engagements

- Community Building

The book is very easy to read and full of practical advice, which should serve as a starting point and give you more ideas on how to approach a particular distribution channel. It took me two days to read, and I already had some ideas to test before finishing it. My next steps are to apply the principles to my current startup Obuvki 41 Plus and to a future one I have in mind.

To anyone building a startup of any kind I would recommend the following selection of books:

- Hooked: How to Build Habit-Forming Products - to learn how to make products (especially mobile apps) that customers keep using on their own without additional external marketing (review here);

- UX for Lean Startups - lean product development (review here);

- Traction: A Startup Guide to Getting Customers - customer growth development.



Book Review: The 4-Hour Workweek

The 4-Hour Workweek: Escape 9-5, Live Anywhere, and Join the New Rich by Timothy Ferriss is one of my all-time favorite books. The basic idea is to ditch the traditional working environment and work less by relying on more automation.

Whenever you find yourself on the side of the majority, it is time to pause and reflect.

MARK TWAIN

The book starts with a story about the Tango World Championship semifinals in Argentina and poses the question “What on earth would I be doing right now, if I hadn’t left my job and the U.S. over a year ago?” Can you answer this? Keep reading!

Step 1: Definition

Tim defines two groups of people: the Deferrers, who save it all for the end only to find that life has passed them by, and the New Rich. Examples of the New Rich include:

The employee who rearranges his schedule and negotiates a remote work agreement to achieve 90% of the results in one-tenth of the time, which frees him to practice cross-country skiing and take road trips with his family two weeks per month.

The business owner who eliminates the least profitable customers and projects, outsources all operations entirely, and travels the world collecting rare documents, all while working remotely on a website to showcase her own illustration work.

The student who elects to risk it all—which is nothing—to establish an online video rental service that delivers $5,000 per month in income from a small niche of Blu-ray aficionados, a two-hour-per-week side project that allows him to work full-time as an animal rights lobbyist.

The possibilities are endless. What defines the New Rich is their unrestricted mobility and availability of free time! Money alone doesn't count anymore. Its practical value is multiplied by the what, when, where and with whom you do what you do.

From that point of view, earning less money while spending far less time on it is much more powerful than working 80 hours per week for a million dollars.

Step 2: Elimination

One does not accumulate but eliminate. It is not daily increase but daily decrease. The height of cultivation always runs to simplicity.

BRUCE LEE

In this chapter Tim talks about developing selective ignorance of information and about the 80/20 Pareto principle, and gives tips for faster reading and for battling interruptions such as checking your e-mail and smartphone.

Step 3: Automation

Outsourcing and technical automation are the keys here, but there is more. Tim talks about income autopilot: designing your income sources so that they don't consume much of your time and continue to produce income even after the initial time investment.

Think about the following: working a 9-to-5 job yields an income only during office hours. Having written a book yields income whenever a copy is sold, which is while you're asleep and long after the initial time investment required to write the book.

Then you can diversify your income streams and voilà, you're making money automatically :)

Tim also covers the business management side of things. Why become the manager when you can be the owner of the business? It's kind of hard to let virtual assistants run your business and resolve issues for you, but doing so frees up your time, which is more valuable.

Along the way he also explains why, as a business, you should keep prices high! Counterintuitive, isn't it?

This is easier said than done, but I've been working on it for the last couple of years and it's starting to take shape nicely, so there's truth to it.

Step 4: Liberation

This chapter helps you escape the 9-5 office hours through some interesting techniques. It is not only for freelancers like myself but also for regular employees. One of the principles is to ask for forgiveness, not permission (which will be denied anyway).

Another one boils down to:

- Increase company investment into you so that the loss is greater if you quit, e.g. corporate training;

- Prove increased output offsite - call in sick Tuesday to Thursday but continue working. Produce more and leave some sort of digital trail (emails, etc.);

- Prepare the quantifiable business benefit - you need to present remote working as a good business decision and not a personal perk; for example, you've managed to bill more hours to your company's customers. As justification, cite the eliminated commute and fewer distractions from office noise;

- Propose a revocable trial period - plan everything that will be said but play it cool and casual. You want to avoid the impression that remote working will be something permanent (for now). Find a relaxed afternoon and give it a shot!

- Expand remote time by making sure you're most productive on your days out of the office and, if need be, lowering your productivity inside the office a bit. Then give it a shot with a longer trial period or more days working remotely.

There's also another one called the hourglass approach, so named because you use a long proof-of-concept up front to get a short remote agreement and then negotiate back up to full-time out of the office.

I personally had it easier in terms of remote working. Before I became a contractor I had been working with folks in the US to whom it didn't really matter whether I was based in the Czech Republic or in Bulgaria. I was also sick at that time and had an important project to manage, which all played nicely into proving that I can be productive in any location.

Then comes one of my favorite sections, Killing Your Job. Boy, you just have to read this. Lots of people need to read this! Everything you are afraid of that keeps you from quitting your job is total bullshit. There are always options. It might be emotionally difficult, but you won’t starve!

Extended edition

This extended edition of the book comes complete with blog articles and bonus sections like Killing Your BlackBerry.

I’m a 37-year-old Subway franchisee owning and operating 13 stores. Been doing this for seven years. Prior to reading 4HWW I was KING at W4W (translate: work for work’s sake)

Crunched my “always open” workweek into four days and 20 hours. I immediately began taking Mondays OFF, giving me a nice three-day weekend. Tuesday to Friday I work 11 A.M.–4 P.M. (20 hours per week).

I was forced to appraise everything through the 80/20 filter and found that 50% of the 80% was pure crap and the other 50% of the 80% could be done by someone on my payroll.

I still carry portable e-mail but I’ve killed “auto-sync”. Now it’s on a Tues–Fri, 11 A.M.–4 P.M. schedule.

My e-mail autoresponder eliminated 50% of my e-mail within two weeks as people sending me meaningless crap got fed up looking at my autoresponder and stopped including me.

ANDREW, self-employed in the UK


Book Review: How to Win Friends

How to Win Friends & Influence People by Dale Carnegie teaches you how to deal with people. The book briefly explains some easy-to-use principles and why and how they work, and then provides tons of real-life examples behind those principles. This book is a must for everyone, but especially for parents, teachers, folks in sales and management, and business leaders.

I will only highlight the key points. What follows are direct quotes from the book.

Part One: Fundamental Techniques in Handling People

'If You Want to Gather Honey, Don't Kick Over the Beehive' - PRINCIPLE 1: Don't criticise, condemn or complain.

The Big Secret of Dealing with People - PRINCIPLE 2: Give honest and sincere appreciation.

'He Who Can Do This Has the Whole World with Him. He Who Cannot Walks a Lonely Way' - PRINCIPLE 3: Arouse in the other person an eager want.

Part Two: Six Ways to Make People Like You

Do This and You'll Be Welcome Anywhere - PRINCIPLE 1: Become genuinely interested in other people.

A Simple Way to Make a Good First Impression - PRINCIPLE 2: Smile.

If You Don't Do This, You Are Headed for Trouble - PRINCIPLE 3: Remember that a person's name is to that person the sweetest and most important sound in any language.

An Easy Way to Become a Good Conversationalist - PRINCIPLE 4: Be a good listener. Encourage others to talk about themselves.

How to Interest People - PRINCIPLE 5: Talk in terms of the other person's interests.

How to Make People Like You Instantly - PRINCIPLE 6: Make the other person feel important – and do it sincerely.

Part Three: How to Win People to Your Way of Thinking

You Can't Win an Argument - PRINCIPLE 1: The only way to get the best of an argument is to avoid it.

A Sure Way of Making Enemies – and How to Avoid It - PRINCIPLE 2: Show respect for the other person's opinions. Never say, 'You're wrong'.

If You're Wrong, Admit It - PRINCIPLE 3: If you are wrong, admit it quickly and emphatically.

A Drop of Honey - PRINCIPLE 4: Begin in a friendly way.

The Secret of Socrates - PRINCIPLE 5: Get the other person saying 'yes, yes' immediately.

The Safety Valve in Handling Complaints - PRINCIPLE 6: Let the other person do a great deal of the talking.

How to Get Cooperation - PRINCIPLE 7: Let the other person feel that the idea is his or hers.

A Formula That Will Work Wonders for You - PRINCIPLE 8: Try honestly to see things from the other person's point of view.

What Everybody Wants - PRINCIPLE 9: Be sympathetic with the other person's ideas and desires.

An Appeal That Everybody Likes - PRINCIPLE 10: Appeal to the nobler motives.

The Movies Do It. TV Does It. Why Don't You Do It? - PRINCIPLE 11: Dramatise your ideas.

When Nothing Else Works, Try This - PRINCIPLE 12: Throw down a challenge.

Part Four: Be a Leader: How to Change People Without Giving Offense or Arousing Resentment

If You Must Find Fault, This Is the Way to Begin - PRINCIPLE 1: Begin with praise and honest appreciation.

How to Criticise – and Not Be Hated for It - PRINCIPLE 2: Call attention to people's mistakes indirectly.

Talk About Your Own Mistakes First - PRINCIPLE 3: Talk about your own mistakes before criticising the other person.

No One Likes to Take Orders - PRINCIPLE 4: Ask questions instead of giving direct orders.

Let the Other Person Save Face - PRINCIPLE 5: Let the other person save face.

How to Spur People On to Success - PRINCIPLE 6: Praise the slightest improvement and praise every improvement. Be 'hearty in your approbation and lavish in your praise'.

Give a Dog a Good Name - PRINCIPLE 7: Give the other person a fine reputation to live up to.

Make the Fault Seem Easy to Correct - PRINCIPLE 8: Use encouragement. Make the fault seem easy to correct.

Making People Glad to Do What You Want - PRINCIPLE 9: Make the other person happy about doing the thing you suggest.


SNAKE is no Longer Needed to Run Installation Tests in Beaker

This is a quick status update for one of the pieces of Fedora QA infrastructure and mostly a note to self.

Previously, to control the kickstart configuration used during installation in Beaker, one had to either modify the job XML in Beaker or use SNAKE (bkr workflow-snake) to render a kickstart configuration from a Python template.

SNAKE presented challenges when deploying and using beaker.fedoraproject.org and is virtually unmaintained.

I present the new bkr workflow-installer-test, which uses Jinja2 templates to generate a kickstart configuration when provisioning the system. It is already available in beaker-client-0.17.1.

The templates can use all Jinja2 features (as far as I can tell), so you can create very complex ones. You can even include snippets from one template in another if required. The standard context passed to the template is:

- DISTRO - if specified, the distro name

- FAMILY - as returned by Beaker server, e.g. RedHatEnterpriseLinux6

- OS_MAJOR and OS_MINOR - also taken from the Beaker server, e.g. OS_MAJOR=6 and OS_MINOR=5 for RHEL 6.5

- VARIANT - if specified

- ARCH - CPU architecture like x86_64

- any parameters passed to the test job with --taskparam. They are processed last and can override previous values.
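The override order described above can be sketched in plain Python. This is not the bkr client's code: the function name, the distro_info dictionary standing in for the Beaker server's answer, and the NAME=VALUE parsing of --taskparam values are assumptions for illustration.

```python
STANDARD_KEYS = ("DISTRO", "FAMILY", "OS_MAJOR", "OS_MINOR", "VARIANT", "ARCH")


def build_template_context(distro_info, taskparams):
    """Build a kickstart template context (hypothetical helper).

    distro_info: dict standing in for the values returned by the Beaker server.
    taskparams:  raw NAME=VALUE strings as passed with --taskparam.
    """
    context = {}
    for key in STANDARD_KEYS:
        value = distro_info.get(key)
        if value is not None:  # DISTRO and VARIANT only when specified
            context[key] = value
    # Task parameters are processed last, so they override earlier values.
    for param in taskparams:
        name, sep, value = param.partition("=")
        if not sep or not name:
            raise ValueError(f"expected NAME=VALUE, got {param!r}")
        context[name] = value  # everything after the first "=" is the value
    return context


ctx = build_template_context(
    {"FAMILY": "RedHatEnterpriseLinux6", "OS_MAJOR": 6, "OS_MINOR": 5, "ARCH": "x86_64"},
    ["ARCH=i386", "LANG=en_US.UTF-8"],
)
```

Here ARCH ends up as "i386" because the task parameter wins. With Jinja2 installed, such a dictionary would then be handed to a template via jinja2.Template(template_text).render(**ctx).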

Installation-related tests in fedora-beaker-tests have been updated with ks.cfg.tmpl templates to use with this new workflow.

This workflow can also return boot arguments for the installer if needed. If there are any, they should be defined in a {% block kernel_options %}{% endblock %} block inside the template. A simpler variant is to define a comment line that starts with ## kernel_options:
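For the simpler comment-line variant, a client only needs to scan the rendered kickstart for that prefix. The sketch below is my own illustration, not the bkr implementation; it assumes the options follow the prefix on the same line and that the first matching line wins. The option values in the example are arbitrary.

```python
KERNEL_OPTIONS_PREFIX = "## kernel_options:"


def kernel_options_from_comment(rendered_ks):
    """Return the text after '## kernel_options:', or None if absent (hypothetical helper)."""
    for line in rendered_ks.splitlines():
        stripped = line.strip()
        if stripped.startswith(KERNEL_OPTIONS_PREFIX):
            # Everything after the prefix, without surrounding whitespace.
            return stripped[len(KERNEL_OPTIONS_PREFIX):].strip()
    return None


ks = "## kernel_options: inst.sshd ip=dhcp\ninstall\nreboot\n"
options = kernel_options_from_comment(ks)
```

Because the line is a kickstart comment, it does not affect the installation itself; it only carries data for whoever provisions the system.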

There are still a few issues which need to be fixed before beaker.fedoraproject.org can be used by the general public. I will be writing another post about that, so stay tuned.


Tip: Collecting Emails - Webhooks for UserVoice and WordPress.com

In my practice I like to use webhooks to integrate auxiliary services with my internal processes or businesses. One of these is the collection of emails. In this short article I'll show you an example of how to collect email addresses from the comments of a WordPress.com blog and from the UserVoice feedback/ticketing system.

WordPress.com

For your WordPress.com blog, navigate from the Admin Dashboard to Settings -> Webhooks and add a new webhook with action comment_post and fields comment_author, comment_author_email. A simple Django view that handles the input is shown below.

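from django.http import HttpResponse
from django.views.decorators.csrf import csrf_exempt


@csrf_exempt  # WordPress.com cannot send Django's CSRF token
def hook_wp_comment_post(request):
    # Only POST requests carry webhook data
    if request.method != "POST":
        return HttpResponse("Not a POST\n", content_type='text/plain', status=405)

    # Ignore anything that is not the comment_post action configured above
    hook = request.POST.get("hook", "")
    if hook != "comment_post":
        return HttpResponse("Go away\n", content_type='text/plain', status=403)

    # Everything before the first space is the first name, the rest is the last name
    name = request.POST.get("comment_author", "").strip()
    first_name, _, last_name = name.partition(' ')

    details = {
        'first_name': first_name,
        'last_name': last_name,
        'email': request.POST.get("comment_author_email", ""),
    }
    store_user_details(details)

    return HttpResponse("OK\n", content_type='text/plain', status=200)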
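To unit-test the field handling without a running Django project, the same logic can live in a plain function that the view calls. details_from_wp_comment() is a hypothetical name; it accepts any mapping with a .get() method, so a dict works in tests and request.POST works in the view.

```python
def details_from_wp_comment(post):
    """Extract subscriber details from a comment_post webhook payload.

    Hypothetical helper. Returns None when the payload is not a
    comment_post hook, so the caller can answer with 403.
    """
    if post.get("hook", "") != "comment_post":
        return None
    name = post.get("comment_author", "").strip()
    # Split on the first space only: "Ada King Lovelace" -> "Ada", "King Lovelace"
    first_name, _, last_name = name.partition(" ")
    return {
        "first_name": first_name,
        "last_name": last_name,
        "email": post.get("comment_author_email", ""),
    }
```

The view then shrinks to checking the request method, calling this function and passing a non-None result to store_user_details().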

UserVoice

For UserVoice, navigate to Admin Dashboard -> Settings -> Integrations -> Service Hooks and add a custom web hook for the New Ticket notification. Then use code like this:

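import json

from django.http import HttpResponse
from django.views.decorators.csrf import csrf_exempt


@csrf_exempt  # UserVoice cannot send Django's CSRF token
def hook_uservoice_new_ticket(request):
    # Only POST requests carry webhook data
    if request.method != "POST":
        return HttpResponse("Not a POST\n", content_type='text/plain', status=405)

    # Only handle the New Ticket notification
    event = request.POST.get("event", "")
    if event != "new_ticket":
        return HttpResponse("Go away\n", content_type='text/plain', status=403)

    # The ticket arrives as a JSON document in the "data" field
    try:
        data = json.loads(request.POST.get("data", ""))
        email = data['ticket']['contact']['email']
    except (ValueError, KeyError, TypeError):
        return HttpResponse("Bad payload\n", content_type='text/plain', status=400)

    store_user_details({'email': email})

    return HttpResponse("OK\n", content_type='text/plain', status=200)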

store_user_details() is a function which handles the email/name received in the webhook, possibly adding them to a database or doing anything else with them.
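As one hypothetical implementation, the sketch below keeps subscribers in a SQLite table. The table name subscribers and the DB_PATH location are my assumptions. It lower-cases the address and fills in names only when a webhook provides them, so a UserVoice ticket (email only) and a WordPress comment (email plus name) from the same person end up in one row.

```python
import sqlite3

DB_PATH = "subscribers.db"  # hypothetical location


def store_user_details(details, conn=None):
    """Insert or update a subscriber; returns False if there is no email."""
    email = details.get("email", "").strip().lower()
    if not email:
        return False  # nothing useful to store
    own_conn = conn is None
    if own_conn:
        conn = sqlite3.connect(DB_PATH)
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "CREATE TABLE IF NOT EXISTS subscribers ("
                "email TEXT PRIMARY KEY, first_name TEXT, last_name TEXT)"
            )
            # One row per address, whichever webhook saw it first
            conn.execute("INSERT OR IGNORE INTO subscribers (email) VALUES (?)", (email,))
            # Column names come from this fixed tuple, never from user input
            for column in ("first_name", "last_name"):
                value = details.get(column, "")
                if value:
                    conn.execute(
                        f"UPDATE subscribers SET {column} = ? WHERE email = ?",
                        (value, email),
                    )
    finally:
        if own_conn:
            conn.close()
    return True
```

The optional conn argument exists mainly for tests (for example an in-memory database); the views above call it with details only.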

I find webhooks extremely easy to set up and develop, and I use them whenever the service provider supports them. What other services do you use webhooks for? Please share your story in the comments.


Software Design Vol. 1

I'm starting a new series where I will share my thoughts about software design, usability and other related topics, with examples of things I like or dislike. I've written about the topic before, so consider this post a sequel. I'm starting with a couple of examples from the mobile world. Please use the comments to tell me if there is something that you particularly like or dislike in software or hardware design (and apologies to my readers for not being able to post more frequently lately).

BlackBerry Camera Burst Feature vs. Android

The BlackBerry 10 Camera's burst mode is well made in my opinion. It is capable of taking thousands of pictures and saving them to disk without interruption. This lets me take pictures of fast-moving objects like flash lights.

On the other hand, I have access to an HTC One smartphone with Android. The burst mode feature there is particularly crappy. It shoots between 20 and 50 photos (I haven't counted exactly how many), then takes a second to flush everything to disk, then pops up a question asking the user to select the best one and possibly delete the rest. If you don't want any of this, just tap the back button to return to shooting mode.

BlackBerry Hub

Hub is another well-designed application which integrates all of your accounts and messaging into the core of the OS, although other mobile OSes have a similar feature as well, if I'm not mistaken. I just mention it because it is easy and comfortable to use.

BlackBerry Memory Management

BlackBerry 10 OS memory management is particularly crappy from the end user's point of view. I'm not sure whether this is due to QNX being a real-time OS or just the Java stack keeping stuff in memory.

After some usage my development Z10 begins experiencing out-of-memory issues (it has 1 GB of RAM vs. 2 GB in production hardware). This leads to apps crashing, web pages that used to open fine failing to load and, most annoying of all, the camera taking pictures with random color spots.

After restarting the device everything is back to normal :(.

BlackBerry Date and Time Synchronization

I also hate this one a lot. It appears that the software is designed to synchronize the date and time automatically over the Internet, and without a reliable connection it fails miserably. After a reboot (or, even worse, battery removal) the device starts with a fixed date and time somewhere in the past. If it fails to synchronize or takes too long, several things happen:

- The BlackBerry Hub application (email and other accounts) loads first, which causes all sorts of warnings about invalid SSL certificates. These are annoying in their own right because Cancel only dismisses the warning until the next connection retry, and the warnings block access to the main menu for manual configuration;

- All images taken by the Camera app are saved to disk with the wrong date, which makes you think they are gone. I found this by accident while scrolling way back in my history looking for something unrelated.

Multiple File Selection

I haven't tested this explicitly on Android or other mobile devices, but on BlackBerry the user needs to tap each individual object to select it. My guess is this is similar on all devices utilizing a touch screen. However, such an interface makes it damn hard to select a thousand files for deletion (the unusable results from the camera burst mode feature).

Luckily the Z10 (and possibly other models) has a shell application with cp, mv and rm -f commands.


Using D-Link DAP-1320 Wireless Range Extender with MAC Filtering

Recently I purchased a wireless range extender like the one shown here. It had trouble connecting to the upstream Wi-Fi router because the router used MAC filtering instead of password security. Luckily there was a forum thread which helped me figure it out.

DAP 1320 uses two MAC addresses

Everything was working just fine with MAC filtering disabled on the upstream router but failed miserably when it was enabled. At first I thought the MAC address printed on the DAP-1320 packaging was wrong.

It turned out the device has two addresses. The one on the packaging is 70:62:B8:07:0B:76, and it didn't matter whether it was enabled or disabled in the router settings. The second MAC is used when forwarding connections through the router. The two addresses differ only in the second hex digit, which is 2 higher in the second address (70 vs. 72). So I enabled 72:62:B8:07:0B:76 in the router settings and everything worked like a charm.
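The change from 70 to 72 is exactly the 0x02 bit of the first octet, which IEEE 802 defines as the locally administered bit. My guess, based on this single device, is that the extender sets that bit to build the address it uses when forwarding traffic. The hypothetical helper below computes that address from the one on the packaging so you know what to add to the router's allow list. Verify the result against the router's list of connected clients.

```python
def extender_mac(label_mac):
    """Derive the forwarding MAC from the label MAC (hypothetical helper).

    Assumption from one observed unit: only the locally administered bit
    (0x02 in the first octet) differs between the two addresses.
    """
    octets = label_mac.split(":")
    if len(octets) != 6:
        raise ValueError(f"not a MAC address: {label_mac!r}")
    first = int(octets[0], 16) | 0x02  # set the locally administered bit
    return ":".join([f"{first:02X}"] + [o.upper() for o in octets[1:]])


forwarding_mac = extender_mac("70:62:B8:07:0B:76")
```

Setting the bit rather than adding 2 gives the same answer here and avoids carrying into the neighbouring hex digit if the bit were already set.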

Other findings

Unfortunately, a device connected to the extender's network bypasses the MAC filtering employed on the upstream Wi-Fi router. As much as I dislike using passwords for Wi-Fi, I had to configure one for the extended network.

I've also found that when you save the device's configuration file to your hard drive, it comes in a line-by-line base64-encoded format. Pretty awkward.
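If you want to read that file, decoding each line separately should work. This is a sketch under the assumption that every non-empty line is an independently base64-encoded UTF-8 string; I haven't verified the exact layout, so treat a decode error as a sign the assumption doesn't hold for your firmware.

```python
import base64
import binascii


def decode_config_lines(raw):
    """Decode a config dump where each line is its own base64 string.

    Assumption: lines are independently encoded UTF-8 text; blank lines are skipped.
    """
    decoded = []
    for lineno, line in enumerate(raw.splitlines(), start=1):
        line = line.strip()
        if not line:
            continue
        try:
            # validate=True rejects characters outside the base64 alphabet
            decoded.append(base64.b64decode(line, validate=True).decode("utf-8"))
        except (binascii.Error, UnicodeDecodeError) as exc:
            raise ValueError(f"line {lineno} is not base64-encoded text") from exc
    return decoded
```

Typical use is decode_config_lines(open(path).read()) on the saved file, followed by a quick read through the plain-text settings.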

Another pleasant (but not entirely surprising) finding was that D-Link included a written acknowledgment of using open source components and an offer to provide source code upon request.

