Summary of Evolve Digital

Yesterday I visited an event organized by the Atlantic Club of Bulgaria, which officially marked the launch of a national digital alliance in Bulgaria. This is part of a European Commission initiative called the Grand Coalition for Digital Jobs, which aims to address the shortage of qualified professionals working in Information and Communication Technologies (ICT) in Europe.

The first part consisted of a few short keynotes, including one from the Deputy Prime Minister, followed by a memorandum signing and a group photo. In the keynotes (and afterwards) the emphasis was on the lack of relevant ICT skills and the estimated need for 1 million digital workers in the EU alone by 2020.

The next two sessions were panel discussions. The first one presented the local chapter of the Digital Coalition in Bulgaria.

The goals of the coalition are to:

- help more people master more digital skills (not only as developers, but also in using social media properly, creating content, etc.)

- enable more people to choose digital jobs

- help local talents present themselves on the global scene

How it (supposedly) works:

- initiate its own ideas and propose them for implementation (to the ICT industry and the broad public, I guess)

- stimulate learning through doing, learning by example using real use cases

- help (and possibly sponsor) outside projects and ideas

Then the companies supporting the coalition pledged how they are going to help. The most interesting news from this panel was the announcement of a new HP data center in Sofia, which will also be used as a training lab accessible to students.

The second panel was about education and how to bridge the gap! The interesting points were:

- The Ministry of Education and Science admits it has finally realized that our educational system and curricula lag behind current trends and that concrete steps are needed to boost education and scientific research;

- Samsung will open their innovation lab at Sofia University to outside students as well;

- We need to pay more attention to content and to teacher training, because teachers also have a skills gap while young children do not;

I particularly liked what Prof. Petar Kenderov from the Institute of Mathematics and Informatics at the Bulgarian Academy of Sciences said: in the outside world children are constantly sharing and communicating with each other, while at school (and to some extent at university) they are expected not to communicate, not to share and to keep quiet. He emphasized the value of learning through experimentation vs. learning through reading and writing, i.e. the way things work today vs. the old way of teaching.

Another obvious thing was that otherwise competing companies (e.g. Microsoft, Oracle, SAP, VMware) were working together to fix some of the problems mentioned. However, I'm a bit skeptical that large multinational companies will be flexible enough to bring about positive change. I'd bet on independent organizations like HackBulgaria and Svetlin Nakov's Software University, or on initiatives by local Bulgarian companies like the already proven Telerik Academy.

Here's the entire program of the event, for anyone interested:

“EVOLVE DIGITAL” 10th June 2014 (Tuesday)

10:00 – 10:10 Opening: Gergana Passy, Digital Champion Bulgaria

10:10 – 10:20 Daniela Bobeva, Deputy Prime-Minister of the Republic of Bulgaria

10:20 – 10:30 Constantijn van Oranje, Head of Cabinet of Vice-President of EC Neelie Kroes

10:30 – 10:40 Jonathan Murray, Director, Digital Europe; Special guest: Sandi Češko, Founder & President, Studio Moderna

10:40 – 10:50 Memorandum Signing and Group Photo

10:50 – 11:00 Pause

11:00 – 12:15 Digital Coalition Bulgaria
Moderator: Boyan Benev, Founder, Forward.li
- Rennie Popcheva, CEO, Digital Coalition Bulgaria
- Boyko Iaramov, Co-founder & Chief Process Officer, Telerik
- Marta Poslad, Senior Policy Advisor, CEE Google
- Petar Ivanov, General Manager, Microsoft Bulgaria
- Iravan Hira, General Manager, Hewlett-Packard Bulgaria
- Atanas Dobrev, CEO, VIVACOM
- Danny Gooris, Senior Manager, Oracle Academy
- Dimitar Dimitrov, Relationship Business Development, Lenovo
- Stamen Kochkov, Vice-president, SAP Labs Bulgaria, Chairman of BASSCOM

12:15 – 12:30 Coffee Break

12:30 – 13:45 Education in the Digital Era
Moderator: Boyan Benev, Founder, Forward.li
- Ivan Krastev, Deputy Minister, Ministry of Education and Science, Republic of Bulgaria
- George Stoytchev, Executive Director, Open Society Institute Sofia
- Sabina Stirb, Public Affairs & Corporate Citizenship Manager, Samsung
- Prof. Petar Kenderov, Institute of Mathematics and Informatics, Bulgarian Academy of Sciences
- Dr. George Sharkov, Director, European Software Institute - Center Eastern Europe
- Elena Marinova, President, Musala Soft, Chair of the Educational Commission, Bulgarian Association of Information Technologies
- Svetlin Nakov, Founder, Software University

13:45 – Lunch in Restaurant “At the Eagles” 11 Dyakon Ygnatiy str., in the building of Ministry of transport, 18th Floor

Bulgaria Web Summit Report

Last week ended with the Bulgaria Web Summit. The event was very big this year, with 4 halls and 600 visitors. I moderated the so-called JavaScript hall and will concentrate on what happened there.

This is what my hall looked like at around 10:00; it stayed pretty much the same during the entire day.

Speakers in hall Rhodopi were (in order of appearance):

- Delian Delchev, talking about the JavaScript revolution and how this language has become the most widely used programming language in the world.

- Haralan Dobrev with some tips about development workflows, git, continuous integration and unit testing.

- Yoga for Geeks during the lunch break.

- Boyan Dzhumakov on home automation and the Internet of Things; he demonstrated his bedside lamp controlled via a light sensor and an Arduino.

- Neven Boyanov, continuing with hardware topics by comparing Atmel, Arduino and Raspberry Pi and showing more examples of small programmable devices.

- Angel Todorov from Infragistics with a very interesting talk about JavaScript instrumentation for performance analysis. A very cool presentation and you should definitely check out his cheetah.js framework.

- Krasimir Tsonev with AbsurdJS - a JavaScript library with superpowers. Teaser: Krasimir is also publishing a book called Node.js Blueprints.

- Vasil Kolev with a talk about security and web service design and how most of the web is doing it wrong :).

Unfortunately there is no video or audio recording available. If you find some of the talks interesting and would like more info about them, let me know. I will ask the presenters to share their slides or give more details where possible.

Don't worry if you missed the Web Summit in Sofia. There will be another one in the autumn, held in Veliko Tarnovo. For more info please subscribe to the newsletter.

DigitalK Day 2 Report

Continuing to report on the last few events that took place in Sofia this week.

Day #2 of DigitalK started with the presentation by Piotr Jas of BlaBla Car. What I really liked about it (picture TBA) was one particular graph depicting the various types of travel (time before travel vs. cost of travel) and mapping different industries (e.g. air travel) and competitors. The image clearly showed a blank space, which is exactly the market segment where BlaBla Car has positioned itself.

A very practical and easy to use tool for entrepreneurs in my opinion.

From the rest of the keynotes I'd single out two in particular: Ivan Hernandez' Disruptive Innovation and the future of Digital Transformation and The future of Tech and Communities by Tim Röhrich.

I liked them because both were very inspirational, although they didn't tell you anything you haven't heard before. They were basically reinforcing the spirit of the event.

In the small hall there was a workshop called How to turn a good idea into a successful start-up, led by Daniela Neumann, which wasn't what I expected. She talked about the early cycle of idea/customer validation and I don't remember any particular example being shown. Frankly, most people (myself included) had heard about this before and were quite bored. Not many of them stayed till the end.

The evening continued with the already traditional Silicon Drinkabout in Sofia, which hosted many of the conference attendees. I only stopped by briefly because I had to prepare for the Bulgaria Web Summit the next day!

Twilio meetup and DigitalK 2014 Day 1

The conference season (this week) is officially open! So here's my report about it.

It started by accident last evening with a warm-up event sponsored by Twilio at betahaus Sofia. I had the pleasure of a long chat with Lisa from Twilio over a few beers.

Today was Day #1 of DigitalK, the leading SEE technology event. I also attended last year and must say that this time it is bigger. The main hall was totally packed. The WiFi had improved since last year but still sucked; I had more success using 3G instead.

The most interesting part of Day #1 was the mini Seedcamp session which presented 10 startups. In order of appearance they are

Viblast, Stepsss and Talkie were the 3 finalists who also presented in the main hall. Needless to say, the coolest one from a technology point of view is Viblast, a peer-to-peer video streaming platform built on WebRTC. Their presentation was strong and they look very strong on the business side as well. I wish them good luck.

Stepsss is essentially a shoe sole with sensors, paired with smartphone apps to give runners more info and feedback. I like it because they are a hardware company, although there's a lot of competition in this space. I'd like to beta test their product and see what happens when I go dancing all night long :).

Talkie is an educational app which helps children learn new languages. I had the pleasure of seeing it in action before the actual presentation. The design is very cool and the app does seem usable and complete. However, I don't see much of a technological challenge here and I'm not sure how they will deal with the strong competition in the ed-tech space. We'll have to wait and see.

Of the rest, ScaleWhale and Smartoken seem interesting, but I really failed to understand how things will be organized on the technology side. Both ideas are run by one-person teams, which is a recipe for disaster (judging from my own experience).

Tomorrow is Day #2 of DigitalK and on Saturday I'm moderating one of the rooms at Bulgaria Web Summit. Expect more reports soon.

I Want to Be a Robot - Book Review: The Singularity Is Near

I've just finished reading The Singularity Is Near: When Humans Transcend Biology and all I have to say is "I want to be a robot"!

This is one of the books that took me the longest to read. It's a hard read because it is full of technical and scientific details, cites a great deal of facts and research, and leads your mind into fields which deserve separate books of their own.

The purely technical side of the book makes it a bit hard to follow, as you need a good understanding of computing technology and concepts and have to keep in mind what was said in previous chapters.

Ray Kurzweil starts with historical data about evolution and technological progress. He postulates his theory of technology evolution, called "The Law of Accelerating Returns", and lists a great many examples to show that evolutionary processes are not linear but exponential.
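To make the linear vs. exponential distinction concrete, here is a minimal Python sketch. It is my own illustration, not an example taken from the book: one process adds a constant amount at each step, the other doubles at each step (the simplest form of exponential growth). The function names are hypothetical.

```python
def linear(steps):
    """Each step adds the same constant amount (1)."""
    value = 0
    for _ in range(steps):
        value += 1
    return value


def exponential(steps):
    """Each step doubles the previous value, starting from 1."""
    value = 1
    for _ in range(steps):
        value *= 2
    return value


for n in (10, 20, 30):
    print(n, linear(n), exponential(n))
```

After 30 steps the linear process has reached 30, while the doubling one is past a billion (2^30 = 1,073,741,824).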

The next two chapters explain what the computational capacity of the human brain is, how to achieve it and how to reverse engineer the brain itself. Think about 3-D molecular computing, quantum computing, brain imaging and scanning :)

Increased computing capacity and a better understanding of the human brain (together with the general progress of science and technology in the meantime) will lead to the three revolutions which will make the Singularity possible: Genetics, Nanotechnology and Robotics (Strong AI). Ray gives many examples of research that is already well under way or will become reality in the next 10 to 30 years.

Next, the book lists the impacts of advancing technology and of the Singularity itself: on the human body and brain, on longevity, on warfare, on work, learning and play, and on the Cosmos.

Because the Singularity is not a single event but rather many events which happen in parallel and gradually over time, we will have a hard time defining what being human means. What is human, what is consciousness and where the line is drawn are questions which need to be considered. Ultimately the human race will become (predominantly) non-biological.

How do you deal with dangers and shortcomings in technology? As a QA engineer I have seen software fail in spectacular ways. How about machine failures? Now how about nanobots in your bloodstream or strong AI gone wild? Ray explains some of the possible threats and proposals to overcome them. His point is that the benefits of advanced technology will far outweigh the dangers and that we will be able to design our defense systems before anything else that may threaten our existence.

The last chapter presents examples of criticism and explains why they are incorrect, something I've never seen in a book before.

Epilogue

Human Centrality. A common view is that science has consistently been correcting our overly inflated view of our own significance. Stephen Jay Gould said, "The most important scientific revolutions all include, as their only common feature, the dethronement of human arrogance from one pedestal after another of previous convictions about our centrality in the cosmos."

But it turns out that we are central, after all. Our ability to create models - virtual realities - in our brains, combined with our modest-looking thumbs, has been sufficient to usher in another form of evolution: technology. That development enabled the persistence of the accelerating pace that started with biological evolution. It will continue until the entire universe is at our fingertips.

Book Review - Last 3 Months

Hello folks, this is my book list for the past 3 months. It ranges from tech and start-up books to Japanese short stories and children's books. Here's my quick review.

Lean UX

Lean UX: Applying Lean Principles to Improve User Experience is the second book I've read on the subject, after UX for Lean Startups.

It was published before UX for Lean Startups and is much more about principles than practical methods. Honestly, I'm not sure I took any real value out of it. Maybe if I had read these two books in reverse order it would have been better.

The Hacienda - How Not to Run a Club

The Hacienda: How Not to Run a Club by Peter Hook is one of my favorites. It covers a great deal of music and clubland history, depicts crazy parties and describes the adventure of owning one of the most popular nightclubs in the world, all while struggling to make a buck and pouring countless pounds into a black hole.

The irony is that The Hacienda became a legendary place only after it had closed down and was later demolished.

A must read for anyone who is considering business in the entertainment industry or wants to read a piece of history. My favorite quote of the book:

Years after, Tony Wilson found himself sitting opposite Madonna at dinner.

‘I eventually plucked up the courage to look across the table to Madonna and ask, “Are you aware that the first place you appeared outside of New York was our club in Manchester?”

‘She gave me an ice-cold stare and said, “My memory seems to have wiped that.”’

Simple Science Experiments

Simple Science Experiments by Hans Jürgen Press is a very old book listing 200 experiments which you can do at home using household materials. It is great for teaching basic science to children. The book is very popular and is available in many languages and editions - just search for it.

I used to have this as a kid and was able to purchase the 1987 Bulgarian edition at an antique bookstore in Varna two months ago.

Ronia, the Robber's Daughter

I decided to experiment a little bit and found Ronia, the Robber's Daughter.

It's a children's book telling the story of two kids whose fathers are rival robbers. The book is an easy read (2-3 hrs before bedtime) with stories of magic woods, dwarfs and scary creatures mixed with human emotions and the good vs. bad theme.

Japanese Short Stories

I've managed to find a 1973 compilation of Japanese short stories translated into Bulgarian. Also one of my favorite books.

If I'm not mistaken these are classic Japanese authors, nothing modern or cutting edge. Most of the action happens during the early 1900s as far as I can tell. What impresses me most is the detailed description of nature and surroundings in all of the stories.

The Singularity Is Near

I've also started The Singularity Is Near: When Humans Transcend Biology by Ray Kurzweil.

It's a bit hard to read because the book is full of technical details about genetics, nanotechnology, robotics and AI.

Ray depicts a bright future where humans will transcend their biological limitations and essentially become pure intelligence. Definitely a good read and I will tell you more about it when I finish it.

What have you been reading since January? I'd love to see your book list or connect on Goodreads.

OpenSource.com article - 10 steps to migrate your closed software to open source

Difio is a Django-based application that keeps track of packages and tells you when they change. Difio was created as closed software, then I decided to migrate it to open source ....

Read more at OpenSource.com

BTW, I wonder whether Telerik will share their experience of opening up the core of their Kendo UI framework in the webinar tomorrow.

Screen Magnifier and Smart Phone!

Hey guys, I want to replace my laptop with a smartphone.

The problem is the desk working environment. One solution is to buy an external display.

The other one is to use a screen magnifier, as shown in the picture.

Ultimately it should be possible to use any 3x Fresnel lens magnifier to turn your 5" phone display into a 15" laptop-sized one, which is perfect for me!
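As a rough sanity check of the 3x figure, here is a Python sketch that treats the Fresnel sheet as an ideal thin converging lens. That is an assumption: real Fresnel lenses add distortion, especially near the edges. With the phone inside the focal length f, the lens forms an upright virtual image magnified m = f / (f - d_o), so the phone has to sit at d_o = f(m - 1)/m from the lens. The 300 mm focal length is a hypothetical value, not the spec of any particular product.

```python
def object_distance(focal_length_mm, magnification):
    """Lens-to-phone distance (mm) that gives the requested magnification.

    Ideal thin converging lens with the object inside the focal length,
    which gives an upright virtual image: m = f / (f - d_o),
    hence d_o = f * (m - 1) / m.
    """
    if magnification <= 1:
        raise ValueError("a magnifier needs a magnification greater than 1")
    return focal_length_mm * (magnification - 1) / magnification


def virtual_image_distance(focal_length_mm, object_distance_mm):
    """Distance (mm) from the lens to the virtual image, on the phone's side."""
    return focal_length_mm * object_distance_mm / (focal_length_mm - object_distance_mm)


FOCAL_LENGTH_MM = 300.0  # hypothetical focal length of the Fresnel sheet
MAGNIFICATION = 3
PHONE_DIAGONAL_IN = 5

d_o = object_distance(FOCAL_LENGTH_MM, MAGNIFICATION)
print(d_o)                                           # 200.0
print(virtual_image_distance(FOCAL_LENGTH_MM, d_o))  # 600.0
print(MAGNIFICATION * PHONE_DIAGONAL_IN)             # 15
```

With these numbers the phone sits 200 mm behind the lens and its image appears 600 mm away, three times larger. How big the screen actually feels also depends on where your eye is, so treat this only as an order-of-magnitude check.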

The magnifiers used for reading, like this one, are cheap and portable but don't look very good around the edges.

None of the phone screen magnifiers on Amazon ship to my country, so I don't have any experience with them, although they appear to be exactly what I need. If anyone has such a device at hand, I'd love to hear how well it works for you. Please comment below.

Howto: Django Forms with Dynamic Fields

Last week at HackFMI 3.0 one team had to display a form with a multiple-choice selection for filtering, where the filter keys are read from the database. They solved the problem by simply building the required HTML inside the view. I wondered whether this could be done with Django forms.

>>> from django import forms
>>>
>>> class MyForm(forms.Form):
...     pass
...
>>> print(MyForm())
>>> MyForm.__dict__['base_fields']['name'] = forms.CharField()
>>> MyForm.__dict__['base_fields']['age'] = forms.IntegerField()
>>> print(MyForm())
<tr><th><label for="id_name">Name:</label></th><td><input id="id_name" name="name" type="text" /></td></tr>
<tr><th><label for="id_age">Age:</label></th><td><input id="id_age" name="age" type="number" /></td></tr>
>>>
>>>
>>> POST = {'name' : 'Alex', 'age' : 0}
>>> f = MyForm(POST)
>>> print(f)
<tr><th><label for="id_name">Name:</label></th><td><input id="id_name" name="name" type="text" value="Alex" /></td></tr>
<tr><th><label for="id_age">Age:</label></th><td><input id="id_age" name="age" type="number" value="0" /></td></tr>
>>> f.is_valid()
True
>>> f.is_bound
True
>>> f.errors
{}
>>> f.cleaned_data
{'age': 0, 'name': u'Alex'}

So if we query all names from the database, we can build up the form class by adding a BooleanField for each object, using the object's primary key as the field name.

>>> MyForm.__dict__['base_fields']['123'] = forms.BooleanField()
>>> print(MyForm())
<tr><th><label for="id_123">123:</label></th><td><input id="id_123" name="123" type="checkbox" /></td></tr>
>>> f = MyForm({'123' : True})
>>> f.is_valid()
True
>>> f.cleaned_data
{'123': True}

HackFMI 3.0 Post-mortem

The fourth HackFMI, now a traditional hackathon, was held this weekend. It is over and I still can't wrap my head around what happened during these 3 nights. Here are bits of code, beer, energy drinks and fun as I saw them.

A big thanks goes to Lilly and Misha, who were running around like robots managing the day-to-day activities. Kudos to the rest of the team as well, because they've established HackFMI as a tradition and people are already asking when the next event is going to be.

What

This year the topic was Hack for Charity, with the immediate goal of raising money for a sick kid. Most of the teams worked toward these goals; only a few worked on slightly different (broadly defined) charity ideas.

My favorite two apps were Blago-darenie and SMShelp although they were not developed with Django.

Blago-darenie is a simple WordPress site listing donation campaigns. Instead of directly donating money one needs to promise something (an action, an object, etc) and put a price tag on it. When the promise is claimed the two parties donate the money to that particular campaign and exchange the promised goods or services. I've promised to cook dinner involving tasty meatballs from horse meat and serve one of my wine bottles to whoever decides to donate 25 EUR. (disclaimer: I'm a good cook and love wine more than code).

SMShelp is an aggregator of SMS donation campaigns, which are very popular in the country but lack a central repository. A simple web site, a live Android app and a wonderful design secured the team first place! BTW, Team 8 was Adrian and Vihren, who took part in all previous editions as well.

TODO

I'm glad both organizers and teams listened to some of my feedback, but there are still things to improve. The most obvious one is that a quick communication channel to all the teams is needed. Facebook and email just didn't cut it.

I already have a couple of quick ideas involving Django and Twilio's cloud services. Let me take a few more days to get them clear before going any further.

/me

I found myself mentoring as much as I could: helping folks with Django or just with general ideas and concepts, serving cake provided by Chaos Group, opening stacks of energy drinks, and going door to door to let teams know the deployment and presentation details for the last day.

What surprised me a bit was that there were many new teams (some involving previous contestants) who were very diverse in their technological background. This presented a challenge to some of them as they had to use a technology which nobody on the team knew very well and had to make a working app with that. One team even changed from Python to PHP in the middle of day 2.

On Sunday I was mostly helping the HackFMI team with whatever I could, checking on my favorite teams from time to time and giving access to cloud servers left and right to people who needed them.

Unfortunately I missed the Grand Finale due to unexpected hardware problems involving big iron and 150 liters of loose water :(. See you next time!

Spoiler: How to Open Source Existing Proprietary Code in 10 Steps

We've heard about companies opening up their proprietary software products; this is hardly news nowadays. But have you wondered what it is like to migrate production software from closed to open source? I would like to share my own experience of going open source, as seen from behind the keyboard.

Difio was recently open sourced and the steps to go through were:

- Simplify - remove everything that can be deleted

- Create self contained modules aka re-organize the file structure

- Separate internal from external modules

- Refactor the existing code

- Select license and update copyright

- Update 3rd party dependencies to latest versions and add requirements.txt

- Add README and verbose settings example

- Split difio/ into its own git repository

- Test stand alone deployments on fresh environment

- Announce
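The license and copyright step is mostly mechanical. Here is a minimal Python sketch of one way to do it, assuming you want the same short comment header at the top of every .py file. The header text, its placeholders and the add_license_header() helper are hypothetical, not what Difio actually uses. Files that start with a shebang or an encoding line would need extra care.

```python
from pathlib import Path

# Hypothetical header; replace the placeholders with your own details.
HEADER = (
    "# Copyright (c) <year> <copyright holder>\n"
    "# Licensed under <license name>, see LICENSE for details.\n"
)


def add_license_header(root, header=HEADER):
    """Prepend `header` to every .py file under `root` that lacks it.

    Returns the list of modified files. Running it twice is harmless:
    files that already start with the header are skipped.
    """
    changed = []
    for path in sorted(Path(root).rglob("*.py")):
        text = path.read_text(encoding="utf-8")
        if text.startswith(header):
            continue
        path.write_text(header + text, encoding="utf-8")
        changed.append(path)
    return changed
```

For example, add_license_header("difio/") would return the files it touched, so the change is easy to review before committing.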

Do you want to know more? Use the comments and tell me what exactly! I'm writing a longer version of this article so stay tuned!

Beware of Django default Model Field Option When Using datetime.now()

Beware if you are using code like this:

models.DateTimeField(default=datetime.now())

i.e. passing a function's return value as the default option for a model field in Django. The expression is evaluated only once, when the module containing the model is imported (typically at application start), and the value is not updated later. The most common scenario is DateTime fields which default to now(). The correct way is to pass the callable itself:

models.DateTimeField(default=datetime.now)
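The difference is easy to demonstrate without Django. The ToyField class below is a hypothetical stand-in, not Django's API. However, it resolves defaults the same way Django's Field.get_default() does: a callable default is called every time a value is needed, while anything else is returned as-is.

```python
import time
from datetime import datetime


class ToyField:
    """Hypothetical stand-in for a model field with a `default` option."""

    def __init__(self, default):
        self.default = default

    def get_default(self):
        # Callables are invoked on every call; plain values are returned unchanged.
        return self.default() if callable(self.default) else self.default


frozen = ToyField(default=datetime.now())  # datetime.now() runs once, right here
fresh = ToyField(default=datetime.now)     # the function itself is stored

first = frozen.get_default()
time.sleep(0.05)
print(frozen.get_default() == first)  # True: the same timestamp every time

first = fresh.get_default()
time.sleep(0.05)
print(fresh.get_default() > first)    # True: a new timestamp on each call
```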

I hit this issue on a low-volume application which uses cron to collect its own metrics by calling an internal URL. The app was running as a WSGI app and I wondered why I got records with duplicate dates in the DB. A more detailed (correct) example follows:

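from datetime import datetime

from django.db import models


def _strftime():
    # Called for each new Metrics instance created without an explicit value
    return datetime.now().strftime('%Y-%m-%d')


class Metrics(models.Model):
    key = models.IntegerField(db_index=True)
    value = models.FloatField()
    # Pass the function itself (no parentheses) so each new object gets a fresh timestamp
    added_on = models.DateTimeField(db_index=True, default=datetime.now)
    added_on_as_text = models.CharField(max_length=16, default=_strftime)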

Difio also had the same bug but didn't exhibit the problem because all objects with default date-time values were created on the backend nodes which get updated and restarted very often.

For more info read this blog.

For general info on Django, please check out Django books on Amazon.

Positive Biological Effects of Open Source on Humans

Recently I watched a talk by Simon Sinek about leadership. He talks about Endorphins, Dopamine, Serotonin and Oxytocin and how they make us feel and act in particular ways. Then I thought: maybe that's why working in the open source field always felt great and natural to me. Maybe we humans are programmed to follow the open source way!

This article scratches the surface where body chemistry and open source intersect. I hope it will help both volunteers and community managers gain insight into the driving forces in our bodies and how they relate to the open source world, and prompt further exploration. By seeking to better understand the positive effects and avoid the negative ones, we can become better contributors and leaders, which ultimately helps our communities.

Endorphins

Endorphins stand for endurance. Their job is to mask physical pain, and it has been suggested that they have evolutionary roots because they helped early humans survive. Athletes experience them as the so-called runner's high.

In the open source world one may work on a feature or task for hours and hours without feeling exhausted. The task itself keeps you going and excited. This is endorphins going through your brain.

In software one may experience an endorphin rush during public release days for example. For large projects like Fedora the release process includes many steps and may take several hours. During all that time the release engineer is usually available regardless of their native time zone.

Effects of endorphins could potentially increase the likelihood of injury or extreme exhaustion, as pain sensation could be more easily ignored. Work and rest cycles need to be properly balanced.

Dopamine

This is the feeling we get when we achieve our goals or find something we were looking for. Dopamine helps us get things done! This is why we're told to write down our goals and then cross them off. It makes people more productive.

Getting dopamine through open source is very easy - all you need to do is fix a bug, then another one, and another one, and another one ... After every task you complete the body gets a small dopamine fix. "Release early, release often ..." and you get your fix :).

Dopamine however is highly addictive and destructive if unbalanced. It has the same negative effects as any other addiction - alcohol, drugs, etc. Be aware of that and don't fall for the performance trap.

Endorphins and Dopamine are so-called selfish chemicals: you can get them without external help. The next two are the social chemicals.

Serotonin

It is responsible for feelings of pride and status and assessing social rank. Serotonin is produced when you are recognized for achievements by the open source community or credited by somebody (e.g. Johnny Bravo mentioned a great idea on IRC today).

As a contributor, one may work on items which will help one get recognition, but ultimately this is not for you to decide. However, practice shows that credit and recognition are relatively easy to get in the open source world, provided one has contributed to making the project and the community better in some way.

In software this is being granted commit rights to a repository, being in the top spot of some metrics, having your blog read by other members or simply people asking for help or what you think about some topic.

Serotonin is considered the leadership chemical. As one becomes a leader recognized by the community there's a catch - the more your status goes up the more work you have to do. The more people recognize you as the leader the more they expect you to sacrifice yourself in case it all goes Pete Tong. If you are not ready to step up find a more suitable place in the community instead.

Oxytocin

Oxytocin is responsible for feelings of love, trust and friendship. It makes us feel safe. It is also very good for the body because it makes us healthier, boosts our immune system, increases ability to solve problems and increases creativity.

One way to get Oxytocin is through physical touch, e.g. a handshake. This is probably one of the reasons beer gatherings are so popular among open source developers. Working digitally, we need a way to reinforce human bonds in our communities. Knowing the person on the other end of the wire ultimately makes us feel safer. If you are in open source, just go to that conference or local beer bash you wanted to go to - it is good for you (but don't get drunk).

Another way to get Oxytocin is by performing or witnessing acts of human generosity. This comes naturally in the open source world, where people give up their free time and energy to work towards a shared goal. Just by working in an open source environment you get all that goodness.

The best thing about Oxytocin is that it is not addictive and slowly builds up in the body. The bad side is that it takes a while to build up. This is why you have to stay a little longer in open source before it starts feeling safe and welcoming.

Cortisol

The last chemical Simon talks about is Cortisol. It is bad, very bad. It will crash your body. Cortisol means stress. It is designed to keep humans (and animals) alive by hyper tuning our senses in case of danger. Trouble is you are not supposed to have it in your body for long periods of time because it shuts down non-essential systems to deliver that extra energy.

Luckily most open source projects are not stressful and, I think, can be considered safe places to work. In the end one can always shift to another role or move to another project if things become too pressing.

By committing to help another member or to perform a service to the community, our bodies get all the good stuff and beat the negative. Service to a community is exactly what open source does! See, humans are programmed to live and work the open source way!


Happy April Fools Day

Happy April Fools Day everyone! Here are a few stories that I find particularly amusing.

Top 5 Pranks Game Developers Pulled on Players - now that's what I call dedication. #1 is definitely worth a peek :)

April Fools Pranks for Developers or office workers! This is pure evil: it messes with the default CSS settings on your computer.

What other developer-related pranks did you find?


I Need an App to Connect With My Facebook Page Fans

The biggest problem with Facebook is the sheer number of news items which are filtered out if users are not actively engaged with them. For a page admin/business owner this means Facebook does a poor job of reaching my fans and potential customers unless I keep paying them money! I need two basic features to solve this problem.

Contact All Users App aka Newsletter

I need to be able to message my fan base like a newsletter with the following requirements in mind:

- Users have already liked the page, don't make them sign-up or follow anything else;

- Preferably use Facebook messages instead of email;

- Let them unsubscribe from the newsletter without going away/blocking the page;

The first one is straightforward: I've already put some money into promoting my page and collecting fans. No need for more hurdles to get started. For reference, out of 500 users none has actually subscribed to the page. This gives you an idea of how likely they are to subscribe to newsletters and such. They click LIKE and forget about it.

The second one depends on the target audience. I have the feeling that my target audience reads Facebook messages much more than email.

My primary use case of this will be to send new offers and validation queries to the target audience in order to tailor the content and business to them.

Weekly Digest App

Looking at my Facebook page stats it is clear that only promoted content gets a higher reach. This includes both content paid for and content posted during periods where the page itself had an active advertisement.

This is no coincidence, I think. When the page is promoted to a new audience, people browse the available tabs, explore and like pictures, and scroll down to see some of the older content. Once a person becomes a page fan, they fall into the previously described trap (the content filter) and don't see much of the page activity.

I need an application which will aggregate the content from the last week and send it to everyone. This app needs to:

- Be able to configure the digest period/cut-off days and when to send the digest;

- Allow users to unsubscribe or alter their digest preferences;

- Bonus feature: exclude content which the specific person has already seen or reacted to;

The primary use case is to alert users to content they may have missed.
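Once the page posts are available, the selection logic for such a digest is simple. The sketch below assumes the posts have already been fetched somehow; how to get them from the Facebook API is exactly the open question here, so no API calls are shown. The `Post` record, its fields and the `seen_by` set are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Post:
    """Hypothetical page post record; field names are illustrative only."""
    post_id: str
    created: datetime
    message: str
    seen_by: set = field(default_factory=set)  # ids of fans who saw or reacted to it


def build_digest(posts, fan_id, now, period_days=7):
    """Return posts from the last `period_days` that `fan_id` has not seen, oldest first."""
    cutoff = now - timedelta(days=period_days)
    fresh = [p for p in posts if cutoff <= p.created <= now]
    # Bonus feature: skip content the fan already saw or reacted to
    unseen = [p for p in fresh if fan_id not in p.seen_by]
    return sorted(unseen, key=lambda p: p.created)
```

The digest period maps to `period_days`; when to send it would be a scheduling concern outside this function.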

Where to next

I am actively looking for such apps but haven't found any yet. When time allows, I will look at the Facebook API to see if this is at all possible to implement.

Any suggestions?


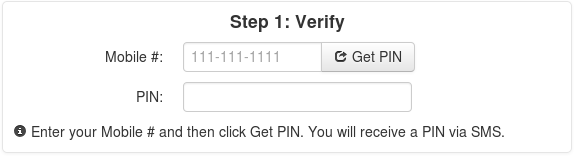

SMS PIN Verification with Twilio and Django

This is a quick example of SMS PIN verification using Twilio cloud services and Django which I did for a small site recently.

The page template contains the following HTML snippet:

```html
<input type="text" id="mobile_number" name="mobile_number" placeholder="111-111-1111" required>
<button class="btn" type="button" onClick="send_pin()"><i class="icon-share"></i> Get PIN</button>
```

and a JavaScript function utilizing jQuery:

```javascript
function send_pin() {
    $.ajax({
        url: "{% url 'ajax_send_pin' %}",
        type: "POST",
        data: {
            mobile_number: $("#mobile_number").val(),
            // Django's CSRF middleware rejects POST requests without this token
            csrfmiddlewaretoken: "{{ csrf_token }}"
        }
    })
    .done(function(data) {
        alert("PIN sent via SMS!");
    })
    .fail(function(jqXHR, textStatus, errorThrown) {
        alert(errorThrown + ' : ' + jqXHR.responseText);
    });
}
```

Then create the following views in Django:

```python
import random

from django.conf import settings
from django.contrib import messages
from django.core.cache import cache
from django.http import HttpResponse
from django.shortcuts import redirect, render
from twilio.rest import Client  # twilio >= 6.0 (older releases used TwilioRestClient)

from .forms import OrderForm  # the site's own order form, not shown here


def _get_pin(length=5):
    """ Return a numeric PIN with length digits """
    # SystemRandom uses the OS entropy source, which is preferable for secrets
    return random.SystemRandom().randint(10**(length-1), 10**length - 1)


def _verify_pin(mobile_number, pin):
    """ Verify a PIN is correct """
    stored_pin = cache.get(mobile_number)
    # a missing or expired PIN must never match
    return stored_pin is not None and pin == stored_pin


def ajax_send_pin(request):
    """ Sends SMS PIN to the specified number """
    mobile_number = request.POST.get('mobile_number', "")
    if not mobile_number:
        return HttpResponse("No mobile number", content_type='text/plain', status=400)

    pin = _get_pin()
    # store the PIN in the cache for later verification
    cache.set(mobile_number, pin, 24*3600)  # valid for 24 hrs

    client = Client(settings.TWILIO_ACCOUNT_SID, settings.TWILIO_AUTH_TOKEN)
    message = client.messages.create(
        body="%s" % pin,
        to=mobile_number,
        from_=settings.TWILIO_FROM_NUMBER,
    )
    return HttpResponse("Message %s sent" % message.sid, content_type='text/plain', status=200)


def process_order(request):
    """ Process orders made via web form and verified by SMS PIN. """
    form = OrderForm(request.POST or None)
    if form.is_valid():
        try:
            pin = int(request.POST.get("pin", "0"))
        except ValueError:
            pin = None  # non-numeric input; rejected by _verify_pin
        mobile_number = request.POST.get("mobile_number", "")
        if _verify_pin(mobile_number, pin):
            form.save()
            return redirect('transaction_complete')
        messages.error(request, "Invalid PIN!")

    # show the form on GET, on form errors and after a wrong PIN
    return render(request, 'order.html', {'form': form})
```

PINs are numeric only and are stored in the cache instead of the database, which I think is simpler and less expensive in terms of I/O operations.
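To show what the cache does in these views without a Django project at hand, here is a minimal pure-Python sketch: a PIN is stored per mobile number with an expiry time and checked later. `PinStore` and its methods are hypothetical names, a plain dict stands in for Django's cache, and unlike the views it also discards a PIN after one successful use.

```python
import secrets
import time


class PinStore:
    """Hypothetical in-memory stand-in for a cache with per-key expiry."""

    def __init__(self, ttl_seconds=24 * 3600, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock   # injectable so expiry can be tested
        self._data = {}      # mobile_number -> (pin, expires_at)

    def issue(self, mobile_number, length=5):
        """Generate a numeric PIN with `length` digits and remember it."""
        low = 10 ** (length - 1)
        pin = low + secrets.randbelow(9 * low)  # uniform in [low, 10**length - 1]
        self._data[mobile_number] = (pin, self.clock() + self.ttl)
        return pin

    def verify(self, mobile_number, pin):
        """Return True once for a correct, unexpired PIN; the PIN is then discarded."""
        entry = self._data.get(mobile_number)
        if entry is None:
            return False
        stored_pin, expires_at = entry
        if self.clock() >= expires_at:
            del self._data[mobile_number]  # expired: behave like a cache miss
            return False
        if pin != stored_pin:
            return False
        del self._data[mobile_number]      # one-time use
        return True
```

Injecting the clock keeps the expiry logic testable without sleeping for 24 hours.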


How Do You Test Thai Scalable Fonts

Recently I wrote about testing fonts. I finally managed to get an answer from the authors of thai-scalable-fonts.

What is your approach for testing Fonts-TLWG?

It's not automated test. What it does is generate PDF with sample texts at several sizes (the waterfall), pangrams, and glyph table. It needs human eyes to investigate.

What kind of problems is your test suite designed for?

- Shaping

- Glyph coverage

- Metrics

We also make use of fontforge features to make spotting errors easier, such as:

- Show extremas

- Show almost vertical/horizontal lines/curves

Theppitak Karoonboonyanan, Fonts-TLWG
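The waterfall part of such a proof sheet is easy to generate. As a rough illustration only: Fonts-TLWG produces a PDF, while this sketch swaps in a plain HTML page so it needs nothing beyond the standard library. The function name, the default sizes and the sample text are assumptions.

```python
import html


def waterfall_html(font_family, sample, sizes=(8, 10, 12, 16, 24, 36, 48)):
    """Return an HTML page showing `sample` in `font_family` at each point size."""
    family = html.escape(font_family)
    text = html.escape(sample)
    rows = [
        '<p style="font-family: \'%s\'; font-size: %dpt">%d pt: %s</p>' % (family, size, size, text)
        for size in sizes
    ]
    return (
        '<!DOCTYPE html>\n<html><head><meta charset="utf-8"></head><body>\n'
        + "\n".join(rows)
        + "\n</body></html>\n"
    )
```

Writing `waterfall_html("Garuda", "สวัสดี")` to a file and opening it in a browser with the font installed gives a quick visual check; as the authors say, it still needs human eyes.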


Reducing AWS Cloud Costs - Real Money Example

Last month Amazon cut EBS storage prices by 50%. This, combined with shrinking the EBS root volume size and moving /tmp to instance storage, allowed me to reduce the EBS-related costs behind Difio by more than half. Following are the real figures from my AWS bill.

EBS costs for Difio were gradually rising with every new node added to the cluster and with increased package processing (resulting in more I/O):

- November 2013 - $7.38

- December 2013 - $10.55

- January 2014 - $11.97

January 2014:

EBS

- Snapshot data stored: $0.095 per GB-month × 9.052 GB-Mo = $0.86

- Provisioned storage: $0.10 per GB-month × 101.656 GB-Mo = $10.17

- I/O requests: $0.10 per 1 million I/O requests × 9,405,243 IOs = $0.94

Total: $11.97

In February one new system was added to process additional requests (cluster nodes run as spot instances), and there was an increased number of temporary instances (although I haven't counted them) while I was restructuring AMI internals to accommodate the open source version of Difio. My assumption (based on historical data) is that this would have driven EBS costs alone up to around $15 per month.

After implementing the minimal improvements described above, and with Amazon halving the prices, the bill looks like this:

February 2014:

EBS

- Snapshot data stored: $0.095 per GB-month × 8.668 GB-Mo = $0.82

- Provisioned storage: $0.05 per GB-month × 58.012 GB-Mo = $2.90

- I/O requests: $0.05 per 1 million I/O requests × 5,704,482 IOs = $0.29

Total: $4.01
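Each line item is simply rate × usage, rounded to the cent, with I/O billed per million requests. A few lines of Python reproduce both monthly totals from the figures above, which is handy for projecting the bill for other volume sizes. The `ebs_bill` function is only an illustration, and it assumes AWS rounds each line item half-up to the cent, which matches these two bills.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def ebs_bill(snapshot_rate, snapshot_gb_mo, storage_rate, storage_gb_mo,
             io_rate_per_million, io_requests):
    """Return (snapshot, storage, io, total) in dollars, each line rounded to the cent.

    Pass rates and volumes as strings so Decimal keeps them exact.
    """
    items = [
        Decimal(snapshot_rate) * Decimal(snapshot_gb_mo),
        Decimal(storage_rate) * Decimal(storage_gb_mo),
        Decimal(io_rate_per_million) * Decimal(io_requests) / Decimal(1_000_000),
    ]
    rounded = [item.quantize(CENT, rounding=ROUND_HALF_UP) for item in items]
    return (*rounded, sum(rounded))


jan = ebs_bill("0.095", "9.052", "0.10", "101.656", "0.10", 9405243)
feb = ebs_bill("0.095", "8.668", "0.05", "58.012", "0.05", 5704482)
print(jan[-1], feb[-1])  # 11.97 4.01
```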

Explanation

Snapshot data stored is the volume of snapshots (AMIs, backups, etc.) which I have. This is fairly constant.

Provisioned storage is the volume of EBS storage provisioned for running instances (e.g. root file system, data partitions, etc.). This was reduced mainly by shrinking the root volumes. (Previously I used larger root volumes to get a bigger /tmp.)

I/O requests is the number of I/O requests associated with your EBS volumes. As far as I understand Amazon doesn't charge for I/O related to ephemeral storage. Moving /tmp from EBS to instance storage is the reason this was reduced roughly by half.

Where To Next

I've reduced the root volumes back to the 8 GB default, but there is still room for improvement because the AMI is quite minimal; shrinking the root volumes further will bring the largest savings. Another thing is the still relatively high rate of I/O that touches EBS volumes, though I haven't investigated where it comes from.


How Do You Test Fonts

Previously I mentioned testing fonts but had no idea how it is done. The authors Khaled Hosny of Amiri Font and Steve White of GNU FreeFont provided valuable insight and material for further reading. I asked them:

- What is your approach for testing?

- What kind of problems is your test suite designed for?

Here's what they say:

Currently my test suite consists of text strings (or lists of code points) and expected output glyph sequences and then use HarfBuzz (through its hb-shape command line tool) to check that the fonts always output the expected sequence of glyphs, sometimes with the expected positioning as well. Amiri is a complex font that have many glyph substitution and positioning rules, so the test suite is designed to make sure those rules are always executed correctly to catch regressions in the font (or in HarfBuzz, which sometimes happens since the things I do in my fonts are not always that common).

I think Lohit project do similar testing for their fonts, and HarfBuzz itself has a similar test suite with a bunch of nice scripts (though they are not installed when building HarfBuzz, yet[1]).

Recently I added more kinds of tests, namely checking that OTS[2] sanitizes the fonts successfully as this is important for using them on the web, and a test for a common mistakes I made in my feature files that result in unexpected blank glyphs in the fonts.

Khaled Hosny, Amiri Font
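Khaled's approach of comparing hb-shape output against expected glyph sequences can be sketched in a few lines of Python. This assumes `hb-shape` is on the PATH; with `--no-positions` and `--no-clusters` it prints a bracketed, `|`-separated list of glyph names such as `[alef|lam]`. The test-case format and the helper names are hypothetical, not Amiri's actual test suite.

```python
import subprocess


def parse_hb_shape(output):
    """Turn hb-shape output like '[alef|lam]' into ['alef', 'lam']."""
    body = output.strip()
    if body.startswith("[") and body.endswith("]"):
        body = body[1:-1]
    return body.split("|") if body else []


def shape(font_path, text):
    """Run hb-shape and return the glyph name sequence (positions and clusters omitted)."""
    result = subprocess.run(
        ["hb-shape", "--no-positions", "--no-clusters", font_path, text],
        capture_output=True, text=True, check=True,
    )
    return parse_hb_shape(result.stdout)


def run_cases(font_path, cases, shaper=shape):
    """Return (text, expected, actual) for each case whose glyphs differ from the expectation."""
    failures = []
    for text, expected in cases:
        actual = shaper(font_path, text)
        if actual != expected:
            failures.append((text, expected, actual))
    return failures
```

An empty list from `run_cases` means every substitution rule under test still fires; a non-empty one points at a regression in either the font or HarfBuzz.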

The answer is complicated. I'll do what I can to answer.

First, the FontForge application has a "verification" function which can be run from a script, and which identifies numerous technical problems.

FontForge also has a "Find Problems" function that I run by hand.

The monospaced face has special restrictions, first that all glyphs of non-zero width must be of the same width, and second, that all glyphs lie within the vertical bounds of the font.

Beside this, I have several other scripts that check for a few things that FontForge doesn't (duplicate names, that glyph slots agree with Unicode code within Unicode character blocks).

Several tests scripts have yet to be uploaded to the version control system -- because I'm unsure of them.

There is a more complicated check of TrueType tables, which attempts to find cases of tables that have been "shadowed" by the script/language specification of another table. This is helpful, but works imperfectly.

ALL THAT SAID,

In the end, every script used in the font has to be visually checked. This process takes me weeks, and there's nothing systematic about it, except that I look at printout of documents in each language to see if things have gone awry.

For a few documents in a few languages, I have images of how text should look, and can compare that visually (especially important for complex scripts.)

A few years back, somebody wrote a clever script that generated images of text and compared them pixel-by-pixel. This was a great idea, and I wish I could use it more effectively, but the problem was that it was much too sensitive. A small change to the font (e.g. PostScript parameters) would cause a small but global change in the rendering. Also the rendering could vary from one version of the rendering software to another. So I don't use this anymore.

That's all I can think of right now.

In fact, testing has been a big problem in getting releases out. In the past, each release has taken at least two weeks to test, and then another week to fix and bundle...if I was lucky. And for the past couple of years, I just haven't been able to justify the time expenditure. (Besides this, there are still a few serious problems with the fonts--once again, a matter of time.)

Have a look at the bugs pages, to get an idea of work being done.

http://savannah.gnu.org/bugs/?group=freefont

Steve White, GNU FreeFont
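The monospace restrictions Steve describes, plus the duplicate-name check, are mechanical enough to script once glyph metrics have been extracted from the font. The sketch below works on a plain list of hypothetical `(name, advance_width, y_min, y_max)` tuples rather than on a real font file; extracting those numbers is left out, and the function name is illustrative.

```python
from collections import Counter


def check_glyphs(glyphs, ascent, descent, monospaced=True):
    """Return problem descriptions for a list of (name, width, y_min, y_max) tuples.

    `ascent` and `descent` are the font's vertical bounds (descent as a negative y).
    """
    problems = []
    names = Counter(name for name, _, _, _ in glyphs)
    problems += ["duplicate name: %s" % n for n, count in names.items() if count > 1]

    if monospaced:
        # all glyphs of non-zero width must share one width
        widths = {width for _, width, _, _ in glyphs if width != 0}
        if len(widths) > 1:
            problems.append("non-zero widths differ: %s" % sorted(widths))
        # all glyphs must lie within the vertical bounds of the font
        for name, _, y_min, y_max in glyphs:
            if y_min < descent or y_max > ascent:
                problems.append("%s exceeds vertical bounds" % name)
    return problems
```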

I'm not sure if ImageMagick or PIL can help solve the render-and-compare problem Steve is talking about. They can definitely be used for image comparison, so coupled with some rendering library they may be worth a quick try.
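One way to make pixel comparison less brittle than the script Steve describes is to ignore small per-pixel differences and fail only when a meaningful share of pixels changed. The sketch below compares two equally sized grayscale images given as flat sequences of 0-255 values; with Pillow, `list(Image.open(path).convert("L").getdata())` yields such a sequence. Both thresholds are arbitrary assumptions that would need tuning, and this does not address differences between versions of the rendering software.

```python
def images_match(a, b, pixel_tolerance=16, max_changed_ratio=0.001):
    """Compare two grayscale images given as equal-length flat sequences of 0-255 values.

    A pixel counts as changed only if it differs by more than `pixel_tolerance`;
    the images match if at most `max_changed_ratio` of all pixels changed.
    """
    if len(a) != len(b):
        raise ValueError("images must have the same size")
    if not a:
        return True
    changed = sum(1 for x, y in zip(a, b) if abs(x - y) > pixel_tolerance)
    return changed / len(a) <= max_changed_ratio
```

A small, global shift in anti-aliasing then stays under `pixel_tolerance`, while a glyph that moves or disappears changes enough pixels to be reported.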

If you happen to know more about fonts, please join me in improving overall test coverage in Fedora by designing test suites for font packages.


Last Week in Fedora QA

Here are some highlights from the past week's discussions in Fedora which I found interesting or participated in.

Call to Action: Improving Overall Test Coverage in Fedora

I cannot stress enough how important it is to further improve test coverage in Fedora! You can help too. Here's how:

- Join upstream and create a test suite for a package you find interesting;

- Provide patches - the first patch came in less than 30 minutes after the initial announcement :);

- Review packages in the wiki and help identify false negatives;

- Forward to people who may be interested to work on these items;

- Share and promote in your local open source and developer communities;

Auto BuildRequires

Auto-BuildRequires is a simple set of scripts which complements rpmbuild by automatically suggesting BuildRequires lines for the just built package.

It would be interesting to have this integrated into Koji and/or a continuous integration environment and to compare the output between every two consecutive builds (i.e. older and newer package versions). It sounds like a good way to identify newly added or removed dependencies and update the package specs accordingly.
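Comparing the suggestions of two consecutive builds boils down to a set difference. A minimal sketch, assuming each build's suggestions are available as text containing `BuildRequires:` lines; nothing else about the Auto-BuildRequires output format is assumed, and the helper names are hypothetical.

```python
def parse_buildrequires(text):
    """Collect dependencies from 'BuildRequires:' lines (comma-separated values allowed)."""
    deps = set()
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "buildrequires":
            deps.update(d.strip() for d in value.split(",") if d.strip())
    return deps


def diff_buildrequires(old_text, new_text):
    """Return (added, removed) dependencies between an older and a newer build."""
    old, new = parse_buildrequires(old_text), parse_buildrequires(new_text)
    return sorted(new - old), sorted(old - new)
```

This only splits on commas, so whitespace-separated dependencies on one line are not handled, and a dependency that merely gains a version constraint (`zlib-devel` becoming `zlib-devel >= 1.2`) shows up as one removal plus one addition.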

How To Test Fonts Packages

This is exactly what Christopher Meng asked and frankly I have no idea.

I've come across a few font packages (amiri-fonts, gnu-free-fonts and thai-scalable-fonts) which seem to have some sort of test suite, but I don't know how they work or what types of problems they test for. On top of that, all three do things differently (e.g. none of them uses a standardized test framework or a variation of one).

I'll keep you posted on this once I manage to get more info from upstream developers.

Is URL Field in RPM Useless

So is it? Opinions here range from "totally useless" to "don't remove it, I need it". However, I ran a small test, and of the 2574 RPMs on the source DVD around 40% returned something other than HTTP 200 OK. This means 40% potentially broken URLs!

The majority are responses in the 3XX range, and less than 10% are actual errors (4XX, 5XX, missing URLs or connection errors).

It will be interesting to see if this can be removed from rpm altogether.

I don't think it will happen soon, but if we don't use it, why have it there?

My script for the test is here.
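The sketch below is not that script, only a rough illustration of the same kind of check. It issues a HEAD request without following redirects and buckets the result the way the numbers above are reported: 200 OK, 3XX, or error (4XX, 5XX, missing URL, connection failure). Using HEAD and a 10 second timeout are assumptions; some servers answer HEAD differently from GET.

```python
import http.client
from collections import Counter
from urllib.parse import urlsplit


def classify(status):
    """Bucket an HTTP status code; None stands for a missing URL or connection error."""
    if status == 200:
        return "200 OK"
    if status is None or status >= 400:
        return "error"
    if 300 <= status < 400:
        return "3XX redirect"
    return "other"


def fetch_status(url, timeout=10):
    """HEAD `url` without following redirects; return the status code or None on failure."""
    if not url:
        return None  # package has no URL at all
    parts = urlsplit(url)
    conn_class = {"http": http.client.HTTPConnection,
                  "https": http.client.HTTPSConnection}.get(parts.scheme)
    if conn_class is None or not parts.netloc:
        return None
    path = (parts.path or "/") + ("?" + parts.query if parts.query else "")
    conn = None
    try:
        conn = conn_class(parts.netloc, timeout=timeout)
        conn.request("HEAD", path)
        return conn.getresponse().status
    except (OSError, http.client.HTTPException):
        return None  # DNS failure, refused connection, timeout, TLS or protocol error
    finally:
        if conn is not None:
            conn.close()


def summarize(urls, fetch=fetch_status):
    """Count how many URLs fall into each bucket."""
    return Counter(classify(fetch(u)) for u in urls)
```

Feeding it the URL field of every source package (e.g. from `rpm -qp --qf '%{URL}\n'`) would give counts comparable to the ones above.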

