Reducing AWS Cloud Costs - Real Money Example

Last month Amazon cut EBS storage prices by 50%. This, combined with shrinking the EBS root volume size and moving /tmp to instance storage, allowed me to reduce the EBS-related costs behind Difio by more than half. Following are the real figures from my AWS bill.

EBS costs for Difio were gradually rising with every new node added to the cluster and with increased package processing (resulting in more I/O):

- November 2013 - $7.38

- December 2013 - $10.55

- January 2014 - $11.97

January 2014:

| EBS charge | Usage | Cost |
|---|---|---|
| $0.095 per GB-month of snapshot data stored | 9.052 GB-Mo | $0.86 |
| $0.10 per GB-month of provisioned storage | 101.656 GB-Mo | $10.17 |
| $0.10 per 1 million I/O requests | 9,405,243 IOs | $0.94 |
| Total | | $11.97 |

In February there was one new system added to process additional requests (cluster nodes run as spot instances) and an increased number of temporary instances (although I haven't counted them) while I was restructuring the AMI internals to accommodate the open source version of Difio. My assumption (based on historical data) is that this would have driven EBS costs alone up to around $15 per month.

After implementing the minimal improvements described above, and with Amazon's 50% price cut, the bill looks like this:

February 2014:

| EBS charge | Usage | Cost |
|---|---|---|
| $0.095 per GB-month of snapshot data stored | 8.668 GB-Mo | $0.82 |
| $0.05 per GB-month of provisioned storage | 58.012 GB-Mo | $2.90 |
| $0.05 per 1 million I/O requests | 5,704,482 IOs | $0.29 |
| Total | | $4.01 |
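As a sanity check, the line items above can be recomputed from the listed rates and usage. This is a minimal sketch: the helper names are hypothetical, and it assumes each line item is rounded to whole cents (round-half-up) before the total is summed. Decimal is used so the cent rounding is exact.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal('0.01')
MILLION = Decimal(1000000)


def line_cost(rate, quantity):
    """Multiply a unit rate by usage and round to whole cents."""
    return (Decimal(rate) * Decimal(quantity)).quantize(CENT, rounding=ROUND_HALF_UP)


def ebs_bill(snapshot_gb_mo, storage_gb_mo, io_requests,
             snapshot_rate, storage_rate, io_rate):
    """Return the three EBS line items and their total.

    io_rate is the price per 1 million I/O requests.
    """
    items = [
        line_cost(snapshot_rate, snapshot_gb_mo),
        line_cost(storage_rate, storage_gb_mo),
        line_cost(io_rate, Decimal(io_requests) / MILLION),
    ]
    return items, sum(items, Decimal('0'))


january = ebs_bill('9.052', '101.656', 9405243, '0.095', '0.10', '0.10')
february = ebs_bill('8.668', '58.012', 5704482, '0.095', '0.05', '0.05')

for month, (items, total) in (('January', january), ('February', february)):
    print(month, [str(item) for item in items], 'total', total)

# Relative drop of the February total compared to January
saving = 1 - february[1] / january[1]
print('Reduction: %.1f%%' % (saving * 100))
```

Both totals match the bill ($11.97 and $4.01), which works out to a drop of roughly two thirds.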

Explanation

Snapshot data stored is the volume of snapshots (AMIs, backups, etc.) which I keep. This is fairly constant.

Provisioned storage is the volume of EBS storage provisioned for running instances (e.g. root file system, data partitions, etc.). This was reduced mainly by shrinking the root volumes. (Previously I used larger root volumes to get a bigger /tmp.)

I/O requests is the number of I/O requests associated with your EBS volumes. As far as I understand, Amazon doesn't charge for I/O to ephemeral (instance) storage. Moving /tmp from EBS to instance storage is the reason the number of requests dropped by about 40%, from 9.4 million to 5.7 million.

Where To Next

I've reduced the root volumes back to the default 8 GB, but there is still room for improvement because the AMI is quite minimal. Shrinking them further will bring the largest savings. Another thing is the still relatively high rate of I/O requests hitting the EBS volumes. I haven't investigated where it comes from yet.


Moving /tmp from EBS to Instance Storage

I've seen a fair number of stories about moving away from Amazon's EBS volumes to ephemeral instance storage. I decided to give it a try, starting with the /tmp directory where Difio operates.

It should be noted that although instance storage may be available for some instance types, it may not be attached by default. Use this command to check:

```
$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
root
swap
```

In the above example there is no instance storage present.

You can attach one either when launching the EC2 instance or when creating a customized AMI (instance storage devices are pre-defined in the AMI). When creating an AMI you can attach more ephemeral devices than an instance type supports, but the extra ones will not be available when the instance is launched. The maximum number of instance storage devices for each instance type can be found in the docs. That is to say, if you have an AMI which defines 2 ephemeral devices and launch a standard m1.small instance, only one ephemeral device will be present.

Also note that for M3 instances, you must specify instance store volumes in the block device mapping for the instance. When you launch an M3 instance, Amazon ignores any instance store volumes specified in the block device mapping for the AMI.

As far as I can see, the AWS Console doesn't indicate whether instance storage is attached or not. For an instance with 1 ephemeral volume:

```
$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
ephemeral0
root
swap

$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/ephemeral0
sdb
```
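The same two-step lookup (list the mapping, then resolve each ephemeralN entry to a device name) can be scripted. This is a minimal Python sketch with hypothetical function names. It assumes the plain IMDSv1-style GET requests used by the curl commands above; instances that enforce IMDSv2 first need a session token, which this sketch does not handle. Also note that the kernel may expose sdb as /dev/xvdb.

```python
from urllib.request import urlopen

# Same endpoint as the curl commands above (IMDSv1-style, no session token)
MAPPING_URL = 'http://169.254.169.254/latest/meta-data/block-device-mapping/'


def fetch_metadata(path):
    """GET one block-device-mapping entry from the instance metadata service."""
    with urlopen(MAPPING_URL + path, timeout=2) as response:
        return response.read().decode('ascii')


def ephemeral_devices(fetch=fetch_metadata):
    """Map each ephemeralN entry to a device path, e.g. {'ephemeral0': '/dev/sdb'}."""
    names = [name for name in fetch('').split() if name.startswith('ephemeral')]
    return {name: '/dev/' + fetch(name).strip() for name in names}
```

On an instance, calling ephemeral_devices() with no arguments queries the metadata service. Passing a stub fetch function lets you test the parsing offline.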

Ephemeral devices may be mounted automatically under /media/ephemeralX/, but not all of them are. I've found that usually only ephemeral0 is mounted automatically:

```
$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
ephemeral0
ephemeral1
root

$ ls -l /media/
drwxr-xr-x 3 root root 4096 Nov 21 2009 ephemeral0
```

For Difio I have an init.d script which executes when the system boots. To enable /tmp on ephemeral storage I just added the following snippet:

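```shell
echo $"Mounting /tmp on ephemeral storage:"

# List the block-device-mapping entries and keep only the ephemeralN names
for ef in $(curl http://169.254.169.254/latest/meta-data/block-device-mapping/ 2>/dev/null | grep ephemeral); do
    # Resolve ephemeralN to its device name, e.g. sdb
    # (on some Xen kernels the device shows up as /dev/xvdX instead)
    disk=$(curl http://169.254.169.254/latest/meta-data/block-device-mapping/$ef 2>/dev/null)

    # The device may already be auto-mounted under /media/ephemeralX
    echo $"Unmounting /dev/$disk"
    umount /dev/$disk

    # Instance storage contents are disposable, so re-create the filesystem
    echo $"mkfs /dev/$disk"
    mkfs.ext4 -q /dev/$disk

    # Mount over /tmp and restore its sticky, world-writable permissions.
    # NB: with several ephemeral devices each one is mounted over /tmp in turn.
    echo $"Mounting /dev/$disk"
    mount -t ext4 /dev/$disk /tmp && chmod 1777 /tmp && success || failure
done
```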

NB: success and failure are from /etc/rc.d/init.d/functions.

If you are using LVM or RAID you need to reconstruct your block devices accordingly!

If everything goes right I should be able to reduce my AWS costs by saving on provisioned storage and I/O requests. I'll keep you posted on this after a month or two.


AWS Tip: Shrinking EBS Root Volume Size

Amazon's Elastic Block Store volumes are easy to use and expand but notoriously hard to shrink once their size has grown. Here is my tip for shrinking EBS size and saving some money from over-provisioned storage. I'm assuming that you want to shrink the root volume which is on EBS.

- Write down the block device name for the root volume (/dev/sda1) - from AWS console: Instances; Select instance; Look at Details tab; See Root device or Block devices;

- Write down the availability zone of your instance - from AWS console: Instances; column Availability Zone;

- Stop instance;

- Create snapshot of the root volume;

- From the snapshot, create a second volume, in the same availability zone as your instance (you will have to attach it later). This will be your pristine source;

- Create a new empty EBS volume (not based on a snapshot) with a smaller size, in the same availability zone - from AWS console: Volumes; Create Volume; Snapshot == No Snapshot; IMPORTANT - the size should be large enough to hold all the files from the source file system (try df -h on the source first);

- Attach both volumes to the instance, taking note of the block device names you assign to them in the AWS console. For example, in my case /dev/sdc1 is the source snapshot and /dev/sdd1 is the empty target;

- Start instance;

- Optionally check the source file system with e2fsck -f /dev/sdc1;

- Create a file system on the empty volume - mkfs.ext4 /dev/sdd1;

- Mount the volumes at /source and /target respectively;

- Now sync the files: rsync -aHAXxSP /source/ /target. Note the trailing slash (/) after /source. It tells rsync to copy the contents of /source rather than the directory itself. Without it you will end up with files inside /target/source/, which you don't want. (A trailing slash on /target makes no difference.);

- Quickly verify the new directory structure with ls -l /target;

- Unmount /target;

- Optionally check the new file system for consistency with e2fsck -f /dev/sdd1;

- IMPORTANT - check how /boot/grub/grub.conf specifies the root volume - by UUID, by LABEL, by device name, etc. You will have to duplicate the same for the new smaller volume or update /target/boot/grub/grub.conf to match the new volume (mount /target again if you need to edit it). Check /target/etc/fstab as well!

In my case I had to run e2label /dev/sdd1 / because both grub.conf and fstab were using the filesystem label.

- Shutdown the instance;

- Detach all volumes;

- IMPORTANT - attach the new smaller volume to the instance using the same block device name from the first step (e.g. /dev/sda1);

- Start the instance and verify it is working correctly;

- DELETE auxiliary volumes and snapshots so they don't take space and accumulate costs!
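The grub.conf/fstab check is the step that is easiest to get wrong. Here is a minimal Python sketch that reports how each file refers to the root volume. The function names are hypothetical. The sketch assumes a standard whitespace-separated fstab and a legacy GRUB config whose kernel lines carry a root= argument.

```python
def classify(spec):
    """Split a device spec into (kind, value): UUID, LABEL or a plain DEVICE path."""
    for kind in ('UUID', 'LABEL'):
        if spec.startswith(kind + '='):
            return kind, spec[len(kind) + 1:]
    return 'DEVICE', spec


def fstab_root_spec(fstab_text):
    """Return (kind, value) for the entry mounted at '/', or None if there is none."""
    for line in fstab_text.splitlines():
        fields = line.split()
        # Skip blank lines and comments
        if not fields or fields[0].startswith('#'):
            continue
        if len(fields) >= 2 and fields[1] == '/':
            return classify(fields[0])
    return None


def grub_root_specs(grub_text):
    """Collect every root= kernel argument (the 'root (hd0,0)' directive is ignored)."""
    specs = []
    for line in grub_text.splitlines():
        for token in line.split():
            if token.startswith('root='):
                specs.append(classify(token[len('root='):]))
    return specs
```

For example, fstab_root_spec(open('/target/etc/fstab').read()) returns ('LABEL', '/') for a LABEL=/ entry. That tells you to copy the label onto the new volume with e2label, as in my case.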


Idempotent Django Email Sender with Amazon SQS and Memcache

Recently I wrote about my problem with duplicate Amazon SQS messages causing multiple emails for Difio. After considering several options and feedback from @Answers4AWS I wrote a small decorator to fix this.

It uses the cache backend to prevent the task from executing twice during the specified time frame. The code is available at https://djangosnippets.org/snippets/3010/.

As stated on Twitter, you should use Memcache (or ElastiCache) for this. If you use Amazon S3 with my django-s3-cache, don't use the us-east-1 region, because it is eventually consistent.

The solution is fast and simple on the development side and uses my existing cache infrastructure so it doesn't cost anything more!

There is still a race condition between marking the message as processed and the second check, but nevertheless this should reduce the possibility of receiving duplicate emails to an acceptable level. Only time will tell, though!
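Here is a minimal sketch of a cache-based "run at most once per time frame" decorator. It is not the djangosnippets code. The decorator name and the DictCache stand-in are hypothetical. This variation uses the cache's add() method instead of a separate check and set: add() stores the key only if it is absent, and memcached performs that check-and-set atomically, which closes the window between the check and the marking. One trade-off is that the key is marked before the task runs, so if the task fails, a retry within the timeout is skipped as well.

```python
import functools
import hashlib


def run_once(cache, timeout=3600):
    """Decorator: skip a call if an identical one was started within `timeout` seconds."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            raw = '%s:%r:%r' % (func.__name__, args, sorted(kwargs.items()))
            # Hash the call signature: memcached keys must not contain spaces
            key = 'run_once:' + hashlib.sha1(raw.encode('utf-8')).hexdigest()
            # add() returns False if the key already exists
            if not cache.add(key, True, timeout):
                return None
            return func(*args, **kwargs)
        return wrapper
    return decorator


class DictCache:
    """In-process stand-in for Django's cache.add() (no expiry), for testing only."""

    def __init__(self):
        self.data = {}

    def add(self, key, value, timeout=None):
        if key in self.data:
            return False
        self.data[key] = value
        return True


cache = DictCache()
sent = []


@run_once(cache, timeout=300)
def send_reminder(user_id):
    sent.append(user_id)
    return 'sent'


first = send_reminder(1)
duplicate = send_reminder(1)   # e.g. the same SQS message delivered twice
other = send_reminder(2)
```

With Django, you would pass the real cache object (for example one backed by ElastiCache) instead of DictCache and decorate the Celery task function.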


Duplicate Amazon SQS Messages Cause Multiple Emails

Beware if you use Amazon Simple Queue Service to send email messages! Sometimes SQS messages are duplicated, which results in multiple copies of the emails being sent. This happened today at Difio and is really annoying to users. In this post I will explain why there is no easy way of fixing it.

Q: Can a deleted message be received again?

Yes, under rare circumstances you might receive a previously deleted message again. This can occur in the rare situation in which a DeleteMessage operation doesn't delete all copies of a message because one of the servers in the distributed Amazon SQS system isn't available at the time of the deletion. That message copy can then be delivered again. You should design your application so that no errors or inconsistencies occur if you receive a deleted message again.

Amazon FAQ

In my case the cron scheduler logs say:

```
>>> <AsyncResult: a9e5a73a-4d4a-4995-a91c-90295e27100a>
```

While on the worker nodes the logs say:

```
[2013-12-06 10:13:06,229: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]
[2013-12-06 10:18:09,456: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]
```

This clearly shows the same message (see the UUID) has been processed twice! This resulted in hundreds of duplicate emails :(.

Why This Is Hard To Fix

There are two basic approaches to solve this issue:

- Check some log files or database for previous record of the message having been processed;

- Use idempotent operations that if you process the message again, you get the same results, and that those results don't create duplicate files/records.

The problem with checking for duplicate messages is:

- There is a race condition between marking the message as processed and the second check;

- You need to use some sort of locking mechanism to safe-guard against the race condition;

- If the log/DB is only eventually consistent, you can't guarantee that the previous attempt will show up, and so can't guarantee that you won't process the message twice.

None of the above seems to work well for distributed applications, not to mention that Difio processes millions of messages per month per node and the logs are quite big.

The second option is to control the Message-Id or some other email header so that the second message will be discarded either at the server (Amazon SES in my case) or at the receiving MUA. I like this better, but I don't think it is technically possible in the current environment. I need to check, though.

I've asked AWS support to look into this thread and hopefully they will have some more hints. If you have any other ideas please post in the comments! Thanks!


How I Created a Website In Two Days Without Coding

This is a simple story about a website I helped create without any programming at all. It took me two days because of the images and the logo design, which I commissioned from a friend.

The website is obuvki41plus.com which is a re-seller business my spouse runs. It specializes in large size, elegant ladies shoes - Europe size 41 plus (hard to find in Bulgaria), hence the name.

Required Functionality

- Display a catalog of items for sale with detailed information about each item;

- Make it possible for people to comment and share the items;

- Very basic shopping cart which stores the selected items and then redirects to a page with order instructions. Actual order is made via phone for several reasons which I will explain in another post;

- Add a feedback/contact form;

- Look nice on mobile devices.

Technology

- The website is static: all pages are simple HTML, hosted in Amazon S3;

- Comments are provided by Facebook's Comments Box plug-in;

- Social media buttons and tracking are provided by AddThis;

- Visitor analytics are standard, from Google Analytics;

- The template is from GitHub Pages with slight modifications and works on mobile too;

- Logo is custom designed by my friend Polina Valerieva;

- Feedback/contact form is by UserVoice;

- Shopping cart is by simpleCart(js). I've created a simple animation effect when pressing the "ADD TO CART" link to visually alert the user. This is done with jQuery.

I could have used some JavaScript templating engine like Handlebars but at the time I didn't know about it and I prefer not to write JavaScript if possible :).

Colophon

Eventually I did some coding after the initial release. I've transformed the website into a Django-based site which is exported as static HTML.

This helps me with faster deployment/management because everything is stored in git. It also allows template inheritance and makes the site ready for more functionality if required.


What Runs Your Start-up - Imagga

Imagga is a cloud platform that helps businesses and individuals organize their images in a fast and cost-effective way. They develop a range of advanced proprietary image recognition and image processing technologies, which are built into several services such as smart image cropping, color extraction and multi-color search, visual similarity search and auto-tagging.

During Balkan Venture Forum in Sofia I sat down with Georgi Kadrev to talk about technology. Surprisingly this hi-tech service is built on top of standard low-tech components and lots of hard work.

Main Technologies

Core functionality is developed in C and C++ with the OpenCV library. Imagga relies heavily on its own image processing algorithms for its core features. These were built as a combination of their own research activities and publications from other researchers.

Image processing is executed by worker nodes configured with their own software stack. Nodes are distributed among Amazon EC2 and other data centers.

Client libraries to access Imagga API are available in PHP, Ruby and Java.

Imagga has built several websites to showcase their technology. Cropp.me, ColorsLike.me, StockPodium and AutoTag.me were built with PHP, JavaScript and jQuery on top of a standard LAMP stack.

Recently Imagga also started using GPU computing with nVidia Tesla cards. They use C++ and Python bindings for CUDA.

Why Not Something Else?

As an initially bootstrapping start-up we chose something that is basically free, reliable and popular - that's why we started with the LAMP stack. It proved to be stable and convenient for our web needs and we preserved it. The use of C++ is a natural choice for the computationally intensive tasks that we need to perform for the purpose of our core expertise - image processing. Though we initially wrote the whole core technology code from scratch, we later switched to OpenCV for some of the building blocks, as it is a very well optimized and continuously extended image processing library.

With the rise of affordable high-performance GPU processors and their availability in server instances, we decided it's time to take advantage of this highly parallel architecture, perfectly suited for image processing tasks.

Georgi Kadrev

Want More Info?

If you’d like to hear more from Imagga please comment below. I will ask them to follow this thread and reply to your questions.


Performance test: Amazon ElastiCache vs Amazon S3

Which Django cache backend is faster: Amazon ElastiCache or Amazon S3?

Previously I wrote about using Django's cache to keep state between HTTP requests. In the demo described there I was using django-s3-cache. It is time to move to production, so I decided to measure the performance difference between the two cache options available at Amazon Web Services.

Update 2013-07-01: my initial test may have been invalid because I had not configured ElastiCache access properly. I saw no errors, but discovered the issue today on another system which was failing to store the cache keys without showing any errors either. I've re-run the tests and the updated times are shown below.

Test infrastructure

- One Amazon S3 bucket, located in US Standard (aka US East) region;

- One Amazon ElastiCache cluster with one Small Cache Node (cache.m1.small) with Moderate I/O capacity;

- One Amazon ElastiCache cluster with one Large Cache Node (cache.m1.large) with High I/O capacity;

- Update: I've tested both the python-memcached and pylibmc client libraries for Django;

- Update: Test is executed from an EC2 node in the us-east-1a availability zone;

- Update: Cache clusters are in the us-east-1a availability zone.
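For reference, the two memcached clients are selected through Django's CACHES setting. This is a hypothetical settings.py fragment, not the one used in the test. The alias names and endpoint host names are placeholders, and the S3 entry is left out (see the django-s3-cache README for its settings). The backend class paths are the ones shipped with Django versions of that era. MemcachedCache (python-memcached) was later removed in Django 4.1.

```python
# Hypothetical settings.py fragment: aliases and host names are placeholders.
SMALL_NODE = 'small-node.example.cache.amazonaws.com:11211'  # cache.m1.small
LARGE_NODE = 'large-node.example.cache.amazonaws.com:11211'  # cache.m1.large

CACHES = {
    # pylibmc: Python wrapper around the C libmemcached library
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.PyLibMCCache',
        'LOCATION': SMALL_NODE,
    },
    # python-memcached: pure-Python client against the same node
    'pymc': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': SMALL_NODE,
    },
    # pylibmc against the larger node
    'libmc_large': {
        'BACKEND': 'django.core.cache.backends.memcached.PyLibMCCache',
        'LOCATION': LARGE_NODE,
    },
}
```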

Test Scenario

The test platform is Django. I've created a skeleton project with only the CACHES settings defined and the necessary dependencies installed. A file called test.py holds the test cases, which use the standard timeit module. The object stored in the cache is very small: it holds phone/address identifiers and a couple of user-made selections.

The code looks like this:

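```python
import timeit

# cache.get_cache() matches the Django version used here; it was removed
# in Django 1.9, where django.core.cache.caches['default'] is used instead.

# Time 1000 set() calls against the 'default' cache backend
s3_set = timeit.Timer(
"""
for i in range(1000):
    my_cache.set(i, MyObject)
"""
,
"""
from django.core import cache
my_cache = cache.get_cache('default')
MyObject = {
    'from' : '359123456789',
    'address' : '6afce9f7-acff-49c5-9fbe-14e238f73190',
    'hour' : '12:30',
    'weight' : 5,
    'type' : 1,
}
"""
)

# Time 1000 get() calls for the keys stored above
s3_get = timeit.Timer(
"""
for i in range(1000):
    MyObject = my_cache.get(i)
"""
,
"""
from django.core import cache
my_cache = cache.get_cache('default')
"""
)

# The pymc_*, libmc_* and libmc_large_* timers used in the shell
# session below are not shown here.
```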

Tests were originally executed from the Django shell on my laptop. The updated runs below were executed on an EC2 instance in the us-east-1a availability zone. ElastiCache nodes were freshly created/rebooted before test execution. The S3 bucket had no objects.

```
$ ./manage.py shell
Python 2.6.8 (unknown, Mar 14 2013, 09:31:22)
[GCC 4.6.2 20111027 (Red Hat 4.6.2-2)] on linux2
Type "help", "copyright", "credits" or "license" for more information.
(InteractiveConsole)
>>> from test import *
>>> s3_set.repeat(repeat=3, number=1)
[68.089607000350952, 70.806712865829468, 72.49261999130249]
>>> s3_get.repeat(repeat=3, number=1)
[43.778793096542358, 43.054368019104004, 36.19232702255249]
>>> pymc_set.repeat(repeat=3, number=1)
[0.40637087821960449, 0.3568730354309082, 0.35815882682800293]
>>> pymc_get.repeat(repeat=3, number=1)
[0.35759496688842773, 0.35180497169494629, 0.39198613166809082]
>>> libmc_set.repeat(repeat=3, number=1)
[0.3902890682220459, 0.30157709121704102, 0.30596804618835449]
>>> libmc_get.repeat(repeat=3, number=1)
[0.28874802589416504, 0.30520200729370117, 0.29050207138061523]
>>> libmc_large_set.repeat(repeat=3, number=1)
[1.0291709899902344, 0.31709098815917969, 0.32010698318481445]
>>> libmc_large_get.repeat(repeat=3, number=1)
[0.2957158088684082, 0.29067802429199219, 0.29692888259887695]
```

Results

As expected, ElastiCache is much faster (10x) than S3. However, the difference between the two ElastiCache node types is subtle. I will stay with the smallest possible node to minimize costs. Also, as seen above, pylibmc is a bit faster than the pure-Python python-memcached client.

Depending on your objects size or how many set/get operations you perform per second you may need to go with the larger nodes. Just test it!

It surprised me how slow django-s3-cache is. The false test showed django-s3-cache to be 100x slower, but the new results are better: a 10x decrease in performance sounds about right for a filesystem-backed cache.

A quick look at the code of the two backends shows some differences. The one I immediately see is that for every cache key django-s3-cache creates a SHA-1 hash which is used as the storage file name. This was modeled after the filesystem backend, but I think the design is wrong: the memcached backends don't do this.

Another one is that django-s3-cache time-stamps all objects and uses pickle to serialize them. I wonder if it can't just write them as binary blobs directly. There's definitely lots of room for improvement of django-s3-cache. I will let you know my findings once I get to it.
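To make the per-key overhead concrete, here is a hypothetical illustration of a filesystem-style storage scheme. It is similar in spirit to Django's file-based backend but is not django-s3-cache's actual code, and all names are made up. Each key is hashed into a fixed-length object name, and each value is stored as a pickled expiry time-stamp followed by the pickled value. In a real S3 backend, the HTTP round trip per key is likely to dominate over this CPU work.

```python
import hashlib
import io
import pickle
import time


def object_name(key):
    """Turn an arbitrary cache key into a fixed-length, filename-safe name."""
    return hashlib.sha1(key.encode('utf-8')).hexdigest()


def pack(value, timeout, now=None):
    """Pickle an absolute expiry time-stamp followed by the value itself."""
    expires = (time.time() if now is None else now) + timeout
    buf = io.BytesIO()
    pickle.dump(expires, buf, pickle.HIGHEST_PROTOCOL)
    pickle.dump(value, buf, pickle.HIGHEST_PROTOCOL)
    return buf.getvalue()


def unpack(blob, now=None):
    """Return the stored value, or None if the entry has expired."""
    buf = io.BytesIO(blob)
    expires = pickle.load(buf)
    if (time.time() if now is None else now) >= expires:
        return None
    return pickle.load(buf)
```

Writing values as raw binary blobs, as suggested above, would remove the second pickle. The expiry time-stamp would then have to live somewhere else, for example in object metadata.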


Twilio is Located in Amazon Web Services US East

Where do I store my audio files in order to minimize download and call wait time?

Twilio is a cloud vendor that provides telephony services. It can download and <Play> arbitrary audio files and will cache the files for better performance.

Twilio support told me they don't disclose the location of their servers, so I checked the access logs of my web application hosted in AWS US East:

```
[ivr-otb.rhcloud.com logs]\> grep TwilioProxy access_log-* | cut -f 1 -d '-' | sort | uniq
10.125.90.172
10.214.183.239
10.215.187.220
10.245.155.18
10.255.119.159
10.31.197.102
```

Now let's map these addresses to host names. From another EC2 system, also in Amazon US East:

```
[ec2-user@ip-10-29-206-86 ~]$ dig -x 10.125.90.172 -x 10.214.183.239 -x 10.215.187.220 -x 10.245.155.18 -x 10.255.119.159 -x 10.31.197.102

; <<>> DiG 9.8.2rc1-RedHat-9.8.2-0.17.rc1.29.amzn1 <<>> -x 10.125.90.172 -x 10.214.183.239 -x 10.215.187.220 -x 10.245.155.18 -x 10.255.119.159 -x 10.31.197.102
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 43245
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;172.90.125.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
172.90.125.10.in-addr.arpa. 113 IN PTR ip-10-125-90-172.ec2.internal.

;; Query time: 1 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 87

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 52693
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;239.183.214.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
239.183.214.10.in-addr.arpa. 42619 IN PTR domU-12-31-39-0B-B0-01.compute-1.internal.

;; Query time: 0 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 100

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 25255
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;220.187.215.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
220.187.215.10.in-addr.arpa. 43140 IN PTR domU-12-31-39-0C-B8-2E.compute-1.internal.

;; Query time: 0 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 100

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 15099
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;18.155.245.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
18.155.245.10.in-addr.arpa. 840 IN PTR ip-10-245-155-18.ec2.internal.

;; Query time: 0 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 87

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 28878
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;159.119.255.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
159.119.255.10.in-addr.arpa. 43140 IN PTR domU-12-31-39-01-70-51.compute-1.internal.

;; Query time: 0 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 100

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 28727
;; flags: qr rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:
;102.197.31.10.in-addr.arpa. IN PTR

;; ANSWER SECTION:
102.197.31.10.in-addr.arpa. 840 IN PTR ip-10-31-197-102.ec2.internal.

;; Query time: 0 msec
;; SERVER: 172.16.0.23#53(172.16.0.23)
;; WHEN: Mon Jun 24 20:48:21 2013
;; MSG SIZE rcvd: 87
```

In short:

```
ip-10-125-90-172.ec2.internal.
ip-10-245-155-18.ec2.internal.
ip-10-31-197-102.ec2.internal.
domU-12-31-39-01-70-51.compute-1.internal.
domU-12-31-39-0B-B0-01.compute-1.internal.
domU-12-31-39-0C-B8-2E.compute-1.internal.
```

The ip-*.ec2.internal hosts are clearly in US East. The domU-*.compute-1.internal hosts also look like US East, although I'm not 100% sure what the difference between the two is. The latter (domU-*) look like HVM guests, while the former (ip-*) are para-virtualized.

For comparison here are some internal addresses from my own EC2 systems:

- ip-10-228-237-207.eu-west-1.compute.internal - EU Ireland

- ip-10-248-19-46.us-west-2.compute.internal - US West Oregon

- ip-10-160-58-141.us-west-1.compute.internal - US West N. California
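The naming pattern above can be turned into a small classifier for reverse-DNS results. This is a minimal sketch with a hypothetical function name. It relies on AWS's private DNS naming convention as I know it: in us-east-1, private names end in ec2.internal (older ones in compute-1.internal), while other regions use <region>.compute.internal.

```python
def ec2_region(hostname):
    """Guess the AWS region from an EC2 private DNS name, or return None."""
    name = hostname.rstrip('.').lower()
    # us-east-1: ec2.internal, or compute-1.internal on older instances
    if name.endswith('.ec2.internal') or name.endswith('.compute-1.internal'):
        return 'us-east-1'
    # Other regions: <host>.<region>.compute.internal
    parts = name.split('.')
    if len(parts) >= 4 and parts[-2:] == ['compute', 'internal']:
        return parts[-3]
    return None


twilio_hosts = [
    'ip-10-125-90-172.ec2.internal.',
    'domU-12-31-39-0B-B0-01.compute-1.internal.',
    'ip-10-31-197-102.ec2.internal.',
]
regions = {ec2_region(host) for host in twilio_hosts}
```

All of the TwilioProxy hosts map to us-east-1 under this convention.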

After relocating my audio files to an S3 bucket in US East, the average call length dropped from 2:30 min to 2:00 min for the same IVR choices. This also reduces costs, since Twilio charges per minute of incoming/outgoing calls. I think the audio quality has improved as well.


Tip: Caching Large Objects for Celery and Amazon SQS

Some time ago a guy called Matt asked about passing large objects through their messaging queue. They were switching from RabbitMQ to Amazon SQS which has a limit of 64K total message size.

Recently I've made some changes in Difio which require passing larger objects as parameters to a Celery task. Since Difio is also using SQS I faced the same problem. Here is the solution using a cache back-end:

```python
from celery.task import task
from django.core import cache as cache_module

def some_method():
    # ... skip ...
    task_cache = cache_module.get_cache('taskq')
    # Keep the payload for one hour; only the short uuid string goes through SQS
    task_cache.set(uuid, data, 3600)
    handle_data.delay(uuid)
    # ... skip ...

@task
def handle_data(uuid):
    task_cache = cache_module.get_cache('taskq')
    data = task_cache.get(uuid)
    # The entry may have expired or been evicted before the task ran
    if data is None:
        return
    # ... do stuff ...
```

Objects are persisted in a secondary cache back-end, not the default one, to avoid accidental destruction. The uuid parameter is a string.
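The round trip can be exercised without Celery or Django. This is a minimal sketch under a few assumptions: the key is generated with uuid.uuid4() (the original elides how its uuid is produced), the function names are hypothetical, and DictCache stands in for the 'taskq' back-end. On newer Django versions that back-end would be django.core.cache.caches['taskq'] rather than get_cache('taskq').

```python
import uuid


class DictCache:
    """Stand-in for a Django cache back-end (get/set only, no expiry)."""

    def __init__(self):
        self.data = {}

    def set(self, key, value, timeout=None):
        self.data[key] = value

    def get(self, key, default=None):
        return self.data.get(key, default)


def stash(cache, data, timeout=3600):
    """Store a large payload and return the short string key to send through the queue."""
    key = str(uuid.uuid4())
    cache.set(key, data, timeout)
    return key


def unstash(cache, key):
    """Fetch the payload inside the task; None means it expired or was evicted."""
    return cache.get(key)


cache = DictCache()
payload = {'packages': list(range(20000))}   # far larger than 64K once serialized
key = stash(cache, payload)                  # in Celery: handle_data.delay(key)
```

Only the 36-character key travels through SQS, so the message stays well under the size limit regardless of how big the payload is.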

Although the objects passed are smaller than 64K I haven't seen any issues with this solution so far. Let me know if you are using something similar in your code and how it works for you.


What Runs Your Start-up - Useful at Night

Useful at Night is a mobile guide for nightlife empowering real time discovery of cool locations, allowing nightlife players to identify opinion leaders. Through geo-location and data aggregation capabilities, the application allows useful exploration of cities, places and parties.

Evelin Velev was kind enough to share what technologies his team uses to run their start-up.

Main Technologies

Main technologies used are Node.js, HTML 5 and NoSQL.

Back-end application servers are written in Node.js and hosted at Heroku, coupled with RedisToGo for caching and CouchDB served by Cloudant for storage.

Their mobile front-end supports both iOS and Android platforms and is built using HTML5 and a homemade UI framework called RAPID. There are some native parts developed in Objective-C and Java respectively.

In addition Useful at Night uses MongoDB for metrics data with a custom metrics solution written in Node.js; Amazon S3 for storing different assets; and a custom storage solution called Divan (simple CouchDB like).

Why Not Something Else?

We chose Node.js for our application servers, because it enables us to build efficient distributed systems while sharing significant amounts of code between client and server. Things get really interesting when you couple Node.js with Redis for data structure sharing and message passing, as the two technologies play very well together.

We chose CouchDB as our main back-end because it is the most schema-less data-store that supports secondary indexing. Once you get fluent with its map-reduce views, you can compute an index out of practically anything. For comparison, even MongoDB requires that you design your documents so as to enable certain indexing patterns. Put otherwise, we'd say CouchDB is a data-store that enables truly lean engineering - we have never had to re-bake or migrate our data since day one, while we're constantly experimenting with new ways to index, aggregate and query it.

We chose HTML5 as our front-end technology, because it's cross-platform and because we believe it's ... almost ready. Things are still really problematic on Android, but iOS boasts a gorgeous web presentation platform, and Windows 8 is also joining the game with a very good web engine. Obviously we're constantly running into issues and limitations, mostly related to the unfortunate fact that in spite of some recent developments, a web app is still mostly single threaded. However, we're getting there, and we're proud to say we're running a pretty graphically complex hybrid app with near-native GUI performance on the iPhone 4S and above.

Want More Info?

If you'd like to hear more from Useful at Night please comment below. I will ask them to follow this thread and reply to your questions.


Email Logging for Django on RedHat OpenShift with Amazon SES

Sending email in the cloud can be tricky. IPs of cloud providers are often blacklisted because of frequent abuse. For that reason I use Amazon SES as my email backend. Here is how to configure Django to send emails to site admins when something goes wrong:

```python
# Valid addresses only.
ADMINS = (
    ('Alexander Todorov', 'atodorov@example.com'),
)

LOGGING = {
    'version': 1,
    'disable_existing_loggers': False,
    'handlers': {
        'mail_admins': {
            'level': 'ERROR',
            'class': 'django.utils.log.AdminEmailHandler'
        }
    },
    'loggers': {
        'django.request': {
            'handlers': ['mail_admins'],
            'level': 'ERROR',
            'propagate': True,
        },
    }
}

# Used as the From: address when reporting errors to admins
# Needs to be verified in Amazon SES as a valid sender
SERVER_EMAIL = 'django@example.com'

# Amazon Simple Email Service settings
AWS_SES_ACCESS_KEY_ID = 'xxxxxxxxxxxx'
AWS_SES_SECRET_ACCESS_KEY = 'xxxxxxxx'
EMAIL_BACKEND = 'django_ses.SESBackend'
```

You also need the django-ses dependency.

See http://docs.djangoproject.com/en/dev/topics/logging for more details on how to customize your logging configuration.

I am using this configuration successfully in RedHat's OpenShift PaaS environment. Other users have reported it works for them too. It should work with other PaaS providers as well.


Performance Test: Amazon EBS vs. Instance Storage, Pt.1

I'm exploring the possibility of speeding up my cloud database, so I've run some basic tests against the storage options available to Amazon EC2 instances. The instance was an m1.large with High I/O performance and two additional disks of the same size:

- /dev/xvdb - type EBS

- /dev/xvdc - type instance storage

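Both are Xen para-virtual disks. The difference is that an EBS volume persists independently of the instance, while instance storage is ephemeral: its data survives a reboot but is lost when the instance is stopped or terminated.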

hdparm

For a quick test I used hdparm. The manual says:

```
-T  Perform timings of cache reads for benchmark and comparison purposes.
    This displays the speed of reading directly from the Linux buffer cache
    without disk access. This measurement is essentially an indication of
    the throughput of the processor, cache, and memory of the system under test.

-t  Perform timings of device reads for benchmark and comparison purposes.
    This displays the speed of reading through the buffer cache to the disk
    without any prior caching of data. This measurement is an indication of how
    fast the drive can sustain sequential data reads under Linux, without any
    filesystem overhead.
```

The results of 3 runs of hdparm are shown below:

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11984 MB in 1.98 seconds = 6038.36 MB/sec

Timing buffered disk reads: 158 MB in 3.01 seconds = 52.52 MB/sec

/dev/xvdc:

Timing cached reads: 11988 MB in 1.98 seconds = 6040.01 MB/sec

Timing buffered disk reads: 1810 MB in 3.00 seconds = 603.12 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11892 MB in 1.98 seconds = 5991.51 MB/sec

Timing buffered disk reads: 172 MB in 3.00 seconds = 57.33 MB/sec

/dev/xvdc:

Timing cached reads: 12056 MB in 1.98 seconds = 6075.29 MB/sec

Timing buffered disk reads: 1972 MB in 3.00 seconds = 657.11 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11994 MB in 1.98 seconds = 6042.39 MB/sec

Timing buffered disk reads: 254 MB in 3.02 seconds = 84.14 MB/sec

/dev/xvdc:

Timing cached reads: 11890 MB in 1.99 seconds = 5989.70 MB/sec

Timing buffered disk reads: 1962 MB in 3.00 seconds = 653.65 MB/sec

Result: on average, sequential reads from instance storage are about 10x faster than from EBS.
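The 10x figure can be checked against the "Timing buffered disk reads" numbers of the three runs. A small sketch that averages them (the numbers are copied from the output above):

```python
from statistics import mean

# "Timing buffered disk reads" results (MB/sec) from the three hdparm runs above
ebs = [52.52, 57.33, 84.14]          # /dev/xvdb, EBS volume
instance = [603.12, 657.11, 653.65]  # /dev/xvdc, instance storage

ebs_avg = mean(ebs)
instance_avg = mean(instance)
ratio = instance_avg / ebs_avg

print(f"EBS: {ebs_avg:.2f} MB/sec")                    # EBS: 64.66 MB/sec
print(f"Instance storage: {instance_avg:.2f} MB/sec")  # Instance storage: 637.96 MB/sec
print(f"Speed-up: {ratio:.1f}x")                       # Speed-up: 9.9x
```

The cached reads (about 6000 MB/sec on both devices) are left out on purpose: they measure memory, not the disk.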

IOzone

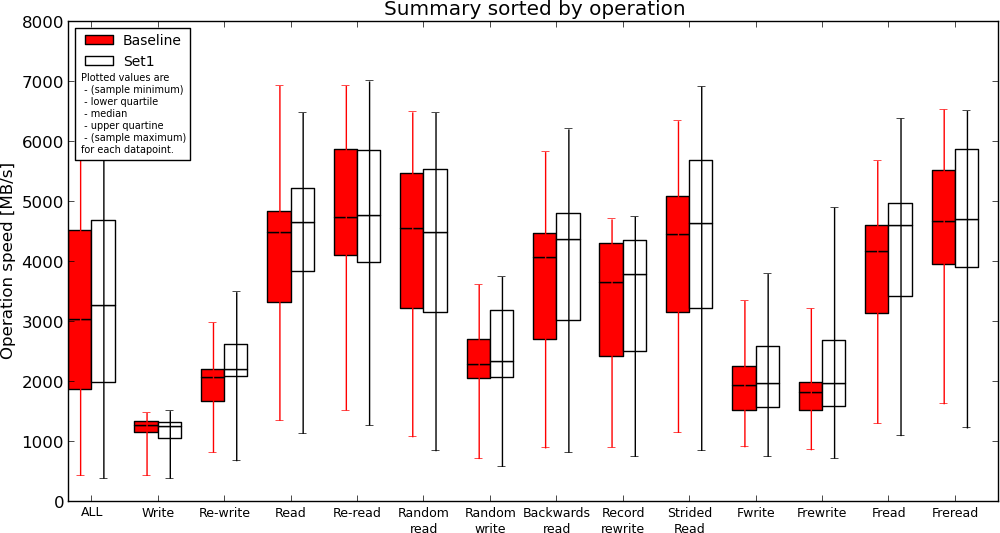

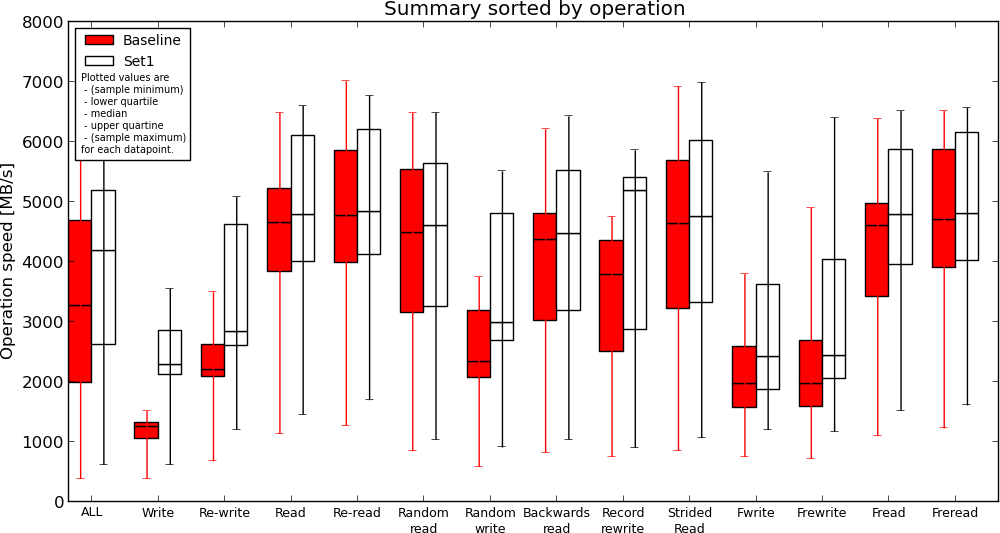

I'm running MySQL, and sequential reads are probably an overly idealistic scenario, so I found another benchmark suite called IOzone. I used version 3-414, built from the official SRPM.

IOzone performs multiple tests. I'm interested in read/re-read, random-read/write, read-backwards and stride-read.
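The post doesn't give the exact IOzone command line. Assuming IOzone's -i test numbering (0 = write/rewrite, 1 = read/re-read, 2 = random-read/write, 3 = read-backwards, 5 = stride-read), a sketch that builds the argument list for just these tests in automatic mode (-a) might look like this. The write test is always included because the read tests need the file it creates; the mount point in the example is hypothetical.

```python
import shlex

# IOzone "-i" test numbers (assumed from IOzone's usage text).
IOZONE_TESTS = {
    "write/rewrite": 0,      # creates the file the read tests use
    "read/re-read": 1,
    "random-read/write": 2,
    "read-backwards": 3,
    "stride-read": 5,
}


def iozone_argv(test_file, tests=tuple(IOZONE_TESTS.values())):
    """Build an iozone argument list in automatic mode for the given tests.

    test_file must live on the filesystem under test (EBS, instance storage
    or ramfs). Test 0 is always added since the read tests depend on it.
    """
    argv = ["iozone", "-a"]
    for number in sorted({0, *tests}):
        argv += ["-i", str(number)]
    argv += ["-f", test_file]
    return argv


argv = iozone_argv("/mnt/ephemeral/iozone.tmp")  # hypothetical mount point
print(shlex.join(argv))
# iozone -a -i 0 -i 1 -i 2 -i 3 -i 5 -f /mnt/ephemeral/iozone.tmp
```

The resulting list can be passed to subprocess.run(argv, check=True) once per mount point.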

For this round I tested an ext4 filesystem with and without a journal on both types of disks. I also experimented with running IOzone inside a ramfs-mounted directory. However, I didn't have time to run the test suite multiple times.

Then I used iozone-results-comparator to visualize the results. (I had to make a minor fix to the code so it would run inside a virtualenv, and install all missing dependencies.)

Raw IOzone output, data visualization and the modified tools are available in the aws_disk_benchmark_w_iozone.tar.bz2 file (size 51M).

Graphics

EBS without journal (Baseline) vs. Instance Storage without journal (Set1)

Instance Storage without journal (Baseline) vs. Ramfs (Set1)

Results

- The ext4 journal has no effect on reads but slows down writes to disk. This is expected;

- Instance storage is faster than EBS, but not by much. If I understand the results correctly, read performance is similar in some cases;

- Ramfs is definitely the fastest, but its read performance is not double (or more) that of instance storage, which is what I had expected;

Conclusion

Instance storage appears to be faster (as expected), but I'm still not sure whether my application would gain any speed, or how much, if it read from instance storage (or ramfs) instead of EBS. Next time I will perform more real-world tests by comparing the execution time of some of my largest SQL queries.
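One way to run such a comparison is to time the same query repeatedly on each storage setup. The helper below is a sketch, not the method used later: it assumes a DB-API 2.0 connection (for MySQL that would be MySQLdb or PyMySQL, which use %s placeholders). The usage example swaps in an in-memory sqlite3 database with a hypothetical advisory table so it runs anywhere. All timings are returned because repeated runs may be answered from the database's buffer pool rather than the disk, so the first run matters when comparing storage.

```python
import sqlite3
import statistics
import time


def time_query(conn, sql, params=(), repeat=5):
    """Run `sql` `repeat` times and return the wall-clock timings in seconds.

    Works with any DB-API 2.0 connection.
    """
    timings = []
    for _ in range(repeat):
        cursor = conn.cursor()
        start = time.perf_counter()
        cursor.execute(sql, params)
        cursor.fetchall()  # read the whole result set, not just the first row
        timings.append(time.perf_counter() - start)
        cursor.close()
    return timings


# Stand-in database; on the real system use a MySQL connection instead.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE advisory (id INTEGER PRIMARY KEY, body TEXT)")
conn.executemany("INSERT INTO advisory (body) VALUES (?)", [("x" * 100,)] * 1000)

timings = time_query(conn, "SELECT COUNT(*) FROM advisory")
print(f"first run: {timings[0]:.6f}s, median: {statistics.median(timings):.6f}s")
```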

If you have other ideas on how to adequately measure I/O performance in the AWS cloud, please use the comments below.


How Large Are My MySQL Tables

Image CC-BY-SA, Michael Mandiberg


I found two good blog posts about investigating MySQL internals: Researching your MySQL table sizes and Finding out largest tables on MySQL Server. Running their queries against the database behind my site Difio showed:

mysql> SELECT CONCAT(table_schema, '.', table_name),
    -> CONCAT(ROUND(table_rows / 1000000, 2), 'M') rows,
    -> CONCAT(ROUND(data_length / ( 1024 * 1024 * 1024 ), 2), 'G') DATA,
    -> CONCAT(ROUND(index_length / ( 1024 * 1024 * 1024 ), 2), 'G') idx,
    -> CONCAT(ROUND(( data_length + index_length ) / ( 1024 * 1024 * 1024 ), 2), 'G') total_size,
    -> ROUND(index_length / data_length, 2) idxfrac
    -> FROM information_schema.TABLES
    -> ORDER BY data_length + index_length DESC;

+----------------------------------------+-------+-------+-------+------------+---------+
| CONCAT(table_schema, '.', table_name)  | rows  | DATA  | idx   | total_size | idxfrac |
+----------------------------------------+-------+-------+-------+------------+---------+
| difio.difio_advisory                   | 0.04M | 3.17G | 0.00G | 3.17G      |    0.00 |
+----------------------------------------+-------+-------+-------+------------+---------+

The table of interest is difio_advisory, which had 5 longtext fields. Those fields were not used for filtering or indexing the rest of the information. They were just storage fields, a "nice" side effect of using Django's ORM.

I migrated the data to Amazon S3, storing it there in JSON format. After dropping these fields the table was considerably smaller:

+----------------------------------------+-------+-------+-------+------------+---------+
| CONCAT(table_schema, '.', table_name)  | rows  | DATA  | idx   | total_size | idxfrac |
+----------------------------------------+-------+-------+-------+------------+---------+
| difio.difio_advisory                   | 0.01M | 0.00G | 0.00G | 0.00G      |    0.90 |
+----------------------------------------+-------+-------+-------+------------+---------+

For those interested, I'm using django-storages on the back-end to save the data to S3 when it is generated. On the front-end I'm using dojo.xhrGet to load the information directly into the browser.
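The post doesn't show the one-off migration itself. A sketch of it under stated assumptions: each row arrives as a dict, the five longtext column names and the S3 key layout are hypothetical, and instead of django-storages the upload goes through a `put` callable that accepts the keyword arguments of boto3's S3.Client.put_object (Bucket, Key, Body, ContentType). The usage example passes a fake `put` so nothing touches S3.

```python
import json

# Hypothetical column names: the post only says there were five longtext fields.
LONGTEXT_FIELDS = ("field_1", "field_2", "field_3", "field_4", "field_5")


def advisory_document(row, fields=LONGTEXT_FIELDS):
    """Collect the storage-only longtext columns of one row into a JSON string."""
    return json.dumps({name: row[name] for name in fields}, sort_keys=True)


def migrate_to_s3(rows, put, bucket, fields=LONGTEXT_FIELDS):
    """Upload one JSON object per row and return the keys written.

    `put` is expected to behave like boto3.client("s3").put_object.
    The "advisory/<id>.json" key layout is hypothetical.
    """
    keys = []
    for row in rows:
        key = f"advisory/{row['id']}.json"
        put(Bucket=bucket, Key=key,
            Body=advisory_document(row, fields).encode("utf-8"),
            ContentType="application/json")
        keys.append(key)
    return keys


# Usage with a fake uploader that just records what would be sent.
uploaded = {}


def fake_put(Bucket, Key, Body, ContentType):
    uploaded[(Bucket, Key)] = Body


rows = [{"id": 11765, **{name: f"text of {name}" for name in LONGTEXT_FIELDS}}]
keys = migrate_to_s3(rows, fake_put, "example-bucket")
print(keys)  # ['advisory/11765.json']
```

Only after the objects are safely in S3 would the columns be dropped from the table.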

I'd love to hear your feedback in the comments section below. Let me know what you found for your own databases. Were there any issues? How did you deal with them?


Tip: Save Money on Amazon - Buy Used Books

I like to buy books, the real ones, printed on paper. This, however, comes at a price when buying from Amazon. The book price itself is usually bearable, but shipping to Bulgaria often doubles it, especially for a single-book order.

To save money I started buying used books when available. For books that are not so popular I look for items that have been owned by a library.

This is how I got a hardcover 1984 edition of The Gentlemen's Clubs of London by Anthony Lejeune for $10. This is my best deal so far.

I dare say the book was practically brand new. There was no edge wear and no damaged pages, and the colors were nice and vibrant. The second page had the library's sign and no other marks.

Have you bought used books online? Did you score a great deal like I did? Let me know.


Click Tracking without MailChimp

Here is a standard notification message that Difio users receive. It is plain text (no HTML crap), short, and its URLs are clean and descriptive. As the project's lead developer I wanted to track when people click these links and visit the website, while keeping the existing functionality.

Standard approach

A pretty common approach when sending huge volumes of email is to use an external service such as MailChimp. It is one of many email marketing services and comes with a lot of features. The most important to me were analytics and reports.

The downside is that MailChimp (and, I guess, others) uses HTML-formatted emails extensively. I don't like that, and I'm sure my users won't like it either; they are all developers. Not to mention that MailChimp is much more expensive than Amazon SES, which I currently use. No MailChimp for me!

Another common approach, used by Feedburner by the way, is to use shortened URLs that redirect to the original ones and count clicks in between. I didn't like this either, for two reasons: 1) shortened URLs look ugly and are not at all descriptive, and 2) I would need to generate them automatically and maintain all the mappings. Why bother?

How I did it

So I needed something that would redirect to a predefined URL, count how many redirects there were (essentially clicks on the link) and look nice. The solution is very simple, if you haven't already recognized it from the picture above.

I opted for a custom redirect engine that adds tracking information to the destination URL so I can track it in Google Analytics.

Previous URLs were of the form http://www.dif.io/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/.

I've added the humble /daily/? prefix before the URL path so it becomes

http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/

Now /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/ becomes the query string, which the /daily/index.html page uses as its destination. Before doing the redirect, a script adds tracking parameters so that Google Analytics properly reports the visit. Here is the code:

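<html>
<head>
<script type="text/javascript">
// Everything after the first "?" is the destination path,
// e.g. /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/
var uri = window.location.toString();
var question = uri.indexOf("?");
if (question > 0) {
    var param = uri.substring(question + 1);
    // Append the Google Analytics campaign parameters and redirect.
    // replace() keeps this redirect page out of the browser history,
    // so the Back button doesn't bounce the visitor forward again.
    window.location.replace(param + '?utm_source=email&utm_medium=email&utm_campaign=Daily_Notification');
}
</script>
</head>
<body></body>
</html>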
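On the sending side, the links only need the /daily/? prefix. A Python sketch of both halves, with hypothetical helper names (how Difio actually generates its emails isn't shown here): daily_link() builds the URL that goes into the email, and redirect_target() mirrors what the JavaScript above computes.

```python
from urllib.parse import urlencode

# Campaign parameters appended by the /daily/index.html redirect page
TRACKING = {
    "utm_source": "email",
    "utm_medium": "email",
    "utm_campaign": "Daily_Notification",
}


def daily_link(site, path):
    """Wrap a page path in the /daily/? redirector used in notification emails."""
    return f"{site}/daily/?{path}"


def redirect_target(link):
    """Mirror the JavaScript: everything after the first '?' plus the UTM query."""
    question = link.find("?")
    if question <= 0:
        return None  # no destination: the page doesn't redirect
    destination = link[question + 1:]
    return destination + "?" + urlencode(TRACKING)


link = daily_link("http://www.dif.io", "/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/")
print(link)
# http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/
print(redirect_target(link))
# /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/?utm_source=email&utm_medium=email&utm_campaign=Daily_Notification
```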

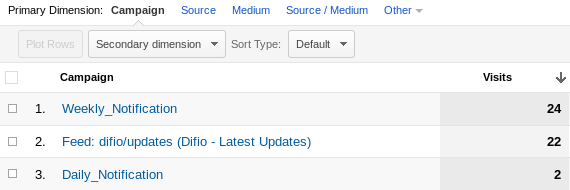

Previously Google Analytics was reporting these visits as direct hits while now it lists them under campaigns like so:

Because all Difio visitors use JavaScript-enabled browsers, I combined this approach with another one: removing the query string with JavaScript to present clean URLs to the visitor.

Why JavaScript?

You may be asking why the hell I am using JavaScript and not Apache's wonderful mod_rewrite module. This is because the destination URLs are hosted in Amazon S3 and I'm planning to integrate with Amazon CloudFront. Neither of them supports .htaccess rules or anything else similar to mod_rewrite.

As always I'd love to hear your thoughts and feedback. Please use the comment form below.


Cross-domain AJAX Served From CDN

This week Amazon announced support for dynamic content in their CDN solution, Amazon CloudFront. The announcement coincided with my efforts to migrate more pieces of Difio's website to CloudFront.

In this article I will not talk about hosting static files on a CDN. This is easy and I've already written about it here. I will show how to cache AJAX (JSONP, actually) responses and serve them directly from Amazon CloudFront.

Background

For those of you who may not be familiar (are there any?), CDN stands for Content Delivery Network. In short, it employs numerous servers with identical content. Requests from the browser are served from the location that gives the best performance for the user. All major websites use CDNs to speed up static content like images, video, CSS and JavaScript files.

AJAX stands for Asynchronous JavaScript and XML. This is what Google uses to create dynamic user interfaces that don't require reloading the page.

Architecture

Difio has two web interfaces. The primary one is a static HTML website which employs JavaScript for the dynamic areas. It is hosted on the dif.io domain. The other one is powered by Django and provides the same interface plus the application dashboard and several API functions which don't have a visible user interface. It is under the *.rhcloud.com domain because it is hosted on OpenShift.

The present state of the website is the result of rapid development using conventional methods: HTML templates and server-side processing. It is being migrated to modern web technology like static HTML and JavaScript, while the server side will remain a pure API service.

For this migration to happen I need the HTML pages at dif.io to execute JavaScript and load information which comes from the rhcloud.com domain. Unfortunately this is not easily doable with AJAX because of the Same origin policy in browsers.

I'm using the Dojo Toolkit JavaScript framework, which supports a solution called JSONP. Here's how it works:

dif.io ------ JSONP request --> abc.rhcloud.com --v
  ^                                               |
  |                                               |
JavaScript processing                             |
  |                                               |
  +---------------- JSONP response ---------------+

This is a pretty standard configuration for a web service.
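On the abc.rhcloud.com side, a JSONP response is simply the JSON payload wrapped in a call to the function named by the callback parameter. A minimal sketch, assuming the server receives the callback name as a string (in Django it would come from request.GET['callback']). The identifier check is an added precaution against script injection through the query string, not something described in this post.

```python
import json
import re

# Plain or dotted JavaScript identifiers only, e.g. "cb" or "obj.handler"
CALLBACK_RE = re.compile(r"[A-Za-z_$][\w$]*(?:\.[A-Za-z_$][\w$]*)*")


def jsonp_body(data, callback):
    """Return a JSONP response body: the JSON payload wrapped in callback(...)."""
    if not CALLBACK_RE.fullmatch(callback):
        raise ValueError(f"invalid JSONP callback: {callback!r}")
    return f"{callback}({json.dumps(data)});"


print(jsonp_body({"id": 1234}, "cb"))  # cb({"id": 1234});
```

In a Django view the body would be returned as HttpResponse(body, content_type="application/javascript").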

Going to the clouds

Dojo implements JSONP through the dojo.io.script module. It appends a query string parameter of the form ?callback=funcName, which the server uses to generate the JSONP response. The callback name is dynamically generated by Dojo based on the order in which your calls to dojo.io.script are executed.

Until recently Amazon CloudFront ignored all query string parameters when requesting the content from the origin server. Without the query string it was not possible to generate the JSONP response. Luckily Amazon resolved the issue only one day after I asked about it on their forums.

Now Amazon CloudFront uses the URL path and the query string parameters to identify the objects in its cache. To enable this, edit the CloudFront distribution behavior(s) and set Forward Query Strings to Yes.

When a website visitor requests the data, Amazon CloudFront uses exactly the same URL path and query string to fetch the content from the origin server. All I had to do was switch the domain of the JSONP service to the cloudfront.net domain. It became like this:

                                | Everything on this side is handled by Amazon.
                                | No code required!
                                |
dif.io ------ JSONP request --> xyz.cloudfront.net -- JSONP request if cache miss --> abc.rhcloud.com --v
  ^                               |         ^                                                           |
  |                               |         |                                                           |
JavaScript processing             |         +---------- JSONP response ---------------------------------+
  |                               |
  +---- cached JSONP response ----+

As you can see, the website structure and code didn't change at all. All that changed was a single domain name.
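To make the cache-key behavior concrete, here is a toy model of an edge cache keyed on URL path plus query string. It is only an illustration of the idea, not how CloudFront is implemented; `fetch_from_origin` is a hypothetical stand-in for the request to abc.rhcloud.com. It shows that repeated requests for the same URL reach the origin once, that a new query string value (such as a new cache-busting t) is a new object, and that without query string forwarding the origin never sees the callback name it needs.

```python
from urllib.parse import urlsplit


class ToyEdgeCache:
    """Toy CDN edge cache keyed on (path, query string)."""

    def __init__(self, fetch_from_origin, forward_query_strings=True):
        self.fetch_from_origin = fetch_from_origin
        self.forward_query_strings = forward_query_strings
        self.store = {}
        self.origin_requests = 0

    def get(self, url):
        parts = urlsplit(url)
        query = parts.query if self.forward_query_strings else ""
        key = (parts.path, query)
        if key not in self.store:  # cache miss: ask the origin once
            self.origin_requests += 1
            self.store[key] = self.fetch_from_origin(parts.path, query)
        return self.store[key]


def origin(path, query):
    return f"origin saw {path}?{query}"


url = "https://xyz.cloudfront.net/api/json/updates/1234?callback=cb&t=1"
cache = ToyEdgeCache(origin)
cache.get(url)
cache.get(url)  # served from the cache
cache.get("https://xyz.cloudfront.net/api/json/updates/1234?callback=cb&t=2")
print(cache.origin_requests)  # 2

old = ToyEdgeCache(origin, forward_query_strings=False)
print(old.get(url))  # origin saw /api/json/updates/1234?
```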

Controlling the cache

Amazon CloudFront keeps content in its cache based on the origin headers, if present, or on the manual configuration in the AWS Console. To avoid frequent requests to the origin server, it is considered best practice to set the Expires header to a value far in the future, like 1 year. However, if the content changes you need some way to tell CloudFront about it. The most common method is to use different URLs to access the same content. This causes CloudFront to cache the content under the new location while keeping the old content until it expires.

Dojo makes this very easy:

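require(["dojo/io/script"],
    function(script) {
        script.get({
            url: "https://xyz.cloudfront.net/api/json/updates/1234",
            callbackParamName: "callback",
            // extra query string parameter, used only to control the CloudFront cache
            content: {t: timeStamp},
            load: function(jsonData) {
                // ... process jsonData ...
            }
        });
    });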

The content property allows additional key/value pairs to be sent in the query string. The timeStamp parameter serves only to control the Amazon CloudFront cache; it is not processed server side.

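On the server side we have:

from datetime import datetime, timedelta, timezone

# Expires must be expressed in GMT, so start from UTC rather than local time
response['Cache-Control'] = 'max-age=31536000'
response['Expires'] = (datetime.now(timezone.utc) + timedelta(seconds=31536000)).strftime('%a, %d %b %Y %H:%M:%S GMT')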
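A standalone sketch of the same two headers, using the standard library's email.utils.formatdate() with usegmt=True so that Expires is always rendered in GMT with English day and month names, regardless of the server's time zone or locale. The function name is hypothetical:

```python
import time
from email.utils import formatdate

ONE_YEAR = 365 * 24 * 60 * 60  # 31536000 seconds, as in the snippet above


def far_future_headers(now=None, max_age=ONE_YEAR):
    """Return Cache-Control and Expires headers for content that rarely changes."""
    if now is None:
        now = time.time()
    return {
        "Cache-Control": f"max-age={max_age}",
        "Expires": formatdate(now + max_age, usegmt=True),
    }


print(far_future_headers(now=0))
# {'Cache-Control': 'max-age=31536000', 'Expires': 'Fri, 01 Jan 1971 00:00:00 GMT'}
```

In a Django view the values can be copied onto the response with `for name, value in far_future_headers().items(): response[name] = value`.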

Benefits

There were two immediate benefits:

- Reduced page load time. Combined with serving static files from CDN this greatly improves the user experience;

- Reduced server load. Content is requested only once if it is missing from the cache and then served from CloudFront. The server isn't so busy serving content so it can be used to do more computations or simply reduce the bill.

The presented method works well for Difio because of two things:

- The content Difio serves usually doesn't change at all once made public. On rare occasions, for example when an error has been published, we have to regenerate the content and publish it under the same URL.

- Before content is made public it is inspected for errors, and this also pre-seeds the cache.


Using OpenShift as Amazon CloudFront Origin Server

It's been several months since the start of Difio, and I've started migrating various parts of the platform to a CDN. The first to go are static files like CSS, JavaScript, images and such. In this article I will show you how to get started with Amazon CloudFront and OpenShift. It is very easy once you understand how it works.

Why CloudFront and OpenShift

Amazon CloudFront is cheap and easy to set up, with virtually no maintenance. The most important feature is that it can fetch content from any public website. Integrating it with OpenShift gives some nice benefits:

- All static assets are managed with Git and stored in the same place where the application code and HTML is - easy to develop and deploy;

- No need for external service to host the static files;

- CloudFront will be serving the files so network load on OpenShift is minimal;

- Easy to manage versioned URLs because HTML and static assets are in the same repo - more on this later;

Object expiration

CloudFront will cache your objects for a certain period and then expire them. Frequently used objects are expired less often. Depending on the content you may want to update the cache more or less frequently. In my case CSS and JavaScript files change rarely, so I wanted to tell CloudFront not to expire the files quickly. I did this by telling Apache to send a custom value for the Expires header.

$ curl http://d71ktrt2emu2j.cloudfront.net/static/v1/css/style.css -D headers.txt

$ cat headers.txt

HTTP/1.0 200 OK

Date: Mon, 16 Apr 2012 19:02:16 GMT

Server: Apache/2.2.15 (Red Hat)

Last-Modified: Mon, 16 Apr 2012 19:00:33 GMT

ETag: "120577-1b2d-4bdd06fc6f640"

Accept-Ranges: bytes

Content-Length: 6957

Cache-Control: max-age=31536000

Expires: Tue, 16 Apr 2013 19:02:16 GMT

Content-Type: text/css

Strict-Transport-Security: max-age=15768000, includeSubDomains

Age: 73090

X-Cache: Hit from cloudfront

X-Amz-Cf-Id: X558vcEOsQkVQn5V9fbrWNTdo543v8VStxdb7LXIcUWAIbLKuIvp-w==,e8Dipk5FSNej3e0Y7c5ro-9mmn7OK8kWfbaRGwi1ww8ihwVzSab24A==

Via: 1.0 d6343f267c91f2f0e78ef0a7d0b7921d.cloudfront.net (CloudFront)

Connection: close

All headers before Strict-Transport-Security come from the origin server.
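The Cache-Control and Age headers in this output also show how long the cached copy still has to live. A simplified sketch of that arithmetic (max-age minus Age), assuming CloudFront honors the origin's max-age; the TTL settings of the distribution itself can override it:

```python
import re

# Subset of the response headers shown above
headers = {
    "Cache-Control": "max-age=31536000",
    "Age": "73090",
}


def remaining_freshness(headers):
    """Seconds left before the cached copy goes stale: max-age minus Age."""
    match = re.search(r"max-age=(\d+)", headers.get("Cache-Control", ""))
    if match is None:
        return None
    return int(match.group(1)) - int(headers.get("Age", "0"))


left = remaining_freshness(headers)
print(left, round(left / 86400, 1))  # 31462910 364.2
```

So the object had been in the cache for about 20 hours and had roughly 364 days left.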

Versioning

Sometimes however you need to update the files and force CloudFront to update the content. The recommended way to do this is to use URL versioning and update the path to the files which changed. This will force CloudFront to cache and serve the content under the new path while keeping the old content available until it expires. This way your visitors will not be viewing your site with the new CSS and old JavaScript.

There are many ways to do this and there are some nice frameworks as well. For Python there is webassets. I don't have many static files so I opted for no additional dependencies. Instead I will be updating the versions by hand.

What comes to mind is using mod_rewrite to redirect the versioned URLs back to non-versioned ones. However, there's a catch. If you do this, CloudFront will cache the redirect itself, not the content. The next time visitors hit CloudFront they will receive the cached redirect and follow it back to your origin server, which defeats the purpose of having a CDN.

To do it properly you have to rewrite the URLs but still return a 200 response code and the content which needs to be cached. This is done with mod_proxy:

RewriteEngine on

RewriteRule ^VERSION-(\d+)/(.*)$ http://%{ENV:OPENSHIFT_INTERNAL_IP}:%{ENV:OPENSHIFT_INTERNAL_PORT}/static/$2 [P,L]

This .htaccess trick doesn't work on OpenShift though. mod_proxy is not enabled at the moment. See bug 812389 for more info.

Luckily I was able to use symlinks to point to the content. Here's how it looks:

$ pwd

/home/atodorov/difio/wsgi/static

$ cat .htaccess

ExpiresActive On

ExpiresDefault "access plus 1 year"

$ ls -l

drwxrwxr-x. 6 atodorov atodorov 4096 16 Apr 21,31 o

lrwxrwxrwx. 1 atodorov atodorov 1 16 Apr 21,47 v1 -> o

settings.py:

STATIC_URL = '//d71ktrt2emu2j.cloudfront.net/static/v1/'

HTML template:

<link type="text/css" rel="stylesheet" media="screen" href="{{ STATIC_URL }}css/style.css" />
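Bumping the version then boils down to adding another symlink and changing STATIC_URL. A sketch of that step, assuming the layout above (real files in o/, versioned links next to it); the helper name is hypothetical and the CloudFront domain is the one from settings.py. Older vN links are left in place so pages that still reference them keep resolving.

```python
import os
import tempfile

CDN_STATIC = "//d71ktrt2emu2j.cloudfront.net/static/"


def bump_static_version(static_dir, version, target="o"):
    """Create static_dir/v<version> as a relative symlink to `target`.

    Returns the value to use for STATIC_URL in settings.py.
    """
    link = os.path.join(static_dir, f"v{version}")
    if not os.path.islink(link):
        os.symlink(target, link)  # relative target, like "v1 -> o" above
    return f"{CDN_STATIC}v{version}/"


with tempfile.TemporaryDirectory() as static_dir:
    os.mkdir(os.path.join(static_dir, "o"))
    url = bump_static_version(static_dir, 2)
    link_target = os.readlink(os.path.join(static_dir, "v2"))

print(url)          # //d71ktrt2emu2j.cloudfront.net/static/v2/
print(link_target)  # o
```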

How to implement it

First you need to split all CSS and JavaScript from your HTML if you haven't done so already.

Then place everything under your git repo so that OpenShift will serve the files. For Python applications place the files under wsgi/static/ directory in your git repo.

Point all of your HTML templates to the static location on OpenShift and test if everything works as expected. This is best done if you're using some sort of template language and store the location in a single variable which you can change later. Difio uses Django and the STATIC_URL variable of course.

Create your CloudFront distribution: don't use Amazon S3; instead, configure a custom origin server. Write down your CloudFront URL. It will be something like 1234xyz.cloudfront.net.

Every time a request hits CloudFront it will check if the object is present in the cache. If not present CloudFront will fetch the object from the origin server and populate the cache. Then the object is sent to the user.

Update your templates to point to the new cloudfront.net URL and redeploy your website!
