Virtualization Platforms Supported by Red Hat Enterprise Linux

This is mostly for my own reference: a handy list of the virtualization platforms on which Red Hat Enterprise Linux is supported.

Software virtualization solutions

A guest RHEL operating system is supported if it runs on the following platforms:

- Xen shipped with RHEL Server

- KVM shipped with RHEL Server or RHEV for Servers

- VMware ESX/vSphere

- Microsoft Hyper-V

Red Hat does not support Citrix XenServer. However, customers can buy RHEL Server and use it with Citrix XenServer with the understanding that Red Hat will only support technical issues that can be reproduced on bare metal.

The official virtualization support matrix shows which host/guest operating system combinations are supported.

Hardware partitioning

Red Hat supports RHEL on hardware partitioning and virtualization solutions such as:

Unfortunately, the recently updated hardware catalog doesn't allow filtering by hardware partitioning vs. virtualization platform, so you need to know what you are looking for to find it :(.

Red Hat Enterprise Linux as a guest on the Cloud

Multiple public cloud providers are supported. A comprehensive list can be found here: http://www.redhat.com/solutions/cloud-computing/public-cloud/find-partner/

You can also try the advanced search in Red Hat Partner Locator. However, at the time of this writing there are no partners listed in the Cloud / Virtualization category.

Warning: It is known that Amazon uses Xen with custom modifications (not sure what version) and HP Cloud uses KVM but there is not much public record about hypervisor technology used by most cloud providers. Red Hat has partner agreements with these vendors and will commercially support only their platforms. This means that if you decide to use upstream Xen or anything else not listed above, you are on your own. You have been warned!

Unsupported but works

I'm not a big fan of running on top of unsupported environments and I don't have the need to do so. I've heard about people running CentOS (RHEL compatible) on VirtualBox but I have no idea how well it works.

If you are using a different virtualization platform (like LXC, OpenVZ, UML, Parallels or other), let me know if CentOS/Fedora works on it.

Alternatively I can give it a try if you can provide me with ssh/VNC access to the machine.


Bug in Nokia software shows wrong caller ID

During the past month one of my cell phones, Nokia 5800 XpressMusic, was not showing the caller name when a friend was calling. The number in the contacts list was correct but the name wasn't showing, nor the custom assigned ringing tone. It turned out to be a bug!

The story behind this is that accidentally the same number was saved again in the contacts list, but without a name assigned to it. The software was matching the later one, so no custom ringing tone, no name shown. Removing the duplicate entry fixed the issue. Software version of this phone is v 21.0.025, RM-356, 02-04-09.

I wondered what would happen with multiple duplicates and whether this was fixed in a later software version, so I tested with another phone, Nokia 6303. Software version is V 07.10, 25-03-10, RM-638.

- Step 0 - add the number to the contacts list, with name Buddy 1.

- Step 1 - add the same number to the contacts, with empty name. Result: You get a warning this number is already present for Buddy 1! When receiving a call, Buddy 1 is displayed.

- Step 2 - edit the empty name contact and change the name to Buddy 2. Result: when receiving a call Buddy 2 is displayed.

- Step 3 - add the same number again, with name Buddy 0. This is the latest entry but it is sorted before the previous two (this is important). Result: You get a warning that this number is already present for Buddy 1 and Buddy 2. When receiving a call Buddy 0 is displayed.

Summary: so it looks like Nokia fixed the issue with empty names, by simply ignoring them but when multiple duplicate contacts are available it displays the name of the last entered in the contact list, independent of name sort order.
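The behaviour observed on the Nokia 6303 can be written down as a small lookup rule. The sketch below is only a model of the test results above, not Nokia's actual code; the function name `displayed_name`, the phone number and the list-of-tuples contact store are all made up for illustration.

```python
def displayed_name(contacts, number):
    """Model of the observed Nokia 6303 behaviour (an assumption, not firmware code).

    contacts is a list of (name, number) tuples in the order they were entered.
    Entries with an empty name are ignored; among the rest, the most recently
    entered match wins, regardless of how the names sort.
    """
    matches = [name for name, num in contacts if num == number and name]
    return matches[-1] if matches else None


number = "555-0100"  # hypothetical phone number

# Step 1: a named entry plus a duplicate with an empty name
step1 = [("Buddy 1", number), ("", number)]
# Step 2: the empty name is edited to "Buddy 2"
step2 = [("Buddy 1", number), ("Buddy 2", number)]
# Step 3: a third duplicate which sorts first but was entered last
step3 = step2 + [("Buddy 0", number)]

for contacts in (step1, step2, step3):
    print(displayed_name(contacts, number))
```

The three calls print the same names the phone displayed in steps 1 to 3.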

Later today or tomorrow I will test on Nokia 700, which runs Symbian OS, and update this post with more results.

Updated on 2013-03-19 23:50

Finally managed to test on Nokia 700. Software version is:

- Release: Nokia Belle Feature pack 1

- Software version: 112.010.1404

- Software version date: 2012-03-30

- Type: RM-670

Result: If a duplicate contact entry is present it doesn't matter if the name is empty or not. Both times no name was displayed when receiving a call. Looks like Nokia is not paying attention to regressions at all.

Android and iPhone

I don't own any Android or iPhone devices so I'm not able to test on them. If you have one, please let me know if this bug is still present and how the software behaves when multiple contacts share the same number or have empty names! Thanks!


django-social-auth tip: Reminder of Login Provider

Every now and then users forget their passwords. This is why I prefer using OAuth and social network accounts like GitHub or Twitter. But what do you do when somebody forgets which OAuth provider they used to log in to your site? Your website needs a reminder. This is how to implement one if using django-social-auth.

Back-end

Create a similar view on your Django back-end

    from django.contrib.auth.models import User
    from django.http import HttpResponse
    from social_auth.models import UserSocialAuth
    from templated_email import send_templated_mail

    def ajax_social_auth_provider_reminder(request):
        """
            Remind the user which social auth provider they used to login.
        """
        # mimetype= matches the Django release used here; newer releases use content_type=
        if not request.POST:
            return HttpResponse("Not a POST", mimetype='text/plain', status=403)

        email = request.POST.get('email', "")
        email = email.strip()
        if not email or (email.find("@") == -1):
            return HttpResponse("Invalid address!", mimetype='text/plain', status=400)

        try:
            user = User.objects.filter(email=email, is_active=True).only('pk')[0]
        except IndexError:
            # the queryset was empty, i.e. no active user has this address
            return HttpResponse("No user with address '%s' found!" % email, mimetype='text/plain', status=400)

        # collect the names of all providers associated with this account
        providers = []
        for sa in UserSocialAuth.objects.filter(user=user.pk).only('provider'):
            providers.append(sa.provider.title())

        if len(providers) > 0:
            send_templated_mail(
                template_name='social_provider_reminder',
                from_email='Difio <reminder@dif.io>',
                recipient_list=[email],
                context={'providers' : providers},
            )
            return HttpResponse("Reminder sent to '%s'" % email, mimetype='text/plain', status=200)
        else:
            return HttpResponse("User found but no social providers found!", mimetype='text/plain', status=400)

This example assumes it is called via a POST request which contains the email address. All responses are handled at the front-end via JavaScript. If a user with the specified email address exists, this address will receive a reminder listing all social auth providers associated with the user account.

Front-end

On the browser side I like to use Dojo. Here is a simple script which connects to a form and POSTs the data back to the server.

    require(["dojo"]);
    require(["dijit"]);

    function sendReminderForm(){
        var form = dojo.byId("reminderForm");

        dojo.connect(form, "onsubmit", function(event){
            dojo.stopEvent(event);
            dijit.byId("dlgForgot").hide();
            var xhrArgs = {
                form: form,
                handleAs: "text",
                load: function(data){alert(data);},
                error: function(error, ioargs){alert(ioargs.xhr.responseText);}
            };
            var deferred = dojo.xhrPost(xhrArgs);
        });
    }
    dojo.ready(sendReminderForm);

You can try this out at Difio and let me know how it works for you!


Python Twitter + django-social-auth == Hello New User

I have been experimenting with the twitter module for Python and decided to combine it with django-social-auth to welcome new users who join Difio. In this post I will show you how to tweet on behalf of the user when they join your site and send them a welcome email.

Configuration

In django-social-auth the authentication workflow is handled by an operations pipeline where custom functions can be added or default items can be removed to provide custom behavior. This is how our pipeline looks:

    SOCIAL_AUTH_PIPELINE = (
        'social_auth.backends.pipeline.social.social_auth_user',
        #'social_auth.backends.pipeline.associate.associate_by_email',
        'social_auth.backends.pipeline.user.get_username',
        'social_auth.backends.pipeline.user.create_user',
        'social_auth.backends.pipeline.social.associate_user',
        'social_auth.backends.pipeline.social.load_extra_data',
        'social_auth.backends.pipeline.user.update_user_details',
        'myproject.tasks.welcome_new_user'
    )

This is the default plus an additional method at the end to welcome new users.

You also have to create and configure a Twitter application so that users can login with Twitter OAuth to your site. RTFM for more information on how to do this.

Custom pipeline actions

This is how the custom pipeline action should look:

    from urlparse import parse_qs

    def welcome_new_user(backend, user, social_user, is_new=False, new_association=False, *args, **kwargs):
        """
            Part of SOCIAL_AUTH_PIPELINE. Works with django-social-auth==0.7.21 or newer
            @backend - social_auth.backends.twitter.TwitterBackend (or other) object
            @user - User (if is_new) or django.utils.functional.SimpleLazyObject (if new_association)
            @social_user - UserSocialAuth object
        """
        if is_new:
            send_welcome_email.delay(user.email, user.first_name)

        if backend.name == 'twitter':
            if is_new or new_association:
                access_token = social_user.extra_data['access_token']
                parsed_tokens = parse_qs(access_token)
                oauth_token = parsed_tokens['oauth_token'][0]
                oauth_secret = parsed_tokens['oauth_token_secret'][0]
                tweet_on_join.delay(oauth_token, oauth_secret)

        return None

This code works with django-social-auth==0.7.21 or newer. In older versions the new_association parameter is missing, as I discovered. If you use an older version you won't be able to distinguish between newly created accounts and ones which have associated another OAuth backend. You are warned!
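To make the token handling in the pipeline action easier to follow, here is a minimal stand-alone sketch of what parse_qs does with a query-string style access token. The token value is made up for illustration, and the import uses Python 3's urllib.parse instead of the Python 2 urlparse module used in the pipeline code.

```python
from urllib.parse import parse_qs

# Hypothetical value, shaped like the string stored in extra_data['access_token']
access_token = "oauth_token_secret=s3cr3t&oauth_token=12345-abcdef"

parsed_tokens = parse_qs(access_token)

# parse_qs maps every key to a *list* of values, hence the [0] index
oauth_token = parsed_tokens['oauth_token'][0]
oauth_secret = parsed_tokens['oauth_token_secret'][0]

print(oauth_token, oauth_secret)
```

Because every value comes back as a list, forgetting the `[0]` would pass a list instead of a string to the Twitter client.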

Tweet & email

Sending the welcome email is out of the scope of this post. I am using django-templated-email to define how emails look and sending them via Amazon SES. See Email Logging for Django on RedHat OpenShift With Amazon SES for more information on how to configure emailing with SES.

Here is how the Twitter code looks:

    import twitter
    from celery.task import task
    from settings import TWITTER_CONSUMER_KEY, TWITTER_CONSUMER_SECRET

    @task
    def tweet_on_join(oauth_token, oauth_secret):
        """
            Tweet when the user is logged in for the first time or
            when new Twitter account is associated.

            @oauth_token - string
            @oauth_secret - string
        """
        t = twitter.Twitter(
                auth=twitter.OAuth(
                    oauth_token, oauth_secret,
                    TWITTER_CONSUMER_KEY, TWITTER_CONSUMER_SECRET
                )
            )
        t.statuses.update(status='Started following open source changes at http://www.dif.io!')

This will post a new tweet on behalf of the user, telling everyone they joined your website!

NOTE: tweet_on_join and send_welcome_email are Celery tasks, not ordinary Python functions. This has the advantage that these actions are executed asynchronously and do not slow down the user interface.

Are you doing something special when a user joins your website? Please share your comments below. Thanks!


Tip: Delete User Profiles with django-social-auth

A common feature of websites is the 'DELETE ACCOUNT' or 'DISABLE ACCOUNT' button. This is how to implement it if using django-social-auth.

    delete_objects_for_user(request.user.pk) # optional
    UserSocialAuth.objects.filter(user=request.user).delete()
    User.objects.filter(pk=request.user.pk).update(is_active=False, email=None)
    return HttpResponseRedirect(reverse('django.contrib.auth.views.logout'))

This snippet does the following:

- Delete (or archive) all objects for the current user;

- Delete the social auth profile(s) because there is no way to disable them. DSA will create new objects if the user logs in again;

- Disable the User object. You could also delete it but mind foreign keys;

- Clear the email for the User object - if a new user is created after deletion we don't want duplicated email addresses in the database;

- Finally redirect the user to the logout view.


Email Logging for Django on RedHat OpenShift with Amazon SES

Sending email in the cloud can be tricky. IPs of cloud providers are blacklisted because of frequent abuse. For that reason I use Amazon SES as my email backend. Here is how to configure Django to send emails to site admins when something goes wrong.

    # Valid addresses only.
    ADMINS = (
        ('Alexander Todorov', 'atodorov@example.com'),
    )

    LOGGING = {
        'version': 1,
        'disable_existing_loggers': False,
        'handlers': {
            'mail_admins': {
                'level': 'ERROR',
                'class': 'django.utils.log.AdminEmailHandler'
            }
        },
        'loggers': {
            'django.request': {
                'handlers': ['mail_admins'],
                'level': 'ERROR',
                'propagate': True,
            },
        }
    }

    # Used as the From: address when reporting errors to admins
    # Needs to be verified in Amazon SES as a valid sender
    SERVER_EMAIL = 'django@example.com'

    # Amazon Simple Email Service settings
    AWS_SES_ACCESS_KEY_ID = 'xxxxxxxxxxxx'
    AWS_SES_SECRET_ACCESS_KEY = 'xxxxxxxx'
    EMAIL_BACKEND = 'django_ses.SESBackend'

You also need the django-ses dependency.

See http://docs.djangoproject.com/en/dev/topics/logging for more details on how to customize your logging configuration.

I am using this configuration successfully at RedHat's OpenShift PaaS environment. Other users have reported it works for them too. It should work with any other PaaS provider.


Performance Test: Amazon EBS vs. Instance Storage, Pt.1

I'm exploring the possibility of speeding up my cloud database so I've run some basic tests against the storage options available to Amazon EC2 instances. The instance was m1.large with High I/O performance and two additional disks of the same size:

- /dev/xvdb - type EBS

- /dev/xvdc - type instance storage

Both are Xen para-virtual disks. The difference is that EBS is persistent across reboots while instance storage is ephemeral.

hdparm

For a quick test I used hdparm. The manual says:

    -T  Perform timings of cache reads for benchmark and comparison purposes.
        This displays the speed of reading directly from the Linux buffer cache
        without disk access. This measurement is essentially an indication of
        the throughput of the processor, cache, and memory of the system under test.

    -t  Perform timings of device reads for benchmark and comparison purposes.
        This displays the speed of reading through the buffer cache to the disk
        without any prior caching of data. This measurement is an indication of how
        fast the drive can sustain sequential data reads under Linux, without any
        filesystem overhead.

The results of 3 runs of hdparm are shown below:

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11984 MB in 1.98 seconds = 6038.36 MB/sec

Timing buffered disk reads: 158 MB in 3.01 seconds = 52.52 MB/sec

/dev/xvdc:

Timing cached reads: 11988 MB in 1.98 seconds = 6040.01 MB/sec

Timing buffered disk reads: 1810 MB in 3.00 seconds = 603.12 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11892 MB in 1.98 seconds = 5991.51 MB/sec

Timing buffered disk reads: 172 MB in 3.00 seconds = 57.33 MB/sec

/dev/xvdc:

Timing cached reads: 12056 MB in 1.98 seconds = 6075.29 MB/sec

Timing buffered disk reads: 1972 MB in 3.00 seconds = 657.11 MB/sec

# hdparm -tT /dev/xvdb /dev/xvdc

/dev/xvdb:

Timing cached reads: 11994 MB in 1.98 seconds = 6042.39 MB/sec

Timing buffered disk reads: 254 MB in 3.02 seconds = 84.14 MB/sec

/dev/xvdc:

Timing cached reads: 11890 MB in 1.99 seconds = 5989.70 MB/sec

Timing buffered disk reads: 1962 MB in 3.00 seconds = 653.65 MB/sec

Result: Sequential reads from instance storage are 10x faster compared to EBS on average.
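For reference, the "10x on average" figure can be reproduced from the buffered disk read numbers of the three runs. The snippet below is just that arithmetic; the variable names are mine, the values are copied from the hdparm output above.

```python
# Buffered disk read results (MB/sec) copied from the three hdparm runs above
ebs = [52.52, 57.33, 84.14]          # /dev/xvdb
instance = [603.12, 657.11, 653.65]  # /dev/xvdc


def mean(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


ebs_avg = mean(ebs)
instance_avg = mean(instance)
ratio = instance_avg / ebs_avg

print("EBS average:              %.2f MB/sec" % ebs_avg)
print("Instance storage average: %.2f MB/sec" % instance_avg)
print("Ratio:                    %.1fx" % ratio)
```

The ratio comes out just under 10, which is where the rounded figure comes from.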

IOzone

I'm running MySQL and sequential data reads are probably an overly idealistic scenario. So I found another benchmark suite, called IOzone. I used the 3-414 version built from the official SRPM.

IOzone performs multiple tests. I'm interested in read/re-read, random-read/write, read-backwards and stride-read.

For this round of testing I've used the ext4 filesystem with and without a journal on both types of disks. I also experimented with running IOzone inside a ramfs mounted directory. However, I didn't have the time to run the test suite multiple times.

Then I used iozone-results-comparator to visualize the results. (I had to do a minor fix to the code to run inside virtualenv and install all missing dependencies).

Raw IOzone output, data visualization and the modified tools are available in the aws_disk_benchmark_w_iozone.tar.bz2 file (size 51M).

Graphics

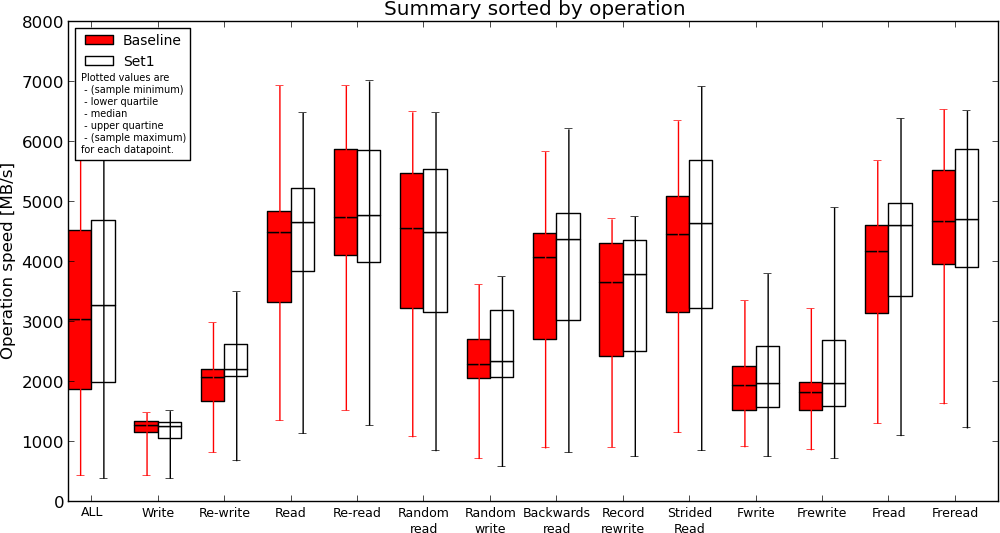

EBS without journal (Baseline) vs. Instance Storage without journal (Set1)

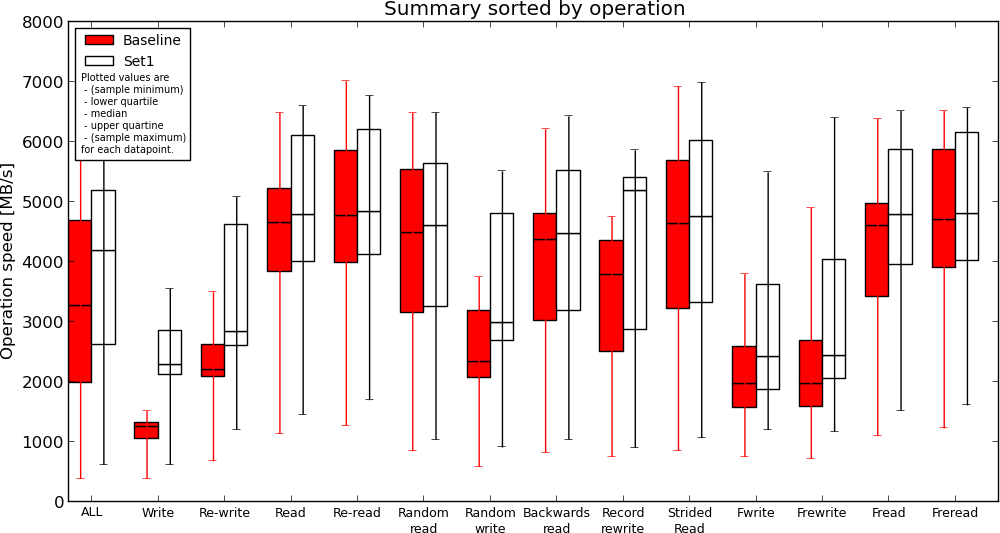

Instance Storage without journal (Baseline) vs. Ramfs (Set1)

Results

- ext4 journal has no effect on reads, causes slow down when writing to disk. This is expected;

- Instance storage is faster compared to EBS but not much. If I understand the results correctly, read performance is similar in some cases;

- Ramfs is definitely the fastest but read performance compared to instance storage is not two-fold (or more) as I expected;

Conclusion

Instance storage appears to be faster (and this is expected) but I'm still not sure if my application will gain any speed improvement, or how much, if migrated to read from instance storage (or ramfs) instead of EBS. I will be performing more real-world tests next time, by comparing execution time for some of my largest SQL queries.

If you have other ideas how to adequately measure I/O performance in the AWS cloud, please use the comments below.


Tip: Generating Directory Listings with wget

Today I was looking to generate a list of all files under a remote site directory, including subdirectories. I found no built-in options for this in wget. This is how I did it:

wget http://example.com/dir/ --spider -r -np 2>&1 | grep http:// | tr -s ' ' | cut -f3 -d' '

I managed to retrieve 12212 entries from the URL I was exploring.
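If the shell pipeline looks cryptic, here is a rough Python equivalent of the `grep | tr | cut` part, applied to captured wget output. The function name and the sample log are made up; the sample lines assume wget's usual `--date time--  URL` log format, so adjust if your wget prints something different.

```python
import re


def urls_from_wget_log(log_text):
    """Mimic: grep http:// | tr -s ' ' | cut -f3 -d' '"""
    urls = []
    for line in log_text.splitlines():
        if "http://" not in line:            # grep http://
            continue
        squeezed = re.sub(r" +", " ", line)  # tr -s ' '
        fields = squeezed.split(" ")         # cut -d' '
        if len(fields) >= 3:
            urls.append(fields[2])           # -f3
    return urls


# Hypothetical sample of what `wget --spider -r -np` writes to stderr
sample_log = """\
Spider mode enabled. Check if remote file exists.
--2013-03-01 10:00:00--  http://example.com/dir/
Resolving example.com... 192.0.2.1
--2013-03-01 10:00:01--  http://example.com/dir/file.txt
"""

for url in urls_from_wget_log(sample_log):
    print(url)
```

The URL is the third field because the timestamp takes the first two once repeated spaces are squeezed.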


How Large Are My MySQL Tables

Image CC-BY-SA, Michael Mandiberg


I found two good blog posts about investigating MySQL internals: Researching your MySQL table sizes and Finding out largest tables on MySQL Server. Using the queries against my site Difio showed:

mysql> SELECT CONCAT(table_schema, '.', table_name),

-> CONCAT(ROUND(table_rows / 1000000, 2), 'M') rows,

-> CONCAT(ROUND(data_length / ( 1024 * 1024 * 1024 ), 2), 'G') DATA,

-> CONCAT(ROUND(index_length / ( 1024 * 1024 * 1024 ), 2), 'G') idx,

-> CONCAT(ROUND(( data_length + index_length ) / ( 1024 * 1024 * 1024 ), 2), 'G') total_size,

-> ROUND(index_length / data_length, 2) idxfrac

-> FROM information_schema.TABLES

-> ORDER BY data_length + index_length DESC;

+----------------------------------------+-------+-------+-------+------------+---------+

| CONCAT(table_schema, '.', table_name) | rows | DATA | idx | total_size | idxfrac |

+----------------------------------------+-------+-------+-------+------------+---------+

| difio.difio_advisory | 0.04M | 3.17G | 0.00G | 3.17G | 0.00 |

+----------------------------------------+-------+-------+-------+------------+---------+

The table of interest is difio_advisory which had 5 longtext fields. Those fields were not used for filtering or indexing the rest of the information. They were just storage fields - a 'nice' side effect of using Django's ORM.

I have migrated the data to Amazon S3 and stored it in JSON format there. After dropping these fields the table was considerably smaller:

+----------------------------------------+-------+-------+-------+------------+---------+

| CONCAT(table_schema, '.', table_name) | rows | DATA | idx | total_size | idxfrac |

+----------------------------------------+-------+-------+-------+------------+---------+

| difio.difio_advisory | 0.01M | 0.00G | 0.00G | 0.00G | 0.90 |

+----------------------------------------+-------+-------+-------+------------+---------+

For those interested I'm using django-storages on the back-end to save the data in S3 when generated. On the front-end I'm using dojo.xhrGet to load the information directly into the browser.

I'd love to hear your feedback in the comments section below. Let me know what you found for your own databases. Were there any issues? How did you deal with them?


Secure VNC Installation of Red Hat Enterprise Linux 6

Image CC-BY-SA, Red Hat

From time to time I happen to remotely install Red Hat Enterprise Linux servers via the Internet. When the system configuration is not decided upfront you need to use interactive mode. This means VNC in my case.

In this tutorial I will show you how to make VNC installations more secure when using public networks to connect to the server.

Meet your tools

Starting with Red Hat Enterprise Linux 6 and all the latest Fedora releases, the installer supports SSH connections during install.

Note that by default, root has a blank password.

If you don't want any user to be able to ssh in and have full access to your hardware, you must specify sshpw for username root. Also note that if Anaconda fails to parse the kickstart file, it will allow anyone to login as root and have full access to your hardware.

Fedora Kickstart manual https://fedoraproject.org/wiki/Anaconda/Kickstart#sshpw

Preparation

We are going to use SSH port forwarding and tunnel VNC traffic through it. Create a kickstart file as shown below:

install

url --url http://example.com/path/to/rhel6

lang en_US.UTF-8

keyboard us

network --onboot yes --device eth0 --bootproto dhcp

vnc --password=s3cr3t

sshpw --user=root s3cr3t

The first 5 lines configure the loader portion of the installer. They will set up networking and fetch the installer image called stage2. This is completely automated. NB: If you miss some of the lines or have a syntax error the installer will prompt for values. You either need remote console access or somebody present at the server console!

The last 2 lines configure passwords for VNC and SSH respectively.

Make this file available over HTTP(S), FTP or NFS.

NB: Make sure that the file is available on the same network where your server is, or use HTTPS if on public networks.

Installation

Now, using your favorite installation media start the installation process like this:

boot: linux sshd=1 ks=http://example.com/ks.cfg

After a minute or more the installer will load stage2, with the interactive VNC session. You need to know the IP address or hostname of the server. Either look into DHCP logs, have somebody look at the server console and tell you that (it's printed on tty1) or script it in a %pre script which will send you an email for example.

When ready, redirect one of your local ports through SSH to the VNC port on the server:

$ ssh -L 5902:localhost:5901 -N root@server.example.com

Now connect to DISPLAY :2 on your system to begin the installation:

$ vncviewer localhost:2 &
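The port numbers in the two commands above follow the usual VNC convention that display :N listens on TCP port 5900 + N. A small sketch of that arithmetic is shown below; the helper names are made up, and it assumes the installer's VNC server is on display :1, which is what the 5901 in the ssh command implies.

```python
VNC_BASE_PORT = 5900  # VNC display :N listens on TCP port 5900 + N


def vnc_port(display):
    """TCP port for a given VNC display number."""
    return VNC_BASE_PORT + display


def tunnel_command(local_display, remote_display, host):
    """Build the ssh port-forwarding command for the given displays."""
    return "ssh -L %d:localhost:%d -N root@%s" % (
        vnc_port(local_display), vnc_port(remote_display), host)


# Local display :2 forwarded to display :1 on the server being installed
print(tunnel_command(2, 1, "server.example.com"))
```

Picking a different local display only changes the first port number and the display you pass to vncviewer.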

Warning Bugs Present

As it happens, I find bugs everywhere. This is no exception. Depending on your network/DHCP configuration, the IP address may change mid-air during the install and cause the VNC client connection to freeze.

The reason for this bug is evident from the code (rhel6-branch):

    if not anaconda.isKickstart:
        self.utcCheckbox.set_active(not hasWindows(anaconda.id.bootloader))

    if not anaconda.isKickstart and not hasWindows(anaconda.id.bootloader):
        asUtc = True

Because we are using a kickstart file Anaconda will assume the system clock DOES NOT use UTC. If you forget to configure it manually you may see the time on the server shifting back or forward (depending on your timezone) while installing. If your DHCP is configured for a short lease time the address will expire before the installation completes. When a new address is requested from DHCP it may be different and this will cause your VNC connection to freeze.

To work around this issue select the appropriate value for the system clock settings during install and possibly use a static IP address during the installation.

Feedback

As always I'd love to hear your feedback in the comments section below. Let me know your tips and tricks to perform secure remote installations using public networks.


The shoes start-up

Did you know there is a start-up company producing men's shoes? Me neither, and I love good quality shoes.

Beckett Simonon launched in 2012, and slashed prices by working with local manufacturers and selling directly to customers online.

The company, which currently operates out of Bogota, Colombia, says it has generated $80,000 in revenue after two months in operation, and has five employees.

Julie Strickland http://www.inc.com/julie-strickland/mens-fashion-startups.html

From what I can tell Beckett Simonon produce nice shoes. I would rate them between very good and high quality, just looking at the pictures. All designs are very classy and stylish, which is rare these days. Leather looks high quality, soles too.

The ones I loved right away are the Bailey Chukka Boot model, shown here. So far my gut feeling about shoes has never been wrong, even on the Internet, so I'm thinking to pre-order a pair.

Does anybody want to team up for a joint order?


Performance test of MD5, SHA1, SHA256 and SHA512

A few months ago I wrote django-s3-cache. This is an Amazon Simple Storage Service (S3) cache backend for Django which uses hashed file names. django-s3-cache uses sha1 instead of md5 which appeared to be faster at the time. I recall that my testing wasn't very robust so I did another round.

Test Data

The file urls.txt contains 10000 unique paths from the dif.io website and looks like this:

/updates/Django-1.3.1/Django-1.3.4/7858/

/updates/delayed_paperclip-2.4.5.2 c23a537/delayed_paperclip-2.4.5.2/8085/

/updates/libv8-3.3.10.4 x86_64-darwin-10/libv8-3.3.10.4/8087/

/updates/Data::Compare-1.22/Data::Compare-Type/8313/

/updates/Fabric-1.4.0/Fabric-1.4.4/8652/

Test Automation

I used the standard timeit module in Python.

    #!/usr/bin/python

    import timeit

    t = timeit.Timer(
    """
    import hashlib
    for line in url_paths:
        h = hashlib.md5(line).hexdigest()
    #    h = hashlib.sha1(line).hexdigest()
    #    h = hashlib.sha256(line).hexdigest()
    #    h = hashlib.sha512(line).hexdigest()
    """
    ,
    """
    url_paths = []
    f = open('urls.txt', 'r')
    for l in f.readlines():
        url_paths.append(l)
    f.close()
    """
    )

    print t.repeat(repeat=3, number=1000)

Test Results

The main statement hashes all 10000 entries one by one. This statement is executed 1000 times in a loop, which is repeated 3 times. I have Python 2.6.6 on my system. After every test run the system was rebooted. Execution time in seconds is available below.

MD5 10.275190830230713, 10.155328989028931, 10.250311136245728

SHA1 11.985718965530396, 11.976419925689697, 11.86873197555542

SHA256 16.662450075149536, 21.551337003707886, 17.016510963439941

SHA512 18.339390993118286, 18.11187481880188, 18.085782051086426

Looks like I was wrong the first time! MD5 is still faster but not that much. I will stick with SHA1 for the time being.

If you are interested in performance testing, check out the performance testing books on Amazon.

As always I’d love to hear your thoughts and feedback. Please use the comment form below.

Python 2.7 vs. 3.6 and BLAKE2

UPDATE: added on June 9th 2017

After request from my reader refi64 I've tested this again between different versions of Python and included a few more hash functions. The test data is the same, the test script was slightly modified for Python 3:

    import timeit

    print (timeit.repeat(
    """
    import hashlib
    for line in url_paths:
    #    h = hashlib.md5(line).hexdigest()
    #    h = hashlib.sha1(line).hexdigest()
    #    h = hashlib.sha256(line).hexdigest()
    #    h = hashlib.sha512(line).hexdigest()
    #    h = hashlib.blake2b(line).hexdigest()
        h = hashlib.blake2s(line).hexdigest()
    """
    ,
    """
    url_paths = [l.encode('utf8') for l in open('urls.txt', 'r').readlines()]
    """,
    repeat=3, number=1000))

Test was repeated 3 times for each hash function and the best time was taken into account. The test was performed on a recent Fedora 26 system. The results are as follows:

Python 2.7.13

MD5 [13.94771409034729, 13.931367874145508, 13.908519983291626]

SHA1 [15.20741891860962, 15.241390943527222, 15.198163986206055]

SHA256 [17.22162389755249, 17.229840993881226, 17.23402190208435]

SHA512 [21.557533979415894, 21.51376700401306, 21.522911071777344]

Python 3.6.1

MD5 [11.770181038000146, 11.778772834999927, 11.774679265000032]

SHA1 [11.5838599839999, 11.580340686999989, 11.585769942999832]

SHA256 [14.836309305999976, 14.847088003999943, 14.834776135999846]

SHA512 [19.820048629999746, 19.77282728099999, 19.778471210000134]

BLAKE2b [12.665497404000234, 12.668979115000184, 12.667314543999964]

BLAKE2s [11.024885618000098, 11.117366972000127, 10.966767880999669]

- Python 3 is faster than Python 2

- SHA1 is a bit faster than MD5, maybe there's been some optimization

- BLAKE2b is faster than SHA256 and SHA512

- BLAKE2s is the fastest of all functions
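The ranking in the list above can be double-checked by taking the best of the three runs for each function. The sketch below does this for the Python 3.6.1 numbers, which I have rounded to three decimals to keep it short; the dictionary layout is only for illustration.

```python
# Python 3.6.1 timings (seconds) from the results above, rounded to three decimals
results = {
    'MD5':     [11.770, 11.779, 11.775],
    'SHA1':    [11.584, 11.580, 11.586],
    'SHA256':  [14.836, 14.847, 14.835],
    'SHA512':  [19.820, 19.773, 19.778],
    'BLAKE2b': [12.665, 12.669, 12.667],
    'BLAKE2s': [11.025, 11.117, 10.967],
}

# Best (lowest) time per hash function, then sort fastest first
best = {name: min(times) for name, times in results.items()}
ranking = sorted(best, key=best.get)

for name in ranking:
    print("%-8s %.3f" % (name, best[name]))
```

Sorted this way BLAKE2s comes first, followed by SHA1, MD5, BLAKE2b, SHA256 and SHA512.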

Note: BLAKE2b is optimized for 64-bit platforms, like mine, and I thought it would be faster than BLAKE2s (optimized for 8- to 32-bit platforms) but that's not the case. I'm not sure why that is though. If you do, please let me know in the comments below!


Tip: Save Money on Amazon - Buy Used Books

I like to buy books, the real ones, printed on paper. This however comes at a certain price when buying from Amazon. The book price itself is usually bearable but many times shipping costs to Bulgaria will double the price. Especially if you are making a single book order.

To save money I started buying used books when available. For books that are not so popular I look for items that have been owned by a library.

This is how I got a hardcover 1984 edition of The Gentlemen's Clubs of London by Anthony Lejeune for $10. This is my best deal so far.

The book was brand new I dare to say. There was no edge wear, there were no damaged pages, and the colors were nice and vibrant. The second page had the library sign and no other marks.

Let me know if you have had any experience buying used books online. Did you score a great deal like I did?


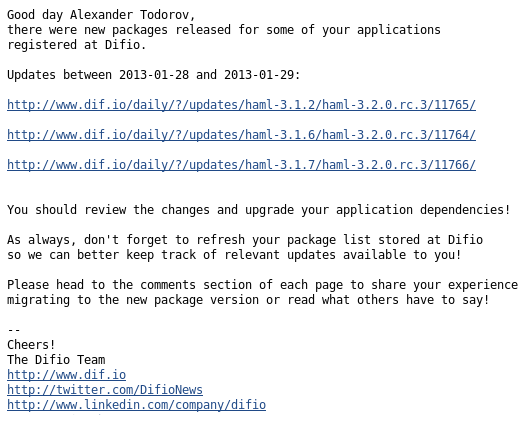

Click Tracking without MailChimp

Here is a standard notification message that users at Difio receive. It is plain text, no HTML crap, short and URLs are clean and descriptive. As the project lead developer I wanted to track when people click on these links and visit the website but also keep existing functionality.

Standard approach

A pretty common approach when sending huge volumes of email is to use an external service, such as MailChimp. This is one of many email marketing services which comes with a lot of features. The most important to me was analytics and reports.

The downside is that MailChimp (and I guess others) use HTML formatted emails extensively. I don't like that and I'm sure my users will not like it either. They are all developers. Not to mention that MailChimp is much more expensive than Amazon SES which I use currently. No MailChimp for me!

Another common approach, used by Feedburner by the way, is to use shortened URLs which redirect to the original ones and measure clicks in between. I also didn't like this for two reasons: 1) the shortened URLs look ugly and they are not at all descriptive and 2) I need to generate them automatically and maintain all the mappings. Why bother?

How I did it?

So I needed something which will do a redirect to a predefined URL, measure how many redirects were there (essentially clicks on the link) and look nice. The solution is very simple, if you have not recognized it by now from the picture above.

I opted for a custom redirect engine, which will add tracking information to the destination URL so I can track it in Google Analytics.

Previous URLs were of the form http://www.dif.io/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/.

I've added the humble /daily/? prefix before the URL path so it becomes

http://www.dif.io/daily/?/updates/haml-3.1.2/haml-3.2.0.rc.3/11765/

Now /updates/haml-3.1.2/haml-3.2.0.rc.3/11765/ becomes a query string parameter which the /daily/index.html page uses as its destination. Before doing the redirect a script adds tracking parameters so that Google Analytics will properly report this visit. Here is the code:

<html>

<head>

<script type="text/javascript">

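// Full address of the current page, e.g. http://www.dif.io/daily/?/updates/...
var uri = window.location.toString();
// Everything after the first question mark is the destination path.
var question = uri.indexOf("?");
var param = uri.substring(question + 1, uri.length);
if (question > 0) {
    // Append the campaign parameters so Google Analytics attributes the visit to the email.
    window.location.href = param + '?utm_source=email&utm_medium=email&utm_campaign=Daily_Notification';
}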

</script>

</head>

<body></body>

</html>
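The page above assumes that the destination is a plain path with no query string or fragment of its own. A minimal sketch of a slightly more defensive variant follows. The function name buildTrackedUrl, the handling of an existing `?` or `#`, and the check that the destination is a same-site path are all assumptions added for illustration and are not part of the original page.

```javascript
// Campaign values copied from the redirect page in the post.
var TRACKING = 'utm_source=email&utm_medium=email&utm_campaign=Daily_Notification';

// Hypothetical helper: returns the redirect target for /daily/?<path>,
// or null when there is nothing safe to redirect to.
function buildTrackedUrl(uri) {
    var question = uri.indexOf('?');
    if (question < 0) {
        return null; // no destination given
    }
    var dest = uri.substring(question + 1);
    // Assumption: only same-site paths are accepted ("/x", not "//host" or "http://host").
    if (dest.charAt(0) !== '/' || dest.charAt(1) === '/') {
        return null;
    }
    // Keep a fragment, if any, after the tracking parameters.
    var hash = '';
    var hashPos = dest.indexOf('#');
    if (hashPos >= 0) {
        hash = dest.substring(hashPos);
        dest = dest.substring(0, hashPos);
    }
    // Use "&" when the destination already carries a query string.
    var sep = dest.indexOf('?') >= 0 ? '&' : '?';
    return dest + sep + TRACKING + hash;
}

// Only redirect when running inside a browser.
if (typeof window !== 'undefined') {
    var target = buildTrackedUrl(window.location.toString());
    if (target !== null) {
        window.location.href = target;
    }
}
```

Rejecting anything that does not start with a single slash keeps the page from being used to bounce visitors to an arbitrary third-party site.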

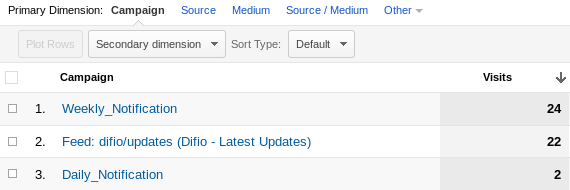

Previously Google Analytics was reporting these visits as direct hits while now it lists them under campaigns like so:

Because all visitors of Difio use JavaScript-enabled browsers, I combined this approach with another one: removing the query string with JavaScript so that the visitor sees clean URLs.

Why JavaScript?

You may be asking why the hell I am using JavaScript and not Apache's wonderful mod_rewrite module? This is because the destination URLs are hosted in Amazon S3 and I'm planning to integrate with Amazon CloudFront. Neither of them supports .htaccess rules or anything else similar to mod_rewrite.

As always I'd love to hear your thoughts and feedback. Please use the comment form below.


StartUP Talk#5 - book list

Yesterday I attended an interesting talk by Teodor Panayotov held at betahaus Sofia. He talked about his path to success and all the companies he has worked for or founded.

I'm writing this post as a personal note to not forget all the good books Teodor mentioned in his presentation.

I intend to read these four as a start:

The 4-Hour Workweek: Escape 9-5, Live Anywhere, and Join the New Rich (Expanded and Updated)

The E-Myth Revisited: Why Most Small Businesses Don't Work and What to Do About It

Mastering the Hype Cycle: How to Choose the Right Innovation at the Right Time (Gartner)

The rest he recommended are:

How to Get Rich: One of the World's Greatest Entrepreneurs Shares His Secrets

Abundance: The Future Is Better Than You Think

The Singularity Is Near: When Humans Transcend Biology

The Law Of Success: Napoleon Hill

If you happen to read any of these before me, please share your thoughts on the book.


Remove Query String with JavaScript and HTML5

A query string is the stuff after the question mark in URLs.

Why remove query strings?

Two reasons: clean URLs and social media.

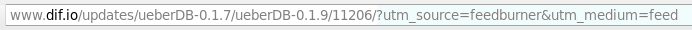

Clean URLs not only look better but also prevent users from seeing that you are tracking where they came from. The picture above shows what the address bar looks like after the user clicks a link in Feedburner. The highlighted part is what Google Analytics uses to distinguish Feedburner traffic from other types of traffic. I don't want my users to see this.

As you know, social media sites give URLs a score, whether it is based on number of bookmarks, reviews, comments, likes or whatever. Higher scores usually result in increased traffic to your site. Query strings mess things up because http://www.dif.io and http://www.dif.io/?query are usually considered two different URLs. So instead of having a high number of likes for your page you get several scores and never make it to the headlines.

JavaScript and HTML5 to the rescue

Place this JavaScript code in the <head> section of your pages. Preferably near the top.

<script type="text/javascript">

var uri = window.location.toString();

if (uri.indexOf("?") > 0) {

var clean_uri = uri.substring(0, uri.indexOf("?"));

window.history.replaceState({}, document.title, clean_uri);

}

</script>

This code will clean the URL in the browser address bar without reloading the page. It works for HTML5 enabled browsers.

This works for me with Firefox 10.0.12, Opera 12.02.1578 and Chrome 24.0.1312.56 under Linux.

In fact I'm using this snippet on this very blog as well.

UPDATE: I've migrated to Pelican and haven't enabled this script on the blog!

Updated on 2013-01-30

Here is another approach proposed by reader Kamen Mazdrashki:

<script type="text/javascript">

var clean_uri = location.protocol + "//" + location.host + location.pathname;

/*

var hash_pos = location.href.indexOf("#");

if (hash_pos > 0) {

var hash = location.href.substring(hash_pos, location.href.length);

clean_uri += hash;

}

*/

window.history.replaceState({}, document.title, clean_uri);

</script>

If you'd like to keep the hash tag aka named anchor aka fragment identifier at the end of the URL then uncomment the commented section.

I've tested removing the hashtag from the URL. Firefox doesn't seem to scroll the page to where I wanted but your experience may vary. I didn't try hard enough to verify the results.

One question still remains though: Why would someone point users to a URL which contains named anchors and then remove them? I don't see a valid use case for this scenario.
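For the more common case of dropping the query string while keeping the fragment, here is a minimal sketch built on the standard URL API. This is an assumption on my side rather than something from the snippets above: the helper name stripQuery is made up, and the URL API is newer than the browsers listed earlier, so it has not been tested against them.

```javascript
// Hypothetical helper: returns href without its query string,
// leaving the protocol, host, path and fragment untouched.
function stripQuery(href) {
    var url = new URL(href);
    url.search = ''; // clearing search also drops the "?" itself
    return url.toString();
}

// Only touch the address bar in browsers that support replaceState.
if (typeof window !== 'undefined' && window.history && window.history.replaceState) {
    window.history.replaceState({}, document.title, stripQuery(window.location.href));
}
```

Because the fragment is left in place, the browser has no reason to change the scroll position.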

You may want to take a look at the many HTML5 and JavaScript books if you don't have enough experience with the subject. For those of you who are looking into Node.js I can recommend two books by my friend Krasi Tsonev: Node.js Blueprints - Practical Projects to Help You Unlock the Full Potential of Node.js and Node.js By Example.


Hello World

Hi everyone and welcome to my blog! My name is Alex and you can read more about me if you like.

After several years of rather unsuccessful blogging I decided to revamp my blog, influenced by the book Technical Blogging: Turn Your Expertise into a Remarkable Online Presence (Amazon | O'Reilly).

I'm starting fresh with a new domain name and a big list of topics to write about. This blog will be the place to share my thoughts on open source software, QA and cloud. From time to time I intend to write about start-ups and being a start-up owner as well.

All content is published under Creative Commons Attribution-ShareAlike 3.0 license and all source code is under the MIT license if not stated otherwise.

I hope you find it interesting and helpful.

Update 2013-09-22: I have migrated some older blog posts and materials scattered around various places to this site as well. So this isn't the very first post anymore!


About Me

My name is Alexander Todorov and this is how I look. It's pretty close!

I'm currently a contractor operating in the field of open source software and quality engineering and a start-up owner with many ideas.

My professional interests cover a vast range of topics including but not limited to Linux and open source, quality engineering, DevOps, cloud and platform-as-a-service, and programming with Python and Django.

I used to teach students at the Technical University of Sofia. Nowadays I teach the QA and Automation 101 course at HackBulgaria!

Execute this command to see my email:

echo 17535658@572.24 | tr 12345678 abdgortv

Below you can find a list of current ventures and some of the more interesting previous ones.

Mr. Senko is an open source tech support company, focusing on libraries and components used to build other applications. Whenever you need something fixed and released into a new package version, Mr. Senko is the go-to place!

Difio was a service targeted at developers who use open source packages and libraries. It shut down on Sept 10th 2014.

I have some hard-earned certificates including:

- RHCE - Red Hat Certified Engineer - Oct 2008

- RHCI - Red Hat Certified Instructor - Oct 2009

- RHCVA - Red Hat Certified Virtualization Administrator - Sep 2010

- Red Hat Partner Platform Certified Salesperson - Oct 2011

- Red Hat Partner Virtualization Certified Salesperson - Oct 2011

- Red Hat Partner Middleware Certified Salesperson - Oct 2011

and I also own some domains. If you are interested just ask!

- atodorov.org

- dif.io - not in use anymore

- Mr. Senko

- obuvki41plus.com

- otb.bg

- qecloud.com

- qecloud.net

- qecloud.org


Mission Impossible - ABRT Bugzilla Plugin on RHEL6

Some time ago Red Hat introduced the Automatic Bug Reporting Tool (ABRT) to their Red Hat Enterprise Linux platform. This is a nice tool which lets users easily report bugs to Red Hat. However, one of the plugins in the latest version doesn't seem usable at all.

First make sure you have the libreport-plugin-bugzilla package installed. This is the plugin that reports bugs directly to Bugzilla. It may not be installed by default because customers are supposed to report issues to Support first - this is what they pay for anyway. If you are a tech-savvy user though, you may want to skip Support and go straight to the developers.

To enable Bugzilla plugin:

- Edit the file /etc/libreport/events.d/bugzilla_event.conf, change the line

EVENT=report_Bugzilla analyzer=libreport reporter-bugzilla -b

to

EVENT=report_Bugzilla reporter-bugzilla -b

- Make sure ABRT will collect meaningful backtrace. If debuginfo is missing it will not let you continue. Edit the file /etc/libreport/events.d/ccpp_event.conf. There should be something like this:

EVENT=analyze_LocalGDB analyzer=CCpp
    abrt-action-analyze-core --core=coredump -o build_ids &&
    abrt-action-generate-backtrace &&
    abrt-action-analyze-backtrace
    (
        bug_id=$(reporter-bugzilla -h `cat duphash`) &&
        if test -n "$bug_id"; then
            abrt-bodhi -r -b $bug_id
        fi
    )

- Change it to look like this - i.e. add the missing /usr/libexec/ line:

EVENT=analyze_LocalGDB analyzer=CCpp
    abrt-action-analyze-core --core=coredump -o build_ids &&
    /usr/libexec/abrt-action-install-debuginfo-to-abrt-cache --size_mb=4096 &&
    abrt-action-generate-backtrace &&
    abrt-action-analyze-backtrace &&
    (
        bug_id=$(reporter-bugzilla -h `cat duphash`) &&
        if test -n "$bug_id"; then
            abrt-bodhi -r -b $bug_id
        fi
    )

Supposedly after everything is configured properly ABRT will install missing debuginfo packages, generate the backtrace and let you report it to Bugzilla. Because of bug 759443 this will not happen.

To work around the problem you can try to manually install the missing debuginfo packages. Go to your system profile in RHN and subscribe the system to all appropriate debuginfo channels. Then install the packages. In my case:

# debuginfo-install firefox

And finally - bug 800754 which was already reported!


Combining PDF Files On The Command Line

VERSION

Red Hat Enterprise Linux 6

PROBLEM

You have to create a single PDF file by combining multiple files - for example individually scanned pages.

ASSUMPTIONS

You know how to start a shell and navigate to the directory containing the files.

SOLUTION

If individual PDF files are named, for example, doc_01.pdf, doc_02.pdf, doc_03.pdf, doc_04.pdf, then you can combine them with the gs command:

$ gs -dBATCH -dNOPAUSE -sDEVICE=pdfwrite -sOutputFile=mydocument.pdf doc_*.pdf

The resulting PDF file will contain all pages from the individual files.

MORE INFO

The gs command is part of the ghostscript rpm package.

You can find more about it using man gs, the documentation file /usr/share/doc/ghostscript-*/index.html or http://www.ghostscript.com.

