Faster Travis CI tests with Docker cache

For a while now I've been running tests on Travis CI using Docker containers to build the project and execute the tests inside. In this post I will explain how to speed up execution times.

A Docker image is a filesystem snapshot similar to a virtual machine image. From these images we build containers (e.g. we run the container X from the image Y). The construction of Docker images is controlled via a Dockerfile, which contains a set of instructions on how to build the image.

For example:

FROM welder/web-nodejs:latest
MAINTAINER Brian C. Lane <bcl@redhat.com>

RUN dnf install -y nginx
CMD nginx -g "daemon off;"
EXPOSE 3000

## Do the things more likely to change below here. ##

COPY ./docker/nginx.conf /etc/nginx/

# Update node dependencies only if they have changed
COPY ./package.json /welder/package.json
RUN cd /welder/ && npm install

# Copy the rest of the UI files over and compile them
COPY . /welder/
RUN cd /welder/ && npm run build

COPY entrypoint.sh /usr/local/bin/entrypoint.sh
ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]

docker build is smart enough to build an intermediate layer for each instruction and store it on your computer. Each instruction is hashed and rebuilt only if it has changed; once one step is rebuilt, every step after it is rebuilt as well. Thus the stuff which doesn't change often goes first (like setting up a web server or a DB) and the stuff that changes (like the project source code) goes at the end. All of this is beautifully explained by Stefan Kanev in this video (in Bulgarian).
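The ordering rule can be illustrated with a deliberately simplified model of the layer cache. This is a sketch under assumptions, not Docker's real checksum algorithm: here each step's key is a hash of the previous step's key, the instruction text and, for COPY, a stand-in string for the copied files' contents. The function names and file contents are hypothetical.

```python
import hashlib

def layer_keys(instructions, files):
    """Compute one cache key per instruction (simplified model, not Docker's algorithm).

    instructions: Dockerfile instructions, in order.
    files: maps a COPY source to a stand-in string for its contents.
    """
    keys = []
    parent = ''
    for instr in instructions:
        h = hashlib.sha256()
        h.update(parent.encode())  # every layer depends on the layer below it
        h.update(instr.encode())
        if instr.startswith('COPY '):
            src = instr.split()[1]
            h.update(files.get(src, '').encode())  # COPY also depends on file contents
        parent = h.hexdigest()
        keys.append(parent)
    return keys

def rebuilt_steps(instructions, old_files, new_files):
    """1-based step numbers whose keys changed, i.e. that would miss the cache."""
    old = layer_keys(instructions, old_files)
    new = layer_keys(instructions, new_files)
    return [n for n, (a, b) in enumerate(zip(old, new), start=1) if a != b]

steps = [
    'FROM welder/web-nodejs:latest',
    'RUN dnf install -y nginx',
    'COPY ./package.json /welder/package.json',
    'RUN cd /welder/ && npm install',
    'COPY . /welder/',
    'COPY entrypoint.sh /usr/local/bin/entrypoint.sh',
]
v1 = {'./package.json': 'deps v1', '.': 'source v1', 'entrypoint.sh': 'script'}
v2 = {**v1, '.': 'source v2'}  # only the source code changed

print(rebuilt_steps(steps, v1, v2))  # [5, 6]
```

Step 6 is rebuilt even though entrypoint.sh did not change, because its parent layer did. That is why rarely changing steps belong at the top.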

Travis and Docker

While intermediate layer caching is a standard Docker feature, it is disabled by default in Travis CI and in every other CI service I was able to find. To be fair, CircleCI offers this as a premium feature, but their pricing plans for it aren't clear at all.

However, you can enable caching by following a few simple steps:

- Make your Docker images publicly available (e.g. on Docker Hub or Amazon EC2 Container Service);

- Before starting the test job do a docker pull my/image:latest;

- When building your Docker images in Travis add --cache-from my/image:latest to docker build;

- After successful execution docker tag the latest image with the build job number and docker push it again to the hub!
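The four steps could be scripted roughly as follows. This is a sketch, not my actual configuration: my/image is the placeholder image name used above, TRAVIS_JOB_NUMBER is the job number variable Travis CI exports, and the helper names and the dry-run mode are hypothetical additions so the commands can be reviewed before running them.

```python
import os
import subprocess

def build_commands(image):
    """Steps before the tests: seed the local cache, then build on top of it."""
    latest = image + ':latest'
    return [
        ['docker', 'pull', latest],
        ['docker', 'build', '--cache-from', latest, '-t', latest, '.'],
    ]

def publish_commands(image, job_number):
    """Steps after a successful run: tag with the job number and push."""
    latest = image + ':latest'
    numbered = '%s:%s' % (image, job_number)
    return [
        ['docker', 'tag', latest, numbered],
        ['docker', 'push', numbered],
        ['docker', 'push', latest],
    ]

def run(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(' '.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing step

job_number = os.environ.get('TRAVIS_JOB_NUMBER', 'local')
run(build_commands('my/image'))
run(publish_commands('my/image', job_number))
```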

NOTES:

- Everything you do will become public so take care not to expose internal code. Alternatively you may configure a private docker registry (e.g. Amazon EC2 CS) and use encrypted passwords for Travis to access your images;

- docker pull will download all layers that it needs. If your hosting is slow this will negatively impact execution times;

- docker push will upload only the layers that have been changed;

- I only push images coming from the master branch which are not from a pull request build job. This prevents me from accidentally messing something up.
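The last note can be expressed as a small guard. This is a sketch assuming the standard Travis CI variables TRAVIS_BRANCH and TRAVIS_PULL_REQUEST (the latter is the string "false" for builds that are not pull requests). The function name is hypothetical.

```python
import os

def should_push(env=None):
    """Push images only from master-branch builds that are not pull requests."""
    env = os.environ if env is None else env
    is_master = env.get('TRAVIS_BRANCH') == 'master'
    # For pull request builds TRAVIS_BRANCH is the *target* branch, so the
    # branch check alone would let PRs against master through.
    not_pr = env.get('TRAVIS_PULL_REQUEST') == 'false'
    return is_master and not_pr

print(should_push({'TRAVIS_BRANCH': 'master', 'TRAVIS_PULL_REQUEST': 'false'}))  # True
print(should_push({'TRAVIS_BRANCH': 'master', 'TRAVIS_PULL_REQUEST': '42'}))     # False
```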

If you examine the logs of Job #247.4 and Job #254.4 you will notice that almost all intermediate layers were re-used from cache:

Step 3/12 : RUN dnf install -y nginx
 ---> Using cache
 ---> 25311f052381
Step 4/12 : CMD nginx -g "daemon off;"
 ---> Using cache
 ---> 858606811c85
Step 5/12 : EXPOSE 3000
 ---> Using cache
 ---> d778cbbe0758
Step 6/12 : COPY ./docker/nginx.conf /etc/nginx/
 ---> Using cache
 ---> 56bfa3fa4741
Step 7/12 : COPY ./package.json /welder/package.json
 ---> Using cache
 ---> 929f20da0fc1
Step 8/12 : RUN cd /welder/ && npm install
 ---> Using cache
 ---> 68a30a4aa5c6

Here the slowest operations are dnf install and npm install, which without the cache take around 5 minutes.

You can check out my .travis.yml for more info.

First time cache

It is important to note that your Docker images need to be available in the registry before the first docker pull from CI. I do this by manually building the images on my computer and uploading them before configuring CI integration. Afterwards the CI system takes care of updating the images for me.

Initially you may not notice a significant improvement, as seen in Job #262, Step 18/22. The initial image available on Docker Hub had all the build dependencies installed and the code had not changed when job #262 was executed, yet the cache was not fully reused.

The reason is that the COPY command copies the entire contents of the directory, including filesystem metadata! Things like uid/gid (file ownership), timestamps (not sure if these are taken into account) and/or extended attributes (e.g. SELinux labels) will cause the intermediate layers' checksums to differ even though the actual source code didn't change. This will resolve itself once your CI system starts automatically pushing the latest images to the registry.

Thanks for reading and happy testing!


TransactionManagementError during testing with Django 1.10

During the past 3 weeks I've been debugging a weird error which started happening after I migrated KiwiTestPad to Django 1.10.7. Here is the reason why this happened.

Symptoms

After migrating to Django 1.10 all tests appeared to be working locally on SQLite; however, they failed on MySQL with

TransactionManagementError: An error occurred in the current transaction. You can't execute queries until the end of the 'atomic' block.

The exact same test cases failed on PostgreSQL with:

InterfaceError: connection already closed

Django's TestCase executes every test inside a transaction, so my first thoughts were related to the auto-commit mode. However, upon closer inspection we can see that the line which triggers the failure is

self.assertTrue(users.exists())

which is essentially a SELECT query, aka User.objects.filter(username=username).exists()!

My tests were failing on a SELECT query!

Reading the numerous posts about TransactionManagementError I discovered it may be caused by a runaway cursor. The application did use raw SQL statements, which I converted to ORM queries (that took me some time). Then I also fixed a couple of places where it used transaction.atomic(). No luck!

Then, after numerous experiments and tons of logging inside Django's own code, I was able to figure out when the failure occurred and which events led to it. The test code looked like this:

response = self.client.get('/confirm/')

user = User.objects.get(username=self.new_user.username)

self.assertTrue(user.is_active)

The failure was happening after the view had been rendered, on the first SELECT I executed against the database!

The problem was that the connection to the database had been closed midway during the transaction!

In particular (after more debugging of course) the sequence of events was:

- execute django/test/client.py::Client::get();

- execute django/test/client.py::ClientHandler::__call__(), which takes care to disconnect/connect signals.request_started and signals.request_finished, which are responsible for tearing down the DB connection, so the problem is not here;

- execute django/core/handlers/base.py::BaseHandler::get_response();

- execute django/core/handlers/base.py::BaseHandler::_get_response(), which goes through the middleware (needless to say I inspected all of it as well since there have been some changes in Django 1.10);

- execute response = wrapped_callback() while still inside BaseHandler._get_response();

- execute django/http/response.py::HttpResponseBase::close(), which looks like:

      # These methods partially implement the file-like object interface.
      # See https://docs.python.org/3/library/io.html#io.IOBase

      # The WSGI server must call this method upon completion of the request.
      # See http://blog.dscpl.com.au/2012/10/obligations-for-calling-close-on.html
      def close(self):
          for closable in self._closable_objects:
              try:
                  closable.close()
              except Exception:
                  pass
          self.closed = True
          signals.request_finished.send(sender=self._handler_class)

- signals.request_finished is fired;

- django/db/__init__.py::close_old_connections() closes the connection!

IMPORTANT: On MySQL setting AUTOCOMMIT=False and CONN_MAX_AGE=None helps work around this problem, but it is not the solution for me because it didn't help on PostgreSQL.

Going back to HttpResponseBase::close() I started wondering who calls this method.

The answer: it was being called by the @content.setter method at django/http/response.py::HttpResponse::content(), which is even more puzzling because that setter runs when we assign to self.content inside HttpResponse::__init__(), i.e. while the response is still being constructed.

Root cause

The root cause of my problem was precisely this HttpResponse::__init__() method, or rather the way we arrive at it inside the application.

The last line of the offending view was

return HttpResponse(Prompt.render(
    request=request,
    info_type=Prompt.Info,
    info=msg,
    next=request.GET.get('next', reverse('core-views-index'))
))

and the Prompt class looks like this:

from django.shortcuts import render

class Prompt(object):
    @classmethod
    def render(cls, request, info_type=None, info=None, next=None):
        return render(request, 'prompt.html', {
            'type': info_type,
            'info': info,
            'next': next
        })

Looking back at the internals of HttpResponse we see that:

- if the content is a string we call self.make_bytes();

- if the content is an iterator we consume it (joining its chunks into the response body) and, if the object has a close() method, we call it.

HttpResponse is itself an iterator (it inherits from six.Iterator), so when we initialize an HttpResponse with another HttpResponse object as its content we execute content.close(), which fires request_finished and unfortunately closes the database connection as well.
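The mechanism can be reproduced without Django. The following is a toy model under stated assumptions: ToyResponse, FakeConnection and the request_finished list are hypothetical stand-ins that mimic only the behavior described above (the content setter consumes iterables and calls their close(), and close() notifies the request_finished receivers). They are not Django's classes.

```python
class FakeConnection:
    """Stand-in for a database connection."""
    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True

connection = FakeConnection()
request_finished = [connection.close]  # receivers, playing the role of close_old_connections

class ToyResponse:
    """Very small model of HttpResponse's content handling."""

    def __init__(self, content=b''):
        self.content = content  # runs the setter, just like HttpResponse.__init__

    def __iter__(self):
        return iter(self._container)

    @property
    def content(self):
        return b''.join(self._container)

    @content.setter
    def content(self, value):
        if hasattr(value, '__iter__') and not isinstance(value, (bytes, str)):
            data = b''.join(value)    # consume the iterable ...
            if hasattr(value, 'close'):
                value.close()         # ... and close it
        else:
            data = value if isinstance(value, bytes) else value.encode('utf-8')
        self._container = [data]

    def close(self):
        for receiver in request_finished:  # like sending request_finished
            receiver()

inner = ToyResponse('<html>prompt</html>')  # what Prompt.render() returns
closed_after_inner = connection.closed     # False: nothing closed yet
outer = ToyResponse(inner)                 # the nested HttpResponse(...)
closed_after_outer = connection.closed     # True: inner.close() fired the receiver
print(closed_after_inner, closed_after_outer, outer.content == inner.content)
```

Returning inner directly never calls its close() from inside the view, and the rendered bytes are identical either way.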

IMPORTANT: note that from the point of view of a person using the application, the HTML content is exactly the same regardless of whether we have nested HttpResponse objects or not.

Also, during normal execution the code doesn't run inside a transaction, so we never notice the problem in production.

The fix, of course, is very simple: just return Prompt.render()!

Thanks for reading and happy testing!


Producing coverage report for Haskell binaries

Recently I've started testing a Haskell application, and a question I find unanswered (or at least very poorly documented) is: how do you produce coverage reports for binaries?

Understanding HPC & cabal

hpc is the Haskell code coverage tool. It produces the following files:

- .mix - module index file, contains information about tick boxes - their type and location in the source code;

- .tix - tick index file aka coverage report;

- .pix - program index file, used only by hpc trans.

The invocation of hpc report needs to know where to find the .mix files in order to translate the coverage information back to source, and it needs to know the location (full path or relative to pwd) of the tix file we want to report.

cabal is the package management tool for Haskell. Among other things it can be used to build your code, execute the test suite and produce the coverage report for you.

cabal build will produce module information in dist/hpc/vanilla/mix and cabal test will store coverage information in dist/hpc/vanilla/tix!

A peculiarity of Haskell is that you can only test code which can be imported, i.e. library modules. You can't test (via Hspec or HUnit) code which lives inside a file that produces a binary (e.g. Main.hs). However, you can still execute these binaries (e.g. invoke them from the shell) and they will produce a coverage report in the current directory (e.g. main.tix).

Putting everything together

- Using cabal build and cabal test, build the project and execute your unit tests. This will create the necessary .mix files (including ones for binaries) and .tix files coming from unit testing;

- Invoke your binaries passing appropriate data and examine the results (e.g. compare the output to a known value). A simple shell or Python script could do the job;

- Copy the binary.tix file under dist/hpc/vanilla/tix/binary/binary.tix!

- Produce the coverage report with hpc:

      hpc markup --hpcdir=dist/hpc/vanilla/mix/lib --hpcdir=dist/hpc/vanilla/mix/binary dist/hpc/vanilla/tix/binary/binary.tix

- Convert the coverage report to JSON and send it to Coveralls.io:

      cabal install hpc-coveralls
      ~/.cabal/bin/hpc-coveralls --display-report tests binary
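The copy step and the hpc invocation can be scripted. This is a Python sketch assuming the dist/hpc/vanilla layout described above and that each binary writes <name>.tix into the current directory. move_tix() and hpc_markup_command() are hypothetical helpers, and the mix/lib directory name is copied verbatim from the command above.

```python
import shutil
import tempfile
from pathlib import Path

DIST = 'dist/hpc/vanilla'

def move_tix(binaries, src_dir='.', dist=DIST):
    """Move each <name>.tix to <dist>/tix/<name>/<name>.tix; return the new paths."""
    moved = []
    for name in binaries:
        target_dir = Path(dist) / 'tix' / name
        target_dir.mkdir(parents=True, exist_ok=True)
        target = target_dir / (name + '.tix')
        shutil.move(str(Path(src_dir) / (name + '.tix')), str(target))
        moved.append(target)
    return moved

def hpc_markup_command(name, dist=DIST):
    """hpc markup for one binary: library and binary .mix dirs plus its .tix."""
    return ['hpc', 'markup',
            '--hpcdir=%s/mix/lib' % dist,
            '--hpcdir=%s/mix/%s' % (dist, name),
            '%s/tix/%s/%s.tix' % (dist, name, name)]

# Offline demo in a temporary directory with a placeholder .tix file
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, 'inspect.tix').write_text('placeholder')
    moved = move_tix(['inspect'], src_dir=tmp, dist=str(Path(tmp, DIST)))
    moved_ok = moved[0].is_file() and not Path(tmp, 'inspect.tix').exists()

print(' '.join(hpc_markup_command('binary')))
```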

Example

Check out the haskell-rpm repository for an example. See job #45, where there is now coverage for the inspect.hs, unrpm.hs and rpm2json.hs files, which produce binary executables.

Also notice that in RPM/Parse.hs the function parseRPMC is now covered, while it was not covered in the previous job #42!

Here is the corresponding part of .travis.yml:

script:
  - ~/.cabal/bin/hlint .
  - cabal install --dependencies-only --enable-tests
  - cabal configure --enable-tests --enable-coverage --ghc-option=-DTEST
  - cabal build
  - cabal test --show-details=always
  # tests to produce coverage for binaries
  - wget https://s3.amazonaws.com/atodorov/rpms/macbook/el7/x86_64/efivar-0.14-1.el7.x86_64.rpm
  - ./tests/test_binaries.sh ./efivar-0.14-1.el7.x86_64.rpm
  # move .tix files into the appropriate directories
  - mkdir ./dist/hpc/vanilla/tix/inspect/ ./dist/hpc/vanilla/tix/unrpm/ ./dist/hpc/vanilla/tix/rpm2json/
  - mv inspect.tix ./dist/hpc/vanilla/tix/inspect/
  - mv rpm2json.tix ./dist/hpc/vanilla/tix/rpm2json/
  - mv unrpm.tix ./dist/hpc/vanilla/tix/unrpm/

after_success:
  - cabal install hpc-coveralls
  - ~/.cabal/bin/hpc-coveralls --display-report tests inspect rpm2json unrpm

Thanks for reading and happy testing!


What's the bug in this pseudo-code

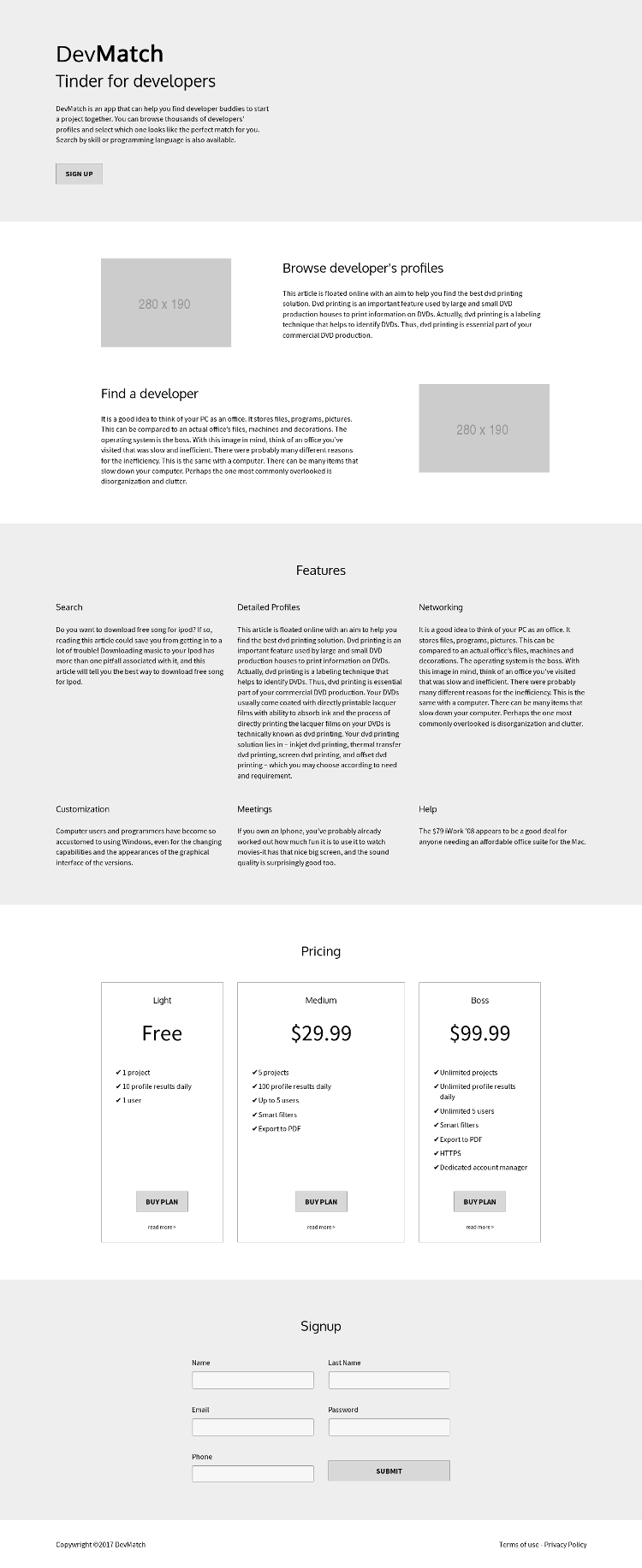

This is one of the stickers for the second edition of Rails Girls Vratsa which was held yesterday. Let's explore some of the bug proposals submitted by the Bulgarian QA group:

- sad() == true is ugly

- sad() is not very nice, better make it if(isSad())

- use sadStop(), and even better - stopSad()

- there is an extra space character in beAwesome( )

- the last curly bracket needs to be on a new line

Lyudmil Latinov

My friend Lu describes what I would call style issues. The style he refers to is mostly Java oriented, especially when naming things. In Ruby we would probably go with sad? instead of isSad. Style is important and there are many tools to help us with it, but it will not cause a functional problem! While I'm at it, let me say the curly brackets are not the problem either. They are not valid in Ruby, but this is pseudo-code, and they also fall into the style category.

The next interesting proposal comes from Tsveta Krasteva. She examines the possibility of sad() returning an object or nil instead of a boolean value. Her first question was whether the if statement would still work, and the answer is yes. In Ruby everything is an object and every object can be used as a condition: everything except nil and false counts as true. See Alan Skorkin's blog post on the subject.

Then Tsveta says the answer is to use sad().stop(), with the warning that it may return nil. In this context the sad() method returns an object indicating that the person is feeling sad. If the method returns nil then the person is feeling OK.

class Csad
  def stop()
    print("stop\n");
  end
end

def sad()
  print("sad\n");
  Csad.new();
end

def beAwesome()
  print("beAwesome\n");
end

# notice == true was removed
if(sad())
  print("Yes, I am sad\n");
  sad.stop();
  beAwesome( );
end

While this is coming closer to a functioning solution, something about it is bugging me. In the if statement the developer would have typed more characters than required (== true). This sounds unlikely to me but is possible with less experienced developers.

The other issue is that we are using an object (of class Csad) to represent an internal state in the system under test. There is one method to return the state (sad()) and another one to alter the state (Csad.stop()). The two methods don't operate on the same object! Not a very strong OOP design. On top of that we have to call the method twice, the first time in the if statement and the second time in the body of the if statement, which may have unwanted side effects. It is best to assign the return value to a variable instead.

IMO if we are to use this OOP approach the code should look something like:

class Person
  def sad?()
  end

  def stopBeingSad()
  end

  def beAwesome()
  end
end

p = Person.new
if p.sad?
  p.stopBeingSad
  p.beAwesome
end

Let me go back to assuming we don't use classes here.

The first obvious mistake is the space in "sad stop();", first spotted by Peter Sabev. His proposal, backed by others, is to use sad.stop(). However, they didn't take my hint asking what the return value of sad() is.

If sad() returns a boolean then we'll get undefined method 'stop' for true:TrueClass (NoMethodError)! Same thing if sad() returns nil, although we skip the if block in this case.

In Ruby we are allowed to skip parentheses when calling a method, like I've shown above. If we ignore this fact for a second, then sad?.stop() would mean: execute the method named stop() which is a member of the sad? variable, which is of type method! Again, methods don't have an attribute named stop!

The last two paragraphs are the semantic/functional mistake I see in this code. The only way for it to work is to use an OOP variant which is further away from what the existing clues give us.

Note: The variant sad? stop() is syntactically correct. It means: call the function sad? with the result of calling the method stop() as its parameter, which, depending on the outer scope of this program, may or may not be correct (e.g. stop is defined, sad? accepts optional parameters, sad? maintains global state).

Thanks for reading and happy testing!


Design for developers in 5 steps

Design is a method! Design can be taught! Developers can do good design! If this sounds outrageous, let me introduce Zaharenia Atzitzikaki, a developer by education rather than a graphic designer, who thinks otherwise. This blog post summarizes her workshop held at the DEVit conference last month.

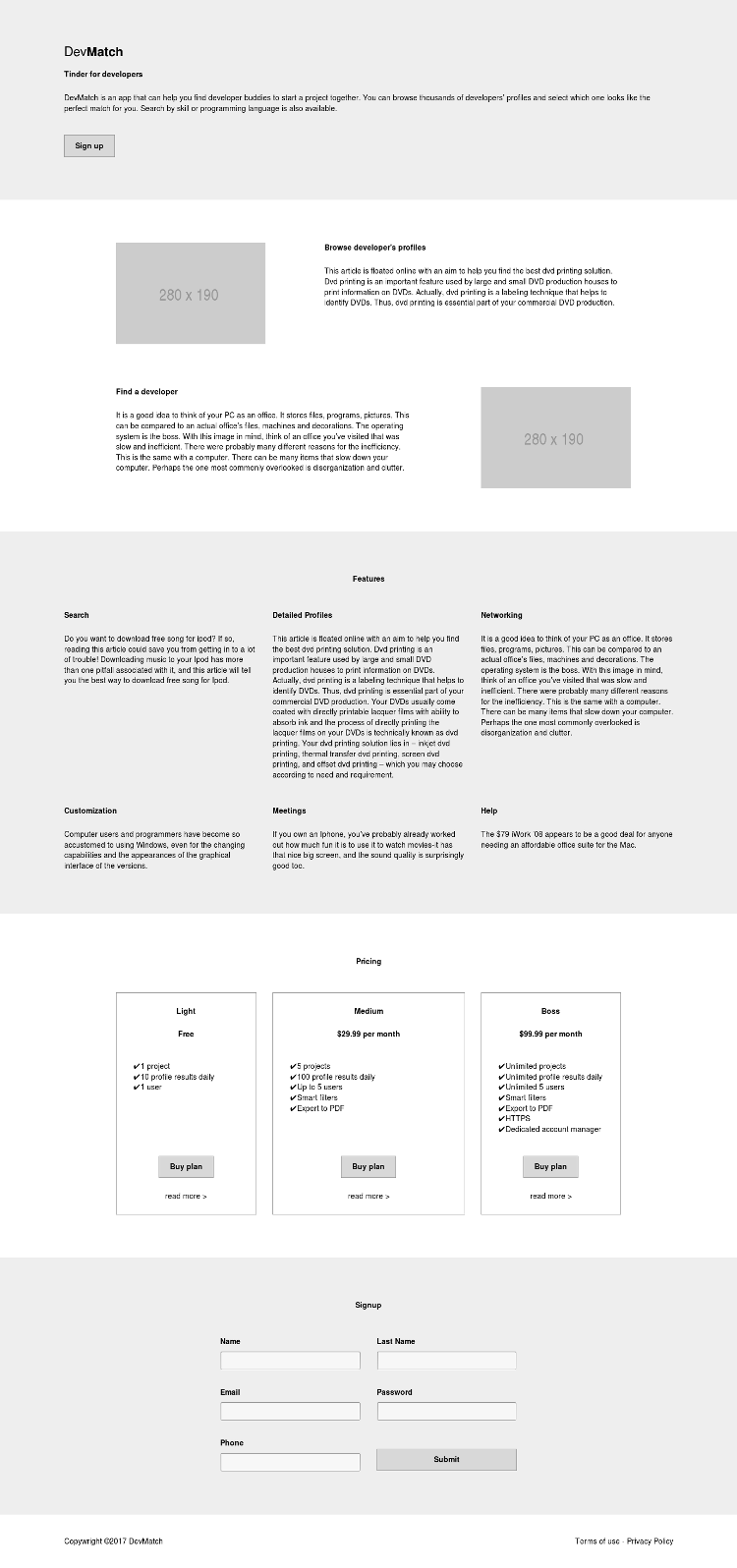

We are going to build a site called DevMatch, which is like Tinder for developers. The initial version doesn't look bad but we can do better:

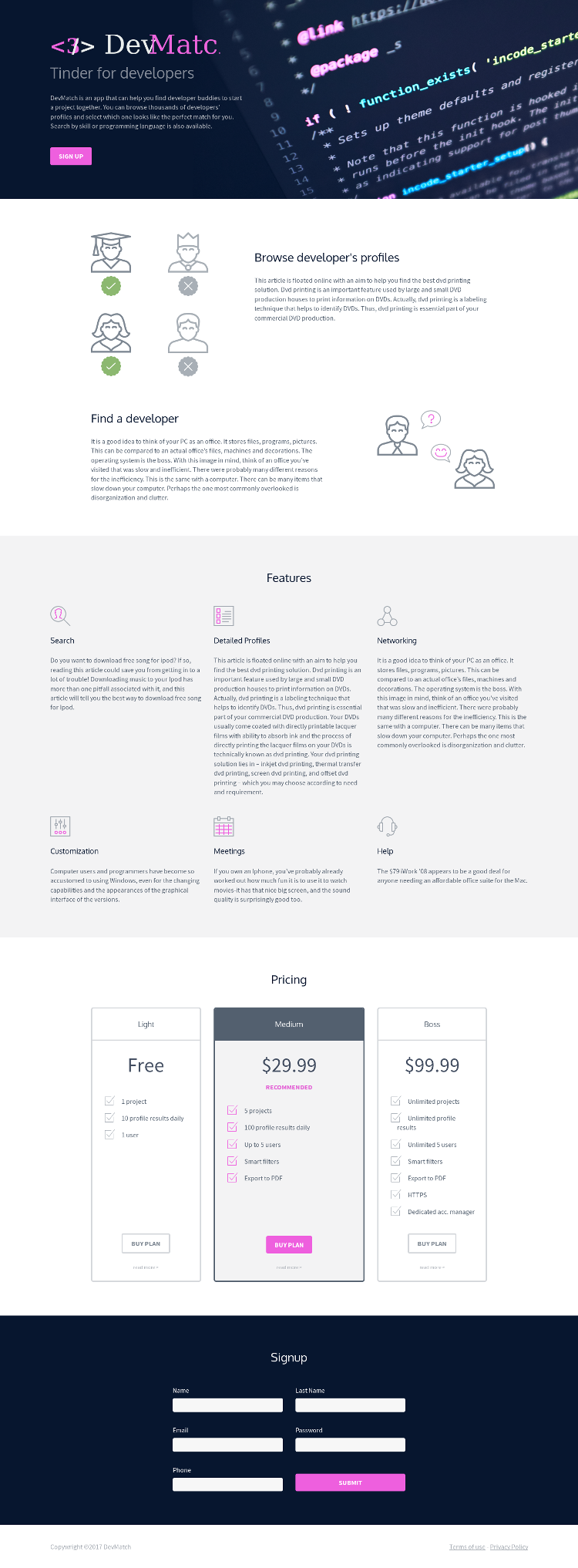

Step 1: Layout

Layout is about grids, and the most popular designs use grids with 12, 16 or 24 columns. The idea is to align everything to the grid, which allows the eyes to follow a straight line and makes the content easier to perceive. You don't want to break the story line. Don't fear white space, but don't leave it random.

Make everything align to the grid... but not too much (check out this TEDx talk about predictability and variability in music).

Avoid both centered and justified alignment because they don't provide a single line for the eyes to follow. Align text to the left and buttons at the bottom.

To make an element more prominent (like the recommended plan), make it double width!

Finally we remove the stock images because they are distracting!

Here's how everything looks now:

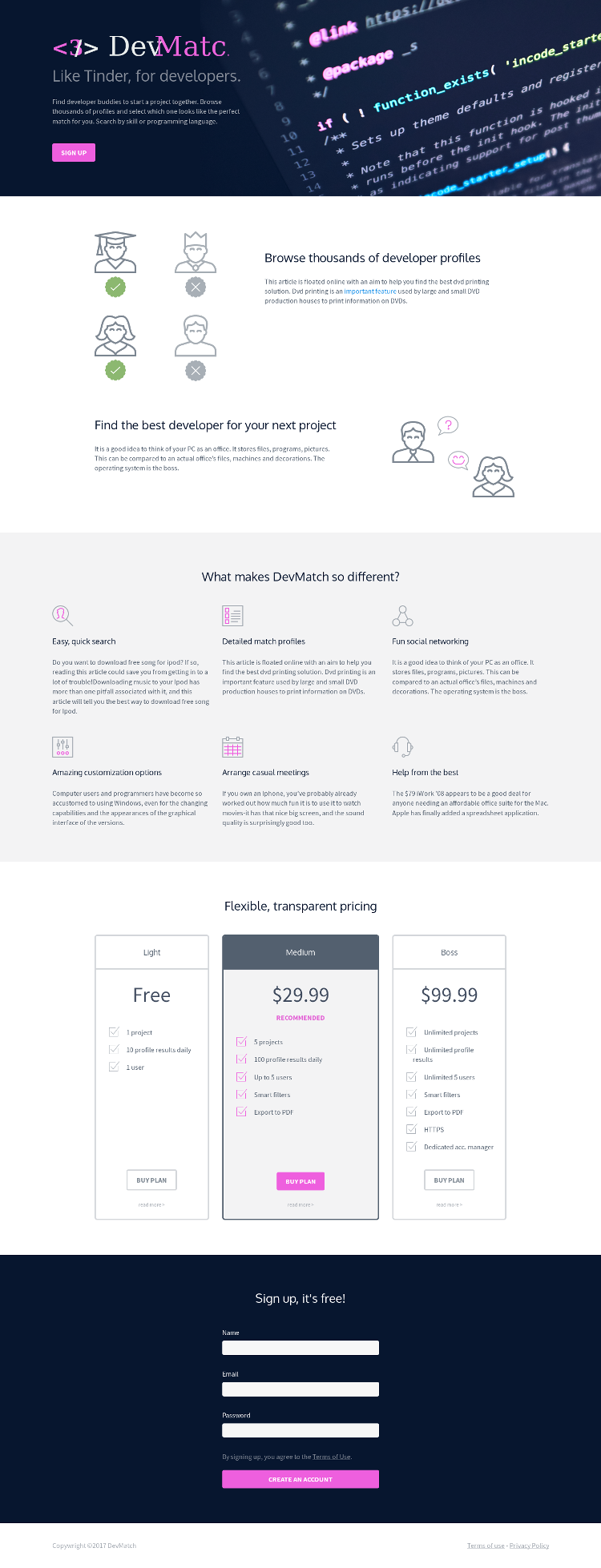

Step 2: Typography

The web is 95% typography. Serif fonts are good for reading long passages of text because they allow the eyes to follow. Sans-serif fonts look great on screens, especially for smaller sizes (< 12px). Monospaced fonts are only for code! Script fonts are fun but use them with caution.

The fonts we select need to improve readability, not hinder it. Minimum font size should be 16px or even 18px.

Use a typographic scale which tells you how big certain text should be, e.g. h1 vs h2 vs h3 vs paragraph!
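A typographic scale is commonly a geometric progression: each level is the previous one multiplied by a fixed ratio. This sketch assumes the 16px minimum above as the base and a ratio of 1.25 (often called a "major third"). Both numbers and the mapping to headings are illustrative choices, not Zaharenia's.

```python
def type_scale(base_px=16, ratio=1.25, levels=('p', 'h4', 'h3', 'h2', 'h1')):
    """Map each text level to base_px * ratio**n, rounded to 2 decimals."""
    return {name: round(base_px * ratio ** n, 2) for n, name in enumerate(levels)}

scale = type_scale()
for name, size in scale.items():
    print('%s { font-size: %spx; }' % (name, size))
```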

Find a font pair which works (e.g. Oxygen + Source Sans). Also compile a list of fallback fonts, e.g. Futura, Trebuchet MS, Arial, Sans-serif. This makes sure that your fonts work well together and that visitors on your site will use fonts which are as close as possible to what you intended.

Increase line height to improve readability of paragraphs. The minimum is 1.4em. Keep line length short, between 45 and 75 characters.

Layout and Typography are the two most important design steps, and you will achieve very good results even if you apply only these two.

Here's how everything looks now:

Step 3: Color

Find a color palette generator and use it. For new projects start with competitor analysis, a logo or a picture you like or something that conveys a known meaning to the customer. Zaharenia's tips include:

- Avoid pure black and pure white because they are not easy to read. Use a gray-scale shade or change the transparency channel to get a new color;

- Success is GREEN;

- Error is RED. Don't use red color for normal text;

- Don't rely on color alone because some people may be color blind, others may be using a gray scale (or a bad quality) screen, etc.;

- For links use underlines;

- For background use the brand color - this is the most visible color;

- Use an accent color. This is the most striking color (purple in this example) but use it sparingly, only for buttons or important items;

- We need a light background color as well;

- We need a dark text color, but not black;

- We need a link color.
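Changing the transparency channel, as the first tip suggests, produces a color that depends on the background. Each channel is mixed as alpha * foreground + (1 - alpha) * background. Here is a small sketch for computing the resulting solid color. The 87% opacity and the white background are example values.

```python
def blend(fg, alpha, bg=(255, 255, 255)):
    """Composite an RGB foreground with opacity alpha over an opaque background."""
    return tuple(round(alpha * f + (1 - alpha) * b) for f, b in zip(fg, bg))

def to_hex(rgb):
    """Format an (r, g, b) tuple as a CSS hex color."""
    return '#%02x%02x%02x' % rgb

text_color = blend((0, 0, 0), 0.87)  # black text at 87% opacity on white
print(to_hex(text_color))
```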

Here's how everything looks now:

Step 4: Visual elements

Here we talk about icons and images, which should be used only as a visual aid and never on their own (especially for navigation). The best thing you can do is find a good icon set (with lots of sizes) or, even better, an SVG set. Then combine several icons together if need be, instead of using stock photos.

It is best to use SVG for all icons because we can use CSS to modify the colors inside the SVG. For example, the feature icons below are all gray and some SVG paths have been styled with the accent color. Here's how it looks now:

Other tips include:

- Use logo and header images for the headers;

- To make an element pop, add a border, add a header, or add the accent color in the middle (e.g. the pricing section).

Step 5: Copywriting

This is about what text we provide on the screen. The rules of thumb are:

- People scan, they don't read;

- Aim for clarity;

- Avoid industry/technical slang;

- Keep lines short: 45 to 75 characters;

- Write clear error messages and clear calls to action. Repeat the actual verb in the call to action and be more verbose, e.g. Yes, delete this or No, I changed my mind. Instead of Submit use Create account;

- Truncate your text: cut in half and then again;

- Design your text to be roughly even, which helps with alignment, but don't overdo it;

- Keep forms very short

And this is the final version of our website (note: the header logo mishap is probably my fault, not intentional):

These are the 5 basic design steps. You don't need to be a trained designer to be able to apply them. Now that you know what the steps are simply search for fonts, scales, color palettes and icon sets and apply them. This is what Zaharenia does (in her own words). You can find all HTML, CSS and images for this workshop at the design4devs-devit repository.

Thanks for reading and happy designing!


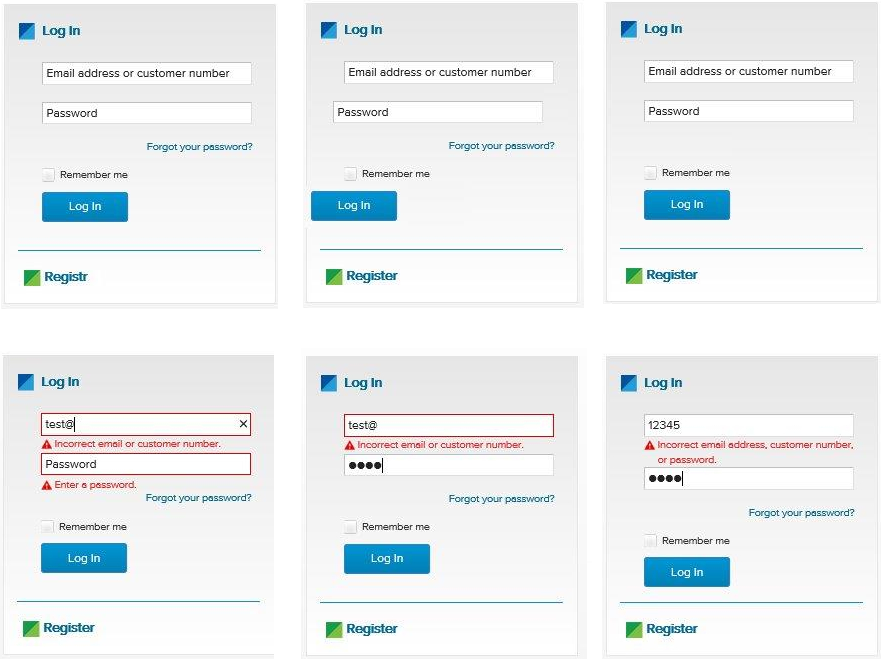

VMware's favorite login form

How do you test a login form? is one of my favorite questions when screening candidates for QA positions and also a good brain exercise even for experienced testers. I've written about it last year. In this blog post I'd like to share a different perspective on this same question, this time courtesy of my friend Rayna Stankova.

What bugs do you see above

The series of images above is from a Women Who Code Sofia workshop where the participants were given printed copies and asked to find as many defects as possible. Here they are (counting clockwise from the top-left corner):

- Typo in "Registr" link at the bottom;

- UI components are not aligned;

- Missing "Forgot your password?" link;

- Backend credentials validation with empty password; plain text password field; too specific information about incorrect credentials;

- Too specific information about incorrect credentials, with a visual hint as to what exactly is not correct. In this case it looks like the password is OK (maybe it was one of the 4 most commonly used passwords) but the username is wrong, which we can easily figure out;

- In this case the error handling appears to be correct, not disclosing what exactly is wrong. The placement is somewhat off: it looks like an error message for one of the fields instead of for the entire form. I'd move it to the top and even slightly update the wording to something like Login failed, bad credentials, try again.

How do you test this

Here is a list of possible test scenarios, proposed by Rayna. Notes are mine.

UI Layer

- Test 1: Verify Email (User ID) field has focus on page load

- Test 2: Verify Empty Email (User ID) field and Password field

- Test 3: Verify Empty Email (User ID) field

- Test 4: Verify Empty Password field

- Test 5: Verify Correct sign in

- Test 6: Verify Incorrect sign in

- Test 7: Verify Password Reset - working link

- Test 8: Verify Password Reset - invalid emails

- Test 9: Verify Password Reset - valid email

- Test 10: Verify Password Reset - using new password

- Test 11: Verify Password Reset - using old password

- Test 12: Verify whether password text is hidden

- Test 13: Verify text field limits - whether the browser accepts more than the allowed database limits

- Test 14: Verify that validation message is displayed in case user exceeds the character limit of the username and password fields

- Test 15: Verify if there is a checkbox with label "remember password" in the login page

- Test 16: Verify whether the username is allowed to contain non-printable characters. If not, this check belongs in the 'create user' section.

- Test 17: Verify if the user must be logged in to access any other area of the site.

Tests 10 and 11 are particularly relevant for the Fedora Account System, where you need a really strong password and (at least in the past) had to change it often and couldn't reuse any of your old passwords. As a user I really hate this because I can't remember my own password, but it makes for a good test scenario.

Tests 13 and 14 are also something I rarely see, and they could make a nice case for property-based testing.
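Tests 13 and 14 can be phrased as a property: for any input, the field must be accepted exactly when its length is within the limit. Libraries such as Hypothesis generate and shrink such inputs for you. To stay dependency-free, this sketch uses the standard random module instead. MAX_USERNAME and accepts_username() are hypothetical stand-ins for the real limit and the validation under test.

```python
import random
import string

MAX_USERNAME = 64  # hypothetical column limit

def accepts_username(name):
    """Hypothetical validation under test."""
    return 0 < len(name) <= MAX_USERNAME

def length_counterexamples(validator, runs=1000, seed=0):
    """Return inputs where validator disagrees with the length property."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    boundaries = [0, 1, MAX_USERNAME - 1, MAX_USERNAME, MAX_USERNAME + 1]
    failures = []
    for _ in range(runs):
        # Mix boundary lengths with random ones up to twice the limit
        length = rng.choice(boundaries + [rng.randint(0, 2 * MAX_USERNAME)])
        name = ''.join(rng.choice(string.ascii_letters) for _ in range(length))
        if validator(name) != (0 < length <= MAX_USERNAME):
            failures.append(name)
    return failures

print(len(length_counterexamples(accepts_username)))  # 0
```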

Test 16 would have been the bread and butter of testing Emoj.li (the first emoji-only social network).
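For Tests 16 and 32 it helps to know which characters count as non-printable. Python's str.isprintable() treats characters in the Unicode "Other" categories (control, format, etc.) and separators other than the ASCII space as non-printable. The sketch below reports offending characters with their Unicode category. What the application should do with them is a policy decision, so non_printable() is only a hypothetical helper.

```python
import unicodedata

def non_printable(text):
    """List (character, Unicode category) pairs that str.isprintable() rejects."""
    return [(ch, unicodedata.category(ch)) for ch in text if not ch.isprintable()]

samples = ['alice', 'bob\x00', 'zero\u200bwidth', 'tab\there', '\U0001F600\U0001F60E']
for s in samples:
    print(repr(s), non_printable(s))
```

Emoji are printable (category So), so an emoji-only username like the ones on Emoj.li passes this check.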

Keyboard Specific

- Test 18: Verify Navigate to all fields

- Test 19: Verify Enter submits on password focus

- Test 20: Verify Space submits on login focus

- Test 21: Verify Enter submits

These are all very relevant with today's heavily styled websites. The one I hate the most is when the space key doesn't select/unselect checkboxes which are actually images!

Security:

- Test 22: Verify SQL Injections testing - password field

- Test 23: Verify SQL Injections testing - username field

- Test 24: Verify SQL Injections testing - reset password

- Test 25: Verify Password/username not visible from URL login

- Test 26: Verify that, from a security point of view, in case of incorrect credentials the user is shown a message like "incorrect username or password" instead of a message pointing at the exact field that is incorrect. A message like "incorrect username" will aid an attacker in brute-forcing the fields one by one

- Test 27: Verify the timeout of the login session

- Test 28: Verify if the password can be copy-pasted or not

- Test 29: Verify that once logged in, clicking back button doesn't logout user

Tests 22, 23 and 24 are a bit generic and I guess they can be collapsed into one. Better yet, make them more specific.
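One way to make Tests 22 to 24 more specific is to name the payload and the expected outcome. This self-contained sketch uses an in-memory SQLite database. The users table, the plain-text password column (for brevity only, see Test 30) and both login functions are hypothetical.

```python
import sqlite3

def make_db():
    db = sqlite3.connect(':memory:')
    db.execute('CREATE TABLE users (username TEXT, password TEXT)')
    db.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return db

def login_vulnerable(db, username, password):
    # BAD: user input is pasted into the SQL text
    query = ("SELECT COUNT(*) FROM users WHERE username = '%s' AND password = '%s'"
             % (username, password))
    return db.execute(query).fetchone()[0] > 0

def login_safe(db, username, password):
    # GOOD: placeholders pass the values separately from the SQL text
    query = 'SELECT COUNT(*) FROM users WHERE username = ? AND password = ?'
    return db.execute(query, (username, password)).fetchone()[0] > 0

db = make_db()
password_payload = "' OR '1'='1"  # Test 22: attack through the password field
username_payload = "alice' --"    # Test 23: comment out the password check
print(login_vulnerable(db, 'alice', password_payload))  # True: injection succeeds
print(login_safe(db, 'alice', password_payload))        # False
print(login_vulnerable(db, username_payload, 'x'))      # True: injection succeeds
```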

Test 28 may sound like nonsense but it is not. I remember that back in the day it was possible to copy and paste the password out of the Windows dial-up credentials screen. With heavily styled form fields it is possible to have this problem again, so it is a valid check IMO.

Others:

- Test 30: Verify that the password is in encrypted form when entered

- Test 31: Verify the user must be logged in to call any web services.

- Test 32: Verify that, if the username is allowed to contain non-printable characters, the code handling login can deal with them and no error is thrown.

I think Test 30 means to validate that the backend doesn't store passwords in plain text but rather stores their hashes.
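A backend check for Test 30 is that what gets stored is not the password itself. This sketch uses PBKDF2 from Python's hashlib. The iteration count, salt size and storage layout (salt followed by digest) are assumptions, and dedicated schemes such as bcrypt or Argon2 are common alternatives.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumption: pick a cost that suits your hardware
SALT_BYTES = 16

def hash_password(password):
    """Return salt + PBKDF2-SHA256 digest; this is what the backend stores."""
    salt = os.urandom(SALT_BYTES)
    digest = hashlib.pbkdf2_hmac('sha256', password.encode('utf-8'), salt, ITERATIONS)
    return salt + digest

def verify_password(password, stored):
    """Recompute the digest with the stored salt and compare in constant time."""
    salt, digest = stored[:SALT_BYTES], stored[SALT_BYTES:]
    candidate = hashlib.pbkdf2_hmac('sha256', password.encode('utf-8'), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)

stored = hash_password('correct horse')
print(verify_password('correct horse', stored))  # True
print(verify_password('wrong guess', stored))    # False
print(b'correct horse' in stored)                # False: the password itself is not stored
```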

Test 32 is a duplicate of 16. I'd also ask: why only the username? The password field is also a good candidate for this.

If you check how I would test a login form you will find some similarities but there are also scenarios which are different. I'm interested to see what other scenarios we've both missed, especially ones which have manifested themselves as bugs in actual applications.

Thanks for reading and happy testing!


Monitoring behavior via automated tests

In my last several presentations I briefly talked about using your tests as a monitoring tool. I've not been eating my own dog food and stuff failed in production!

What is monitoring via testing

This is a technique I came up with 6 months ago while working with Tradeo's team. I'm not the first one to figure this out, so if you know the proper name for it please let me know in the comments. The idea is simple: why not take a subset of your automated tests and run them regularly against production? Let's say every hour?

In my particular case we started with integration tests which interact with the product (a web app) the way a real person would, e.g. log in, update their settings, follow another user, chat with another user, try to deposit money, etc. The results from these tests are logged into a database and then charted (using Grafana). This way we can bring lots of data points together and easily analyze them.
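The logging side of this technique can be small. This sketch assumes each check is a plain function that raises on failure. The check names, the results table and the in-memory SQLite database are hypothetical stand-ins for the real integration tests and the database Grafana reads from. Scheduling (e.g. every hour via cron) is left out.

```python
import sqlite3
import time

def run_checks(checks, db):
    """Run each check once and record timestamp, name, outcome and duration."""
    db.execute('CREATE TABLE IF NOT EXISTS results '
               '(ts REAL, name TEXT, passed INTEGER, seconds REAL)')
    for name, check in checks.items():
        started = time.time()
        t0 = time.monotonic()
        try:
            check()
            passed = True
        except Exception:  # any error counts as a failed check
            passed = False
        db.execute('INSERT INTO results VALUES (?, ?, ?, ?)',
                   (started, name, int(passed), time.monotonic() - t0))
    db.commit()

def check_login():
    pass  # hypothetical: would log in as a dedicated test user

def check_deposit():
    raise AssertionError('deposit page returned an error')  # simulated failure

db = sqlite3.connect(':memory:')
run_checks({'login': check_login, 'deposit': check_deposit}, db)
failed = [row[0] for row in db.execute('SELECT name FROM results WHERE passed = 0')]
print(failed)  # ['deposit']
```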

This technique has the added bonus that we can cover the most critical test paths in a couple of minutes and do so regularly without human intervention. By reusing the existing monitoring infrastructure of the devops team we can configure alerts if need be. This makes it a sort of early detection/warning system, plus it makes it possible to spot correlations or patterns between data points.

As simple as it sounds, I've heard of only a handful of companies doing this sort of continuous testing against production. Maybe you can implement something similar in your organization and we can talk more about the results?

Why does it matter

Anyway, everyone knows how to write Selenium tests so I'm not going to bother you with the details. Why does this kind of testing matter?

Do you remember a recent announcement by GitHub about Travis CI leaking some authentication tokens into their public log files? I did receive an email about this but didn't pay attention to it because I don't use GitHub tokens for anything I do in Travis. However, as a safety measure GitHub went ahead and wiped out my security tokens.

As a result, my automated upstream testing infrastructure stopped working! In particular, my requests to the GitHub API were failing, and I didn't even know about it!

This means that since May 24th there have been at least 4 new versions of libraries and frameworks on which some of my software depends and I failed to test them! One of them was Django 1.11.2.

I have supplied a new GitHub token for my infra but if I had monitoring I would have known about this problem well in advance. Next I'm off to write some monitoring tests and also implement better failure detection in Strazar itself!
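A monitoring test for exactly this failure could be as simple as asking the GitHub API whether the token still works. In this sketch, GET https://api.github.com/user answers 401 for bad credentials. github_token_ok() is a hypothetical helper, and its opener parameter exists only so it can be exercised offline with fake responses, as in the demo.

```python
import urllib.error
import urllib.request

def github_token_ok(token, opener=urllib.request.urlopen):
    """Return True if the GitHub API accepts the token, False on HTTP 401."""
    req = urllib.request.Request(
        'https://api.github.com/user',
        headers={'Authorization': 'token ' + token},
    )
    try:
        with opener(req, timeout=10) as resp:
            return resp.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 401:
            return False  # revoked or invalid token: this is what should alert
        raise             # other errors are a different problem

# Offline demo with fake openers (hypothetical stand-ins for the network)
class FakeResponse:
    status = 200
    def __enter__(self):
        return self
    def __exit__(self, *exc):
        return False

def accept(req, timeout):
    return FakeResponse()

def reject(req, timeout):
    raise urllib.error.HTTPError(req.full_url, 401, 'Unauthorized', None, None)

good = github_token_ok('good-token', opener=accept)
revoked = github_token_ok('revoked-token', opener=reject)
print(good, revoked)  # True False
```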

Thanks for reading and happy testing (in production)!


Can a nested function assign to variables from the parent function

While working on a new feature for Pelican I've put myself in a situation where I have two functions, one nested inside the other, and I want the nested function to assign to a variable from the parent function. It turns out this isn't so easy in Python!

def hello(who):
    greeting = 'Hello'
    i = 0
    def do_print():
        if i >= 5:
            return
        print i, greeting, who
        i += 1
        do_print()

    do_print()

if __name__ == "__main__":
    hello('World')

The example above is a recursive Hello World. Notice the i += 1 line! Because i is assigned inside do_print(), Python treats it as local to do_print() for the whole function body, so the earlier read in if i >= 5 fails. The result is the following failure on Python 2.7:

Traceback (most recent call last):
  File "./test.py", line 16, in <module>
    hello('World')
  File "./test.py", line 13, in hello
    do_print()
  File "./test.py", line 6, in do_print
    if i >= 5:
UnboundLocalError: local variable 'i' referenced before assignment

We can work around this by using a global variable like so:

i = 0

def hello(who):
    greeting = 'Hello'

    def do_print():
        global i
        if i >= 5:
            return

        print i, greeting, who
        i += 1
        do_print()

    do_print()

However, I prefer not to expose internal state outside the hello() function. If only there were a keyword similar to global. In Python 3 there is: nonlocal!

def hello(who):
    greeting = 'Hello'
    i = 0

    def do_print():
        nonlocal i
        if i >= 5:
            return

        print(i, greeting, who)
        i += 1
        do_print()

    do_print()

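nonlocal is nice but it doesn't exist in Python 2! The workaround is to not assign to the variable itself, but to use a mutable container instead. That is, instead of a scalar, use a list or a dictionary. Since i[0] += 1 modifies the list in place rather than rebinding the name i, Python doesn't treat i as local to do_print():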

def hello(who):
    greeting = 'Hello'
    i = [0]

    def do_print():
        if i[0] >= 5:
            return

        print i[0], greeting, who
        i[0] += 1
        do_print()

    do_print()
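To see the same behavior on Python 3 without any printing, here is a minimal, self-contained sketch. The counter functions are hypothetical and not taken from Pelican. The first one raises UnboundLocalError for the same reason as the first example above. The second one uses nonlocal and works.

```python
def make_broken_counter():
    count = 0

    def increment():
        # Assigning to 'count' makes it local to increment(), so reading it
        # on the right-hand side of += raises UnboundLocalError.
        count += 1
        return count

    return increment


def make_counter():
    count = 0

    def increment():
        # nonlocal makes 'count' refer to the variable in make_counter().
        nonlocal count
        count += 1
        return count

    return increment


counter = make_counter()
print(counter(), counter(), counter())  # prints: 1 2 3

try:
    make_broken_counter()()
except UnboundLocalError as exc:
    print("broken counter:", exc)
```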

Thanks for reading and happy coding!


Semantically Invalid Input

. . 9 | . 2 8 | 7 . .
8 . 6 | . . 4 | . . 5
. . 3 | . . . | . . 4
------+-------+------
6 . . | . . . | . . .
? 2 . | 7 1 3 | 4 5 .
. . . | . . . | . . 2
------+-------+------
3 . . | . . . | 5 . .
9 . . | 4 . . | 8 . 7
. . 1 | 2 5 . | 3 . .

In a comment to a previous post Flavio Poletti proposed a very interesting test case for a function which solves the Sudoku game - semantically invalid input, i.e. an input that passes intermediate validation checks (no duplicates in any row/col/9-square) but that cannot possibly have a solution.

Until then I thought that Sudoku was a completely deterministic game and that any input which passes all validation checks always has a solution. Apparently I was wrong! Reading more on the topic I discovered these Sudoku test cases from Sudopedia. Their Invalid Test Cases section lists several examples of semantically invalid input in Sudoku:

- Unsolvable Square;

- Unsolvable Box;

- Unsolvable Column;

- Unsolvable Row;

- Not Unique with examples having 2, 3, 4, 10 and 125 solutions

The example above cannot be solved because the left-most cell of the middle row (r5c1) has no possible candidates.

Following the rule that each row contains the numbers from 1 to 9 without repetition, row 5 is left with 6, 8 and 9. For r5c1, 6 is a no-go because it is already present in the same 3x3 square. Then 9 is a no-go because it is present in column 1. That leaves us with 8, which is also present in column 1! Pretty awesome, isn't it?
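This reasoning can also be checked mechanically. The following Python sketch encodes the puzzle as nine strings, with '.' for empty cells, and the '?' cell is stored as '.' as well. The function names are my own and do not come from any Sudoku library. The sketch confirms that the grid passes the "no duplicates" validation and that r5c1 (row 4, column 0 when counting from zero) has no candidates left.

```python
# The puzzle from above: '.' marks an empty cell, r5c1 (the '?') is stored as '.'
GRID = [
    "..9.287..",
    "8.6..4..5",
    "..3.....4",
    "6........",
    ".2.71345.",
    "........2",
    "3.....5..",
    "9..4..8.7",
    "..125.3..",
]

DIGITS = set("123456789")


def units(grid):
    """All rows, columns and 3x3 boxes as lists of characters."""
    rows = [list(row) for row in grid]
    cols = [[grid[r][c] for r in range(9)] for c in range(9)]
    boxes = [[grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)]
             for br in (0, 3, 6) for bc in (0, 3, 6)]
    return rows + cols + boxes


def has_no_duplicates(grid):
    """The intermediate validation: no digit repeats in any row, column or box."""
    for unit in units(grid):
        digits = [d for d in unit if d != "."]
        if len(digits) != len(set(digits)):
            return False
    return True


def candidates(grid, row, col):
    """Digits that could legally go into the cell at 0-based (row, col)."""
    used = set(grid[row])                     # digits in the same row
    used |= {grid[r][col] for r in range(9)}  # digits in the same column
    br, bc = 3 * (row // 3), 3 * (col // 3)   # top-left corner of the box
    used |= {grid[r][c] for r in range(br, br + 3) for c in range(bc, bc + 3)}
    return DIGITS - used


print(has_no_duplicates(GRID))         # True: passes the basic validation
print(sorted(candidates(GRID, 4, 0)))  # []: r5c1 has no candidates
```

A solver could run a check like this on every empty cell to reject such input early instead of searching for a solution that doesn't exist.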

Also check the Valid Test Cases section, which includes other interesting examples, definitely not ones which I had considered previously when testing Sudoku.

On a more practical note, I have been trying to remember a case from my QA practice where we had input data that matched all conditions but was semantically invalid. I can't remember such a case. If you do have examples of semantically invalid data in real software please let me know in the comments below!

Thanks for reading and happy testing!


Top 7 Lessons From 134 Books

This post is a quick summary of the Top 7 Lessons From 134 Books video by the OnePercentBetter YouTube channel. I am posting it as a reference for myself and because I'm interested to know what works for my readers.

Lesson 1: Boost your happy chemicals

The essence of this is to get your 8 hours of sleep, exercise regularly and eat healthy.

A couple of years ago I was a sugar addict and stopped cold turkey. Then I tried fasting for a year, strictly following the religious calendar every day (it wasn't that hard). Then I started doing some moderate exercise.

As boring as it may sound it does actually work. I still have my urges but I am feeling much more energetic right now. I am able to maintain concentration for longer periods and I am actually more productive.

Lesson 2: Forget self-help, be kind

Just be kind!

Lesson 3: Value your time

This is one of the lessons which very much resonate with me. I hate people who don't value their time, mostly because when I have to interact with such people they are also wasting my own time.

Not caring what others think about you also falls into this category.

Lesson 4: The 80/20 principle

80% of the returns come from 20% of the causes. Again one of my favorites, which I learned from The 4-Hour Workweek by Tim Ferriss.

This principle can be applied to every aspect of our lives to maximize the returns. I'm still not very good at applying it (I think) but I'm trying to figure it out.

Lesson 5: Learn how to win friends and influence people

This is from another favorite book of mine: How to Win Friends and Influence People by Dale Carnegie. Just read the book!

Lesson 6: Create, don't consume

It is only when we start creating that opportunities start coming our way! I can confirm this from experience. It is because of this blog, my open source work on GitHub, my teaching work and my speaking engagements that people contact me every day with opportunities and work related proposals.

Sure, you need the skills to back those up, but the strange thing about creating is that it actually improves these very same skills (plus teaches you a few others) and that helps you deliver on the new opportunities that just came up. It's like a vicious circle, but a good one!

Lesson 7: Mind over matter

No drama, please. There are events in our lives which we can't control. Why then bother worrying about them and spending energy? The only thing we can do is choose how to react when these events happen. I'm not saying don't care about anything but rather care more selectively and spend more energy on the things that matter.

Bonus: 7 more lessons

OnePercentBetter made a new video called 7 Unconventional Lessons From 179 Books (NOT Taught At SCHOOL) which adds the following lessons:

- Future blindness - people suck at predicting the future. If you want to know what it is really like to be in somebody's position, just ask them.

- The 1% rule - small improvements applied continuously over a period of time have drastic effects.

- University is a scam - this one is controversial but the idea is that information is everywhere and accessible for free and opportunities are ripe. You don't (always) need to go to university to become successful.

- Don't give a fuck - what people think about you

- Mentorship is the fast-track to success - find a mentor to speed up your learning, your success rate, etc. and learn from other people's mistakes instead of committing them on your own. I would also add: learn how to become a mentor yourself.

- Direct your efforts - set a goal and work towards it every single day. This gives meaning to everything you do.

- Pseudoscience can be beneficial - sometimes we don't have strong scientific proof that something is beneficial but experience tells us it probably is. Don't rush to decisions, analyze the risks and potential benefits before jumping in, but do keep an eye on new methods and techniques. If they seem to work, why not reap the benefits before the masses?

Thanks for reading and don't forget to comment and give me your feedback!

Social media image source: https://elearningindustry.com/top-10-psychology-books-elearning-professional-read


Learn Python & Selenium Automation in 8 weeks

A couple of months ago I conducted a practical, instructor-led training in Python and Selenium automation for manual testers. You can find the materials on GitHub.

The training consists of several basic modules and practical homework assignments. The modules cover:

- The basic structure of a Python program and functions

- Commonly used data types

- If statements and (for) loops

- Classes and objects

- The Python unit testing framework and its assertions

- High-level introduction to Selenium with Python

- High-level introduction to the Page Objects design pattern

- Writing automated tests for real world scenarios without any help from the instructor.

Every module is intended to be taken in the course of 1 week and begins with links to preparatory materials and lots of reading. Then I help the students understand the basics and explain with more examples, often writing code as we go along. At the end there is the homework assignment for which I expect a solution presented by the end of the week so I can comment and code-review it.

All assignments which require the student to implement functionality, not tests, are paired with a test suite, which the student should use to validate their solution.

What worked well

Despite everything I've written below I had 2 students (from a group of 8) who showed very good progress. One of them was the absolute star, taking an active part in every class and doing almost all homework assignments on time, pretty much without errors. I think she'd had some previous training or experience though. She was in the USA and the training was done remotely via Google Hangouts.

The other student was in Sofia, where the training was done in person. He is not on the same level as the US student but is the best from the Bulgarian team. IMO he lacks a little bit of motivation. He "cheated" a bit on some tasks by providing non-standard, easier solutions, and completed most of his assignments. After the first Selenium session he started creating small scripts to extract results from football sites or as helpers for his daily job.

The interesting fact for me was that he created his programs as unittest.TestCase classes. I guess because this was the way he knew how to run them!?!

There were a few other students who had some prior experience with programming but weren't very active in class, so I can't tell how their careers will progress. If they put some more effort into it I'm sure they can develop decent programming skills.

What didn't work well

Starting from the beginning, most students failed to read the preparatory materials. Some of the students did read a little bit, others didn't read at all. When they came prepared I had the feeling the sessions progressed more smoothly. I also had students joining late in the process who, for the most part, didn't participate in the training at all. I'd like to avoid that in the future if possible.

Sometimes students complained about the lack of example code, although Dive into Python includes tons of examples. I resorted to sending them the example.py files which I produced during class.

The practical part of the training was mostly me programming on a big TV screen in front of everyone else. Several times one of the students took my place. There wasn't much active participation on their part and unfortunately they didn't want to bring personal laptops to the training (or maybe weren't allowed to)! We did have a company provided laptop though.

When practicing functions and arithmetic operations the students struggled with basic maths like breaking down a number into its digits or vice versa, working with Fibonacci sequences and the like. In some cases they cheated by converting to/from strings and then iterating over them. Some also hard-coded the first few numbers of the Fibonacci sequence and returned them directly. Maybe an in-place explanation of the underlying maths would have been helpful, but honestly I was surprised by this. Somebody please explain or give me some advice here!
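For reference, here is the kind of purely arithmetic solution these exercises were after. This is a minimal Python sketch, and digits_of and from_digits are illustrative names, not the ones from the actual assignments. Integer division by 10 drops the last digit and the remainder is that digit. Multiplying by 10 and adding the next digit rebuilds the number, so no string conversion is needed.

```python
def digits_of(number):
    """Split a non-negative integer into its decimal digits, most significant first."""
    if number == 0:
        return [0]
    digits = []
    while number > 0:
        # divmod(1234, 10) == (123, 4): the quotient drops the last digit,
        # the remainder is that digit
        number, last = divmod(number, 10)
        digits.append(last)
    digits.reverse()
    return digits


def from_digits(digits):
    """Rebuild an integer by shifting one decimal place and adding the next digit."""
    number = 0
    for digit in digits:
        number = number * 10 + digit
    return number


print(digits_of(1234))            # [1, 2, 3, 4]
print(from_digits([1, 2, 3, 4]))  # 1234
```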

I am completely missing examples of the datetime and timedelta classes, which turned out to be very handy in the practical Selenium tasks, and we had to go over them on the fly.
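As a minimal sketch of what such examples could look like, consider the following. The date format of the web form and the check-in/check-out scenario are my assumptions, not something from the course materials. A timedelta moves a date forward, strftime() formats it for typing into a form field, and strptime() parses text read from the page back into a date for comparisons.

```python
from datetime import date, datetime, timedelta

# Hypothetical format used by a web form under test, e.g. "03/07/2017"
FORM_DATE_FORMAT = "%d/%m/%Y"


def days_from(start, days):
    """Date arithmetic: adding a timedelta to a date gives another date."""
    return start + timedelta(days=days)


def to_form_value(day):
    """Format a date the way the (hypothetical) form expects it."""
    return day.strftime(FORM_DATE_FORMAT)


def from_page_text(text):
    """Parse a date shown on the page back into a date object."""
    return datetime.strptime(text, FORM_DATE_FORMAT).date()


check_in = date(2017, 6, 28)
check_out = days_from(check_in, 5)
print(to_form_value(check_out))                        # 03/07/2017
print((from_page_text("03/07/2017") - check_in).days)  # 5
```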

The OOP assignments went mostly undone, not to mention one of them had bonus tasks which are easily solved using recursion. I think we could skip some of the OOP practice (not sure how safe that is) because I really need classes only for constructing the tests and we don't do anything fancy there.

The Page Object design pattern is also OOP based and I think that went somewhat well, given that we are only passing values around and performing some actions. I didn't put any constraints on, nor provide guidance about, what the classes should look like and which methods go where. Maybe I should have made it easier.

Anyway, given that Page Objects is being replaced by the Screenplay pattern, I think we can safely stick to all-in-one, function based Selenium tests, maybe with helper functions for repeated tasks (like login). Indeed this is what I was using last year with RSpec & Capybara!

What students didn't understand

Right until the end I had people who had trouble understanding function signatures, function instances and calling/executing a function. There was also confusion about returning a value from a function vs. printing the (same) value on screen or assigning it to a global variable (e.g. FIB_NUMBERS).

In the same category falls using method parameters vs. using global variables (which happened to have the same value), using the parameters as arguments to another function inside the body of the current function, and using instance attributes (e.g. self.name) to store and pass values around vs. local variables in methods vs. method parameters which have the same names.
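Here is a small Python sketch of the return vs. print vs. global distinction. It uses the FIB_NUMBERS global mentioned above, and the three function names are made up for illustration. Only the version that returns its result can be used by the caller or checked by a test. It also computes the sequence instead of hard-coding it.

```python
FIB_NUMBERS = []  # a global list, like the one students kept appending to


def fib_print(count):
    """Shows the numbers on screen but gives nothing back: the caller gets None."""
    a, b = 0, 1
    for _ in range(count):
        print(a)
        a, b = b, a + b


def fib_global(count):
    """Works only through a side effect on the global list."""
    a, b = 0, 1
    for _ in range(count):
        FIB_NUMBERS.append(a)
        a, b = b, a + b


def fib_return(count):
    """Computes the sequence and returns it to the caller."""
    numbers = []
    a, b = 0, 1
    for _ in range(count):
        numbers.append(a)
        a, b = b, a + b
    return numbers


result = fib_print(5)  # prints 0, 1, 1, 2, 3 on separate lines
print(result)          # None
fib_global(5)
print(FIB_NUMBERS)     # [0, 1, 1, 2, 3], a second call would append 5 more
print(fib_return(5))   # [0, 1, 1, 2, 3]
```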

I think there was some confusion about lists, dictionaries and tuples but we did practice mostly with list structures so I don't have enough information.

I have the impression that object oriented programming (classes and instances, we didn't go into inheritance) is generally confusing to beginners with zero programming experience. The classical way to explain it is by using abstractions like animal -> dog -> a particular dog breed -> a particular pet. OOP was explained to me in a similar way back in school, so these kinds of abstractions are very natural for me. I have no idea if my explanation sucks or the students are having a hard time wrapping their heads around the abstraction. I'd love to hear some feedback from other instructors on this one.

I think there is some misunderstanding between a class (a definition of behavior) and an instance/object of this class (something which exists in memory). This may also explain the difficulty remembering or figuring out what self points to and why we need to use it inside method bodies.
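One way to make self less mysterious is to show that rex.describe("happy") is just shorthand for Dog.describe(rex, "happy"). In other words, self is simply the object the method was called on. Below is a minimal Python sketch using a made-up Dog class in the spirit of the animal -> dog abstraction above. It also shows a class attribute, an instance attribute, a parameter and a local variable side by side.

```python
class Dog:
    # Class attribute: defined once on the class, shared by every instance
    species = "Canis familiaris"

    def __init__(self, name):
        # 'name' is a parameter of __init__; 'self.name' is stored
        # on the particular instance being created
        self.name = name

    def describe(self, mood):
        # 'mood' is a parameter and 'text' a local variable: both disappear
        # when describe() returns, while self.name lives on with the object
        text = f"{self.name} ({self.species}) is {mood}"
        return text


rex = Dog("Rex")  # the class is the blueprint, rex and lassie are objects in memory
lassie = Dog("Lassie")

print(rex.describe("happy"))           # Rex (Canis familiaris) is happy
print(Dog.describe(lassie, "sleepy"))  # Lassie (Canis familiaris) is sleepy
```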

We didn't do lots of practice with unittest.TestCase, which is my fault. The homework assignments ask the students to go back to solutions of previous modules and implement more tests for them. Next time I should provide a module (possibly with non-obvious bugs) and ask them to write a comprehensive test suite for it.

Because of the missing practice there was some confusion about the setUpClass/tearDownClass and the setUp/tearDown methods. Add to the mix that the former are @classmethod while the latter are not. "To be safe" students always defined both as class methods! I have since corrected the training materials, but we didn't have good examples (nor did we practice) explaining the difference between setUpClass (executed once per test class, before any of its test methods) and setUp (executed before each test method, i.e. possibly many times).
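Here is a minimal sketch that makes the difference visible. ExampleTest and the CALLS list exist only for illustration. Each fixture records its name, and running a class with two tests shows setUpClass and tearDownClass executing once, while setUp and tearDown execute around every test method.

```python
import io
import unittest

CALLS = []  # records the order in which unittest invokes the fixtures


class ExampleTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        # Once per class, before any test method (e.g. start a browser)
        CALLS.append("setUpClass")

    @classmethod
    def tearDownClass(cls):
        # Once per class, after all test methods (e.g. quit the browser)
        CALLS.append("tearDownClass")

    def setUp(self):
        # Before EACH test method (e.g. load a fresh page); a regular method
        CALLS.append("setUp")

    def tearDown(self):
        # After EACH test method
        CALLS.append("tearDown")

    def test_first(self):
        CALLS.append("test_first")

    def test_second(self):
        CALLS.append("test_second")


def run_and_record():
    """Run ExampleTest quietly and return the recorded call order."""
    CALLS.clear()
    suite = unittest.TestLoader().loadTestsFromTestCase(ExampleTest)
    unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    return CALLS


print(run_and_record())
# ['setUpClass', 'setUp', 'test_first', 'tearDown',
#  'setUp', 'test_second', 'tearDown', 'tearDownClass']
```

In a real project you would simply run the module with python -m unittest. The run_and_record() helper is only there to capture the order.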

On the Selenium side I think it is mostly practice which students lack, not understanding. The entire Selenium framework (any web test framework for that matter) boils down to

- Load a page

- Find element(s)

- Click or hover over (that one was tricky) an element

- Get element's attribute value or text

- Wait for the proper page to load (or, in the worst case, for AJAX calls)

IMO finding the correct element on the page is on-par with waiting (which also relies on locating elements) and took 80% of the time we spent working with Selenium.

Thanks for reading and don't forget to comment and give me your feedback!

Image source: https://www.udemy.com/selenium-webdriver-with-python/


Quality Assurance According 2 Einstein

Quality Assurance According 2 Einstein is a talk which introduces several different ideas about how we need to think about and approach software testing. It touches on subjects like mutation testing, pairwise testing, automatic test execution, smart test non-execution, using tests as monitoring tools and team/process organization.

Because testing is more thinking than writing I have chosen a different format for this presentation. It contains only slides with famous quotes from one of the greatest thinkers of our time - Albert Einstein!

This blog post includes the accompanying links and references only! It is my first iteration on the topic, so expect it to be unclear and incomplete and use your imagination! I will continue working and presenting on the same topic in the next few months, so you can expect updates from time to time. In the meantime I am happy to discuss with you down in the comments.

IMAGINATION IS MORE IMPORTANT THAN KNOWLEDGE.

- Hello World bug challenge

- Testing a Sudoku

- https://github.com/weldr/welder-web/pull/56

- https://github.com/weldr/welder-web/pull/59

THE FASTER YOU GO, THE SHORTER YOU ARE.

- Using Statistics to Predict Which Tests to Run

- The framework that knows its bugs

- Testing Red Hat Enterprise Linux the Microsoft way

- Automatic dependency testing with Strazar

- Automatic cargo update, test and pull request

IF THE FACTS DON'T FIT THE THEORY, CHANGE THE FACTS.

- Coverage is Not Strongly Correlated with Test Suite Effectiveness

- Code Coverage is a Strong Predictor of Test Suite Effectiveness

- Mutation testing vs. coverage, Pt. 1

- Mutation testing vs. coverage, Pt. 2

- There are 101 coverage metrics according to Cem Kaner. Which ones are you measuring and what conclusions are you making out of these metrics?

THE WHOLE OF SCIENCE IS NOTHING MORE THAN A REFINEMENT OF EVERYDAY THINKING.

- How to find 1000 bugs in 30 minutes

- How we found a million style and grammar errors in the English Wikipedia

- Simple Testing Can Prevent Most Critical Failures

- Need it robust, make it fragile

- btw it's me who asks the first question at the end :)

INSANITY - DOING THE SAME THING OVER AND OVER AND EXPECTING DIFFERENT RESULTS.

This principle can be applied to any team/process within the organization. The above link is a reference to a nice book which was recommended to me, but the gist of it is that we always need to analyze, ask questions and change if we want to achieve great results. A practical example of what is possible if you follow this principle is this talk: Accelerate Automation Tests From 3 Hours to 3 Minutes.

THE ONLY REASON FOR TIME IS SO THAT EVERYTHING DOESN'T HAPPEN AT ONCE.

The topic here is "using tests as a monitoring tool". This is something I started a while ago, helping a prominent startup with their production testing, but my involvement ended very soon after the framework was deployed live, so I don't have lots of insight.

In the first few days this technique identified some unexpected behaviors, for example a 3rd party service was updating very often. Once they were even broken for a few hours - something nobody had information about.

Since then I've heard about 2 more companies using similar techniques to continuously validate that production software keeps working without a person having to verify it manually. In the event of failures, alerts are raised and dealt with accordingly.
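At its core such a setup can be as simple as the following Python sketch. It assumes that each production check is a plain function which raises an exception (for example via assert) when something is wrong. It also assumes that scheduling is handled elsewhere, e.g. by cron or a CI service. The check functions are hypothetical, and the alert callback is a placeholder for whatever notification channel you use.

```python
def run_checks(checks, alert):
    """Run every production check once and alert on each failure.

    checks: mapping of check name -> callable that raises when the check fails
    alert:  callable taking a message string (e-mail, chat, pager, ...)
    Returns the names of the failed checks.
    """
    failed = []
    for name, check in checks.items():
        try:
            check()
        except Exception as exc:  # an assertion or any unexpected error is a failure
            failed.append(name)
            alert(f"{name} failed: {exc!r}")
    return failed


def homepage_loads():
    # Hypothetical check; a real one would request the page and inspect the response
    assert True


def third_party_service_is_up():
    # Hypothetical check simulating a broken 3rd party service
    raise AssertionError("3rd party service unavailable")


failures = run_checks(
    {"homepage": homepage_loads, "3rd party service": third_party_service_is_up},
    alert=print,
)
print(failures)  # ['3rd party service']
```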

NO PROBLEM CAN BE SOLVED FROM THE SAME LEVEL OF CONSCIOUSNESS THAT CREATED IT.

That much must be obvious to us quality engineers. What about the future however?

I don't have anything more concrete here. Just looking towards what is coming next!

DO NOT WORRY ABOUT YOUR DIFFICULTIES IN MATHEMATICS. I CAN ASSURE YOU MINE ARE STILL GREATER.

Thanks for reading and happy testing!


Automatic cargo update & pull requests for Rust projects

If you follow my blog you are aware that I use automated tools to do some boring tasks for me. For example, they can detect when new versions of dependencies I'm using are available and then schedule testing against them on the fly.

One of these tools is Strazar which I use heavily for my Django based packages. Example: django-s3-cache build job.

Recently I've made a slightly different proof-of-concept for a Rust project. Because rustc and various dependencies (called crates) are updated very often, we didn't want to expand the test matrix like Strazar does. Instead we wanted to always build & test against the latest crate versions and, if that passes, create a pull request for the update (in Cargo.lock). All of this unattended, of course!

To start, create a cron job in Travis CI which will execute once per day and call your test script. The script looks like this:

#!/bin/bash

if [ -z "$GITHUB_TOKEN" ]; then
    echo "GITHUB_TOKEN is not defined"
    exit 1
fi

BRANCH_NAME="automated_cargo_update"

git checkout -b "$BRANCH_NAME"

cargo update && cargo test

DIFF=$(git diff)

# NOTE: we don't really check the result from testing here. Only that
# something has been changed, e.g. Cargo.lock
if [ -n "$DIFF" ]; then
    # configure git authorship
    git config --global user.email "atodorov@MrSenko.com"
    git config --global user.name "Alexander Todorov"

    # add a remote with read/write permissions!
    # use token authentication instead of password
    git remote add authenticated "https://atodorov:$GITHUB_TOKEN@github.com/atodorov/bdcs-api-rs.git"

    # commit the changes to Cargo.lock
    git commit -a -m "Auto-update cargo crates"

    # push the changes so that PR API has something to compare against
    git push authenticated "$BRANCH_NAME"

    # finally create the PR
    curl -X POST -H "Content-Type: application/json" -H "Authorization: token $GITHUB_TOKEN" \
        --data '{"title":"Auto-update cargo crates","head":"automated_cargo_update","base":"master", "body":"@atodorov review"}' \
        https://api.github.com/repos/atodorov/bdcs-api-rs/pulls
fi

A few notes here:

- You need to define a secret GITHUB_TOKEN variable for authentication;

- The script doesn't force push, but in practice that may be useful (e.g. updating the PR);

- The script doesn't have any error handling;

- If the PR is still open GitHub will tell us about it, but we ignore the result here;

- DON'T paste this into your Makefile because the GITHUB_TOKEN variable will be expanded into the logs and your secrets go away! Always call the script from your Makefile to avoid revealing secrets.

- I am using topic branches because this is a POC. Switch to master and maybe move all URLs into variables at the top of the script!

- I run this cron build against a fork of the project because the team doesn't feel comfortable having automated commits/pushes. I also create the pull requests against my own fork. You will have to adjust the targets if you want your PR to go to the original repository.
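The curl call in the script hand-writes the JSON payload, which becomes error-prone once values such as the branch name come from variables. As an alternative, here is a minimal Python sketch that builds the same request with json.dumps(), so quoting is handled for you. The function name and its defaults are mine. Only the endpoint, the title/head/base/body fields and the token header mirror the curl call above.

```python
import json
import urllib.request


def build_pr_request(owner, repo, token, head, base="master",
                     title="Auto-update cargo crates", body=""):
    """Build (but don't send) a POST to the GitHub pull requests endpoint."""
    payload = {"title": title, "head": head, "base": base, "body": body}
    return urllib.request.Request(
        url=f"https://api.github.com/repos/{owner}/{repo}/pulls",
        data=json.dumps(payload).encode("utf-8"),  # json.dumps takes care of quoting
        headers={
            "Content-Type": "application/json",
            "Authorization": f"token {token}",
        },
        method="POST",
    )


request = build_pr_request("atodorov", "bdcs-api-rs", token="<GITHUB_TOKEN>",
                           head="automated_cargo_update", body="@atodorov review")
print(request.get_method(), request.full_url)
# To actually create the PR: urllib.request.urlopen(request)
```

urlopen() raises urllib.error.HTTPError on an error response, such as the one GitHub returns when the PR already exists. Unlike the shell script, this sketch lets you catch that case explicitly.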

Here is the PR which was created by this script: https://github.com/atodorov/bdcs-api-rs/pull/5

Notice that it includes previous commits b/c they have not been merged to the master branch!

Here's the test job (#77) which generated this PR: https://travis-ci.org/atodorov/bdcs-api-rs/builds/219274916

Here's a test job (#87) which bails out miserably because the PR already exists: https://travis-ci.org/atodorov/bdcs-api-rs/builds/220954269

This post is part of my Quality Assurance According to Einstein series - a detailed description of useful techniques I will be presenting very soon.

Thanks for reading and happy testing!


Testing Red Hat Enterprise Linux the Microsoft way

Pairwise (a.k.a. all-pairs) testing is an effective test case generation technique that is based on the observation that most faults are caused by interactions of at most two factors! Pairwise-generated test suites cover all combinations of values for any two parameters and are therefore much smaller than exhaustive ones, yet still very effective in finding defects. This technique has been pioneered by Microsoft in testing their products. For an example please see their GitHub repo!

I heard about pairwise testing from Niels Sander Christensen last year at QA Challenge Accepted 2.0 and I immediately knew where it would fit into my test matrix.

This article describes an experiment made during the Red Hat Enterprise Linux 6.9 installation testing campaign. The experiment covers generating a test plan (referred to as the Pairwise Test Plan) based on the pairwise test strategy and some heuristics. The goal was to reduce the number of test cases which needed to be executed while still maintaining good test coverage (in terms of breadth of testing) and low risk for the product.

Product background

For RHEL 6.9 there are 9 different product variants, each comprising a particular package set and CPU architecture:

- Server i386

- Server x86_64

- Server ppc64 (IBM Power)

- Server s390x (IBM mainframe)

- Workstation i386

- Workstation x86_64

- Client i386

- Client x86_64

- ComputeNode x86_64

Traditional testing activities are classified as Tier #1, Tier #2 and Tier #3:

- Tier #1 - basic form of installation testing. Executed for all arch/variants on all builds, including nightly builds. This group includes the most common installation methods and configurations. If Tier #1 fails, the product is considered unfit for customers and for further testing, which blocks the release!

- Tier #2 and #3 - include additional installation configurations and/or functionality which is deemed important. These are still fairly common scenarios but not the most frequently used ones. If some of the Tier #2 and #3 test cases fail they will not block the release.

This experiment focuses only on Tier #2 and #3 test cases because they generate the largest test matrix! This experiment is related only to installation testing of RHEL. This broadly means "Can the customer install RHEL via the Anaconda installer and boot into the installed system". I do not test functionality of the system after reboot!

I have theorized that from the point of view of installation testing RHEL is mostly a platform independent product!

Individual product variants rarely exhibit differences in their functional behavior because they are compiled from the same code base! If a feature is present it should work the same on all variants. The main differences between variants are:

- What software has been packaged as part of the variant (e.g. base package set and add-on repos);

- Whether or not a particular feature is officially supported, e.g. iBFT on Client variants. Support is usually provided by including the respective packages in the variant package set and declaring an SLA for it.

These differences may lead to problems with resolving dependencies and missing packages, but historically they haven't shown a significant tendency to cause functional failures, e.g. NFS as an installation source working on Server but not on Client.

The main component being tested, Anaconda - the installer, is also mostly platform independent. In a previous experiment I had collected code coverage data from Anaconda while performing installation with the same kickstart (or same manual options) on various architectures. The coverage report supports the claim that Anaconda is platform independent! See Anaconda & coverage.py - Pt.3 - coverage-diff, section Kickstart vs. Kickstart!

Testing approach

The traditional pairwise approach focuses on features whose functionality is controlled via parameters. For example: RAID level, encryption cipher, etc. I have taken this definition one level up and applied it to the entire product! Now functionality is also controlled by variant and CPU architecture! This allows me to reduce the number of total test cases in the test matrix but still execute all of them at least once!

The initial implementation used a simple script, built with the Ruby pairwise gem, that:

- Copies verbatim all test cases which are applicable to a single product variant only, for example s390x Server or ppc64 Server! There's nothing we can do to reduce these from a combinatorial point of view!

- Then we have the group of test cases with input parameters. For example:

  storage / iBFT / No authentication / Network init script
  storage / iBFT / CHAP authentication / Network Manager
  storage / iBFT / Reverse CHAP authentication / Network Manager

  In this example the test is storage / iBFT and the parameters are:

  - Authentication type
    - None
    - CHAP
    - Reverse CHAP
  - Network management type
    - SysV init
    - NetworkManager

  For test cases in this group I also consider the CPU architecture and OS variant as part of the input parameters and combine them using pairwise. Usually this results in around 50% reduction of test efforts compared to testing against all product variants!

- Last we have the group of test cases which don't depend on any input parameters, for example partitioning / swap on LVM. They are grouped together (wrt their applicable variants) and each test case is executed only once against a randomly chosen product variant! This is my own heuristic based on the fact that the product is platform independent!

  NOTE: You may think that for these test cases the product variant is their input parameter. If we consider this to be the case then we'll not get any reduction because of how pairwise generation works: the product of the numbers of values of the 2 largest parameters is a lower bound on the size of the test matrix. Here the product variant is the only parameter and its 9 values are already the whole matrix!

For this experiment pairwise_spec.rb only produced the list of test scenarios (test cases) to be executed! It doesn't schedule test execution and it doesn't update the test case management system with actual results. It just tells you what to do! Obviously this script will need to integrate with other systems and processes as defined by the organization!

Example results:

RHEL 6.9 Tier #2 and #3 testing
  Test case w/o parameters can't be reduced via pairwise
    x86_64 Server - partitioning / swap on LVM
    x86_64 Workstation - partitioning / swap on LVM
    x86_64 Client - partitioning / swap on LVM
    x86_64 ComputeNode - partitioning / swap on LVM
    i386 Server - partitioning / swap on LVM
    i386 Workstation - partitioning / swap on LVM
    i386 Client - partitioning / swap on LVM
    ppc64 Server - partitioning / swap on LVM
    s390x Server - partitioning / swap on LVM
  Test case(s) with parameters can be reduced by pairwise
    x86_64 Server - rescue mode / LVM / plain
    x86_64 ComputeNode - rescue mode / RAID / encrypted
    x86_64 Client - rescue mode / RAID / plain
    x86_64 Workstation - rescue mode / LVM / encrypted
    x86_64 Server - rescue mode / RAID / encrypted
    x86_64 Workstation - rescue mode / RAID / plain
    x86_64 Client - rescue mode / LVM / encrypted
    x86_64 ComputeNode - rescue mode / LVM / plain
    i386 Server - rescue mode / LVM / plain
    i386 Client - rescue mode / RAID / encrypted
    i386 Workstation - rescue mode / RAID / plain
    i386 Workstation - rescue mode / LVM / encrypted
    i386 Server - rescue mode / RAID / encrypted
    i386 Workstation - rescue mode / RAID / encrypted
    i386 Client - rescue mode / LVM / plain
    ppc64 Server - rescue mode / LVM / plain
    s390x Server - rescue mode / RAID / encrypted
    s390x Server - rescue mode / RAID / plain
    s390x Server - rescue mode / LVM / encrypted
    ppc64 Server - rescue mode / RAID / encrypted

Finished in 0.00602 seconds (files took 0.10734 seconds to load)
29 examples, 0 failures

In this example there are 9 (variants) * 2 (partitioning type) * 2 (encryption type) == 36 total combinations! As you can see pairwise reduced them to 20! Also notice that if you don't take CPU arch and variant into account you are left with 2 (partitioning type) * 2 (encryption type) == 4 combinations for each product variant, and they can't be reduced on their own!
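To illustrate where such a reduction comes from, here is a minimal greedy all-pairs generator in Python. This is not the algorithm used by the Ruby pairwise gem. It simply enumerates all 36 combinations and keeps picking the one that covers the most not-yet-covered pairs of values, which is only practical for small matrices like this one. On this input it selects 18 rows, while the gem produced 20 above, so the exact count depends on the algorithm. 18 is also the lower bound here, because each of the 9 variants must be paired with both partitioning types.

```python
from itertools import combinations, product

VARIANTS = [
    "x86_64 Server", "x86_64 Workstation", "x86_64 Client", "x86_64 ComputeNode",
    "i386 Server", "i386 Workstation", "i386 Client", "ppc64 Server", "s390x Server",
]
PARTITIONING = ["LVM", "RAID"]
ENCRYPTION = ["plain", "encrypted"]


def pairs_of(row):
    """Every pair of (parameter index, value) that a single test row covers."""
    return {((i, row[i]), (j, row[j])) for i, j in combinations(range(len(row)), 2)}


def all_pairs(parameters):
    """Greedy pairwise selection over the full Cartesian product."""
    candidates = list(product(*parameters))
    uncovered = set().union(*(pairs_of(row) for row in candidates))
    selected = []
    while uncovered:
        # max() returns the first candidate covering the most uncovered pairs
        best = max(candidates, key=lambda row: len(pairs_of(row) & uncovered))
        selected.append(best)
        uncovered -= pairs_of(best)
    return selected


plan = all_pairs([VARIANTS, PARTITIONING, ENCRYPTION])
for variant, partitioning, encryption in plan:
    print(f"{variant} - rescue mode / {partitioning} / {encryption}")
print(len(plan), "of", len(VARIANTS) * len(PARTITIONING) * len(ENCRYPTION))  # 18 of 36
```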

Acceptance criteria

I evaluated all bugs which were found by executing the test cases from the pairwise test plan and compared them to the list of all bugs found by the team. This tells me how good my pairwise test plan was compared to the regular one, "good" meaning:

- How many bugs would I find if I don't execute the full test matrix

- How many critical bugs would I miss if I don't execute the full test matrix

Results:

- Pairwise found 14 new bugs;

- 23 bugs were first found by the regular test plan;

  - some by test cases not included in this experiment;

- Pairwise totally missed 4 bugs!

The pairwise test plan missed 3 critical regressions due to:

- Poor planning of the pairwise test activity. There was a regression in one of the latest builds, and that particular test was simply not executed!

- The human factor, aka me not being careful enough and not following the process diligently. I waived a test because of infrastructure issues, and the bug it would have caught stayed undiscovered! I should have tried harder to retest this scenario after fixing my infrastructure!

- An architecture- and networking-specific regression which wasn't tested on multiple levels and is a very narrow corner case. This can be mitigated with more upstream testing, more automation and a better understanding of hidden test requirements (e.g. IPv4 vs IPv6). Pairwise can help with all of these by freeing up resources for analysis and testing.

All of the missed regressions could have been missed by the regular test plan as well. However, the risk of missing them is higher with pairwise because of the reduced test matrix, and because you may not execute exactly the same test scenario for quite a long time. On the other hand, the risk can be mitigated with more automation, because we now have more free resources.

IMO pairwise test plan did a good job and didn't introduce "dramatic" changes in risk level for the product!

Summary

- 65% reduction in test matrix;

- Only 1/3 of the team's engineers needed;

  - keep arch experts around though;

- 2/3 of the team's engineers could be freed up for automation and for creating even more test cases;

- Test run execution completion rate is comparable to the regular test plan:

  - average execution completion for the pairwise test plan was 76%!

  - average execution completion for the regular test plan was 85%!

- New bugs found:

  - 30% by Pairwise Test Plan

  - 30% by Tier #1 test cases (good job here)

  - 30% by exploratory testing

- Risk of missing regressions or critical bugs exists (I did miss 3) but can be mitigated;

- Clearly exposes the need for constant review, analysis and improvement of existing test cases;

- Exposes hidden parameters in test scenarios and some hidden relationships;

- Patterns and other optimization techniques observed

Patterns observed:

- Found many new test case combinations, which I had to document in Nitrate. The longer you use pairwise, the fewer new combinations (aka undocumented scenarios) are discovered. The first 3 test runs discovered most of the missing combinations!

- Found quite a few test cases with hidden parameters, for example swap / recommended, which calculates the recommended size of the swap partition based on the range (one of 4) into which the actual RAM size fits! These ranges became parameters to the test case;

- Can combine (2, 3, etc.) independent test cases and treat them as parameters, so we can apply pairwise to the combination. This creates new scenarios and broadens the test matrix without significantly increasing execution time. I didn't try this because it was not the focus of the experiment;

- Found some redundant/duplicate test cases; test plans need to be constantly analyzed and maintained, you know;

- Automated scheduling and tools integration is critical. This needs to be working perfectly in order to capitalize on the newly freed resources;

- Testing on s390x was sub-optimal (mostly due to my own inexperience with the platform), so for specialized environments we still want to keep the experts around;

- 1 engineer (me) was largely able to keep up with the rest of the team's schedule!

  - the experiment was conducted over the course of several months

  - I tried to adhere to all milestones and deadlines and mostly succeeded
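The hidden-parameter pattern (swap / recommended depending on which of 4 RAM ranges the machine falls into) can be made explicit in the test data. Below is a minimal sketch, assuming a test case is represented as a plain dict of parameter values. The range names and boundaries in `RAM_RANGES` are hypothetical placeholders, not the installer's real values. The helpers `representative_ram_gib` and `expand_hidden_parameter` are also hypothetical. Each hidden range becomes one value of a new `ram_range` parameter, and the expanded rows could then be fed to a pairwise generator instead of being run as a full product.

```python
# HYPOTHETICAL RAM ranges in GiB: placeholders for the 4 ranges that
# swap / recommended depends on, NOT the installer's real boundaries.
RAM_RANGES = {
    "range_1": (0, 2),
    "range_2": (2, 8),
    "range_3": (8, 64),
    "range_4": (64, 256),
}


def representative_ram_gib(range_name):
    """Pick one RAM size inside the range (its midpoint) for the test machine."""
    low, high = RAM_RANGES[range_name]
    return (low + high) / 2


def expand_hidden_parameter(test_rows, name, values):
    """Make a hidden parameter explicit: one row per (existing row, value)."""
    return [dict(row, **{name: value}) for row in test_rows for value in values]


base = [{"test": "swap / recommended", "arch": arch} for arch in ["x86_64", "ppc64"]]
rows = expand_hidden_parameter(base, "ram_range", list(RAM_RANGES))
for row in rows:
    print(row["test"], row["arch"], row["ram_range"],
          representative_ram_gib(row["ram_range"]), "GiB")
```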

I have also discovered ideas for new test execution optimization techniques which need to be evaluated and measured further:

- Use a common set-up step for multiple test cases across variants, e.g.

  - install a RAID system, then

  - perform 3 rescue mode tests (same test case, different variants)

- Pipeline test cases so that the result of one case is the setup for the next, e.g.

  - install a RAID system and test for correctness of the installation;

  - perform rescue mode test;

  - damage one of the RAID partitions while still in rescue mode;

  - test installation with damaged disks; it should not crash!

These techniques can be used stand-alone or in combination with other optimization techniques and tooling available to the team. They are specific to my particular kind of testing so beware of your surroundings before you try them out!
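A rough sketch of how the two execution ideas differ when driven from a script. The step functions are hypothetical stand-ins for real installer test steps, not actual tooling; each one takes the system state left by the previous step and returns the new state. `run_with_shared_setup` runs the set-up once and gives every variant its own copy of the result. The `copy.deepcopy` is a simplification of "each test starts from the same installed system". `run_pipeline` instead chains the steps so that each test case's result is the next one's set-up.

```python
import copy

# Hypothetical stand-ins for installer test steps (NOT real tooling).


def install_raid_system():
    return {"storage": "RAID", "raid_healthy": True, "log": ["install RAID system"]}


def verify_installation(state):
    assert state["raid_healthy"], "a fresh RAID install should be healthy"
    state["log"].append("verify installation")
    return state


def rescue_mode(state, variant="Server"):
    state["log"].append(f"rescue mode ({variant})")
    return state


def damage_raid_partition(state):
    state["raid_healthy"] = False
    state["log"].append("damage one RAID partition")
    return state


def install_on_damaged_disks(state):
    # The real check is "the installer must not crash"; here we only record the step.
    state["log"].append("install on damaged disks")
    return state


def run_with_shared_setup(setup, test, variants):
    """Technique 1: one set-up, then the same test for several variants on copies of it."""
    base = setup()
    return [test(copy.deepcopy(base), variant) for variant in variants]


def run_pipeline(setup, steps):
    """Technique 2: the state left by each test case is the set-up for the next one."""
    state = setup()
    for step in steps:
        state = step(state)
    return state


shared = run_with_shared_setup(install_raid_system, rescue_mode,
                               ["Server", "Client", "Workstation"])
pipeline = run_pipeline(
    install_raid_system,
    [verify_installation, rescue_mode, damage_raid_partition, install_on_damaged_disks],
)
print(pipeline["log"])
```

The shared set-up saves repeated installs while keeping the variant tests independent. The pipeline saves even more, but a failure early in the chain blocks every later step.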

Thanks for reading and happy testing!

Cover image copyright: cio-today.com


Mutation Testing vs. Coverage, Pt. 2

In a previous post I showed an example of real-world bugs which we were not able to detect despite having 100% mutation and test coverage. Here is another example.

This example comes from one of my training courses. The task is to write a class which represents a bank account with methods to deposit, withdraw and transfer money. The solution looks like this:

class BankAccount(object):
    def __init__(self, name, balance):
        self.name = name
        self._balance = balance
        self._history = []

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError('Deposit amount must be positive!')

        self._balance += amount

    def withdraw(self, amount):
        if amount <= 0:
            raise ValueError('Withdraw amount must be positive!')

        if amount <= self._balance:
            self._balance -= amount
            return True
        else:
            self._history.append("Withdraw for %d failed" % amount)
            return False

    def transfer_to(self, other_account, how_much):
        self.withdraw(how_much)
        other_account.deposit(how_much)

Notice that if the withdrawal is not possible, the function returns False. The tests look like this:

import unittest
from bank import BankAccount

class TestBankAccount(unittest.TestCase):
    def setUp(self):
        self.account = BankAccount("Rado", 0)

    def test_deposit_positive_amount(self):
        self.account.deposit(1)
        self.assertEqual(self.account._balance, 1)

    def test_deposit_negative_amount(self):
        with self.assertRaises(ValueError):
            self.account.deposit(-100)

    def test_deposit_zero_amount(self):
        with self.assertRaises(ValueError):
            self.account.deposit(0)

    def test_withdraw_positive_amount(self):
        self.account.deposit(100)
        result = self.account.withdraw(1)
        self.assertTrue(result)
        self.assertEqual(self.account._balance, 99)

    def test_withdraw_maximum_amount(self):
        self.account.deposit(100)
        result = self.account.withdraw(100)
        self.assertTrue(result)
        self.assertEqual(self.account._balance, 0)

    def test_withdraw_from_empty_account(self):
        result = self.account.withdraw(50)
        self.assertIsNotNone(result)
        self.assertFalse(result)
        assert "Withdraw for 50 failed" in self.account._history

    def test_withdraw_non_positive_amount(self):
        with self.assertRaises(ValueError):
            self.account.withdraw(0)

        with self.assertRaises(ValueError):
            self.account.withdraw(-1)

    def test_transfer_negative_amount(self):
        account_1 = BankAccount('For testing', 100)
        account_2 = BankAccount('In dollars', 10)

        with self.assertRaises(ValueError):
            account_1.transfer_to(account_2, -50)

        self.assertEqual(account_1._balance, 100)
        self.assertEqual(account_2._balance, 10)

    def test_transfer_positive_amount(self):
        account_1 = BankAccount('For testing', 100)
        account_2 = BankAccount('In dollars', 10)

        account_1.transfer_to(account_2, 50)

        self.assertEqual(account_1._balance, 50)
        self.assertEqual(account_2._balance, 60)


if __name__ == '__main__':
    unittest.main()

Try the following commands to verify that you have 100% coverage (both code coverage and mutation score):

coverage run test.py
coverage report
cosmic-ray run --test-runner nose --baseline 10 example.json bank.py -- test.py
cosmic-ray report example.json

Can you tell where the bug is? What if I try to transfer more money than is available from one account to the other:

def test_transfer_more_than_available_balance(self):
    account_1 = BankAccount('For testing', 100)
    account_2 = BankAccount('In dollars', 10)

    # transfer more than available
    account_1.transfer_to(account_2, 150)

    self.assertEqual(account_1._balance, 100)
    self.assertEqual(account_2._balance, 10)

If you execute the above test it will fail

FAIL: test_transfer_more_than_available_balance (__main__.TestBankAccount)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "./test.py", line 79, in test_transfer_more_than_available_balance
    self.assertEqual(account_2._balance, 10)
AssertionError: 160 != 10
----------------------------------------------------------------------

The problem is that when self.withdraw(how_much) fails, transfer_to() ignores the result and tries to deposit the money into the other account! A better implementation would be:

def transfer_to(self, other_account, how_much):
    if self.withdraw(how_much):
        other_account.deposit(how_much)
    else:
        raise Exception('Transfer failed!')
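With this version, the transfer now raises instead of silently depositing, so test_transfer_more_than_available_balance as written above would error out rather than pass; the test has to expect the exception. Below is a self-contained sketch that combines the fixed transfer_to() with such a test. The test class name `TestFixedTransfer` and the `assertRaises(Exception)` wrapper are my additions for illustration, not part of the original suite. The suite is run through a `TextTestRunner` instead of `unittest.main()`, so the script doesn't exit when the tests finish.

```python
import unittest


class BankAccount(object):
    def __init__(self, name, balance):
        self.name = name
        self._balance = balance
        self._history = []

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError('Deposit amount must be positive!')
        self._balance += amount

    def withdraw(self, amount):
        if amount <= 0:
            raise ValueError('Withdraw amount must be positive!')
        if amount <= self._balance:
            self._balance -= amount
            return True
        self._history.append("Withdraw for %d failed" % amount)
        return False

    def transfer_to(self, other_account, how_much):
        # Deposit only if the withdrawal actually succeeded.
        if self.withdraw(how_much):
            other_account.deposit(how_much)
        else:
            raise Exception('Transfer failed!')


class TestFixedTransfer(unittest.TestCase):
    def test_transfer_more_than_available_balance(self):
        account_1 = BankAccount('For testing', 100)
        account_2 = BankAccount('In dollars', 10)

        # The failed transfer must raise and leave both balances untouched.
        with self.assertRaises(Exception):
            account_1.transfer_to(account_2, 150)

        self.assertEqual(account_1._balance, 100)
        self.assertEqual(account_2._balance, 10)

    def test_transfer_positive_amount(self):
        account_1 = BankAccount('For testing', 100)
        account_2 = BankAccount('In dollars', 10)

        account_1.transfer_to(account_2, 50)

        self.assertEqual(account_1._balance, 50)
        self.assertEqual(account_2._balance, 60)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestFixedTransfer)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Raising a generic Exception mirrors the implementation above. A dedicated exception class would let callers and tests catch the failed transfer more precisely.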

In my earlier article the bugs were caused by the external environment, and tools/metrics like code coverage and mutation score are not affected by it. In fact, the jinja-ab example falls into the category of data coverage testing.

The current example, on the other hand, ignores the return value of the withdraw() function, and that's why it fails when we add the appropriate test.

NOTE: some mutation testing tools support mutations which remove or modify return values. Cosmic Ray doesn't support this at the moment (I should add it). Even if it did, that would not help us find the bug, because we would kill the mutation using the test_withdraw...() test methods, which already assert on the return value!
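To make the NOTE concrete, here is a hand-written mutant. It is a sketch, not Cosmic Ray output; the class `ReturnValueMutant` and the two check functions are hypothetical. The mutant keeps withdraw()'s effect on the balance but removes its return value. A check modeled on test_withdraw_from_empty_account kills it. A check modeled on test_transfer_positive_amount cannot tell the mutant from the original, because the original transfer_to() never looks at the return value.

```python
class BankAccount(object):
    # Same as the original listing, including the transfer_to() that ignores withdraw().
    def __init__(self, name, balance):
        self.name = name
        self._balance = balance
        self._history = []

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError('Deposit amount must be positive!')
        self._balance += amount

    def withdraw(self, amount):
        if amount <= 0:
            raise ValueError('Withdraw amount must be positive!')
        if amount <= self._balance:
            self._balance -= amount
            return True
        self._history.append("Withdraw for %d failed" % amount)
        return False

    def transfer_to(self, other_account, how_much):
        self.withdraw(how_much)
        other_account.deposit(how_much)


class ReturnValueMutant(BankAccount):
    """Mutant: withdraw() still changes the balance, but its return value is removed."""

    def withdraw(self, amount):
        super(ReturnValueMutant, self).withdraw(amount)
        return None


def withdraw_check_passes(cls):
    """Mirrors test_withdraw_from_empty_account: a failed withdraw returns False, not None."""
    result = cls("Rado", 0).withdraw(50)
    return result is not None and not result


def transfer_check_passes(cls):
    """Mirrors test_transfer_positive_amount: only the balances are asserted."""
    account_1 = cls('For testing', 100)
    account_2 = cls('In dollars', 10)
    account_1.transfer_to(account_2, 50)
    return account_1._balance == 50 and account_2._balance == 60


for cls in (BankAccount, ReturnValueMutant):
    print(cls.__name__, "withdraw check:", withdraw_check_passes(cls),
          "transfer check:", transfer_check_passes(cls))
```

With the better transfer_to() shown above, the transfer check would also fail for this mutant. None is falsy, so the transfer would raise instead of depositing.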

Thanks for reading and happy testing!


Article about Nitrate in Methods & Tools

Nitrate is an open source test plan, test run and test case management system I have been working on for a while now. I have been maintaining a custom fork over at Mr. Senko which includes various bug fixes and enhancements which are not yet upstream.

Recently the Methods & Tools QA portal published an article about Nitrate. You can find it here!

Happy reading!


Building cardboard robots

My previous blog post was about the Hello Ruby book, Coder Dojo and making computers out of paper: all cool things for a 5-year-old girl. This week I discovered the Build the Robot book (link to BG edition)!

The book includes colorful pictures and some interesting facts about robots. On the second page it talks about degrees of freedom, which I studied at technical university during my Mechanics course. How's that for a children's book?

The most important part of the book is the set of cardboard models of 3 robots: a walking one (orange), a dancing one (light blue) and one waving its hands (black). The pieces are held together by friction, and all 3 robots use spring-loaded motors for some basic movements.

We did have to use some glue because one of the legs kept falling apart but overall the print/cut quality of the Bulgarian edition was very good.

Of the 3 robots, the walking one works worst. I think it is too heavy for the motor to move around. The dancing robot works most of the time. The robot which waves its hands up and down works best!