4 Quick Wins to Manage the Cost of Software Testing

Every activity in software development has a cost and a value. Getting cost to trend down while increasing value, is the ultimate goal.

This is the introduction of an e-book called 4 Quick Wins to Manage the Cost of Software Testing. It was sent to me by Ivan Fingarov a couple of months ago. I have only now managed to read it, so here is a quick summary. I urge everyone to download the original copy and give it a read.

The paper focuses on several practices which organizations can apply immediately in order to become more efficient and transparent in their software testing. While larger organizations (e.g. enterprises) have most of these practices already in place, smaller companies (up to 50-100 engineering staff) may not be familiar with them and will reap the most benefits from implementing them. Even though I work for a large enterprise I find this guide useful when considered at the individual team level!

The first chapter focuses on Tactics to minimize cost: Process, Tools, Bug System Mining and Eliminating Handoffs.

In Process the goal is to minimize the burden of documenting the test process (aka testing artifacts), allow for better transparency and visibility outside the QA group and streamline the decisions about what to test, when to stop testing, how much has been tested, what the risk is, etc. The authors propose testing core functionality paired with emerging risk areas based on new feature development. They propose making a list of these, sorting that list by perceived risk/priority and testing as much as possible. Indeed this is very similar to the method I've used at Red Hat when designing testing for new features and new major releases of Red Hat Enterprise Linux. I've seen a similar method in place at several start-ups as well, although in a small organization the primary driver for it is the lack of sufficient test resources.

Tools proposes the use of test case management systems to ease the documentation burden. I've used TestLink and Nitrate. Of the two, Nitrate has more features but is currently unmaintained, with me being the largest contributor on GitHub. Of the paid variants I've used Polarion, which I generally dislike. Polarion is most suitable for large organizations because it gives lots of opportunities for tracking and reporting. For small organizations it is overkill.

Bug System Mining is a technique which involves regularly scanning the bug tracker and searching for patterns. This is useful for finding bug types which appear frequently and generally point to a flaw in the software development process. The fix for these flaws usually is a change in policy/workflow which eliminates the source of the errors. I'm a fan of this technique when I join an existing project and need to assess its current state. I've done this when consulting for a few start-ups, including Jitsi Meet (acquired by Atlassian). However, I'm not doing bug mining on a regular basis, which I consider a drawback, and I really should start!

For example at one project I found lots of bugs reported against translations, e.g. missing translations, text overflowing the visible screen area or not playing well with existing design, chosen language/style not fitting well with the product domain, etc.

The root cause of the problem was how the software in question had been localized. The translators were given a file of English strings, which they would translate and return in a spreadsheet. Developers would copy and paste the translated strings into localization files and integrate them with the software. Then QA would usually inspect all the pages and report the above issues. The solution was to remove devel and QA from the translation process and implement a translation management system together with a live preview (web based), so that translators can keep track of what is left to translate and can visually inspect their work immediately after a string is translated. Thus translators are given more context for their work but are also given the responsibility to produce good quality translations.

Another example I've seen is a large number of bugs which look like follow-ups or nice-to-have features for partially implemented functionality. The root cause of this problem turned out to be that devel was jumping straight to implementation without taking the time to brainstorm and consult with QE and product owners, not taking into account corner cases and minor issues which would have easily been raised by skillful testers. This process led to several iterations until the functionality was considered initially implemented.
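The pattern hunting described under Bug System Mining can start very small. Below is a minimal sketch which counts bugs per component to surface the areas that keep producing reports. The bug records, component names and the most_common_components helper are all made up for illustration; a real analysis would read an export from your own bug tracker.

```python
from collections import Counter

# Hypothetical export from a bug tracker: (bug id, component, summary).
BUGS = [
    (101, "translations", "Missing German translation on login page"),
    (102, "translations", "Text overflows button in French locale"),
    (103, "checkout", "Crash when cart is empty"),
    (104, "translations", "Wrong tone of voice in Spanish strings"),
    (105, "search", "Follow-up: sorting not implemented for results"),
]


def most_common_components(bugs, top=3):
    """Count bugs per component and return the most frequent ones."""
    counts = Counter(component for _id, component, _summary in bugs)
    return counts.most_common(top)


if __name__ == "__main__":
    for component, count in most_common_components(BUGS):
        print(f"{component}: {count}")
```

A component that dominates the list is only a hint. The root cause still has to be found by reading the bugs themselves, as in the translation example above.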

Eliminating Handoffs proposes the use of cross-functional teams to reduce idle time and reduce the back-and-forth communication which happens when a bug is found, reported, evaluated and considered for a fix, fixed by devel and finally deployed for testing. This method argues that including testers early in the process and pairing them with the devel team will produce faster bug fixes and reduce communication burden.

While I generally agree with that statement it's worth noting that cross-functional teams perform really well when all team members have relatively equal skill level on the horizontal scale and strong experience on the vertical scale (think T-shaped specialist). Cross-functional teams don't work well when you have developers who aren't well versed in the testing domain and/or testers who are not well versed in programming or the broader OS/computer science fundamentals domain. In my opinion you need well experienced engineers for a good cross-functional team.

In the chapter Collaboration the paper focuses on pairing, building the right thing and faster feedback loops for developers. This overlaps with earlier proposals for cross-functional teams and QA bringing value by asking the "what if" questions. The chapter specifically talks about the Three Amigos meeting between PM, devel and QA where they discuss a feature proposal from all angles and finally come to a conclusion what the feature should look like. I'm a strong supporter of this technique and have been working with it under one form or another during my entire career. This also touches on the notion that testers need to move into the Quality Assistance business and be proactive during the software development process, which is something I'm hoping to talk about at the Romanian Testing Conference next year!

Finally the book talks about Skills Development and makes the distinction between Centers of Excellence (CoE) and Communities of Practice (CoP). Both the book and I are supporters of the CoP approach. This is a bottom-up approach which is open for everyone to join and harnesses the team's creative abilities. It also takes into account that different teams use different methods and tools and that "one size doesn't fit all"!

Skilled teams find important bugs faster, discover innovative solutions to hard testing problems and know how to communicate their value. Sometimes, a few super testers can replace an army of average testers.

While I consider myself to be a "super tester" with thousands of bugs reported there is a very important note to make here. Communities of Practice are successful when their members are self-focused on skill development! In my view, and to some extent in the communities I've worked with, everyone should strive to constantly improve their skills but also exercise peer pressure on their co-workers so they don't fall behind. This has been confirmed by other folks in the QA industry and I've heard it many times when talking to friends from other companies.

Thanks for reading and happy testing!


Mutation Testing vs. Coverage

At GTAC 2015 Laura Inozemtseva gave a lightning talk titled Coverage is Not Strongly Correlated with Test Suite Effectiveness, which is the single event that got me hooked on mutation testing. This year, at GTAC 2016, Rahul Gopinath made a counter argument with his lightning talk Code Coverage is a Strong Predictor of Test Suite Effectiveness. So which one is better? I urge you to watch both talks and take notes before reading about my practical experiment and other opinions on the topic!

DISCLAIMER: I'm a heavy contributor to Cosmic-Ray, the mutation testing tool for Python so my view is biased!

Both Laura and Rahul (and you will too) agree that a test suite's effectiveness depends on the strength of its oracles, in other words the assertions you make in your tests. This is what makes a test suite good and determines its ability to detect bugs when present. I've decided to use pelican-ab as a practical example. pelican-ab is a plugin for Pelican, the static site generator for Python. It allows you to generate A/B experiments by writing out the content into different directories and adjusting URL paths based on the experiment name.

Can 100% code coverage detect bugs

Absolutely NOT! In version 0.2.1, commit ef1e211, pelican-ab had the following bug:

Given: Pelican's DELETE_OUTPUT_DIRECTORY is set to True (which it is by default)

When: we generate several experiments using the commands:

AB_EXPERIMENT="control" make regenerate

AB_EXPERIMENT="123" make regenerate

AB_EXPERIMENT="xy" make regenerate

make publish

Actual result: only the "xy" experiment (the last one) would be published online.

And: all of the other contents will be deleted.

Expected result: content from all experiments will be available under the output directory.

This is because before each invocation Pelican deletes the output directory and re-creates the entire content structure. The bug was not caught despite having 100% line + branch coverage. See Build #10 for more info.

Can 100% mutation coverage detect bugs

So I've branched off from commit ef1e211 into the mutation_testing_vs_coverage_experiment branch (requires Pelican==3.6.3).

After initial execution of Cosmic Ray I have 2 mutants left:

$ cosmic-ray run --baseline=10 --test-runner=unittest example.json pelican_ab -- tests/

$ cosmic-ray report example.json

job ID 29:Outcome.SURVIVED:pelican_ab

command: cosmic-ray worker pelican_ab mutate_comparison_operator 3 unittest -- tests/

--- mutation diff ---

--- a/home/senko/pelican-ab/pelican_ab/__init__.py

+++ b/home/senko/pelican-ab/pelican_ab/__init__.py

@@ -14,7 +14,7 @@

def __init__(self, output_path, settings=None):

super(self.__class__, self).__init__(output_path, settings)

experiment = os.environ.get(jinja_ab._ENV, jinja_ab._ENV_DEFAULT)

- if (experiment != jinja_ab._ENV_DEFAULT):

+ if (experiment > jinja_ab._ENV_DEFAULT):

self.output_path = os.path.join(self.output_path, experiment)

Content.url = property((lambda s: ((experiment + '/') + _orig_content_url.fget(s))))

URLWrapper.url = property((lambda s: ((experiment + '/') + _orig_urlwrapper_url.fget(s))))

job ID 33:Outcome.SURVIVED:pelican_ab

command: cosmic-ray worker pelican_ab mutate_comparison_operator 7 unittest -- tests/

--- mutation diff ---

--- a/home/senko/pelican-ab/pelican_ab/__init__.py

+++ b/home/senko/pelican-ab/pelican_ab/__init__.py

@@ -14,7 +14,7 @@

def __init__(self, output_path, settings=None):

super(self.__class__, self).__init__(output_path, settings)

experiment = os.environ.get(jinja_ab._ENV, jinja_ab._ENV_DEFAULT)

- if (experiment != jinja_ab._ENV_DEFAULT):

+ if (experiment not in jinja_ab._ENV_DEFAULT):

self.output_path = os.path.join(self.output_path, experiment)

Content.url = property((lambda s: ((experiment + '/') + _orig_content_url.fget(s))))

URLWrapper.url = property((lambda s: ((experiment + '/') + _orig_urlwrapper_url.fget(s))))

total jobs: 33

complete: 33 (100.00%)

survival rate: 6.06%

The last one, job 33, is an equivalent mutation. The first one, job 29, is killed by the test added in commit b8bff85. For all practical purposes we now have 100% code coverage and 100% mutation coverage. The bug described above still exists though.
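To make the surviving mutant from job 29 more tangible, here is a minimal sketch of what it means to kill it. It assumes the default experiment name is "control"; the real value lives in jinja_ab._ENV_DEFAULT and is not shown in this article. The function names are hypothetical and this is not the test from commit b8bff85, only an illustration of the idea.

```python
# Assumption: the default experiment name is "control"; the real value lives
# in jinja_ab._ENV_DEFAULT and is not shown in this article.
DEFAULT = "control"


def needs_subdir_original(experiment):
    """Original condition: any non-default experiment gets its own subdirectory."""
    return experiment != DEFAULT


def needs_subdir_mutant(experiment):
    """The surviving mutant from job 29: '!=' replaced with '>'."""
    return experiment > DEFAULT


def kills_mutant(test_inputs):
    """A test input kills the mutant when original and mutant disagree on it."""
    return any(
        needs_subdir_original(name) != needs_subdir_mutant(name)
        for name in test_inputs
    )
```

Under that assumption, a test suite which only uses experiment names that sort after the default (like "xy") cannot tell the two versions apart, while a name that sorts before it (like "123") exposes the difference.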

How can we detect the bug

The bug isn't detected by any test because we don't have tests designed to perform and validate the exact same steps that a physical person will execute when using pelican-ab. Such a test was added in commit ca85bd0 and you can see that it causes Build #22 to fail.

Experiment with setting DELETE_OUTPUT_DIRECTORY=False in tests/pelicanconf.py and the test will PASS!
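The difference between a per-experiment check and a test which follows the user's steps can be reproduced with a toy model. The sketch below is not Pelican's or pelican-ab's code: generate and published_experiments are hypothetical helpers, and for simplicity every experiment, including the control, gets its own subdirectory.

```python
import os
import shutil
import tempfile


def generate(output_dir, experiment, delete_output_directory=True):
    """Toy stand-in for one 'make regenerate' run (not Pelican's real code)."""
    if delete_output_directory and os.path.isdir(output_dir):
        shutil.rmtree(output_dir)  # wipes the results of earlier experiments
    target = os.path.join(output_dir, experiment)
    os.makedirs(target, exist_ok=True)
    with open(os.path.join(target, "index.html"), "w") as page:
        page.write(f"experiment: {experiment}\n")


def published_experiments(experiments, delete_output_directory=True):
    """Generate the experiments one after another and list what survives."""
    root = tempfile.mkdtemp()
    output_dir = os.path.join(root, "output")
    try:
        for name in experiments:
            generate(output_dir, name, delete_output_directory)
        return sorted(os.listdir(output_dir))
    finally:
        shutil.rmtree(root)
```

Generating a single experiment executes every line of generate and looks fine. Only the full sequence of three experiments shows that the last one is all that remains, and that turning the deletion off keeps them all.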

Is pelican-ab bug free

Of course not. Even after 100% code and mutation coverage and after manually constructing a test which mimics user behavior there is at least one more bug present. There is a pylint bad-super-call error, fixed in commit 193e3db. For more information about the error see this blog post.

Other bugs found

In my humble experience with mutation testing so far I've added quite a few new tests and discovered two bugs which went unnoticed for years. The first one is a constructor parameter not passed to the parent constructor, see PR#96, pykickstart/commands/authconfig.py

def __init__(self, writePriority=0, *args, **kwargs):

- KickstartCommand.__init__(self, *args, **kwargs)

+ KickstartCommand.__init__(self, writePriority, *args, **kwargs)

self.authconfig = kwargs.get("authconfig", "")

The second bug is a parameter being passed to the parent class constructor although the parent class doesn't care about this parameter. For example PR#96, pykickstart/commands/driverdisk.py

- def __init__(self, writePriority=0, *args, **kwargs):

- BaseData.__init__(self, writePriority, *args, **kwargs)

+ def __init__(self, *args, **kwargs):

+ BaseData.__init__(self, *args, **kwargs)

Also note that pykickstart has nearly 100% test coverage as a whole and the affected files were 100% covered as well.
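The first bug is easy to reproduce in isolation. The sketch below uses hypothetical class names (BaseCommand, BuggyCommand, FixedCommand), not pykickstart's real classes, and only mirrors the shape of the constructors in the diff above.

```python
class BaseCommand:
    """Hypothetical parent class which stores the write priority."""

    def __init__(self, writePriority=0, *args, **kwargs):
        self.writePriority = writePriority


class BuggyCommand(BaseCommand):
    """Accepts writePriority but forgets to forward it to the parent."""

    def __init__(self, writePriority=0, *args, **kwargs):
        BaseCommand.__init__(self, *args, **kwargs)


class FixedCommand(BaseCommand):
    """Forwards writePriority, as in the first fix above."""

    def __init__(self, writePriority=0, *args, **kwargs):
        BaseCommand.__init__(self, writePriority, *args, **kwargs)
```

Simply constructing BuggyCommand(writePriority=50) executes every line of both constructors, so line coverage reports 100%, yet the resulting object silently keeps the default priority of 0. Only an assertion on writePriority notices the difference.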

The bugs above don't seem like a big deal and when considered out of context are relatively minor. However pykickstart's biggest client is anaconda, the Fedora and Red Hat Enterprise Linux installation program. Anaconda uses pykickstart to parse and generate text files (called kickstart files) which contain information for driving the installation in a fully automated manner. This is used by everyone who installs Linux on a large scale and is pretty important functionality!

writePriority controls the order in which individual commands are written to file at the end of the installation. In rare cases commands may depend on the order in which they are written. Now imagine the bugs above produce a disordered kickstart file, which a system administrator thinks should work but doesn't. It may be the case that this administrator is trying to provision hundreds of Linux systems to bootstrap a new data center or is performing disaster recovery. You get the scale of the problem now, don't you?

To be honest I've seen bugs of this nature but not in the last several years.

This is all to say a minor change like this may have an unexpectedly big impact somewhere down the line.

Conclusion

With respect to the above findings and my bias I'll say the following:

- Neither 100% coverage nor 100% mutation coverage is a silver bullet against bugs;

- 100% mutation coverage appears to be better than 100% code coverage in practice;

- Mutation testing clearly points out pieces of code which need refactoring, which in turn minimizes the number of possible mutations;

- Mutation testing causes you to write more asserts and construct more detailed tests which is always a good thing when testing software;

- You can't replace humans designing test cases just yet but can give them tools to allow them to write more and better tests;

- You should not rely on a single tool (or two of them) because tools are only able to find bugs they were designed for!

Bonus: What others think

As a bonus to this article let me share a transcript from the mutation-testing.slack.com community:

atodorov 2:28 PM

Hello everyone, I'd like to kick-off a discussion / interested in what you think about

Rahul Gopinath's talk at GTAC this year. What he argues is that test coverage is still

the best metric for how good a test suite is and that mutation coverage doesn't add much

additional value. His talk is basically the opposite of what @lminozem presented last year

at GTAC. Obviously the community here and especially tools authors will have an opinion on

these two presentations.

tjchambers 12:37 AM

@atodorov I have had the "pleasure" of working on a couple projects lately that illustrate

why LOC test coverage is a misnomer. I am a **strong** proponent of mutation testing so will

declare my bias.

The projects I have worked on have had a mix of test coverage - one about 50% and

another > 90%.

In both cases however there was a significant difference IMO relative to mutation coverage

(which I have more faith in as representative of true tested code).

Critical factors I see when I look at the difference:

- Line length: in both projects the line lengths FAR exceeded visible line lengths that are

"acceptable". Many LONGER lines had inline conditionals at the end, or had ternary operators

and therefore were in fact only 50% or not at all covered, but were "traversed"

- Code Conviction (my term): Most of the code in these projects (Rails applications) had

significant Hash references all of which were declared in "traditional" format hhh[:symbol].

So it was nearly impossible for the code in execution to confirm the expectation of the

existence of a hash entry as would be the case with stronger code such as "hhh.fetch(:symbol)"

- Instance variables abound: As with most of Rails code the number of instance variables

in a controller are extreme. This pattern of reference leaked into all other code as well,

making it nearly impossible with the complex code flow to ascertain proper reference

patterns that ensured the use of the instance variables, so there were numerous cases

of instance variable typos that went unnoticed for years. (edited)

- .save and .update: yes again a Rails issue, but use of these "weak" operations showed again

that although they were traversed, in many cases those method references could be removed

during mutation and the tests would still pass - a clear indication that save or update was

silently failing.

I could go on and on, but the mere traversal of a line of code in Ruby is far from an indication

of anything more than it may be "typed in correctly".

@atodorov Hope that helps.

LOC test coverage is a place to begin - NOT a place to end.

atodorov 1:01 AM

@tjchambers: thanks for your answer. It's too late for me here to read it carefully but

I'll do it tomorrow and ping you back

dkubb 1:13 AM

As a practice mutation testing is less widely used. The tooling is still maturing. Depending on your

language and environment you might have widely different experiences with mutation testing

I have not watched the video, but it is conceivable that someone could try out mutation testing tools

for their language and conclude it doesn’t add very much

mbj 1:14 AM

Yeah, I recall talking with @lminozem here and we identified that the tools she used likely

show high rates of false positives / false coverage (as the tools likely do not protect against

certain types of integration errors)

dkubb 1:15 AM

IME, having done TDD for about 15+ years or so, and mutation testing for about 6 years, I think

when it is done well it can be far superior to using line coverage as a measurement of test quality

mbj 1:16 AM

Any talk pro/against mutation testing must, as the tool basis is not very homogeneous, show a non consistent result.

dkubb 1:16 AM

Like @tjchambers says though, if you have really poor line coverage you’re not going to

get as much of a benefit from mutation testing, since it’s going to be telling you what

you already know — that your project is poorly tested and lots of code is never exercised

mbj 1:19 AM

Thats a good and likely the core point. I consider that mutation testing only makes sense

when aiming for 100% (and this is to my experience not impractical).

tjchambers 1:20 AM

I don't discount the fact that tool quality in any endeavor can bring pro/con judgements

based on particular outcomes

dkubb 1:20 AM

What is really interesting for people is to get to 100% line coverage, and then try mutation

testing. You think you’ve done a good job, but I guarantee mutation testing will find dozens

if not hundreds of untested cases .. even in something with 100% line coverage

To properly evaluate mutation testing, I think this process is required, because you can’t

truly understand how little line coverage gives you in comparison

tjchambers 1:22 AM

But I don't need a tool to tell me that a 250 character line of conditional code that by

itself would be an oversized method AND counts more because there are fewer lines in the

overall percentage contributes to a very foggy sense of coverage.

dkubb 1:22 AM

It would not be unusual for something with 100% line coverage to undergo mutation testing

and actually find out that the tests only kill 60-70% of possible mutations

tjchambers 1:22 AM

@dkubb or less

dkubb 1:23 AM

usually much less :stuck_out_tongue:

it can be really humbling

mbj 1:23 AM

In this discussion you miss that many test suites (unless you have noop detection):

Will show false coverage.

tjchambers 1:23 AM

When I started with mutant on my own project which I developed I had 95% LOC coverage

mbj 1:23 AM

Test suites need to be fixed to comply to mutation testing invariants.

tjchambers 1:23 AM

I had 34% mutation coverage

And that was ignoring the 5% that wasn't covered at all

mbj 1:24 AM

Also if the tool you compare MT with line coverage on: Is not very strong,

the improvement may not be visible.

dkubb 1:24 AM

another nice benefit is that you will become much better at enumerating all

the things you need to do when writing tests

tjchambers 1:24 AM

@dkubb or better yet - when writing code.

The way I look at it - the fewer the alive mutations the better the test,

the fewer the mutations the better the code.

dkubb 1:29 AM

yeah, you can infer a kind of cyclomatic complexity by looking at how many mutations there are

tjchambers 1:31 AM

Even without tests (not recommended) you can judge a lot from the mutations themselves.

I still am an advocate for mutations/LOC metric

As you can see members in the community are strong supporters of mutation testing, all of them having much more experience than I do.

Please help me collect more practical examples! My goal is to collect enough information and present the findings at GTAC 2017 which will be held in London.

UPDATE: I have written Mutation testing vs. coverage, Pt.2 with another example.

Thanks for reading and happy testing!


How to create auxiliary build jobs in Travis-CI matrix

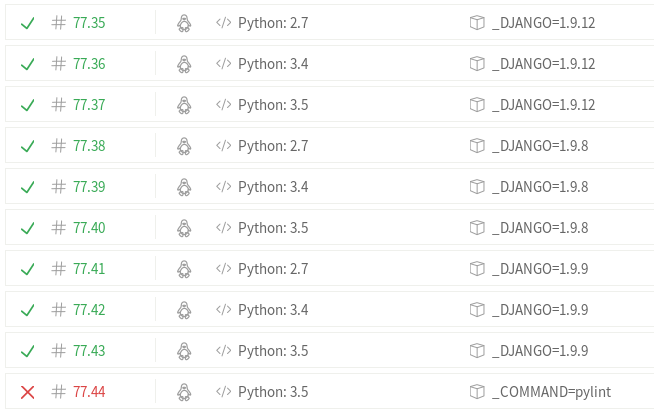

In Travis-CI when you combine the three main configuration options of Runtime (language), Environment and Exclusions/Inclusions you get a build matrix of all possible combinations! For example, for django-chartit the matrix includes 43 build jobs, spread across various Python and Django versions. For reference see Build #75.

For django-chartit I wanted to have an additional build job which would execute pylint. I wanted the job to be independent because currently pylint produces lots of errors and warnings. Having an independent job instead of integrating pylint together with all jobs makes it easier to see if any of the functional tests failed.

Using the inclusion functionality of Travis-CI I was able to define an auxiliary build job. The trick is to provide sane environment defaults for all build jobs (regular and auxiliary ones) so you don't have to expand your environment section! In this case the change looks like this:

diff --git a/.travis.yml b/.travis.yml

index 67f656d..9b669f9 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -2,6 +2,8 @@ after_success:

- coveralls

before_install:

- pip install coveralls

+- if [ -z "$_COMMAND" ]; then export _COMMAND=coverage; fi

+- if [ -z "$_DJANGO" ]; then export _DJANGO=1.10.4; fi

env:

- !!python/unicode '_DJANGO=1.10'

- !!python/unicode '_DJANGO=1.10.2'

@@ -41,6 +43,10 @@ matrix:

python: 3.3

- env: _DJANGO=1.10.4

python: 3.3

+ include:

+ - env: _COMMAND=pylint

+ python: 3.5

+

notifications:

email:

on_failure: change

@@ -50,4 +56,4 @@ python:

- 3.3

- 3.4

- 3.5

-script: make coverage

+script: make $_COMMAND

For more info take a look at commit b22eda7 and Build #77. Note Job #77.44!
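The way the matrix expands, and how a single included job with defaults fits into it, can be modelled in a few lines of Python. This is only a sketch of the idea and not how Travis-CI is implemented; build_matrix is a hypothetical helper and the versions in the usage example are illustrative. The DEFAULTS mirror the two before_install lines from the diff.

```python
from itertools import product

# Defaults applied when a job does not set the variable itself,
# mirroring the before_install lines in the diff above.
DEFAULTS = {"_COMMAND": "coverage", "_DJANGO": "1.10.4"}


def build_matrix(pythons, envs, exclude=(), include=()):
    """Expand python x env combinations, drop exclusions, append inclusions."""
    jobs = [{"python": py, "env": dict(env)} for py, env in product(pythons, envs)]
    jobs = [job for job in jobs if job not in exclude]
    jobs.extend({"python": job["python"], "env": dict(job["env"])} for job in include)
    for job in jobs:
        for key, value in DEFAULTS.items():
            job["env"].setdefault(key, value)
    return jobs


if __name__ == "__main__":
    matrix = build_matrix(
        pythons=["3.4", "3.5"],
        envs=[{"_DJANGO": "1.9"}, {"_DJANGO": "1.10.4"}],
        exclude=[{"python": "3.4", "env": {"_DJANGO": "1.9"}}],
        include=[{"python": "3.5", "env": {"_COMMAND": "pylint"}}],
    )
    for job in matrix:
        print(job)
```

Because the defaults are filled in at run time, the regular jobs keep running make coverage without listing _COMMAND in every env entry, and the auxiliary pylint job does not need to pick a Django version explicitly.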

Thanks for reading and happy testing!


Overridden let() causes segfault with RSpec

Last week Anton asked me to take a look at one of his RSpec test suites. He was able to consistently reproduce a segfault which looked like this:

/home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:113: [BUG] vm_call_cfunc - cfp consistency error

ruby 2.3.2p217 (2016-11-15 revision 56796) [x86_64-linux]

-- Control frame information -----------------------------------------------

c:0013 p:---- s:0048 e:000047 CFUNC :map

c:0012 p:0011 s:0045 e:000044 BLOCK /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:113

c:0011 p:0035 s:0043 e:000042 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/configuration.rb:1835

c:0010 p:0011 s:0040 e:000039 BLOCK /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:112

c:0009 p:0018 s:0037 e:000036 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/reporter.rb:77

c:0008 p:0022 s:0033 e:000032 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:111

c:0007 p:0025 s:0028 e:000027 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:87

c:0006 p:0085 s:0023 e:000022 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:71

c:0005 p:0026 s:0016 e:000015 METHOD /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/lib/rspec/core/runner.rb:45

c:0004 p:0025 s:0012 e:000011 TOP /home/atodorov/.rbenv/versions/2.3.2/lib/ruby/gems/2.3.0/gems/rspec-core-3.5.4/exe/rspec:4 [FINISH]

c:0003 p:---- s:0010 e:000009 CFUNC :load

c:0002 p:0136 s:0006 E:001e10 EVAL /home/atodorov/.rbenv/versions/2.3.2/bin/rspec:22 [FINISH]

c:0001 p:0000 s:0002 E:0000a0 (none) [FINISH]

Googling for vm_call_cfunc - cfp consistency error yields Ruby #10460. Comments on the bug and particularly this one point towards the error:

> Ruby is trying to be nice about reporting the error; but in the end,

> your code is still broken if it overflows stack.

Somewhere in the test suite was a piece of code that was overflowing the stack. It was somewhere along the lines of

describe '#active_client_for_user' do

context 'matching an existing user' do

it_behaves_like 'manager authentication' do

include_examples 'active client for user with existing user'

end

end

end

Considering the examples in the bug I started looking for patterns where a variable was defined and later redefined, possibly circling back to the previous definition. Expanding the shared examples by hand transformed the code into

[Expanded code listing, lines 1-13, not preserved]

Line 5 overrode line 3. Because of lazy evaluation, line 4 was executed first and the call path became: 4-5-4-5-4-5 ... NOTE: I think we need a warning about that in RuboCop, see RuboCop #3769. The fix however is a no-brainer:

- let(:user) { create(:user, :manager) }

- let!(:api_user_authentication) { create(:user_authentication, user: user) }

+ let(:manager) { create(:user, :manager) }

+ let!(:api_user_authentication) { create(:user_authentication, user: manager) }
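The mechanism behind the overflow can be imitated outside of Ruby. The sketch below is a Python analogy, not RSpec code: LazyDefinitions is a hypothetical stand-in for let() and the override inside build is invented to show the cycle, since the original definitions are not reproduced here. Python reports the runaway recursion as a RecursionError instead of crashing the interpreter.

```python
class LazyDefinitions:
    """Tiny stand-in for let(): named, lazily evaluated, memoized blocks."""

    def __init__(self):
        self._blocks = {}
        self._cache = {}

    def let(self, name, block):
        # A later let() silently replaces an earlier one with the same name.
        self._blocks[name] = block

    def get(self, name):
        if name not in self._cache:
            self._cache[name] = self._blocks[name](self)
        return self._cache[name]


def build(override):
    """Define user and authentication, optionally overriding user afterwards."""
    defs = LazyDefinitions()
    defs.let("user", lambda d: "manager")
    defs.let("authentication", lambda d: {"user": d.get("user")})
    if override:
        # Hypothetical override which points back at authentication.
        defs.let("user", lambda d: d.get("authentication")["user"])
    return defs
```

Without the override, asking for authentication resolves user once and returns. With it, each definition asks for the other before either has been memoized, so the calls never bottom out.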

Thanks for reading and happy testing.


Highlights from ISTA and GTAC 2016

Another two weeks have passed and I'm blogging about another 2 conferences. This year both Innovations in Software Technologies and Automation and Google Test Automation Conference took place on the same dates. I was attending ISTA in Sofia during the day and watching the live stream of GTAC during the evenings. Here are some of the things that resonated with me:

- How can I build my software in order to make this a perfect day for the user?

- People are not the problem which causes bad software to exist. When designing software focus on what people need not on what technology is forcing them to do;

- You need to have blind trust in the people you work with because projects always look like they are not going to work until the very end!

- It's good to have a diverse set of characters in the company and not homogenize people;

- Team performance grows over time. Effective teams minimize time waste during bad periods. They (have and) extract value from conflicts!

- One-on-one meetings are usually like status reports which is bad. Both parties should bring their own issues to the table;

- To grow an effective team members need to do things together. For example pair programming, writing test scenarios, etc;

- When teams don't take actions after retrospective meetings it is usually a sign of missing foundational trust;

- QA engineers need to be present at every step of the software development life-cycle! This is something I teach my students and it has been confirmed by many friends in the industry;

- Agile is all about data, even when it comes to testing and quality. We need to decompose and measure iteratively;

- Agile is also about really skillful people. One way to boost your skills is to adopt the T-shaped specialist model;

- In agile iterative work and continuous delivery is king so QA engineers need to focus even more on visualization (I will also add documentation), refactoring and code reviews;

- Agile teams need to give team members opportunities for taking risks and taking ownership of their actions in the gray zone (e.g. actions for which it isn't clear who should be doing them).

In the brave new world of micro services end to end testing is no more! We test each level in isolation but keep stable contracts/APIs between levels. That way we can reduce the test burden and still guarantee an acceptable level of risk. This change in software architecture (monolithic vs. micro) leads to change in technologies (one framework/language vs. what's best for the task) which in turn leads to changes in testing approach and testing tools. This ultimately leads to changing people on the team because they now need different skills than when they were hired! This circles back to the T-shaped specialist model and the fact that QA should be integrated in every step of the way! Thanks to Denitsa Evtimova and Lyudmila Labova for this wisdom and the quote pictured above.

Aneta Petkova talked about monitoring of test results, which is a topic very close to my work. Imagine you have your automated test suite but still get failures occasionally. Are these bugs or did something else break? If they are bugs and you are waiting for them to be fixed, do you execute the tests against the still broken build or wait? If you do, what additional info do you get from these executions vs. how much time do you spend figuring out "oh, that's still not fixed" or "geez, here's another hidden bug in the code"?

Her team has modified their test execution framework (what I'd usually call a test runner or even test lab) to have knowledge about issues in JIRA and skip some tests when no meaningful information can be extracted from them. I have to point out that this approach may require a lot of maintenance in environments where you have failures due to infrastructure issues. This idea connects very nicely with the general idea behind this year's GTAC - don't run tests if you don't need to aka smart test execution!
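A minimal sketch of the idea, using Python's unittest, could look like the following. The issue keys, the OPEN_ISSUES set and the blocked_by decorator are all hypothetical; this is not the framework presented in the talk. A real implementation would query the bug tracker for unresolved issues instead of hard-coding them.

```python
import unittest

# Hypothetical snapshot of unresolved issue keys; a real framework would
# query the bug tracker instead of hard-coding them.
OPEN_ISSUES = {"PROJ-101"}


def blocked_by(issue_key, open_issues=OPEN_ISSUES):
    """Skip the decorated test while the linked issue is still open."""
    return unittest.skipIf(
        issue_key in open_issues, f"blocked by open issue {issue_key}"
    )


class CheckoutTests(unittest.TestCase):
    @blocked_by("PROJ-101")
    def test_known_broken_feature(self):
        self.fail("would fail until PROJ-101 is fixed")

    @blocked_by("PROJ-999")
    def test_unaffected_feature(self):
        self.assertEqual(1 + 1, 2)


if __name__ == "__main__":
    unittest.main()
```

The skipped test still shows up in the report together with the issue key, so it is not forgotten once the issue gets resolved.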

Boris Prikhodskiy shared a very simple rule. Don't execute tests

- which have 100 % pass rate;

- during the last month;

- and have been executed at least 100 times before that!

This is what Unity does for their numerous topic branches and it reduces test time by 60-70 percent. The entire test suite is still executed against their trunk branch and PR merge queue branches!
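The rule is simple enough to sketch in a few lines. This is only my reading of it, not Unity's code: I assume "the last month" means the last 30 days, that the 100 executions are counted before that window, and that the run history is available as `(run_date, passed)` tuples. The function and parameter names are made up.

```python
from datetime import date, timedelta


def can_skip(history, today, window_days=30, min_prior_runs=100):
    """Decide whether a test can be skipped on a topic branch.

    history: list of (run_date, passed) tuples for a single test.
    """
    cutoff = today - timedelta(days=window_days)
    # Results inside the recent window.
    recent = [passed for run_date, passed in history if run_date >= cutoff]
    # Number of executions before the window started.
    prior_runs = sum(1 for run_date, _ in history if run_date < cutoff)
    # Skip only when there are recent runs, all of them passed,
    # and the test has enough history before the window.
    return bool(recent) and all(recent) and prior_runs >= min_prior_runs
```

A test with no recent runs is never skipped here, which is a deliberately conservative choice on my part.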

At GTAC there were several presentations about speeding up test execution time. Emanuil Slavov was very practical but the most important thing he said was that a fast test suite is the result of many conscious actions which introduced small improvements over time. His team had assigned themselves the task to iteratively improve their test suite performance and at every step of the way they analyzed the existing bottlenecks and experimented with possible solutions.

The steps in particular are (on a single machine):

- Execute tests in dedicated environment;

- Start with an empty database, not used by anything else. This also leads to adjustments in your test suite architecture and DB setup procedures;

- Simulate and stub external dependencies like 3rd party services;

- Move to containers but beware of slow disk I/O;

- Run database in memory not on disk because it is a temporary DB anyway;

- Don't clean test data, just trash the entire DB once you're done;

- Execute tests in parallel which should be the last thing to do!

- Equalize workload between parallel threads for optimal performance;

- Upgrade the hardware (RAM, CPU) aka vertical scaling;

- Add horizontal scaling (probably with a messaging layer);
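Equalizing the workload between parallel threads is the step that is easiest to show in code. Below is a minimal sketch using a greedy heuristic (longest test first, always assigned to the least loaded worker). It is not what Emanuil's team uses as far as I know, the names are hypothetical, and the heuristic does not always find the perfect split.

```python
import heapq


def balance(durations, workers):
    """Distribute tests between workers based on their known durations.

    durations: dict mapping test name -> duration in seconds.
    Returns a list of (total_seconds, [test names]) with one entry per worker.
    """
    # Heap entries are (current load, worker index, assigned tests).
    # The unique worker index guarantees the lists are never compared.
    heap = [(0.0, index, []) for index in range(workers)]
    heapq.heapify(heap)
    # Longest tests first, each one goes to the least loaded worker.
    for name, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        total, index, bucket = heapq.heappop(heap)
        bucket.append(name)
        heapq.heappush(heap, (total + seconds, index, bucket))
    return [(total, bucket) for total, _, bucket in sorted(heap, key=lambda e: e[1])]
```

For example `balance({"a": 5, "b": 4, "c": 3, "d": 2}, 2)` puts `a` and `d` on the first worker and `b` and `c` on the second, 7 seconds each.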

John Micco and Atif Memon talked about flaky tests at Google:

- 84% of the transitions from PASS to FAIL are flakes;

- Almost 16% of their 3.5 million tests have some level of flakiness;

- Flaky failures frequently block and delay releases;

- Google spends between 2% and 16% of their CI compute resources re-running flaky tests;

- Flakiness insertion speed is comparable to flakiness removal speed!

- The optimal setting is 2 persons modifying the same source file at the same time. This leads to minimal chance of breaking stuff;

- Fix or delete flaky tests because you don't get meaningful value out of them.

So Google wants to skip a test before it is executed if historical data shows that the test has attributes of flakiness. The research they talk about utilizes tons of data collected from Google's CI environment, which was the most interesting fact for me. Indeed if we use data to decide which features to build for our customers then why not use data to govern the process of testing? In addition to the video you should read John's post Flaky Tests at Google and How We Mitigate Them.
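Google's research is far more sophisticated than anything I can show here, but the basic signal is easy to illustrate: a test which both passes and fails against the same revision of the code is flaky. The sketch below assumes the CI history is available as `(test_name, revision, passed)` records; the record layout and the function name are my own invention.

```python
from collections import defaultdict


def flaky_tests(runs):
    """Return the names of tests with mixed results on the same revision.

    runs: iterable of (test_name, revision, passed) records.
    """
    outcomes = defaultdict(set)
    for test_name, revision, passed in runs:
        outcomes[(test_name, revision)].add(passed)
    # Both True and False seen for the same test and revision means flaky.
    return sorted({name for (name, _), seen in outcomes.items() if len(seen) == 2})
```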

At the end I'd like to finish with Rahul Gopinath's Code Coverage is a Strong Predictor of Test suite Effectiveness in the Real World. He basically said that code coverage metrics as we know them today are still the best practical indicator of how good a test suite is. He argues that mutation testing is slow and only provides an additional 4% to a well designed test suite. This is the exact opposite of what Laura Inozemtseva presented last year in her Coverage is Not Strongly Correlated with Test Suite Effectiveness lightning talk. Rahul also made a point about sample size in the two research papers and I had the impression he was saying Laura didn't do proper academic research.

I'm a heavy contributor to Cosmic Ray, the mutation testing tool for Python and also use mutation testing in my daily job so this is a very interesting topic indeed. I've asked fellow tool authors to have a look at both presentations and share their opinions. I also have an idea about a practical experiment to see if full branch coverage and full mutation coverage will be able to find a known bug in a piece of software I wrote. I will be writing about this experiment next week so stay tuned.

Thanks for reading and happy testing!

Women In Open Source

It's been 2 weeks since OpenFest 2016 and I promised to blog about what happened during the Women in Open Source presentation, which is the only talk I attended.

The presenters were Jona Azizaj, whom I met at FOSDEM earlier this year, Suela Palushi and Kristi Progri, all 3 from Albania. I went to OpenFest specifically to meet them and listen to their presentation.

They started by explaining their background and telling us more about their respective communities, Fedora Women, WoMoz, GNOME Outreach and the Open Labs hackerspace in Tirana. The girls gave some stats on how many women there are in the larger FOSS community and what some newcomers' first impressions could be.

Did you know that in 2002 1.1% of all FOSS participants were female, while in 2013 that was 11%? A 10x increase, but of them only 1.5% are developers.

The presentation was a nice overview of different opportunities to get involved in open source geared towards women. I specifically asked the girls how they first came to join open source. In general they've had a good and welcoming community around them which made it natural to join and thrive.

Now comes the sad part. Instead of welcoming and supporting these girls for standing up to talk about their experiences the audience did the opposite. In particular Maya Milusheva from Plushie Games made a very passionate claim against the topic of women in tech. It went like this:

- I am a woman

- I am a good developer

- I am a mother

- I am a CEO of a successful IT company

- when I hire I want the best people for my company and they are men

- women simply don't have the required tech skills/level of expertise

- the whole talk about women in open source/diversity is bullshit

- girls need to sit down on their asses and read more, code more, etc.

In terms of success I think I can compare to Maya. I also have a small child, whom I regularly take to conferences with me (the badges above). I also have an IT company which generates a comparable amount of income. I also want to hire the best employees for any given project I'm working on. Sometimes that happens to be a woman, sometimes not. The point here however was not about hiring more women per se. It was about giving opportunities in the communities and letting people grow for themselves.

**Yes, she has a point, but there's something WRONG in coming to listen to a presenter just to tell them they are full of shit! It's very arrogant to shout and argue a point about hiring when in fact the entire presentation was not about hiring! It is totally unacceptable to have The NY Times writing about the apps you develop and to behave like an asshole at public events at the same time!**

I've been there, the crowd telling me I'm full of shit when I've been presenting about new technologies. I've been there being told that my ideas will not work in this or that way, while in fact the very idea of trying and considering a completely different technological approach was what counted. And finally I've been there years later when the same ideas and technologies have become mainstream and the same crowd was now talking about them!

After Maya there was another person who grabbed the microphone and continued to talk nonsense. Unfortunately he didn't state his name and I don't know the guy in person. What he said was along the lines of little boys play with robots, little girls play with dolls. They like it this way and that's why girls don't get involved in the technical field. Also if a girl plays with robots she will be called a tomboy and people will generally have a negative attitude towards her.

It's absolutely clear this guy has no idea what he's talking about. Everyone who has small children around them will agree that they are born with equal mental capacity. It is up to the environment, parents, teachers, etc. to shape this capacity in a positive way. I've seen children who taught themselves to speak English from YouTube and children the same age barely speaking Bulgarian. I've seen children who are curious about the world and how it works and children who can't wipe their own noses. It's not because they like it that way, it's because of their parents and the environment they live in.

Finally I'd like to respond to this guy (I was specifically motioned at the conference not to respond) with this:

I have a 5 year old girl. She likes robots as much as she likes dolls. She works with Linux and is lucky enough to have one of the two OLPC laptops in Bulgaria. She plays SuperTux and has already found a bug in it (I've reported it). She's been to several Linux and IT conferences as you can see from the picture above. She likes being taken to hackathons and learning about inspiring stuff that students are doing.

Are you telling me that because we have the wrong idea that women can't be good at technology, she can't become a successful engineer? Are you telling me to basically scratch the next 10 years of her life and tell her she can't become what she wants? Because if you do I say FUCK OFF!

Even I as a parent don't have the power to tell my child what they can do or not do, what they can accomplish or not. My job is to show them the various possibilities that exist and guide and support them along the way they want to go! This is what we as a society also need to do for everyone else!

To wrap up I will tell you about a psychological experiment we devised with Jona and Suela. I proposed to find a male and a female student in Tirana and have them pose online as somebody of the opposite sex, fake accounts and all. They proposed having the same person act as both male and female in order to even out the difference in tech skills. The goal is to see how the tech community reacts to their contributions and try to measure how much their gender being known affects their performance! I hope the girls will find a way to perform this experiment together with the university Psychology department and share the results with us.

Btw I will be visiting OSCAL'17 to check up on that so see you in Albania!

IT Weekend Highlights

Last weekend I attended the third IT Weekend. It's like a training camp for athletes but for QA engineers. While at the first edition the crowd was mostly junior to mid level, this time it was mostly senior level, which made for better talks and discussions. The most interesting sessions for me were On-boarding of New Team Members by Nikola Naidenov and Agile Leadership by Bogoi Bogdanov. Here are some of the highlights that I wrote down:

- On-boarding of new team members is very important. You need to have a plan for their first 6 months at the company. This plan needs to have clearly defined tasks and expectations;

- When a person becomes productive for their team/company it means they have been on-boarded successfully;

- A company provided trainer is a good thing but they tend to focus on broader knowledge, they don't cover team specific domain knowledge;

- Some companies provide both technical and business trainers for their teams;

- It is very important to get timely feedback when you are the one providing training. However feedback isn't always easy to get and we don't always receive sincere feedback;

- If the team is swamped with work tasks you need to provide 10-20% of the time for learning and experimenting with new technologies. IMO this is best done by filing tickets in your bug/task tracking system and prioritizing them together with the rest of the tasks;

- It is also important to have an individual training plan for each team member and review this on a regular basis;

- We should strive to use unified terminology and jargon so as not to confuse people. IMO it is usually the new hires who are likely to get confused because they are not familiar with the history of the terms used.

In an agile environment we calculate productivity using the formula

Productivity = Effort * Competence * Environment * Motivation^2
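The interesting part of the formula is the squared term. Here is a tiny worked example, assuming (my assumption, the talk didn't specify a scale) that every factor is a number between 0 and 1:

```python
def productivity(effort, competence, environment, motivation):
    """Productivity = Effort * Competence * Environment * Motivation^2."""
    return effort * competence * environment * motivation ** 2


# With everything else at its maximum, halving motivation
# cuts productivity to a quarter, not to a half.
full = productivity(1.0, 1.0, 1.0, 1.0)
half_motivated = productivity(1.0, 1.0, 1.0, 0.5)
```

In other words, a drop in motivation hurts more than an equal drop in any of the other factors.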

There are 3 important factors that drive motivation

- to have a Purpose

- to feel Autonomy

- to be able to achieve Mastery in your skills

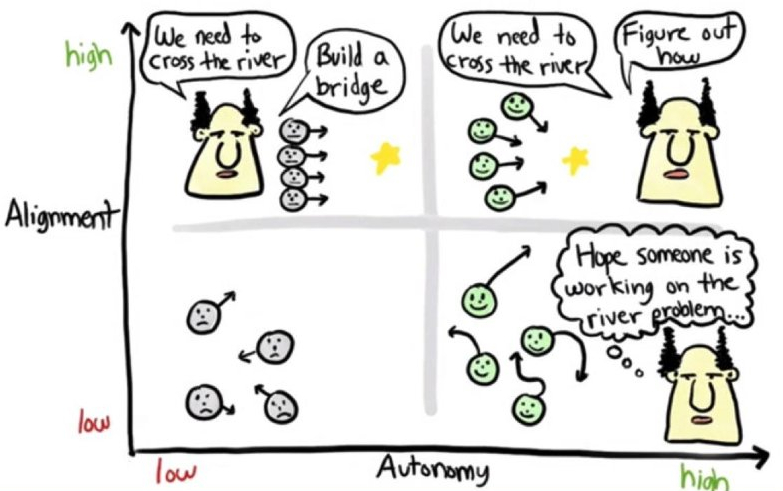

It is also important to note that autonomy is not the opposite of alignment as depicted by the image below.

In an agile environment control is a function of trust. To be able to trust people we need to give them autonomy, transparency and a short feedback loop!

- Manage for the normal, treat exceptions as exceptional;

- Failure recovery is more important than failure avoidance;

- Fail fast means learning fast and improving fast.

Thanks for reading and happy testing!

Photo credit: Rayna Stankova

Animate & Automate

Recently I attended a presentation by MentorMate where they talked about testing CSS animations (video in Bulgarian). The software under test was an ad tech SDK which provides CSS based animations to mobile apps and games. The content is displayed inside a webview and they had to make sure animations were working correctly on different OSes and devices.

Analyzing the content (aka getting to know the domain) they figured out in reality there were about 20 basic movements and transformations. So the problem was reduced to "How do we test these 20 basic movements under various OSes and devices" or "How do we verify that basic CSS transformations are supported under different versions and flavors of the OS"?

Their test bed included hand crafted web pages with each basic movement and then several ones with more complex animations (aka integration testing). The idea was to load these pages under different devices and inspect whether or not the animations were visualized properly.

A test script (aka their testing framework) was constantly recording the coordinates of the elements under test to verify that they were really animated. The idea was to use a sample rate of 20ms and expect at least 20 different changes to the element under test. Coordinates along with color and gradient were recorded and then returned and analyzed to report a PASS or FAIL result.
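The analysis step can be sketched in a few lines. This is not MentorMate's code, just my illustration of the check described above: it assumes the samples arrive as a list of recorded states (for example `(x, y, color)` tuples, one every 20ms) and the function name is made up.

```python
def is_animated(samples, min_changes=20):
    """Report PASS (True) if the element changed often enough.

    samples: list of recorded states, e.g. (x, y, color) tuples,
    taken at a fixed sample rate.
    """
    # Count how many consecutive samples differ from each other.
    changes = sum(1 for prev, cur in zip(samples, samples[1:]) if cur != prev)
    return changes >= min_changes
```

Note that this only counts changes, it says nothing about where on the screen the element ends up.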

This simplistic framework has limitations of course. It is not currently checking the boundaries of where the elements are rendered on the screen. Thus if everything else works as expected this will be a false positive result. On their slides this can be seen at 23:10.

As a side note the entire effort took about 2 days, including research and preparing the test content.

I really like the back to basics approach here and the simplistic framework that MentorMate came up with. Sure it misses some problems but for that particular case it is good enough, easy and fast to implement.

The Passionate Doer

Two weeks ago I visited Startup Factory Ruse and had the opportunity to attend The Passionate Doer – Lessons Learned where Yana Petrova shared her journey with CoKitchen in the past 2 years. The event name comes from The Passionate Programmer book.

A doer is somebody who acts and strives to make changes. When you jump into something new it looks hard and scary at first. With time the important and difficult tasks begin to look like ordinary ones because you gain the experience required for them.

When you are a start-up company you have a limited amount of time and finance to make a breakthrough. Thus it is important to hire the right people, but Yana argues it is more important to quickly dismiss the people who don't fit into your organization. I quite agree with her and the following paragraphs are centered around this idea.

An interesting point Yana makes is about negative reactions and lack of motivation in employees. When a person is lacking motivation they have trouble making logical connections between various tasks and are not able to see the big picture or how their actions or lack thereof affect everything else in the organization. She thinks these are most likely due to problems in our private lives instead of problems at work and as managers we should seek to understand what triggers these negative effects.

Yana believes fatigue makes us vulnerable to negative thoughts so it is best to make important decisions after you've had a good rest. In similar fashion her way to deal with a non-motivated employee is to give them a short break. Then she asks whether or not the employee is ready to return to work and invest 100% into the job. If not then both parties say goodbye to each other. Yana also says that for most problematic employees she's seen it hasn't been worth it to bring them back and try to improve them.

I've asked Yana if she had some sort of test to keep track of how well a person performs their job. She didn't quite answer but an indicator for under-performance to her is how busy the rest of the team is. Unwillingness to take corrective action, e.g. explore new ways of doing things, acquire more skills or read particular books which will help improve on areas she'd identified, is also a good indicator that the person will have a tendency to under-perform.

Yana says she wouldn't keep an employee who defines their own boundaries and doesn't want to expand them because that person will not go out of their comfort zone. This leaves everyone else tiptoeing around that employee and having to do the job they can't, which puts more stress on the team.

We should adopt the same approach towards customers as well. Get rid of rude and angry customers so that the work flows with less stress.

Yana has been part of many volunteer efforts and her mistake was that she expected everyone to have the same volunteering spirit that she has. In reality it turned out people had trouble managing their own time or lacked the proper communication skills. Not taking responsibility for your actions and not learning from mistakes are other traits she noticed.

At CoKitchen they aim at pairing completely different people with one another so that everyone is able to learn the most from the other person. This is part of their internal mentorship program.

Another interesting book mentioned by a member of the audience was Drive: The Surprising Truth About What Motivates Us. The book reveals the three elements of true motivation:

- AUTONOMY - the desire to direct our own lives;

- MASTERY - the urge to get better and better at something that matters;

- PURPOSE - the yearning to do what we do in the service of something larger than ourselves.

Sounds like an interesting book (from the point of view of the employer) which is definitely going into my reading list.

Sorry if my notes are a bit terse this time, it's been a busy month. I still hope you learned something new from this post. Thanks for reading!

Image credit: Omar Ismail

Updated MacBook Air Drivers for RHEL 7.3

Today I rebuilt the wifi and backlight drivers for MacBook Air against the upcoming Red Hat Enterprise Linux 7.3 kernel. wl-kmod again needed a small patch before it could be compiled. mba6x_bl has been updated to the latest upstream and compiled without errors. The current RPM versions are

akmod-wl-6.30.223.248-9.el7.x86_64.rpm

kmod-wl-3.10.0-513.el7.x86_64-6.30.223.248-9.el7.x86_64.rpm

kmod-wl-6.30.223.248-9.el7.x86_64.rpm

wl-kmod-debuginfo-6.30.223.248-9.el7.x86_64.rpm

kmod-mba6x_bl-20161018.d05c125-1.el7.x86_64.rpm

kmod-mba6x_bl-3.10.0-513.el7.x86_64-20161018.d05c125-1.el7.x86_64.rpm

mba6x_bl-common-20161018.d05c125-1.el7.x86_64.rpm

and they seem to work fine for me. Let me know if you have any issues after RHEL 7.3 comes out officially.

PS: The bcwc_pcie driver for the video camera appears to be ready for general use, despite some issues. No promises here but I'll try to compile that one as well and provide it in my MacBook Air RHEL 7 repository.

PS2: Sometime after Sept 14th I probably upgraded my system and now it can't detect external displays if the display is not plugged in during boot. I'm seeing the following

# cat /sys/class/drm/card0-DP-1/enabled

disabled

which appears to be the same issue reported on the ArchLinux forum. I'm in a hurry to resolve this and any help is welcome.

The 4 Basic Communication Styles

The first GEM Conference in Bulgaria took place on Monday and Tuesday. I missed most of the sessions due to other meetings and tasks but managed to attend a workshop on the topic How to harness the power of influence and communication in entrepreneurship by Plamen Popov and Yassar Markos.

3 Fundamentals for Communication

According to Plamen and Yassar there are 3 key fundamentals for any kind of communication:

- Willingness to pay the price for this communication to happen;

- Flexibility because we need to try different approaches until we reach the desired person in a way they can understand what we're saying;

- Integrity because we always need to be consistent in what we deliver to others so that we always match their expectations.

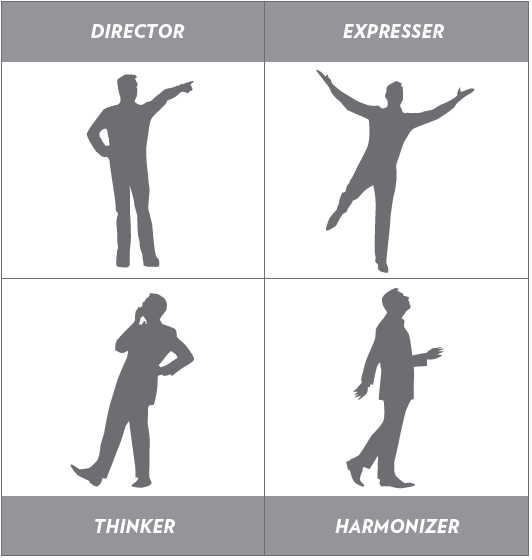

4 Communication Styles

Then there are 4 basic communication styles based on how formal or informal the communication is and how passive or aggressive it is.

- Director aka dominant style - they exhibit formal and aggressive communication. Key words for them are results, effectiveness, no small talk, to the point. They dislike wasting their time because they always have something better and more interesting to do. This is why people in this group are the worst listeners. They love conflicts and seek ideas and results.

- Expresser aka promoting style - they are dominant/aggressive but informal. They are loud, always speak about themselves, they want public attention and to be unique. They forget details but instead are able to grasp the big picture quite easily. They have lots of energy and passion and love to start new projects but don't complete them.

- Harmonizer aka supportive style - they exhibit informal and passive communication. They love connections and relationships, they use soft language and look after people and team mates. They don't like conflicts and competitive games. People from this style get impressed when you share personal stories and details with them.

- Thinker aka analytical style - they are formal but non-aggressive. They like to know all the facts and always ask lots of questions because they need to understand the big picture. They need time to understand before they can make a decision. In their lives everything has a particular place. They are prone to postponing tasks until they reach perfection (which they never will).

A person typically has one dominant style and a mix of one or two others. I myself am mostly Director and Expresser aka dominant and promoting with a pinch of Thinker. I mostly lack the Harmonizer traits. However it is more important to know what style the person you are talking to is, not what your personal style is.

When judging the style of others take into account where you stand. A person who is strongly formal and aggressive will view a less formal and mild person as the Expresser or Harmonizer style. However to a 3rd party both of these two persons may appear to be dominated by the Director style.

How to Make a Good Presentation

When presenting to a single person or a small group obviously you have to tailor your presentation according to their communication styles. However when presenting before a large and diverse crowd you need to account for all of them in the following order:

- Capture the Expresser or promoting style by giving them a promise for something cool and interesting. Because they easily forget details and become distracted you can lose them first.

- Then capture the Director or dominant style by asserting your authority. State your expertise in the field to establish trust. Also sell them the idea for efficiency because this is the value they need to extract from you.

- Third comes the Harmonizer or supportive style. They seek open people to connect with. Personal stories and struggles are the best way to engage them.

- Last comes the Thinker or analytical style. They need to know all the information so you have to give them links and materials for further reading. It is also a good idea to give them the ability to ask you questions later (via email, chat, etc) because they will analyze your ideas and come up with more questions on their own.

I have already started to organize my presentations based on the above 4 key points so hopefully you will see me delivering better talks in the future.

What I Learned from IT Weekend

Last week I attended the first IT Weekend in Bulgaria. It's like a training camp for athletes but for QA engineers. There were 20 people attending and the format was very friendly and relaxed. The group had members with various levels of experience and technical skills, working in different areas. All presentations are on YouTube. Here's a brief summary of what happened and what I learned.

I had the honor to present in the first slot and gave a quick introduction to mutation testing. This was my first time giving this talk and I'm not entirely happy with how I presented it. Also mutation testing touches a lot on unit tests, programming and source code, which in some organizations belong to the devel department. I think mutation testing is harder to understand for people not familiar with it than I initially thought. I'm taking note to improve the way I present this topic to the public.

Yavor Donev gave a good overview of Appium and how to use it on Android. The most important question for me was "Is it possible to utilize the same test suite on Android and iOS, given that the environments are different". With this I mean regardless of how much we try to make the same (native) app on both platforms it will end up differently because the platforms are essentially different. For example there is a different number of physical buttons available.

If we assume that both iOS and Android apps follow the same design and use similar workflow then it should be possible to create a test suite which is platform aware and account for the minor differences. We're also adding another layer of complexity by introducing the requirement that both apps stay in sync with each other and account for the quirks of the foreign platform. Depending on your apps and goals this may not be an easy task!

The one thing I didn't like about Appium is that upstream doesn't care much about version compatibility and they tend to break and change stuff arbitrarily between releases. That said, if it works, don't update it, or otherwise be extremely careful.

I also had a nice chat with Yavor on the topic of career change, learning to program and working with people who have very little coding experience. His approach is to develop a higher level test framework on top of Appium which his team mates can use more easily.

Aneta Petkova's Selenium Grid in Unix Environment is a bit out of my domain. However I took one important lesson: regardless of how great your tools are there are minor details which can make or break your day. In her case these are the physical location of the tests (e.g. which Selenium node runs them) and access to shared resources. Turns out WebDriver doesn't give you this information directly and you need to jump through hoops to get it. Her solution was to place the test code on the Grid Hub and provide a shared file system.

The bigger lesson is: whenever you have to design an automated test environment (aka test lab) make sure to evaluate your needs beforehand.

The last talk was a guest appearance by Denitsa Evtimova. She is a QA architect with 16 years of experience and presented the QA strategy at Paysafe group. They have a large monolithic system (legacy code) and have adopted a pyramid style approach to testing. Whenever possible tests are brought down to the lowest level (e.g. unit tests) and not repeated on the higher levels. At the top stands manual testing. Teams are small: 3-4 developers and 2-3 QAs. It is the team's responsibility to make sure tests are implemented at the lowest possible level. The process is not strictly enforced and the company relies more on self governance in this aspect. Also everyone on the team can contribute additional tests whenever they see something missing. Test (writing) tasks are all logged in JIRA. They are also small so that everything can be completed in the same day.

The second day was more informal. We did a quick exploratory testing exercise and shared opinions on different test tools. Then the group had a discussion about soft skills and how QA engineers can change the perception of developers about the QA profession (especially in teams where there are many manual testers). The key points are:

- Criticize the software, not the person, e.g. don't blame the person directly;

- Communicate with concrete facts and data, not emotions and perceptions;

- Jokes of the type "how many QA engineers are needed to screw a light bulb" are a problem because they lead to underestimation of the job role;

- Sometimes it is not quite clear (to others) how the QA role contributes to the development of the product and the organization;

- For a QA it is important to be able to give a non-biased opinion and observations on what is happening with the product/process;

- A QA person needs to be very calm. They have to be able to listen to everybody (especially developers) and accept their point of view but at the same time also communicate their own point of view;

- It is important to sit together with developers and observe the problem, brainstorm and propose possible solutions. This also creates a feedback loop where the developer feels empowered because he's part of the process identifying the problem and proposing the best solution;

- In agile teams it is a good idea to rotate people between developer and QA positions. This will help them better understand the job of others, acquire new skills and also bring fresh thinking to the team;

- Quality Assurance is an ungrateful job and only people with very calm and methodical thinking (to follow through and write all possible scenarios) are able to excel in this field. On the other hand developers usually think about the happy path scenarios and strive to make their code work as best as they can;

- By rotating job roles within the team developers will quickly find out that testing is not their field and gain respect towards their QA peers;

- US managers have the habit of saying "good job" to everyone, even for small and routine tasks. In Bulgaria (and maybe elsewhere) we're not used to this. Instead we're used to being scolded when we do something wrong. If everything is good then we don't receive any recognition;

- Using the American "good job" is actually a good thing. Team mates will start performing better over time because they will feel their work is valued and not meaningless, they will feel recognized which will boost morale and productivity.

Thanks for reading and happy testing!

Peter Sabev on Test Automation

Last week Peter Sabev gave his talk "On Reporting Bugs: Errors Made and Lessons learned" for DEV.bg (watch in Bulgarian). At the end of the talk there was a quick question about how he would approach automation. I have always approached automation in terms of manpower and skills available within the team while he proposed an approach based on return on investment.

Given that you have a team with strong understanding of the software (code) under test and they have good coding skills then start with the hardest test cases first. This way the team will have lots of hard work upfront and there will be some lead time without visible results. However when the hardest/most complex test cases are already automated you will most likely have covered a big portion of the SUT.

On the contrary, when you start with the easiest test cases first then the team will progress gradually and have enough time to get to grips with the SUT. You are also more likely to see more regressions or bugs missed. With this approach every subsequent automated test will be harder to write and more complex than the previous one. This is a good fit for teams that don't have strong experience with test automation and/or are unfamiliar with the product.

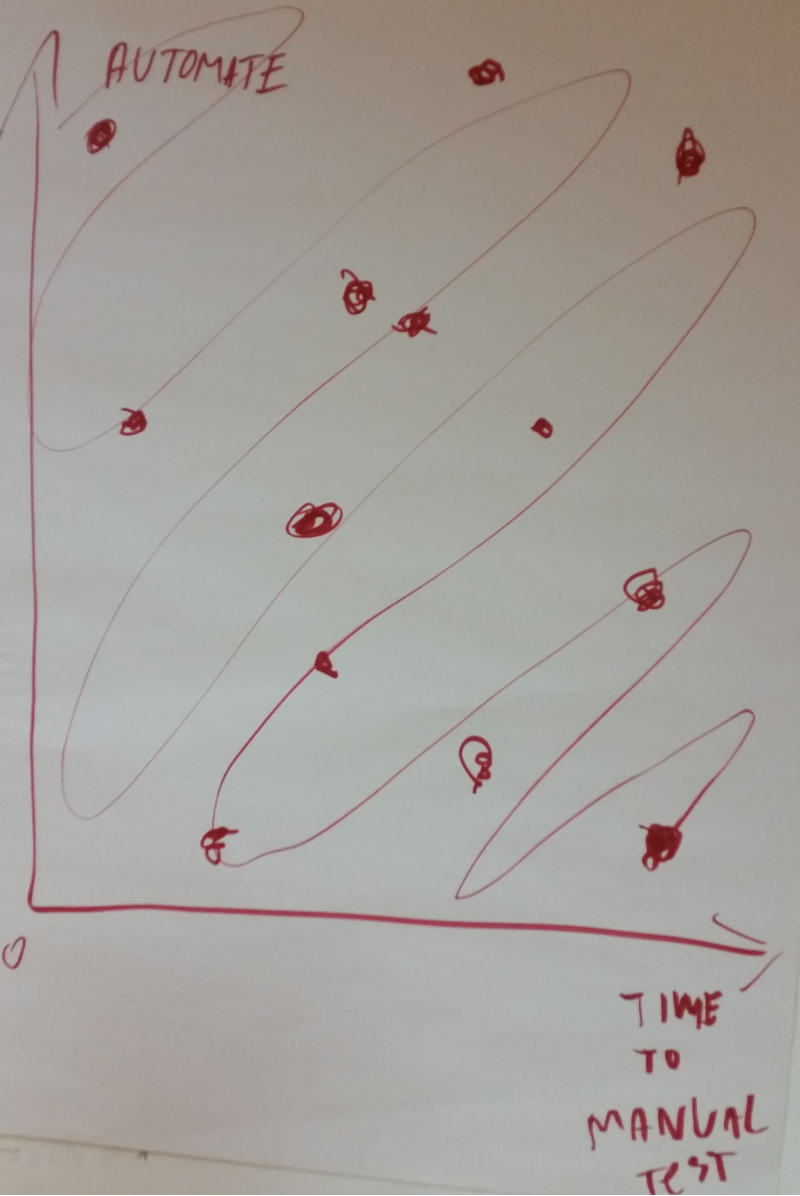

Peter proposes a different approach. He plots the test cases as dots, based on how much time they take to execute manually and how hard it is to automate the particular case. Then you start to move from the lower right corner towards the upper left corner in a weaving motion, like a snake.

His argument is that once you automate the test cases which are not very complex but require lots of time to execute by hand then you free up resources within the team. As you progress up the chart the test cases become harder to automate and yield less return on investment because they don't take so much time to execute manually.
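To make the idea more concrete, here is a minimal sketch of how such an ordering could be computed. It is my own interpretation, not Peter's implementation. I assume the horizontal axis is manual execution time and the vertical axis is an automation effort score, and that the "weaving" can be approximated by slicing the chart into vertical bands, starting from the most time-consuming cases, and alternating the direction of travel inside each band. The names `ManualCase`, `snake_order` and `band_count`, as well as the sample data, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManualCase:
    name: str
    manual_minutes: float   # time to execute by hand (assumed x axis)
    automation_effort: int  # made-up hardness score (assumed y axis)


def snake_order(cases, band_count=3):
    """Order cases from the lower right towards the upper left of the chart.

    Assumption: the chart is cut into `band_count` vertical bands by manual
    execution time and the direction of travel flips in every band.
    """
    if not cases or band_count < 1:
        return []
    # Right side of the chart first: the longest manual executions.
    by_manual = sorted(cases, key=lambda c: c.manual_minutes, reverse=True)
    band_size = -(-len(by_manual) // band_count)  # ceiling division
    ordered = []
    for start in range(0, len(by_manual), band_size):
        band = by_manual[start:start + band_size]
        # Even bands go easy -> hard, odd bands go hard -> easy.
        going_up = (start // band_size) % 2 == 0
        band.sort(key=lambda c: c.automation_effort, reverse=not going_up)
        ordered.extend(band)
    return ordered


# Hypothetical sample data, only for illustration.
cases = [
    ManualCase("full-install", 60, 7),
    ManualCase("upgrade-path", 50, 2),
    ManualCase("report-export", 30, 3),
    ManualCase("ldap-login", 25, 9),
    ManualCase("about-dialog", 5, 4),
    ManualCase("locale-switch", 2, 10),
]
plan = [c.name for c in snake_order(cases)]
print(plan)
```

With the sample data the plan starts with the long-running but easy to automate `upgrade-path` and ends with the quick but hard `locale-switch`. The number of bands controls how tightly the snake weaves and is something a team would have to tune for itself.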

For more information about Peter's approach please read his article.

As you can see from the snake chart the team constantly faces test scenarios jumping up and down on the automation hardness scale, which also means that you need to have the suitable skills within the team. IMO this is best suited for teams where each member has a different degree of experience. I'm also in favor of using the snake chart as a tool to distribute automation tasks within the team.

If you'd like to hear more about Peter's and my views on manual vs. automated testing be sure to follow DEV.bg. We are going to host a discussion on October 18th so stay tuned!


What I Learned from EuRuKo 2016

As my frequent readers may know I try to summarize all the conferences and events I go to. This year's EuRuKo inspired me to take a different approach and instead of quickly summarizing the event I will try to highlight what I have learned from it! My intention is to use this as a tool to improve my skills and the work I do. It will probably be a long post so here we go.

Let me say that I don't consider myself a Ruby developer although I do write a small amount of Ruby code. I also don't really consider myself a developer although I have a formal degree in software engineering and do my fair share of open source contributions.

Being different and thinking differently has always been helpful to me in Quality Assurance and this time was no exception. Attending a conference I knew nothing about and meeting with people whose job is totally different than mine turned out to be my greatest experience on the conference circuit this year.

Lesson 1

Get out of the comfort zone, meet new people, exchange ideas and learn! The very fact that I am writing this post not following my usual summary style proves this is working.

Very early during the event I started to notice a recurring theme which grew stronger by the minute. The Ruby community is very open and inclusive to newcomers and they seem to be doing a very good job of on-boarding everyone who wants to learn. I already wrote about Ivan Nemytchenko's experience of organizing remote internships and there are also the Rails Girls local communities, the Rails Girls Summer of Code (didn't know about it) and the various local Ruby communities who pitched their cities to host the next EuRuKo. I really loved this feeling of community. In the broader Linux, Python and QA world I have not seen this being so pronounced.

Lesson 2

Open up (the open source) community even more. Make it easier for newcomers to join! Treat them as humans and don't expect them to be like yourself. Do teach and mentor, both to help newcomers and to help yourself become better!

This is mostly on par with my community work but I think I can do better. I will take the time to evaluate what I've been doing in the past and identify areas for improvement. I also encourage my readers and students to send me feedback.

I've also learned that junior developers can make meaningful contributions to production grade code when they are given the appropriate set of tasks and guidance. Stephanie Nemeth argued that companies should hire (more) enthusiastic career changers as junior developers because they have very strong motivation for success.

Lesson 3

Re-evaluate how we look at junior developers, especially how we examine and hire them and how we on-board them.

Both lessons 2 and 3 are valid in the open source world and even more so in the corporate world.

I also liked the fact that some of the lightning talks were given by people who had no previous experience in Ruby. @TeamJoda2016 talked about what they did and learned throughout the summer and really cracked the room up with their "oh and btw we are looking for a job" as their final slide!

Lesson 4

If you are new/inexperienced at something don't be afraid to try it out. Give it the best you've got and see how it goes. Worst case .... well nothing bad really happens, best case you end up doing the best job in your life. That's also been my personal experience with software testing.

Carina C. Zona's Consequences Of An Insightful Algorithm (old video here) dealt with the ethical responsibilities of us as developers and this is becoming more common with deep learning neural networks.

Lesson 5

We’re able to extract remarkably precise intuitions about an individual. But do we have a right to know what they didn’t consent to share, even when they willingly shared the data that leads us there?

Krissy's The HTT(Pancake) Request drew a great analogy between consuming APIs and your customer experience when visiting a restaurant.

Lesson 6

Design APIs (software in general) as if they were a physical product where your customers' happiness matters. We see poorly designed APIs all the time in our daily jobs and we're guilty of producing them as well. Btw at the moment I'm in the middle of a huge refactoring of django-chartit which breaks all backwards compatibility. I guess I will have to re-evaluate my design and approach.

By accident I've made good friends with Alex Georgiev and the folks at Fyber. I liked the fact that at the conference they had a couple of people working in QA and we managed to have a nice talk about QA vs developers and the transformation between the two. That also touched on the bigger subject of testers not being able to code and testers not being available for hire.

Lesson 7

Driving people to improve their skills (learn to code, write tests, etc) is possible but needs to come from management, needs clear direction and also a little bit of peer pressure.

After all isn't that what an agile team is supposed to be?

Now, being the able-to-code, not-entirely-Ruby-ignorant QA guy that I am, I was immediately offered several positions in London and Berlin (and no, I'm still staying in Sofia). As it turned out good QA engineers with good development skills are in greater demand than developers not only in Sofia but all around the world!

Lesson 8

Fellow QA guys, please do learn to program. Dear developers, please try thinking more like a tester the next time you write code (me included).

Hiring a barista and furnishing your company stand with the best coffee machine you can afford while having an ugly handwritten sign saying "MAIN CONFERENCE COFFEE ->" is a marketing stunt that I really love. I'm not sure how well that worked for their hiring but it got them visibility. I'm definitely stealing this one!

Lesson 9

Conference coffee sucks. Provide a better one and developers will queue at your stand. To a greater extent - research your target and their needs and provide a product that solves their problem.

What we gave back

#banitsa is a blessing for hang over #euruko

— Monica (@KFMolli) September 24, 2016

Indeed, Monica, it is. Here's the secret sauce:

Thanks a lot @euruko to share the recipes for #boza and #banitsa #euruko #bulgary pic.twitter.com/MrkpD4jxve

— Matteo Piotto (@c1p8) September 23, 2016

My personal contribution back was telling Yammer and Deliveroo about mutation testing and pointing them to the right tools and videos on the subject. I wish them good luck and happy testing.

NOTE: I will be speaking about mutation testing at several different events in Bulgaria in the next 2 months so make sure to find me if you want to chat.


What Ivan Learned from Organizing Internships

This is a summary of Ivan Nemytchenko's talk at EuRuKo yesterday (slides here). I'm writing this because that was the best talk both in terms of content and visual presentation I saw at the conference and because it is closely related to my work with HackBulgaria.

The short story is that at some point Ivan was mentoring several junior developers and saw the need to scale this effort so he did a call for interns and got back 60 replies.

What an Intern Gets

- Projects in their portfolio

- Working experience, including team work

- Developing an entire product from idea to production

Ivan wanted to find suitable interns who have basic Ruby on Rails knowledge and who could invest a minimum of 20 hours per week of their time so he devised an aptitude test of 3 parts.

The three part test @inem used to challenge 60 candidates for a Ruby internship. Pretty cool. #euruko pic.twitter.com/OsnN03pXkf

— Tatiana Stantonian (@binaryberry) September 24, 2016

Part 1 was developing basic functionality of the product. Part 2 was adding different user types which require different validation logic, etc. Part 3 was adding "purchasing" logic via external APIs. Part 3 intentionally had no code review!

The final result was shit! That was the purpose of the test. The reasoning being that there is no right or wrong way to solve the problems he presented to the interns. Instead he wanted to make them think and decide on a solution. Then feel the pain of their decision. Ivan argues that what made us senior developers are these pains we have experienced at some point in our careers, those fuck-ups that we did in some old project. All of them made us better in our job because we could learn from the mistakes we've made and more importantly understand the consequence of our decisions.

The common mistakes Ivan saw were:

- Ignoring levels of abstraction;

- Using too many gems without knowing or understanding their limitations;

- Gems were treated as the only way to solve a problem. More importantly changing this way was out of the question;

- Interns didn't know about service objects, although even some experienced developers don't seem to know about them;

- Business logic was all over the place;

- Bad naming all around.

The next thing Ivan did was a group hangout code review followed by a short lecture about design patterns, a refactoring session and finally cross code review. At the end the product was delivered as expected.

Following these initial efforts Ivan continued (with even more interns, or with the next group of them, I think) by asking interns to develop internship automation, that is a means for the system to distribute tasks based on git commits, tags, etc. so it can scale. They've added an admin dashboard and started working on an open source alternative to NewRelic (if I got that correctly). He was also able to enlist 2 more mentors to help him.

Problems Ivan found:

- Not enough mentors and external projects to work on for all of the interns;

- Treating a project as not real (e.g. not a real world product) is a mistake;

- A training project has the same management issues that a real product will have and they need to be resolved in pretty much the same way;

- There was collective irresponsibility from the group of interns. They didn't do what they said they would do;

- There were communication issues between the interns and the lack of enough mentors was an obvious problem;

- There was also lack of motivation.

I'd say these are the typical problems one also sees in almost any team. It doesn't matter if these are teams of students or teams of developers inside some company.

What a Junior Needs

- A real project to work on;

- A business context, a reason why something should be done and why it needs to be done in a particular way;

- Some visible achievement for their portfolio;

- Team work experience;

- Whole cycle development experience.

Ivan thinks that the aptitude test worked great because his interns were able to find good jobs afterwards but he will change a few things. There will be even more tests and he will reject unfit/bad interns. He will also put out a call for mentors, not only for interns. And he wants to turn mentors' experience into tests as well.

I particularly like the "business context" item. IMO even seasoned developers need to have this if they are expected to create a great product for their company. We're not just coders but sometimes companies forget that!

I am also wondering how I can apply a similar aptitude test in my work (both mentoring at HackBulgaria and otherwise).

How about Senior Developers

- They all have routine tasks;

- and research tasks;

- Nice to have features and

- Low priority features;

- Side project ideas

- Missing features in their favorite open source projects