FOSDEM 2016 Report

Hello everyone, this year I've been to FOSDEM again. Here is a quick report of what I did, saw and liked during the event.

Day 0 - Elixir & Erlang

Friday started with an unexpected shopping trip. The airline broke my luggage and I had to buy a replacement. The irony is that just before taking off I saw a guy with an Osprey Meridian and thought how cool that was. The next day I was running around Brussels to find the exact same model! I also searched to buy the book Teach Your Child How to Think by Edward De Bono but all 4 bookstores I checked were out of stock.

With the luggage problem solved I headed to BetaCowork for Brussels Erlang Factory Lite where I learned a bit about Erlang and Elixir. I also managed to squeeze in a meeting with Gilbert West to talk about open source bugs.

I found the Elixir workshop and the talk Erlang In The Wild: A Governmental Web Application by Pieterjan Montens particularly interesting. Later I managed to get hold of him and talk some more about his experiences working for the government. As I figured out later, we are likely to have mutual friends.

BetaCowork was hosting FOSDEM related events during the entire week. There was a GNOME event and the Libre Office Italian team was there as well. Next time definitely worth a longer visit.

Friday night was reserved for a dinner with the Red Hat Eclipse team and a fair amount of beer at Delirium afterwards where I've met my friend Giannis Konstantinidis and the new Fedora Ambassador for Albania - Jona Azizai.

All-in-all pretty good Friday!

Day 1 - Testing and Automation

FOSDEM was hosting the Testing and automation devroom again. I've spent the entire Saturday there.

Definitely the most interesting talk was Testing interoperability with closed-source software through scriptable diplomacy which introduced Frida. Frida is a testing tool which injects a JavaScript VM into your process so you can write scripts that drive the application automatically. It was designed as a means to control closed source software but can definitely be used for open source apps as well.

I've talked to both Karl and Ole about Frida and my use-case for testing interactive terminal programs. That should be easy to do with Frida - just hook into the read and write functions and write some JavaScript or Python to run the test. Later we talked about how exactly Frida attaches to the running process and what external dependencies are needed if I'm to inject Frida into the Fedora installation environment.

In Testing embedded systems Itamar Hassin talked about testing medical devices and made a point about regulation, security and compliance. Basically you are not allowed to ship non-application code on a production system. However that code is the instrumentation needed to allow external integration testing. I suspect most developers and QA engineers will never have to deal with such strict regulations but it is something to keep in mind if you test software in a heavily regulated industry.

Testing complex software in CI was essentially a presentation about cwrap which I've seen at FOSDEM 2014. It touched the topic from a slightly different angle though. Tests in any open source project should have the following properties:

- Be able to execute without the need of a complex environment;

- Enable full CI during code review (dependent on previous property);

- Be able to create complete integration tests.

The demo showed how you can execute Samba's test suite locally without preparing a domain controller for example. This helps both devel and external contributors. Now contrast this with how Red Hat QE will do the testing - they will create a bunch of virtual and bare metal machines, configure all related services and then execute the same test scripts to verify that indeed Samba works as expected.

Btw I've been thinking: what if I patch cwrap to overwrite read and write? That would also make it possible to test interactive console programs, wouldn't it?

Jenkins as Code by Marcin and Lukasz was a blast. I think there were people standing alongside the walls. The guys shared their experience with the Jenkins Job DSL plugin. The plugin is very flexible and powerful, using Groovy as its programming language. The only drawback is that it is sometimes too complex to use and has a steep learning curve. Maybe Jenkins Job Builder is better suited if you don't need all that flexibility and complexity. I've met both of them afterwards and talked a bit more about open source bugs.

On Saturday evening I attended a panel discussion about the impact of open source on the tech industry at Co.Station organized by The Startup Bus. There was beer, pizza, entrepreneur networking and talking about startups and open source. Do I need to say more? I will post a separate blog post about the interesting start-ups I've found so stay tuned.

Day 2 - Ping-pong with BBC Open Source

Sunday was my lazy day. I've attended a few talks but nothing too interesting. I've managed to go around the project stands, filed a bug against FAS and scored the highest ping-pong score for the day at the BBC Open Source stand.

BBC has a long history of being involved with education in the UK and the micro:bit is their latest project. I would love to see this (or similar) delivered by the thousands here in Bulgaria so if you are thinking about sponsoring let me know.

Accidentally I met Martin Sivak - a former Anaconda developer whom I've worked with in the past. He is now at the Virtualization Development team at Red Hat and we briefly talked about the need for more oVirt testing. I have something in mind about this which will be announced in the next 2 months so stay tuned.


How to Get Started on a New QA Project

Every time I have to look at a new software project and use my QA powers to help them I follow a standard process of getting involved. Usually my job is to reveal which areas are lacking adequate test coverage and propose and implement improvements. Here's what it looks like.

Obtain Domain Knowledge

Whatever the software does I don't start looking into the technical details before I have domain knowledge about the subject. If the domain is entirely outside my expertise my first reading materials have nothing to do with the product itself. My goal is to learn how the domain works, what language it uses, any particular specifics that may exist, etc.

Then I start asking general questions about the software, how it works and what it does. At this stage I'm not trying to go into details but rather cover as many angles as possible. I want to know the big picture of how things are supposed to work and what the team is trying to do. This also starts to reveal the architecture behind the project.

Then I start using the software as if I'm the intended target audience while taking notes about everything that seems odd or that I simply don't understand. Having limited knowledge about the domain and the product helps a lot because I haven't developed any bias yet. This initial hands-on introduction is best done with a peer who is more familiar with the product. It is not necessary for the peer to be a technical person, although that helps when implementation related questions arise.

RTFM

I make a point to read any available documents, wikis, READMEs, etc. They can fill the gaps with often used terms, explain processes and workflows or reveal that such are missing and document existing infrastructure. Quite often I'm able to see some areas for improvements directly from reading the documentation.

At this stage I'm just collecting notes and impressions which will be validated later. I don't try to remember all of the docs because I can always go back and read them again. Instead I try to remember the topics these documents talk about and possibly collect links for future reference.

Get to Know the Devel Team

The thing I hate most, apart from not knowing what a piece of code does, is not knowing who to ask. Quite often team structure follows the application structure - front-end, back-end, mobile, etc. While getting to know who does what I also use this as an opportunity to gain deeper knowledge about the product. I talk to team leads and individual developers, asking them to explain the chosen architecture and to tell me what the most annoying problems are according to them.

Later this knowledge makes it easier to see trends and suggest changes that will improve the overall product quality. Having good working relationship with developers also makes it easy for these changes to get through.

Get to Know the QA Team

Similar to the previous step but with a deeper focus on details. This is my personal domain so I'm trying to figure out who does what in terms of software testing for the project in question. When I'm working with less experienced QA teams I focus on what are the individual tasks at hand, how often are they executed, what is the general workflow and how are we dealing with bugs.

Behind the scenes this lets me find out what the bug reporting, testing and verification process is, how new bugs are discovered and what the general test strategies are, all without confronting people directly. I also find out what tools are used, get familiar with them in the process and discover the level of technical ability of individual team members.

Get to Know the App

Armed with the previous knowledge I set off to explore the entire application. Again this is best done with a peer. This time I look at every screen, widget and button there is. I try using all the available features which also doubles as an exploratory testing session.

For backend services which are usually harder to test I opt for a more detailed explanation session with the developers. Here's the time when I'm asking the question "How do you test .... ?" multiple times.

Deploy to Production

If possible I like to keep an eye on how things are deployed to production. It is not uncommon for software to experience problems due to problems with the deployment procedure or the production environment itself.

Also with agile teams it is common to deploy more often. This in turn may generate additional work for QA. Knowing how software is deployed to production and what the workflow in the team is helps later with planning test activities and resources.

Read All Bugs

Unless there are millions of them I try to go through all the bugs reported in the bug tracker. If the project has some age to it earlier reports may not be relevant anymore. In this case my marker is an important event like a big refactoring, important or big new features, changes in teams and organization or similar. The thinking behind this is that every big change introduces lots of risks from a software quality standpoint. Also large changes invalidate previous conditions and may render existing problems obsolete, replacing them with a newer set of problems instead!

From reading bug reports I'm able to discover failure trends, which in turn indicate areas for improvement. For example: lots of translation related bugs indicate problems with the translation workflow; lots of broken existing functionality means poor regression testing and probably also a lack of unit tests; lots of partially (poorly) implemented features show chaotic planning and unclear feature specifications, etc.

At this stage I try to classify problems both by technical component and by type of issue. Later this will be my starting reference for creating test strategies and test plans!
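The classification itself can be as simple as counting. Here is a minimal sketch in Python, assuming the bug tracker can export reports as dictionaries with hypothetical `component` and `type` fields (these names are placeholders, not taken from any particular tracker):

```python
from collections import Counter


def failure_trends(bugs):
    """Count bug reports per component and per type of issue.

    `bugs` is an iterable of dicts with the hypothetical keys
    'component' and 'type'. Returns two lists of (value, count)
    pairs, most frequent first.
    """
    by_component = Counter(bug["component"] for bug in bugs)
    by_type = Counter(bug["type"] for bug in bugs)
    return by_component.most_common(), by_type.most_common()
```

The top entries of each list are good candidates for the first test strategies and test plans.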

If possible and practical I talk to support and read all the support tickets as well. This gives me an idea of which problems are most visible to customers. These aren't necessarily the biggest technical issues but dealing with them may consume manpower.

Summarize, Divide and Conquer

Depending on the project and team size these initial steps will take anywhere from a full week to a month or even more. After they are complete I feel comfortable talking about the software at hand and have a list of possible problems and areas for improvement.

After talking to people in charge (e.g. PMs, Project Leads, etc) my initial list is transformed into tasks. I strive to keep the tasks as independent as possible. Then these tasks are prioritized and it's time to start executing them one by one.


Using Multiple Software Collections

Software Collections are newer versions of system-wide packages, typically used on Red Hat Enterprise Linux. They are installed alongside the system-wide versions and can be used to develop applications without affecting the system tools.

If you need to use a software collection then enable it like so:

scl enable rh-ruby22 /bin/bash

You can enable a second (and third, etc) software collection by executing scl again:

scl enable nodejs010 /bin/bash

After executing the above two commands I have:

$ which ruby

/opt/rh/rh-ruby22/root/usr/bin/ruby

$ which node

/opt/rh/nodejs010/root/usr/bin/node

Now I can develop an application using Ruby 2.2 and Node.js 0.10.


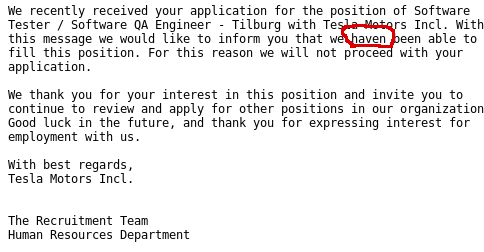

Tesla Needs More QA

A nice way for Tesla Motors to tell me they won't consider my interest in their QA positions - by sending me an email with a bug! It only goes to show that everything coming out of an IT system needs to be tested.


Controlling Interactive Terminal Programs

Building on my previous experience about capturing terminal output from other processes I wanted to create an automated test case for the initial-setup-text service which would control stdin. Something like Dogtail but for text mode applications.

What you have to do is attach gdb to the process. Then you can write to any file descriptor that is already opened. WARNING: writing directly to stdin didn't quite work! Because (I assume) stdin is a tty the text was shown on the console but the return character wasn't interpreted and the application wasn't accepting the input string. What I had to do is replace the tty with a pipe and it worked. However the input is not duplicated on the console this way!
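The pipe trick can be tried in isolation, without gdb. The sketch below is only an illustration, assuming Linux and Python 3; `drive_via_fifo` is a hypothetical helper name. It starts a child process whose stdin is a named pipe opened read-write (the same O_RDWR mode that is passed to open() in the script further down) and then feeds it input lines.

```python
import os
import subprocess
import sys
import tempfile


def drive_via_fifo(child_argv, lines):
    """Run child_argv with a named pipe as stdin, feed it `lines`
    and return whatever the child printed to stdout."""
    tmp_dir = tempfile.mkdtemp()
    fifo = os.path.join(tmp_dir, "pipe")
    os.mkfifo(fifo)
    try:
        # O_RDWR lets open() return without waiting for a peer (Linux)
        fd = os.open(fifo, os.O_RDWR)
        child = subprocess.Popen(child_argv, stdin=fd,
                                 stdout=subprocess.PIPE)
        for line in lines:
            os.write(fd, line.encode("utf8"))
        os.close(fd)
        out, _ = child.communicate()
        return out.decode("utf8")
    finally:
        os.unlink(fifo)
        os.rmdir(tmp_dir)


if __name__ == "__main__":
    # the child reads exactly one line and echoes it in upper case
    child = [sys.executable, "-c",
             "import sys; print(sys.stdin.readline().strip().upper())"]
    print(drive_via_fifo(child, ["hello\n"]))
```

Because the child inherits the pipe opened read-write, it never sees end-of-file on stdin, so the program under test has to exit on its own after consuming its input.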

Another drawback is that I couldn't use strace to log the output in combination with gdb. Once a process is under trace you can't trace it a second time! For this simple test I was able to live with this by not inspecting the actual text printed by initial-setup. Instead I'm validating the state of the system after setup is complete. I've tried to read from stdout in gdb but that didn't work either. If there's a way to make this happen I can convert this script to a mini-framework.

Another unknown is interacting with passwd. Probably for security reasons it doesn't allow messing around with its stdin but I didn't investigate deeper.

I've used the fdmanage.py script which does most of the work for me. I've removed the extra bits that I didn't need, added the write() method and removed the original call to fcntl which puts gdb.stdout into non-blocking mode (that didn't work for me).

#!/usr/bin/env python
# -*- coding: utf-8 -*-
#
# fdmanage.py is a program to manage file descriptors of running programs
# by using GDB to modify the running program.
# https://github.com/ticpu/tools/blob/master/fdmanage.py
# Copyright (C) 2015 Jérôme Poulin <jeromepoulin@gmail.com>
#
# This program is free software: you can redistribute it and/or modify
# it under the terms of the GNU General Public License as published by
# the Free Software Foundation, either version 3 of the License, or
# (at your option) any later version.
#
# This program is distributed in the hope that it will be useful,
# but WITHOUT ANY WARRANTY; without even the implied warranty of
# MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
# GNU General Public License for more details.
#
# You should have received a copy of the GNU General Public License
# along with this program. If not, see <http://www.gnu.org/licenses/>.
#
# Copyright (c) 2016 Alexander Todorov <atodorov@redhat.com>

from __future__ import unicode_literals

import os
import sys
import select
import subprocess


class Gdb(object):
    def __init__(self, pid, verbose=False):
        pid = str(pid)
        self.gdb = subprocess.Popen(
            ["gdb", "-q", "-p", pid],
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            stderr=subprocess.STDOUT,
            close_fds=True,
        )
        self.pid = pid
        self.verbose = verbose

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_val, exc_tb):
        del exc_type, exc_val, exc_tb
        self.send_command("detach")
        self.send_command("quit")
        self.gdb.stdin.close()
        self.gdb.wait()
        if self.verbose:
            print(self.gdb.stdout.read())
            print("\nProgram terminated.")

    def send_command(self, command):
        self.gdb.stdin.write(command.encode("utf8") + b"\n")
        self.gdb.stdin.flush()

    def send_command_expect(self, command):
        # send a command and return the value gdb prints after " = "
        self.send_command(command)
        while True:
            try:
                data = self.gdb.stdout.readline().decode("utf8")
                if self.verbose:
                    print(data)
                if " = " in data:
                    return data.split("=", 1)[-1].strip()
            except IOError:
                select.select([self.gdb.stdout], [], [], 0.1)

    def close_fd(self, fd):
        self.send_command("call close(%d)" % int(fd))

    def dup2(self, old_fd, new_fd):
        self.send_command("call dup2(%d, %d)" % (int(old_fd), int(new_fd)))

    def open_file(self, path, mode=66):
        fd = self.send_command_expect('call open("%s", %d)' % (path, mode))
        return fd

    def write(self, fd, txt):
        # txt ends with an escaped "\n" (two characters here) which gdb
        # expands to a single byte, hence len(txt)-1
        self.send_command('call write(%d, "%s", %d)' % (fd, txt, len(txt)-1))


if __name__ == "__main__":
    import time
    import tempfile

    # these are specific to initial-setup-text
    steps = [
        "1\\n", # License information
        "2\\n", # Accept license
        "c\\n", # Continue back to the main hub
        "3\\n", # Timezone settings
        "8\\n", # Europe
        "43\\n", # Sofia
        # passwd doesn't allow us to overwrite its file descriptors
        # either aborts or simply doesn't work
        # "4\\n", # Root password
        # "redhat\\n",
        # "redhat\\n",
        # "no\\n", # password is weak
        # "123\\n",
        # "123\\n",
        # "no\\n", # password is too short
        # "Th1s-Is-a-Str0ng-Password!\\n",
        # "Th1s-Is-a-Str0ng-Password!\\n",
        "c\\n", # Continue to exit
    ]

    if len(sys.argv) != 2:
        print("USAGE: %s <PID>" % __file__)
        sys.exit(1)

    tmp_dir = tempfile.mkdtemp()
    pipe = os.path.join(tmp_dir, "pipe")
    os.mkfifo(pipe)

    with Gdb(sys.argv[1], True) as gdb:
        fd = gdb.open_file(pipe, 2) # O_RDWR
        gdb.dup2(fd, 0) # replace STDIN with a PIPE
        gdb.close_fd(fd)

        # set a breakpoint before continuing
        break_point_num = gdb.send_command_expect("break read")

        # now execute the process step-by-step
        i = 0
        len_steps = len(steps)
        for txt in steps:
            gdb.write(0, txt)
            time.sleep(1)
            i += 1
            if i == len_steps - 1:
                gdb.send_command("delete %s" % break_point_num)
            gdb.send_command("continue")
            time.sleep(1)

    # clean up
    os.unlink(pipe)
    os.rmdir(tmp_dir)


Capture Terminal Output From Other Processes

I've been working on a test case to verify that Anaconda will print its EULA notice at the end of a text mode installation. The problem is how to capture all the text which is printed to the terminal by processes outside your control. The answer is surprisingly simple - using strace!

%post --nochroot

PID=`ps aux | grep "/usr/bin/python /sbin/anaconda" | grep -v "grep" | tr -s ' ' | cut -f2 -d' '`

STRACE_LOG="/mnt/sysimage/root/anaconda.strace"

# see https://fedoraproject.org/wiki/Features/SELinuxDenyPtrace

setsebool deny_ptrace 0

# EULA notice is printed after post-scripts are run

strace -e read,write -s16384 -q -x -o $STRACE_LOG -p $PID -f >/dev/null 2>&1 &

strace is tracing only read and write events (we really only need write) and extending the maximum string size printed in the log file. For a simple grep this is sufficient. If you need to pretty-print the strace output have a look at the ttylog utility.
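If grep is not enough, the printed text can be recovered from the log with a few lines of Python. This is a minimal sketch, not part of the original test case. It assumes the log format produced by the options above (one syscall per line, optionally prefixed with a PID because of -f, strings escaped C-style because of -x), and `written_text` is a hypothetical helper name.

```python
import re

# e.g.: 1234  write(1, "some text\n", 10) = 10
WRITE_RE = re.compile(
    r'^(?:\d+\s+)?write\((\d+), "(.*)"(?:\.\.\.)?, \d+\)\s+= \d+')


def written_text(strace_lines, fds=(1, 2)):
    """Return the text written to the given file descriptors."""
    chunks = []
    for line in strace_lines:
        match = WRITE_RE.match(line)
        if not match or int(match.group(1)) not in fds:
            continue
        raw = match.group(2)
        # undo strace's escaping (\n, \", \x1b ...) to get the bytes back
        data = raw.encode("ascii").decode("unicode_escape").encode("latin-1")
        chunks.append(data.decode("utf-8", "replace"))
    return "".join(chunks)
```

Feeding it the lines of the strace log and searching the result for the EULA text gives the same answer as grep, but it also copes with a notice that is split across several write calls.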


Insert Key on MacBook Keyboard

For some reason my terminal doesn't always accept Ctrl+V for paste. Instead it needs the Shift+Ins key combo. On the MacBook Air keyboard the Ins key is simulated by pressing Fn+Return together. So the paste combo becomes Shift+Fn+Return!


Interesting Ruby Resources

During my quest for faster RSpec tests I've come across several interesting posts about Ruby. Being new to the language they've helped me understand a bit more about the internals. Posting them here so they don't get lost.

Garbage Collection

The road to faster tests - a story about tests and garbage collection.

Tuning Ruby garbage collection for RSpec - practical explanation of Ruby's garbage collector and how to adjust its performance for RSpec

Probably the very first posts I found referencing slow RSpec tests. It turned out this was not the issue but I've nevertheless tried running GC manually. I can clearly see (using puts GC.count in after()) GC invoked less frequently, memory usage rising but the overall execution time wasn't affected. The profiler said 2% speed increase to be honest.

Profiling

Not being very clear about the different profiling tools available and how to interpret their results I've found these articles:

Profiling Ruby With Google's Perftools - practical example for using perftools.rb

How to read ruby profiler's output - also see the Profiler__ module

Show runtime for each rspec example - using rspec --profile

Suggestions for Faster Tests

Several general best practices for faster tests:

9 ways to speed up your RSpec tests

Run faster Ruby on Rails tests

Three tips to improve the performance of your test suite

RubyGems related

I've noticed Bundler loading tons of requirements (nearly 3000 unique modules) and for some particular specs this wasn't necessary (for example running Rubocop). I've found the following articles which sound very reasonable to me:

Use Bundler.setup Instead of Bundler.require

5 Reasons to Avoid Bundler.require

Why "require 'rubygems'" Is Wrong

Weird

Finally (or more precisely first of all) I've seen this Weird performance issue.

During my initial profiling I've seen (and still see) a similar issue. When calling require it goes through lots of hoops before finally loading the module. My profiling results show this taking a lot of time but this time is likely measured with profiling enabled and doesn't represent the real deal.

On my MacBook Air with Red Hat Enterprise Linux 7 this happens when using Ruby 2.2.2 from Software Collections. If using Ruby installed from source with rbenv the profiling profile is completely different.

I will be examining this one in more detail. I'm interested to know what the difference is and whether it affects performance somehow, so stay tuned!


Speeding up RSpec and PostgreSQL tests

I've been working with Tradeo on testing one of their applications. The app is a standard Ruby on Rails application with over 1200 tests written with RSpec. And they were horribly slow. On my MacBook Air the entire test suite took 27 minutes to execute. On the Jenkins slaves it took over an hour. After a few changes Jenkins now takes 15 minutes to execute the test suite. Locally it takes around 11 minutes!

The Problem

I've measured the speed (with Time.now) at which individual examples execute and it was quickly apparent they were taking a lot of time cleaning the DB. The offending code in question was:

config.before(:all) do
  DatabaseCleaner.clean_with :truncation
end

This is truncating the tables quite often but it turns out this is a very expensive operation on tables with a small number of records. I've measured it locally at around 2.5 seconds. Check out this SO thread which describes pretty much the same symptoms:

Right now, locally (on a Macbook Air) a full test suite takes 28 minutes....

Tailing the logs on our CI server (Ubuntu 10.04 LTS) .... a build takes 84 minutes.

This excellent answer explains why this is happening:

(a) The bigger shared_buffers may be why TRUNCATE is slower on the CI server. Different fsync configuration or the use of rotational media instead of SSDs could also be at fault.

(b) TRUNCATE has a fixed cost, but not necessarily slower than DELETE, plus it does more work.

The Fix

config.before(:suite) do
  DatabaseCleaner.clean_with :truncation
end

config.before(:all) do
  DatabaseCleaner.clean_with :deletion
end

before(:suite) will truncate tables every time we run rspec, which is when we launch the entire test suite. This is to account for the possible side effects of DELETE in the future (see the SO thread). Then before(:all) aka before(:context) simply deletes the records which is significantly faster!

I also updated the CI servers' postgresql.conf to:

fsync=off

full_page_writes=off

The entire build/test process now takes only 15 minutes! Only one test broke due to PostgreSQL returning records in a different order, but that's the test case's fault for not handling this in the first place!

NOTE: Using fsync=off with rotational media pretty much hides any improvements introduced by updating the DatabaseCleaner strategy.

What's Next

There are several other things worth trying:

- Use UNIX domain sockets instead of TCP/IP (localhost) to connect to PostgreSQL;

- Load the entire PostgreSQL partition in memory;

- Don't delete anything from the database, except once in before(:suite). If any tests need a particular DB state they have to set this up on their own instead of relying on a global cleanup process. I expect this to break quite a few examples.

After the changes and with my crude measurements I have individual examples taking 0.31 seconds to execute. Interestingly before and after take less than a second while the example code takes around 0.15 seconds. I have no idea where the remaining 0.15 seconds are spent. My current speculation is RSpec itself. This is 50% of the execution time and is also worth looking into!


Logging VM installation to stdout

Recently I've been using virt-install to create virtual machines inside some automated tests and then ssh'ing to the VM guest and inspecting the results. What's been bothering me is that while the VM is installing I can't see what's going on. The solution is to log everything to stdout which is then collected by my test harness and archived on the file server. To do this:

setenforce 0

virt-install ... -x "console=ttyS0" --serial dev,path=/dev/pts/1

This puts SELinux into Permissive mode, instructs the VM guest to use a serial console and then redirects the serial console to stdout on the host. If you have SELinux in Enforcing mode then you get the following error:

unable to set security context 'system_u:object_r:svirt_image_t:s0:c43,c440' on '/dev/pts/1': Permission denied

NOTE: if you execute this from a script and there is no controlling tty it will fail. The next best thing you can do is log to a file with --serial file,path=guest.log and collect this file for later!


Facebook is Bugging me


Facebook for Android version 55.0.0.18.66 broke the home screen icon title. It reads false instead of Facebook. This is now fixed in version 58.0.0.28.70.

I wonder how they managed to let this slip through. Also isn't Google supposed to review the apps coming into the Play Store and not publish them if there are such visible issues? I guess this wasn't the case here.


virtio vs. rtl8139

I've been doing some tests with KVM virtual machines and been launching them with virt-install. For some reason the VM wasn't activating its NIC. Changing the interface model from virtio to rtl8139 made it work again:

virt-install --name $VM_NAME --ram 1024 --os-type=linux --os-variant=rhel7 \

- --disk none --network bridge=virbr0,model=virtio,mac=$VM_MAC \

+ --disk none --network bridge=virbr0,model=rtl8139,mac=$VM_MAC \

--noreboot --graphics vnc \

--cdrom /var/tmp/tmp*/images/boot.iso --boot cdrom &

virt-install(1) says:

model

Network device model as seen by the guest. Value can be any nic model supported by the hypervisor, e.g.: 'e1000',

'rtl8139', 'virtio', ...

I'm not clear whether this configures which driver is used for the NIC inside the guest or which driver the host NIC uses. At this moment I don't know why the virtio value causes networking in the guest to fail. If you do know please comment below. Thanks!


Automatic Upstream Dependency Testing

Ever since RHEL 7.2 python-libs broke s3cmd I've been pondering an age old problem: How do I know if my software works with the latest upstream dependencies ? How can I pro-actively monitor for new versions and add them to my test matrix ?

Mixing together my previous experience with Difio and monitoring upstream sources, and Forbes Lindesay's GitHub Automation talk at DEVit Conf I came up with a plan:

- Make an application which will execute when a new upstream version is available;

- Automatically update .travis.yml for the projects I'm interested in;

- Let Travis-CI execute my test suite for all available upstream versions;

- Profit!

How Does It Work

First we need to monitor upstream! RubyGems.org has a nice webhooks interface, you can even trigger on individual packages. PyPI however doesn't have anything like this :(. My solution is to run a cron job every hour and parse their RSS stream for newly released packages. This has been working previously for Difio so I re-used one function from the code.

After finding anything we're interested in comes the hard part - automatically updating .travis.yml using the GitHub API. I've described this in more detail here. This time I've slightly modified the code to update only when needed and accept more parameters so it can be reused.

Travis-CI has a clean interface to specify environment variables and defining several of them creates a test matrix. This is exactly what I'm doing. .travis.yml is updated with a new ENV setting, which determines the upstream package version. After the commit a new build is triggered which includes the expanded test matrix.
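To illustrate the "update only when needed" idea, here is a minimal sketch. It is not the code used for the actual monitoring. `add_env_version` is a hypothetical helper that assumes a very simple .travis.yml layout: a top-level `env:` key followed by `  - NAME=value` items, each of which becomes one job in the test matrix. The variable name FOO_VERSION is a placeholder as well.

```python
def add_env_version(travis_yml, variable, version):
    """Return the .travis.yml text with `variable=version` added to
    the env list, or the text unchanged if the entry already exists."""
    entry = "  - %s=%s" % (variable, version)
    lines = travis_yml.splitlines()

    if entry.strip() in [line.strip() for line in lines]:
        return travis_yml  # nothing to do, avoids an empty commit

    if "env:" not in lines:
        return travis_yml.rstrip("\n") + "\nenv:\n" + entry + "\n"

    # skip over the existing env items and append the new one after them
    end = lines.index("env:") + 1
    while end < len(lines) and lines[end].startswith("  - "):
        end += 1
    lines.insert(end, entry)
    return "\n".join(lines) + "\n"
```

Calling `add_env_version(text, "FOO_VERSION", "0.3.2")` twice yields the same text the second time, so the monitor only commits when a genuinely new version shows up.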

Example

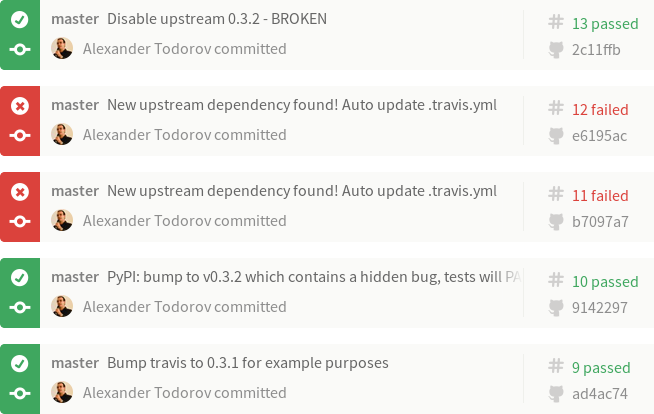

Imagine that our Project 2501 depends on FOO version 0.3.1. The build log illustrates what happened:

- Build #9 is what we've tested with FOO-0.3.1 and released to production. Test result is PASS!

- Build #10 - meanwhile upstream releases FOO-0.3.2 which causes our project to break. We're not aware of this and continue developing new features while all test results still PASS! When our customers upgrade their systems Project 2501 will break! Tests didn't catch it because the test matrix wasn't updated. Please ignore the actual commit message in the example! I've used the same repository for the dummy dependency package.

- Build #11 - the monitoring solution finds FOO-0.3.2 and updates the test matrix automatically. The build immediately breaks! More precisely the test with version 0.3.2 fails!

- Build #12 - we've alerted FOO.org about their problem and they've released FOO-0.3.3. Our monitor has found that and updated the test matrix. However the 0.3.2 test job still fails!

- Build #13 - we decide to work around the 0.3.2 failure or simply handle the error gracefully. In this example I've removed version 0.3.2 from the test matrix to simulate that. In reality I wouldn't touch .travis.yml but instead update my application and tests to check for that particular version. All test results are PASS again!

Btw Build #11 above was triggered manually (./monitor.py) while Build #12 came from OpenShift, my hosting environment.

At present I have this monitoring enabled for my new Markdown extensions and will also add it to django-s3-cache once it migrates to Travis-CI (it uses drone.io now).

Enough Talk, Show me the Code

#!/usr/bin/env python

import os

import sys

import json

import base64

import httplib

from pprint import pprint

from datetime import datetime

from xml.dom.minidom import parseString

def get_url(url, post_data = None):
    # GitHub requires a valid UA string
    headers = {
        'User-Agent' : 'Mozilla/5.0 (X11; Linux x86_64; rv:10.0.5) Gecko/20120601 Firefox/10.0.5',
    }

    # shortcut for GitHub API calls
    if url.find("://") == -1:
        url = "https://api.github.com%s" % url

    if url.find('api.github.com') > -1:
        if not os.environ.has_key("GITHUB_TOKEN"):
            raise Exception("Set the GITHUB_TOKEN variable")
        else:
            headers.update({
                'Authorization': 'token %s' % os.environ['GITHUB_TOKEN']
            })

    (proto, host_path) = url.split('//')
    (host_port, path) = host_path.split('/', 1)
    path = '/' + path

    if url.startswith('https'):
        conn = httplib.HTTPSConnection(host_port)
    else:
        conn = httplib.HTTPConnection(host_port)

    method = 'GET'
    if post_data:
        method = 'POST'
        post_data = json.dumps(post_data)

    conn.request(method, path, body=post_data, headers=headers)
    response = conn.getresponse()

    if (response.status == 404):
        raise Exception("404 - %s not found" % url)

    result = response.read().decode('UTF-8', 'replace')
    try:
        return json.loads(result)
    except ValueError:
        # not a JSON response
        return result

def post_url(url, data):
    return get_url(url, data)

def monitor_rss(config):
    """
        Scan the PyPI RSS feeds to look for new packages.
        If name is found in config then execute the specified callback.

        @config is a dict with keys matching package names and values
        are lists of dicts
            {
                'cb' : a_callback,
                'args' : dict
            }
    """
    rss = get_url("https://pypi.python.org/pypi?:action=rss")
    dom = parseString(rss)
    for item in dom.getElementsByTagName("item"):
        try:
            title = item.getElementsByTagName("title")[0]
            pub_date = item.getElementsByTagName("pubDate")[0]

            (name, version) = title.firstChild.wholeText.split(" ")
            released_on = datetime.strptime(pub_date.firstChild.wholeText, '%d %b %Y %H:%M:%S GMT')

            if name in config.keys():
                print name, version, "found in config"
                for cfg in config[name]:
                    try:
                        args = cfg['args']
                        args.update({
                            'name' : name,
                            'version' : version,
                            'released_on' : released_on
                        })

                        # execute the call back
                        cfg['cb'](**args)
                    except Exception, e:
                        print e
                        continue
        except Exception, e:
            print e
            continue

def update_travis(data, new_version):
    travis = data.rstrip()
    new_ver_line = " - VERSION=%s" % new_version
    if travis.find(new_ver_line) == -1:
        travis += "\n" + new_ver_line + "\n"
    return travis

def update_github(**kwargs):
    """
        Update GitHub via API
    """
    GITHUB_REPO = kwargs.get('GITHUB_REPO')
    GITHUB_BRANCH = kwargs.get('GITHUB_BRANCH')
    GITHUB_FILE = kwargs.get('GITHUB_FILE')

    # step 1: Get a reference to HEAD
    data = get_url("/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH))
    HEAD = {
        'sha' : data['object']['sha'],
        'url' : data['object']['url'],
    }

    # step 2: Grab the commit that HEAD points to
    data = get_url(HEAD['url'])
    # remove what we don't need for clarity
    for key in data.keys():
        if key not in ['sha', 'tree']:
            del data[key]
    HEAD['commit'] = data

    # step 4: Get a hold of the tree that the commit points to
    data = get_url(HEAD['commit']['tree']['url'])
    HEAD['tree'] = { 'sha' : data['sha'] }

    # intermediate step: get the latest content from GitHub and make an updated version
    for obj in data['tree']:
        if obj['path'] == GITHUB_FILE:
            data = get_url(obj['url']) # get the blob from the tree
            data = base64.b64decode(data['content'])
            break

    old_travis = data.rstrip()
    new_travis = update_travis(old_travis, kwargs.get('version'))

    # bail out if nothing changed
    if new_travis == old_travis:
        print "new == old, bailing out", kwargs
        return

    ####
    #### WARNING WRITE OPERATIONS BELOW
    ####

    # step 3: Post your new file to the server
    data = post_url(
        "/repos/%s/git/blobs" % GITHUB_REPO,
        {
            'content' : new_travis,
            'encoding' : 'utf-8'
        }
    )
    HEAD['UPDATE'] = { 'sha' : data['sha'] }

    # step 5: Create a tree containing your new file
    data = post_url(
        "/repos/%s/git/trees" % GITHUB_REPO,
        {
            "base_tree": HEAD['tree']['sha'],
            "tree": [{
                "path": GITHUB_FILE,
                "mode": "100644",
                "type": "blob",
                "sha": HEAD['UPDATE']['sha']
            }]
        }
    )
    HEAD['UPDATE']['tree'] = { 'sha' : data['sha'] }

    # step 6: Create a new commit
    data = post_url(
        "/repos/%s/git/commits" % GITHUB_REPO,
        {
            "message": "New upstream dependency found! Auto update .travis.yml",
            "parents": [HEAD['commit']['sha']],
            "tree": HEAD['UPDATE']['tree']['sha']
        }
    )
    HEAD['UPDATE']['commit'] = { 'sha' : data['sha'] }

    # step 7: Update HEAD, but don't force it!
    data = post_url(
        "/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH),
        {
            "sha": HEAD['UPDATE']['commit']['sha']
        }
    )

    if data.has_key('object'): # PASS
        pass
    else: # FAIL
        print data['message']

if __name__ == "__main__":
    config = {
        "atodorov-test" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/bztest',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            }
        ],
        "Markdown" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-Bugzilla-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-Code-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-BlockQuote-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
        ],
    }

    # check the RSS to see if we have something new
    monitor_rss(config)
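The two pieces of the script that need no network access, parsing the feed and extending the test matrix, can be tried offline. The following is a minimal Python 3 sketch rather than the script above: SAMPLE_RSS, the release entries in it and the `watched` set are made up for illustration, and the version line is compared against whole lines instead of using a substring search.

```python
from datetime import datetime
from xml.dom.minidom import parseString

# Hypothetical feed snippet in the shape the script expects:
# "<name> <version>" inside <title> plus a GMT <pubDate>.
SAMPLE_RSS = """<rss version="0.91"><channel>
<item><title>Markdown 2.6.5</title><pubDate>24 Nov 2015 10:00:00 GMT</pubDate></item>
<item><title>some-other-package 1.0</title><pubDate>24 Nov 2015 11:00:00 GMT</pubDate></item>
</channel></rss>"""


def parse_releases(rss_text):
    """Yield (name, version, released_on) for every <item> in the feed."""
    dom = parseString(rss_text)
    for item in dom.getElementsByTagName("item"):
        title = item.getElementsByTagName("title")[0].firstChild.wholeText
        pub_date = item.getElementsByTagName("pubDate")[0].firstChild.wholeText
        name, version = title.split(" ")
        released_on = datetime.strptime(pub_date, "%d %b %Y %H:%M:%S GMT")
        yield name, version, released_on


def update_travis(data, new_version):
    """Append a VERSION line to the matrix unless that exact line exists."""
    travis = data.rstrip()
    new_ver_line = "  - VERSION=%s" % new_version
    if new_ver_line not in travis.splitlines():
        travis += "\n" + new_ver_line + "\n"
    return travis


watched = {"Markdown"}                      # packages we depend on (assumed)
travis = "env:\n  - VERSION=2.6.4\n"        # made-up current matrix
for name, version, released_on in parse_releases(SAMPLE_RSS):
    if name in watched:
        travis = update_travis(travis, version)
print(travis)
```

Running the update a second time with the same version leaves the text unchanged, which is what lets the real script bail out before any write operation.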


Hosting Multiple Python WSGI Scripts on OpenShift

With OpenShift you can host WSGI Python applications. By default the Python cartridge comes with a simple WSGI app and the following directory layout:

./

./.openshift/

./requirements.txt

./setup.py

./wsgi.py

I wanted to add my GitHub Bugzilla Hook in a subdirectory (git submodule actually) and simply reserve a URL which will be served by this app. My intention is also to add other small scripts to the same cartridge in order to better utilize the available resources.

Using WSGIScriptAlias inside .htaccess DOESN'T WORK! OpenShift errors out when WSGIScriptAlias is present. I suspect this to be a known limitation and I have an open support case with Red Hat to confirm this.

My workaround is to configure the URL paths from the wsgi.py file in the root directory. For example:

diff --git a/wsgi.py b/wsgi.py
index c443581..20e2bf5 100644
--- a/wsgi.py
+++ b/wsgi.py
@@ -12,7 +12,12 @@ except IOError:
 # line, it's possible required libraries won't be in your searchable path
 #
+from github_bugzilla_hook import wsgi as ghbzh
+
 def application(environ, start_response):
+    # custom paths
+    if environ['PATH_INFO'] == '/github-bugzilla-hook/':
+        return ghbzh.application(environ, start_response)
     ctype = 'text/plain'
     if environ['PATH_INFO'] == '/health':

This does the job and is almost the same as configuring the path in .htaccess.
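If more small scripts end up in the same cartridge, the if statement can grow into a lookup table. Here is a minimal, self-contained Python 3 sketch of that idea; hook_app and default_app are hypothetical stand-ins for github_bugzilla_hook.wsgi.application and the stock application, and ROUTES is a name invented for this example.

```python
from wsgiref.util import setup_testing_defaults


def hook_app(environ, start_response):
    """Hypothetical stand-in for github_bugzilla_hook.wsgi.application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hook\n"]


def default_app(environ, start_response):
    """Stand-in for the application shipped with the cartridge."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"default\n"]


# URL path -> WSGI callable; every extra script gets one more entry.
ROUTES = {
    "/github-bugzilla-hook/": hook_app,
}


def application(environ, start_response):
    """Dispatch on PATH_INFO, falling back to the default application."""
    app = ROUTES.get(environ.get("PATH_INFO", ""), default_app)
    return app(environ, start_response)


def call(path):
    """Invoke `application` in-process, the way a WSGI server would."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    status = []
    body = application(environ, lambda s, headers: status.append(s))
    return status[0], b"".join(body)


print(call("/github-bugzilla-hook/"))
print(call("/health"))
```

The match is exact, just like in the diff above, so a request without the trailing slash falls through to the default application.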

I hope it helps you!


Commit a file with the GitHub API and Python

How do you commit changes to a file using the GitHub API? I've found this post by Levi Botelho which explains the necessary steps but without any code. So I've used it and created a Python example.

I've rearranged the steps so that all write operations follow after a certain section in the code and also added an intermediate section which creates the updated content based on what is available in the repository.

I'm just appending versions of Markdown to the .travis.yml (I will explain why in my next post) and this is hard-coded for the sake of example. All content related operations are also based on the GitHub API because I want to be independent of the source code being around when I push this script to a hosting provider.
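The write half of the sequence (blob, tree, commit, reference) can be exercised without touching GitHub. The sketch below is Python 3 and only an illustration of the call order: `post` is a hypothetical callable that takes an API path and a payload and returns parsed JSON, and FakeGitHub hands out made-up SHAs in place of real responses.

```python
def commit_file(post, repo, branch, path, content, parent_sha, base_tree_sha,
                message="Automatic update"):
    """Run the four write steps in order and return the last response."""
    blob = post("/repos/%s/git/blobs" % repo,
                {"content": content, "encoding": "utf-8"})
    tree = post("/repos/%s/git/trees" % repo,
                {"base_tree": base_tree_sha,
                 "tree": [{"path": path, "mode": "100644",
                           "type": "blob", "sha": blob["sha"]}]})
    commit = post("/repos/%s/git/commits" % repo,
                  {"message": message, "parents": [parent_sha],
                   "tree": tree["sha"]})
    # No "force" key is sent, so only a fast-forward update is requested.
    return post("/repos/%s/git/refs/heads/%s" % (repo, branch),
                {"sha": commit["sha"]})


class FakeGitHub(object):
    """Records every call and answers with a made-up SHA (offline stand-in)."""

    def __init__(self):
        self.calls = []

    def post(self, path, payload):
        self.calls.append((path, payload))
        return {"sha": "sha-%d" % len(self.calls)}


gh = FakeGitHub()
commit_file(gh.post, "atodorov/bztest", "master", ".travis.yml",
            "env:\n  - VERSION=2.6.5\n", "parent-sha", "tree-sha")
for path, payload in gh.calls:
    print(path)
```

Each step feeds the SHA returned by the previous one into its own payload, which is why the read operations that supply parent_sha and base_tree_sha have to happen first.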

I've tested this script against itself. In the commits log you can find the Automatic update to Markdown-X.Y messages. These are from the script. Also notice the Merge remote-tracking branch 'origin/master' messages, these appeared when I pulled to my local copy. I believe the reason for this is that I have some dangling trees and/or commits from the time I was still experimenting with a broken script. I've tested on another clean repository and there are no such merges.

IMPORTANT

For this to work you need to properly authenticate with GitHub. I've created a new token at https://github.com/settings/tokens with the public_repo permission and that works for me.


Inspecting Method Arguments in Python

How do you execute methods from 3rd party classes in a backward compatible manner when these methods change their arguments?

s3cmd's PR #668 is an example of this behavior, where python-libs's httplib.py added a new parameter to disable hostname checks. As a result of this s3cmd broke.

One solution is to use try-except and nest as many blocks as you need to cover all of the argument variations. In s3cmd's case we needed two nested try-except blocks.

Another possibility is to use the inspect module and create the argument list passed to the method dynamically, based on what parameters are supported. Depending on the number of parameters this may or may not be more elegant than using try-except blocks although it looks to me a bit more human readable.

The argument names are stored in a member named co_varnames of the code object (arguments come first, followed by the names of any local variables). To get the members for a function use

inspect.getmembers(my_function.__code__)

and to get the members for a class method use

inspect.getmembers(MyClass.my_method.__func__.__code__)

Consider the following example

import inspect

def hello_world(greeting, who):
    print greeting, who

class V1(object):
    def __init__(self):
        self.message = "Hello World"

    def do_print(self):
        print self.message

class V2(V1):
    def __init__(self, greeting="Hello"):
        V1.__init__(self)
        self.message = self.message.replace('Hello', greeting)

class V3(V2):
    def __init__(self, greeting="Hello", who="World"):
        V2.__init__(self, greeting)
        self.message = self.message.replace('World', who)

if __name__ == "__main__":
    print "=== Example: call the class directly ==="
    v1 = V1()
    v1.do_print()

    v2 = V2(greeting="Good day")
    v2.do_print()

    v3 = V3(greeting="Good evening", who="everyone")
    v3.do_print()

    # uncomment to see the error raised
    #v4 = V1(greeting="Good evening", who="everyone")
    #v4.do_print()

    print "=== Example: use try-except ==="
    for C in [V1, V2, V3]:
        try:
            c = C(greeting="Good evening", who="everyone")
        except TypeError:
            try:
                print " error: nested-try-except-1"
                c = C(greeting="Good evening")
            except TypeError:
                print " error: nested-try-except-2"
                c = C()

        c.do_print()

    print "=== Example: using inspect ==="
    for C in [V1, V2, V3]:
        members = dict(inspect.getmembers(C.__init__.__func__.__code__))
        var_names = members['co_varnames']

        args = {}
        if 'greeting' in var_names:
            args['greeting'] = 'Good morning'
        if 'who' in var_names:
            args['who'] = 'children'

        c = C(**args)
        c.do_print()

The output of the example above is as follows

=== Example: call the class directly ===
Hello World
Good day World
Good evening everyone
=== Example: use try-except ===
 error: nested-try-except-1
 error: nested-try-except-2
Hello World
 error: nested-try-except-1
Good evening World
Good evening everyone
=== Example: using inspect ===
Hello World
Good morning World
Good morning children
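On Python 3 the same idea can be written with inspect.signature, which reports only parameters and never local variables. This is a sketch of an alternative, not the code from the example above; supported_kwargs is a helper name invented here and the two classes are trimmed-down variants of V1 and V3.

```python
import inspect


def supported_kwargs(func, candidates):
    """Return only those candidate keyword arguments that `func` accepts."""
    params = inspect.signature(func).parameters
    # A **kwargs parameter means every keyword is accepted.
    if any(p.kind is inspect.Parameter.VAR_KEYWORD for p in params.values()):
        return dict(candidates)
    return {k: v for k, v in candidates.items() if k in params}


class V1(object):
    def __init__(self):
        self.message = "Hello World"


class V3(V1):
    def __init__(self, greeting="Hello", who="World"):
        V1.__init__(self)
        self.message = "%s %s" % (greeting, who)


wanted = {"greeting": "Good morning", "who": "children"}
for cls in (V1, V3):
    obj = cls(**supported_kwargs(cls.__init__, wanted))
    print(obj.message)
```

The caller lists every argument it would like to pass once, and the helper trims that list to what the installed version of the class understands.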


3 New Python Markdown extensions

I've managed to resolve several of my issues with Python-Markdown behaving not quite as I expect. I have the pleasure to announce three new extensions which now power this blog.

No Lazy BlockQuote Extension

Markdown-No-Lazy-BlockQuote-Extension makes it possible for blockquotes separated by a newline to be rendered separately. If you want to include empty lines in your blockquotes make sure to prefix each line with >. The standard behavior can be seen on GitHub while the changed behavior is visible in this article. Notice how on GitHub both quotes are rendered as one big block, while here they are two separate blocks.

No Lazy Code Extension

Markdown-No-Lazy-Code-Extension allows code blocks separated by a newline to be rendered separately. If you want to include empty lines in your code blocks make sure to indent them as well. The standard behavior can be seen on GitHub while the improved one is in this post. Notice how GitHub renders the code in the Warning Bugs Present section as one block while in reality these are two separate blocks from two different files.

Bugzilla Extension

Markdown-Bugzilla-Extension allows for easy references to bugs. Strings like [bz#123] and [rhbz#456] will be converted into links.
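To give a feel for the conversion, here is a small regex-based Python 3 sketch. It is not the extension's code: the TRACKERS mapping, the placeholder host for the bz prefix and the HTML produced are all assumptions made for this illustration.

```python
import re

# Assumed prefix -> URL template mapping; the extension's real settings may differ.
TRACKERS = {
    "bz": "https://bugzilla.example.com/show_bug.cgi?id=%s",   # placeholder host
    "rhbz": "https://bugzilla.redhat.com/show_bug.cgi?id=%s",
}

BUG_RE = re.compile(r"\[(bz|rhbz)#(\d+)\]")


def link_bugs(text):
    """Replace [bz#N] and [rhbz#N] markers with HTML links."""
    def repl(match):
        prefix, number = match.group(1), match.group(2)
        url = TRACKERS[prefix] % number
        return '<a href="%s">%s</a>' % (url, match.group(0))
    return BUG_RE.sub(repl, text)


print(link_bugs("Fixed in [rhbz#456], see also [bz#123]."))
```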

All three extensions are available on PyPI!

Bonus: Codehilite with filenames in Markdown

The standard Markdown codehilite extension doesn't allow specifying a filename on the :::python shebang line while Octopress did and I've used the syntax on this blog in a number of articles. The fix is simple, but requires changes in both Markdown and Pygments. See PR #445 for the initial version and ongoing discussion. An example of the new :::python settings.py syntax can be seen here.


Blog Migration: from Octopress to Pelican

Finally I have migrated this blog from Octopress to Pelican. I am using the clean-blog theme with modifications.

See the pelican_migration branch for technical details. Here's the summary:

- I removed pretty much everything that Octopress uses, only left the content files;

- I've added my own CSS overrides;

- I had several Octopress pages, these were merged and converted into blog posts;

- In Octopress all titles had quotes, which were removed using sed;

- Octopress categories were converted to Pelican tags and the quotes around them were removed, again using sed;

- Manually updated Octopress's {% codeblock %} and {% blockquote %} tags to match Pelican syntax. This is the biggest content change;

- I was trying to keep as many of the original URLs as possible. ARTICLE_URL, ARTICLE_SAVE_AS, TAG_URL, TAG_SAVE_AS, FEED_ALL_ATOM and TAG_FEED_ATOM are the relevant settings. For 50+ posts I had to manually specify the Slug: variable so that they match existing Octopress URLs. Verifying the resulting names was as simple as diffing the file listings from both Octopress and Pelican. NOTE: The fedora.planet tag changed its URL because there's no way to assign slugs for tags in Pelican. The new URL is missing the dot! Luckily I make use of this only in one place which was manually updated!

I've also found a few bugs and missing functionality:

- There's no rake new_post counterpart in Pelican. See Issue 1410 and commit 6552f6f. Thanks Kevin Decherf;

- As far as I can tell the preview server doesn't regenerate files automatically. Do make regenerate and make serve in two separate shells. Thanks Kevin Decherf;

- Pelican will merge code blocks and quotes which follow one after another but are separated with a newline in Markdown. This makes it visually impossible to distinguish code from two files, or quotes from two people, which are published without any other comments in between. See Markdown-No-Lazy-BlockQuote-Extension and Markdown-No-Lazy-Code-Extension;

- The syntax doesn't allow specifying a filename or a quote title when publishing code blocks and quotes. Octopress did that easily. I will be happy with something like :::python settings.py. See PR #445;

- There's no way to specify slugs for tag URLs in order to keep compatibility with existing URLs, see Issue 1873.

I will be filing issues and pull requests for both Pelican and the clean-blog theme in the next few days so stay tuned!

UPDATED 2015-11-26: added links to issues, pull requests and custom extensions.


python-libs in RHEL 7.2 broke SSL verification in s3cmd

Today started with Planet Sofia Valley being broken again. Indeed it's been broken since last Friday when I upgraded to the latest RHEL 7.2. I quickly identified that I was hitting Issue #647. Then I tried the git checkout without any luck. This is when I started to suspect that python-libs had been updated in an incompatible way.

After a series of reported bugs, RHBZ #1284916, RHBZ #1284930, Python#25722, it was clear that ssl.py was working according to RFC6125, that Amazon S3 was not playing nicely with this same RFC and that my patch proposal was wrong.

This immediately had me looking higher up the stack at httplib.py and s3cmd. Indeed there was a change in httplib.py which introduced two parameters, context and check_hostname, to HTTPSConnection.__init__. The change also supplied the logic which performs SSL hostname validation.

if not self._context.check_hostname and self._check_hostname:
    try:
        ssl.match_hostname(self.sock.getpeercert(), server_hostname)
    except Exception:
        self.sock.shutdown(socket.SHUT_RDWR)
        self.sock.close()
        raise

This looks a bit dodgy as I don't quite understand the intention behind not PREDICATE and PREDICATE. Anyway to disable the validation you need both parameters set to False, which is PR #668.

Notice the two try-except blocks. This is in case we're running with a version that has a context but not the check_hostname parameter. I've found the inspect.getmembers function which can be used to figure out what parameters are there for the __init__ method but a solution based on it doesn't appear to be more elegant. I will describe this in more detail in my next post.
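One possible reading of the condition quoted above, offered as an interpretation rather than the upstream rationale: when the SSL context has check_hostname enabled it already matches the hostname during the handshake, so httplib only calls ssl.match_hostname itself when the context does not. The Python 3 sketch below enumerates the four combinations; hostname_checked_by is a name invented for this illustration.

```python
from itertools import product


def httplib_checks_hostname(context_check_hostname, check_hostname):
    """Mirror of the quoted condition: does httplib call ssl.match_hostname?"""
    return (not context_check_hostname) and check_hostname


def hostname_checked_by(context_check_hostname, check_hostname):
    """Name the layer that validates the hostname, under the reading above."""
    if context_check_hostname:
        return "ssl context"
    if httplib_checks_hostname(context_check_hostname, check_hostname):
        return "httplib"
    return "nobody"


for ctx, arg in product([True, False], repeat=2):
    print("context.check_hostname=%-5s check_hostname=%-5s -> %s"
          % (ctx, arg, hostname_checked_by(ctx, arg)))
```

Only the combination with both values set to False leaves the hostname unchecked, which matches the need to switch off both parameters.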


GitHub Bugzilla Hook

Last month I created a tool which adds comments to Bugzilla when a commit message references a bug number. It was done as a proof of concept and didn't receive much attention at the time. Today I'm happy to announce the existence of GitHub Bugzilla Hook.

I've used David Shea's GitHub Email Hook as my starting template and only modified it where needed. GitHub Bugzilla Hook will examine push data and post comments for every unique bug+branch combination. Once a comment for that particular bug+branch combination is made, new ones will not be posted, even if later commits reference the same bug. My main assumption is that commits which are related to a bug will be pushed together most of the time so there shouldn't be lots of noise in Bugzilla.

See [rhbz#1274703] for an example of how the comments look. The parser behavior is taken from anaconda and conforms to the style the Red Hat Installer Engineering Team uses. Hopefully you find it useful as well.
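The bug+branch de-duplication can be sketched in a few lines of Python 3. This is an illustration, not the hook's code: the `#<number>` pattern is an assumption about the commit message style, the commit messages are made up, and pending_comments is a hypothetical helper. The "ref" and "commits" keys follow the layout of a GitHub push payload.

```python
import re

# Assumed pattern: bug numbers appear in commit messages as "#<digits>".
BUG_RE = re.compile(r"#(\d+)")
HEADS = "refs/heads/"


def pending_comments(push, already_commented):
    """Return the sorted (bug, branch) pairs that still need a comment."""
    ref = push["ref"]
    branch = ref[len(HEADS):] if ref.startswith(HEADS) else ref
    found = set()
    for commit in push["commits"]:
        for bug in BUG_RE.findall(commit["message"]):
            found.add((bug, branch))
    return sorted(found - set(already_commented))


push = {
    "ref": "refs/heads/master",
    "commits": [
        {"message": "Fix the partitioning crash (#1274703)"},
        {"message": "Add a test for the crash (#1274703)"},
        {"message": "Unrelated cleanup"},
    ],
}

posted = set()
todo = pending_comments(push, posted)
print(todo)                               # one comment despite two commits
posted.update(todo)
print(pending_comments(push, posted))     # nothing left for a later push
```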

My next step is to find a hosting place for this script and hook it up with the rhinstaller GitHub repos!

