Call to Action: Improving Overall Test Coverage in Fedora

Around Christmas 2013 I said

... it looks like on average 30% of the packages execute their test suites at build time in the %check section and less than 35% have test suites at all! There’s definitely room for improvement and I plan to focus on this during 2014!

I've recently started working on this goal by first identifying potential offending packages and discussing the idea on Fedora's devel, packaging and test mailing lists.

May I present to you nearly 2000 packages which need your love:

The intent for these pages is to serve as a source of working material for Fedora volunteers.
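If you want a rough first pass over spec files you already have checked out locally, here is a minimal sketch. It is a simple heuristic and not the tool used to build the lists above. It assumes the spec files sit under one directory and flags any whose text has no line starting with `%check`. Spec files that define `%check` through macros or conditional includes will be misreported. The helper names are hypothetical.

```python
import re
import sys
from pathlib import Path

# A %check section header at the start of a line. "\b" rejects things
# like "%checkout"; a commented "# %check" does not match because of "^".
CHECK_SECTION = re.compile(r"^%check\b", re.MULTILINE)


def has_check_section(spec_text):
    """Return True if the spec text contains a %check section header."""
    return bool(CHECK_SECTION.search(spec_text))


def packages_without_check(spec_dir):
    """Yield the base names of *.spec files under spec_dir lacking %check."""
    for spec in sorted(Path(spec_dir).rglob("*.spec")):
        text = spec.read_text(encoding="utf-8", errors="replace")
        if not has_check_section(text):
            yield spec.stem


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_no_check.py ~/fedora-specs/
    for name in packages_without_check(sys.argv[1]):
        print(name)
```

Treat any match as a candidate only. A package with an empty or commented-out `%check` still needs a human look.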

How Can I Help

- Join upstream and create a test suite for a package you find interesting;

- Provide patches - the first patch came in less than 30 minutes after the initial announcement :);

- Review packages in the wiki and help identify false negatives;

- Forward this to people who may be interested in working on these items;

- Share and promote this in your local open source and developer communities.

Important

If you would like to gain some open source practice and QA experience I will happily provide mentorship and general help so you can start working on Fedora. Just ping me!


Mocking Django AUTH_PROFILE_MODULE without a Database

Difio is a Django based service which uses a profile model to provide site-specific, per-user information.

In the process of open sourcing Difio, its core functionality becomes available as a Django app. The trouble is that the UserProfile model contains site-specific and proprietary data which doesn't make sense to the public, nor do I want to release it.

The solution is to have a MockProfile model and work with that by default, while www.dif.io and other implementations override it as needed.

How do you do that without creating useless tables and records in the database, but still have profiles created automatically for every user?

It turns out the solution is quite simple. See my comments inside the code below.

from django.db import models
from django.contrib.auth.models import User

class AbstractMockProfile(models.Model):
    """
        Any AUTH_PROFILE_MODULE model should inherit this
        and override the default methods.

        This model provides the FK to User!
    """
    user = models.ForeignKey(User, unique=True)

    def is_subscribed(self):
        """ Is this user subscribed for our newsletter? """
        return True

    class Meta:
        # no DB table created b/c model is abstract
        abstract = True

class MockProfileManager(models.Manager):
    """
        This manager creates MockProfile's on the fly without
        touching the database. It is needed by User.get_profile()
        b/c we can't have an abstract base class as AUTH_PROFILE_MODULE.
    """
    def using(self, *args, **kwargs):
        """ It doesn't matter which database we use! """
        return self

    def get(self, *args, **kwargs):
        """
            User.get_profile() calls .using(...).get(user_id__exact=X)
            so we instrument it here to return a MockProfile() with
            user_id=X parameter. Anything else may break!!!
        """
        params = {}
        for p in kwargs.keys():
            # strip the lookup suffix, e.g. user_id__exact -> user_id
            params[p.split("__")[0]] = kwargs[p]

        # this creates an object in memory. To save it to DB
        # call obj.save() which we DON'T do anyway!
        # Unpack params as keyword arguments: a positional dict would
        # be assigned to the first model field (id), not to user_id.
        return MockProfile(**params)

class MockProfile(AbstractMockProfile):
    """
        In-memory (fake) profile class used by default for
        the AUTH_PROFILE_MODULE setting.
    """
    objects = MockProfileManager()

    class Meta:
        # DB table is NOT created automatically
        # when managed = False
        managed = False

In Difio core the user profile is always used like this:

profile = request.user.get_profile()
if profile.is_subscribed():
    pass

and by default:

AUTH_PROFILE_MODULE = "difio.MockProfile"

Voila!
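To see the trick without Django, here is a framework-free sketch of the same manager pattern. `InMemoryManager` and `FakeProfile` are hypothetical stand-ins, not Django classes. The sketch mirrors two behaviours of the code above. First, `using()` returns the manager itself. Second, `get()` strips lookup suffixes such as `__exact` and builds the object in memory instead of querying a database.

```python
class InMemoryManager:
    """Mimics the two manager calls that User.get_profile() relies on."""

    def __init__(self, model):
        self.model = model

    def using(self, *args, **kwargs):
        # Which database is irrelevant: nothing is ever queried.
        return self

    def get(self, *args, **lookups):
        # Strip Django-style lookup suffixes: user_id__exact -> user_id
        fields = {key.split("__")[0]: value for key, value in lookups.items()}
        # Build the object in memory; it is never saved anywhere.
        return self.model(**fields)


class FakeProfile:
    """Stand-in for MockProfile: default answers, no storage."""

    def __init__(self, user_id=None):
        self.user_id = user_id

    def is_subscribed(self):
        return True


FakeProfile.objects = InMemoryManager(FakeProfile)

profile = FakeProfile.objects.using("default").get(user_id__exact=42)
print(profile.user_id, profile.is_subscribed())
```

The key design point is unpacking the parsed lookups with `**`. Django model constructors map positional arguments to fields in declaration order, so keyword arguments are the safe way to set `user_id`.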


Skip or Render Specific Blocks from Jinja2 Templates

I wasn't able to find detailed information on how to skip rendering or only render specific blocks from Jinja2 templates, so here's my solution. Hopefully you find it useful too.

With the template below I want to be able to render only the kernel_options block as a single line and then render the rest of the template excluding kernel_options.

{% block kernel_options %}
console=tty0
{% block debug %}
debug=1
{% endblock %}
{% endblock kernel_options %}

{% if OS_MAJOR == 5 %}
key --skip
{% endif %}

%packages
@base
{% if OS_MAJOR > 5 %}
%end
{% endif %}

To render a particular block you have to use the low-level Jinja API template.blocks. This returns a dict of block rendering functions, each of which needs a Context to work with.

The second part is trickier. To remove a block we have to create an extension which will filter it out. The provided SkipBlockExtension class does exactly this.

Last but not least - if you'd like to use both together you have to disable caching in the Environment (so you get a fresh template every time), render your blocks first, configure env.skip_blocks and render the entire template without the specified blocks.

#!/usr/bin/env python

import os
import sys
from jinja2.ext import Extension
from jinja2 import Environment, FileSystemLoader


class SkipBlockExtension(Extension):
    def __init__(self, environment):
        super(SkipBlockExtension, self).__init__(environment)
        # names of the blocks to drop from the token stream
        environment.extend(skip_blocks=[])

    def filter_stream(self, stream):
        block_level = 0     # current {% block %} nesting depth
        skip_level = 0      # depth of the block being skipped, 0 = none
        in_endblock = False

        for token in stream:
            # while iterating, stream.current is the token AFTER `token`
            if token.type == 'block_begin':
                if stream.current.value == 'block':
                    block_level += 1
                    if stream.look().value in self.environment.skip_blocks:
                        skip_level = block_level

            # only a `name` token opens an endblock tag, not plain text
            if token.type == 'name' and token.value == 'endblock':
                in_endblock = True

            if skip_level == 0:
                yield token

            if token.type == 'block_end':
                if in_endblock:
                    in_endblock = False
                    block_level -= 1

                    # we just closed the skipped block, resume output
                    if skip_level == block_level + 1:
                        skip_level = 0


if __name__ == "__main__":
    context = {'OS_MAJOR': 5, 'ARCH': 'x86_64'}

    abs_path = os.path.abspath(sys.argv[1])
    dir_name = os.path.dirname(abs_path)
    base_name = os.path.basename(abs_path)

    env = Environment(
        loader=FileSystemLoader(dir_name),
        extensions=[SkipBlockExtension],
        cache_size=0,  # disable cache b/c we do 2 get_template()
    )

    # first render only the block we want
    template = env.get_template(base_name)
    lines = []
    for line in template.blocks['kernel_options'](template.new_context(context)):
        lines.append(line.strip())

    print("Boot Args: " + " ".join(lines))
    print("---------------------------")

    # now instruct SkipBlockExtension which blocks we don't want
    # and get a new instance of the template with these blocks removed
    env.skip_blocks.append('kernel_options')
    template = env.get_template(base_name)
    print(template.render(context))
    print("---------------------------")

The above code results in the following output:

$ ./jinja2-render ./base.j2
Boot Args: console=tty0 debug=1
---------------------------
key --skip
%packages
@base
---------------------------
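If you only need the first half (rendering a single block), a small reusable helper may be handier. This is a minimal sketch assuming Jinja2 is installed. It uses an in-memory `DictLoader` and a trimmed-down inline version of the template instead of a file. The `render_block` name is hypothetical. It relies only on `template.blocks` and `template.new_context()`, the same low-level calls as the script above.

```python
from jinja2 import DictLoader, Environment


def render_block(env, template_name, block_name, variables):
    """Render a single named block of a template, ignoring the rest."""
    template = env.get_template(template_name)
    # template.blocks maps block names to render functions; each takes
    # a Context and yields chunks of output text (nested blocks included).
    block_func = template.blocks[block_name]
    context = template.new_context(variables)
    return "".join(block_func(context))


env = Environment(loader=DictLoader({
    "base.j2": (
        "{% block kernel_options %}console=tty0 "
        "{% block debug %}debug={{ DEBUG }}{% endblock %}"
        "{% endblock kernel_options %}\n"
        "%packages\n@base\n"
    ),
}))

print(render_block(env, "base.j2", "kernel_options", {"DEBUG": 1}))
```

Skipping blocks in the full render still needs the filter_stream extension. This helper only covers the "render one block" part.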

Teaser: this is part of my effort to replace SNAKE with a client side kickstart template engine for Beaker so stay tuned!


7 Years and 1400 Bugs Later as Red Hat QA

Today I celebrate my 7th year working at Red Hat's Quality Engineering department. Here's my story!

On a cold winter Friday in 2007 I left my job as a software developer in Sofia, packed my stuff together, purchased my first laptop and on Sunday jumped on the train to Brno to join the Release Test Team at Red Hat. Little did I know what it was all about. When I was offered the position I was on a very noisy bus and had to pick between two positions. I didn't quite understand what the options were and just picked the second one. Luckily everything turned out great and continues to this day.

I'm sharing my experience and highlighting some bugs which I've found. Hopefully you will find this interesting and amusing. If you are a QA engineer I urge you to take a look at my public bug portfolio, dive into details, read the comments and learn as much as you can.

What do I do exactly

Of all QE teams at Red Hat, the Release Test Team is the first and the last one to test a release. The team has both a technical function and a more managerial one. Our focus is on the core Red Hat Enterprise Linux product. Unfortunately I can't go into much detail because this is not a public facing unit. I will limit myself to public and/or non-sensitive information.

We are the first to test a new nightly build or a snapshot of the upcoming RHEL release. If the tree is installable, other teams take over and do their magic. At the end, when bits are published live, we're the last to verify that content is published where it is expected to be. In short, this covers the work of the release engineering team, which is to build a product and publish its contents for consumption.

The same principles apply to Fedora although the engagement here is less demanding.

Personally I have been and continue to be responsible for the Red Hat Enterprise Linux 5 family of releases. It's up to me to give the go-ahead for further testing or request a re-spin. This position also has the power to block and delay the GA release until things are sorted out, if testing is not satisfactory or there is a considerable risk of failure.

Like in other QA teams I create test plan documents, write test case scenarios, implement test automation scripts (and sometimes tools), regularly execute said test plans and test cases, find and report any new bugs and verify old ones are fixed. Most importantly, I make sure RHEL installs and is usable for further testing :).

Sometimes I have to deal with capacity planning and as RHEL 5 installation test lead I have to organize and manage the entire installation testing campaign for that product.

My favorite testing technique is exploratory testing.

Stats and Numbers

It is hard (if not impossible) to measure QA work with numbers alone but here are some interesting facts about my experience so far.

- Nearly 1400 bugs filed (1390 at the time of writing);

- Reported bugs across 32 different products. Top 3 being RHEL 6, RHEL 5 and Fedora (1000+ bugs);

- Top 3 components for reporting bugs against: anaconda, releng, kernel;

- Nearly 100 bugs filed in my first year 2007;

- The 3 most productive years being 2010, 2009, 2011 (800 + bugs);

- Filed 200 bugs/year which is about 1 bug/day considering holidays;

- 35th top bug reporter (excluding robot accounts). I was in the top 10 a few years back;

Many of the bugs I report are private so if you'd like to know more stats just ask me and I'll see what I can do.

2007

My very first bug is RHBZ #231860 (private), which is about the graphical update tool Pup, which used to show the wrong number of available updates.

Then I played with adding Dogtail support to Anaconda. While initially this was rejected (Fedora 6/7), it was implemented a few years later (Fedora 9) and then removed once again during the big Anaconda rewrite.

I spent my time working extensively on RHEL 5, battling with multi-lib issues and SELinux denials and generally making the 5 family less rough. Because I was still on-boarding I generally worked on everything I could get my hands on and also did some work on RHEL3-U9 (the last release before EOL) and some RHEL4-U6 testing.

With ia64 on RHEL3 I found a corner case kernel bug which flooded the serial console with messages and caused a multi-CPU system to freeze.

In 2008 Time went backwards

My first bug in 2008 is RHBZ #428280. glibc introduced SHA-256/512 hashes for hashing passwords with crypt but that wasn't documented.

UPDATE 2014-02-21 While testing 5.1 to 5.2 updates I found RHBZ #435475 - a severe performance degradation in the package installation process. Upgrades took almost twice as much time to complete, rising from 4 hours to 7 hours depending on hardware and package set. This was a tough one to test and verify. END UPDATE

While dogfooding the 5.2 beta in March I hit RHBZ #437252 - kernel: Timer ISR/0: Time went backwards. To this date this is one of my favorite bugs with a great error message!

Removal of a hack in RPM led to file conflicts under /usr/share/doc in several packages:

RHBZ #448905,

RHBZ #448906,

RHBZ #448907,

RHBZ #448909,

RHBZ #448910,

RHBZ #448911

which is also the first time I happened to file several bugs in a row.

ia64 couldn't boot with encrypted partitions - RHBZ #464769, RHEL 5 introduced support for ext4 - RHBZ #465248, and I hit a fontconfig issue during upgrades - RHBZ #469190, which continued to resurface occasionally during the next 5 years.

This is the year when I took over responsibility for the general installation testing of RHEL 5 from James Laska and will continue to do so until it reaches end-of-life!

I've also worked on RHEL 4, Fedora and even the OLPC project. On the testing side of things I've participated in testing Fedora networking on the XO hardware and worked on translation and general issues.

2009 - here comes RHEL 6

This year starts my three most productive years.

The second bug reported this year is RHBZ #481338, which also mentions one of my hobbies - wrist watches. While browsing a particular website Xorg CPU usage rose to 100%. I've seen a number of these through the years and I'm still not sure if it's Xorg or Firefox or both to blame. And I still see my CPU usage go to 100% just like that and drain my battery. I'm open to suggestions on how to test and debug what's going on, as it doesn't happen in a reproducible fashion.

I happened to work on RHEL 4, RHEL 5, Fedora and the upcoming RHEL 6 releases and managed to file bugs in a row not once but twice. I wish I was paid per bug reported back then :).

The first series was about empty debuginfo packages, covering both empty packages which shouldn't have existed at all (e.g. redhat-release) and missing debuginfo information for binary packages (e.g. nmap).

The second series is around 100 bugs which had to do with the texinfo documentation of packages when installed with --excludedocs. The first one is RHBZ #515909 and the last one RHBZ #516014. While this works great for bumping up your bug count it made lots of developers unhappy and not all bugs were fixed. Still the use case is valid and these were proper software errors. It is also the first time I've used a script to file the bugs automatically and not by hand.

Near the end of the year I started testing installation on new hardware from the likes of Intel and AMD before it hit the market. I had the pleasure to work with the latest chipsets and CPUs, sometimes even pre-release versions, and make sure Red Hat Enterprise Linux installed and worked properly on them. I stopped doing this last year to free up time for other tasks.

2010 - one bug a day keeps developers at bay :)

My most productive year with 1+ bugs per day.

2010 starts with a bug about file conflicts (private one) and continues with the same narrative throughout the year. As a matter of fact I did a small experiment and found around 50000 (you read that right, fifty thousand) potentially conflicting files, mostly between multi-lib packages, which were being ignored by RPM due to its multi-lib policies. However these were primarily man pages or documentation and most of them didn't get fixed. The proper fix would have been to introduce a -docs sub-package and split these files from the actual binaries. Fortunately the world migrated to 64bit only and this isn't an issue anymore.

By that time RHEL 6 development was running at its peak capacity and there were Beta versions available. Almost the entire year I worked on internal RHEL 6 snapshots, discovering the many new bugs introduced with tons of new features in the installer. Some of the new features included better IPv6 support, dracut and KVM.

An interesting set of bugs from September are the rpmlint errors and warnings, for example RHBZ #634931. I just ran the most basic test tool against some packages. It generated lots of false negatives but also revealed bugs which were fixed.

Although there were many bugs filed this year I don't see any particularly interesting ones. It's been more like lots of work to improve the overall quality than exploring edge cases and finding interesting failures. If you find a bug from this period that you think is interesting I will comment on it.

2011 - Your system may be seriously compromised

This is the last year of my 3 year top cycle.

It starts with RHBZ #666687 - a patch for my crappy printer-scanner-coffee maker which I've been carrying around since 2009 when I bought it.

I was still working primarily on RHEL 6 but helped test the latest RHEL 4 release before it went end-of-life. The interesting thing about it was that, unlike other releases, RHEL4-U9 was not available on installation media but only as an update from RHEL4-U8. This was a great experience, the kind you only get every 4 to 5 years or so.

Btw I've also led the installation testing effort and RTT team through the last few RHEL 4 releases but given the product was approaching EOL there weren't many changes and things went smoothly.

A minor side activity was me playing around with USB Multi-seat and finding a few bugs here and there along the way.

Another interesting activity in 2011 was proof-reading the entire product documentation before its release which I can now relate to the Testing Documentation talk at FOSDEM 2014.

In 2011 I've started using the cloud and most notably Red Hat's OpenShift PaaS service. First internally as an early adopter and later externally after the product was announced to the public. There are a few interesting bugs here but they are private and I'm not at liberty to share although they've all been fixed since then.

An interesting bug with NUMA, Xen and ia64 (RHBZ #696599 - private) had me and devel banging our heads against the wall until we figured out that on this particular system the NUMA configuration was not suitable for running Xen virtualization.

Can you spot the problem here ?

try:
    import kickstartGui
except:
    print (_("Could not open display because no X server is running."))
    print (_("Try running 'system-config-kickstart --help' for a list of options."))
    sys.exit(0)

Be honest and use the comments form to tell me what you've found. If you struggled then see RHBZ #703085 and come back again to comment. I'd love to hear from you.

What do you do when you see an error message saying: Your system may be seriously compromised! /usr/sbin/NetworkManager tried to load a kernel module. This is the scariest error message I've ever seen. Luckily it's just SELinux overreacting, see RHBZ #704090.

2012 is in the red zone

While the number of reported bugs dropped significantly compared to previous years this is the year when I've reported almost exclusively high priority and urgent bugs, the first one being RHBZ #771901.

RHBZ #799384 (against Fedora) is one of the rare cases when I was able to contribute (although just by raising awareness) to localization and improved support for Bulgarian and Cyrillic. The other case was last year. Btw I find it strange that although Cyrillic was invented by Bulgarians we didn't (or still don't) have a native font co-maintainer. Somebody please step up!

The red zone bugs continue to span till the end of the year across RHEL 5, 6 and early cuts of RHEL 7 with a pinch of OpenShift and some internal and external test tools.

In 2013 Bugzilla hit 1 million bugs

The year starts with a very annoying and still not fixed bug against ABRT. It's very frustrating when the tool which is supposed to help you file bugs doesn't work properly, see RHBZ #903591. It's a known fact that ABRT has problems and for this scenario I may have a tip for you.

RHBZ #923416 - another one of these 100% CPU bugs. As I said they happen from time to time and mostly go by unfixed or partially fixed because of their nature. Btw as I'm writing this post and have a few tabs open in Firefox it keeps using between 15% and 20% CPU and the CPU temperature is over 90 degrees C. And all I'm doing is writing text in the console. Help!

RHBZ #967229 - a minor one but reveals an important thing - your output (and input for that matter) methods may be producing different results. Worth testing if your software supports more than one.

This year I did some odd jobs working on several of Red Hat's layered products mainly Developer Toolset. It wasn't a tough job and was a refreshing break away from the mundane installation testing.

While I stopped working actively on the various RHEL families which are under development or still supported I happened to be one of top 10 bug reporters for high/urgent priority bugs for RHEL 7. In appreciation Red Hat sent me lots of corporate gifts and the Platform QE hoodie pictured at the top of the page. Many thanks!

In the summer Red Hat's Bugzilla hit One Million bugs. The closest I came to this milestone is RHBZ #999941.

I finally managed to transfer most of my responsibilities to co-workers and joined the Fedora QA team as a part-time contributor. I had some highs and lows with Fedora test days in Sofia as well. Good thing is I scored another 15 bugs across the virtualization stack and GNOME 3.10.

The year wraps up with another series of identical bugs, RHBZ #1024729 and RHBZ #1025289 for example. As it turned out lots of packages don't have any test suites at all and those which do don't always execute them automatically in %check. I've promised myself to improve this but still haven't had time to work on it. Hopefully by March I will have something in the works.

2014 - Fedora QA improvement

For the last two months I've been working on some internal projects and looking a little bit into improving processes, test coverage and QA infrastructure - RHBZ #1064895. And Rawhide (upcoming Fedora 21) isn't behaving - RHBZ #1063245.

My goal for this year is to do more work on improving the overall test coverage of Fedora and together with the Fedora QA team bring an open testing infrastructure to the community.

Let's see how well that plays out!

What do I do now

During the last year I have gradually changed my responsibilities to work more on Fedora. As a volunteer in Fedora QA I regularly test installation of Rawhide trees and try to work closely with the community. I still have to manage RHEL 5 test cycles, where I don't expect anything disruptive at this stage in the product life-cycle!

I'm open to any ideas and help which can improve test coverage and quality of software in Fedora. If you're just joining the open source world this is an excellent opportunity to do some good, get noticed and even maybe get a job. I will definitely help you get through the process if you're willing to commit your time to this.

I hope this long post has been useful and fun to read. Please use the comments form to tell me if I'm missing something or you'd like to know more.

Looking forward to the next 7 years!


Moving /tmp from EBS to Instance Storage

I've seen a fair number of stories about moving away from Amazon's EBS volumes to ephemeral instance storage. I've decided to give it a try, starting with the /tmp directory where Difio operates.

It should be noted that although instance storage may be available for some instance types it may not be attached by default. Use this command to check:

$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
root
swap

In the above example there is no instance storage present.

You can attach one either when launching the EC2 instance or when creating a customized AMI (instance storage devices are pre-defined in the AMI). When creating an AMI you can attach more ephemeral devices than an instance type supports, but the extra ones will not be available when the instance is launched. The maximum number of available instance storage devices for each instance type can be found in the docs. That is to say, if you have an AMI which defines 2 ephemeral devices and launch a standard m1.small instance, there will be only one ephemeral device present.

Also note that for M3 instances, you must specify instance store volumes in the block device mapping for the instance. When you launch an M3 instance, Amazon ignores any instance store volumes specified in the block device mapping for the AMI.

As far as I can see the AWS Console doesn't indicate whether instance storage is attached or not. Here is the output for an instance with 1 ephemeral volume:

$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
ephemeral0
root
swap
$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/ephemeral0
sdb

Ephemeral devices can be mounted under /media/ephemeralX/, but not all volumes are. I've found that usually only ephemeral0 is mounted automatically:

$ curl http://169.254.169.254/latest/meta-data/block-device-mapping/
ami
ephemeral0
ephemeral1
root
$ ls -l /media/
drwxr-xr-x 3 root root 4096 21 ное 2009 ephemeral0

For Difio I have an init.d script which executes when the system boots. To enable /tmp on ephemeral storage I just added the following snippet:

echo $"Mounting /tmp on ephemeral storage:"
for ef in `curl http://169.254.169.254/latest/meta-data/block-device-mapping/ 2>/dev/null | grep ephemeral`; do
    disk=`curl http://169.254.169.254/latest/meta-data/block-device-mapping/$ef 2>/dev/null`
    echo $"Unmounting /dev/$disk"
    umount /dev/$disk
    echo $"mkfs /dev/$disk"
    mkfs.ext4 -q /dev/$disk
    echo $"Mounting /dev/$disk"
    mount -t ext4 /dev/$disk /tmp && chmod 1777 /tmp && success || failure
done

NB: success and failure are from /etc/rc.d/init.d/functions.

If you are using LVM or RAID you need to reconstruct your block devices accordingly!
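For reference, the metadata lookups that the init.d snippet performs with curl can also be sketched in Python. This is a hedged sketch that assumes the IMDSv1 style of plain GET requests shown above. Instances that enforce IMDSv2 need a session token first, which this sketch does not handle. The function names are hypothetical. The parsing helpers can be exercised anywhere; `ephemeral_devices()` only works from inside an EC2 instance.

```python
import urllib.request

# The same metadata endpoint the curl commands above query.
METADATA_URL = "http://169.254.169.254/latest/meta-data/block-device-mapping/"


def ephemeral_names(listing):
    """Pick the ephemeralN entries out of a block-device-mapping listing."""
    return [line.strip() for line in listing.splitlines()
            if line.strip().startswith("ephemeral")]


def device_path(device_name):
    """Turn a metadata device name such as 'sdb' into '/dev/sdb'."""
    name = device_name.strip()
    return name if name.startswith("/dev/") else "/dev/" + name


def fetch(path="", timeout=2):
    """GET a metadata path (IMDSv1 style, no session token)."""
    with urllib.request.urlopen(METADATA_URL + path, timeout=timeout) as resp:
        return resp.read().decode()


def ephemeral_devices():
    """Map each ephemeral volume name to its /dev path, e.g. {'ephemeral0': '/dev/sdb'}."""
    return {name: device_path(fetch(name)) for name in ephemeral_names(fetch())}
```

Like the shell version, this only discovers the devices. Unmounting, formatting and mounting them on /tmp stays in the init script.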

If everything goes right I should be able to reduce my AWS costs by saving on provisioned storage and I/O requests. I'll keep you posted on this after a month or two.


Articles of The Week: Git Submodules and Stopping SPAM Without CAPTCHA

Here are two articles which I found very useful and interesting this week:

Git Submodules: Adding, Using, Removing, Updating is a clear description of working with git submodules which I had to do recently. There's a huge comments section with more useful info as well.

Stopping spambots with hashes and honeypots is a very interesting read about non-CAPTCHA techniques to stop SPAM bots from filling in forms and comments on your site. I didn't even know these existed, but I have to admit this is not my area of expertise either. The author just scratches the surface of the topic and there are other methods described in the comments as well. Make sure to read this if you are building forms or sites with comments.


Tip: How to Build updates.img for Fedora

Anaconda, the Fedora, CentOS and Red Hat Enterprise Linux installer, has the capability to incorporate updates at runtime.

These updates are generally distributed as an updates.img file. Here is how to easily build one from a working installation tree.

Instead of using the git sources to build an updates.img I prefer using the SRPM from the tree which I am installing. This way the resulting updates image will be more consistent with the anaconda version already available in the tree. And in theory everything you need to build it should already be available as well.

UPDATE 2014-02-08: You can also build the updates.img from the git source tree, as shown at the bottom of this article.

The following steps work for me on a Fedora 20 system.

- Download the source RPM for anaconda from the tree and extract the sources to a working directory. Then:

cd anaconda-20.25.16-1
git init
git add .
git commit -m "initial import"
git tag anaconda-20.25.16-1

The above steps will create a local git repository and tag the initial contents before modification. The tag is required later by the script which creates the updates image;

- After making your changes commit them and from the top anaconda directory execute:

./scripts/makeupdates -t anaconda-20.25.16-1

You can also add RPM contents to the updates.img but you need to download the packages first:

yumdownloader python-coverage python-setuptools
./scripts/makeupdates -t anaconda-20.25.16-1 -a ~/python-coverage-3.7-1.fc20.x86_64.rpm -a ~/python-setuptools-1.4.2-1.fc20.noarch.rpm
BUILDDIR /home/atodorov/anaconda-20.25.16-1
Including anaconda
2 RPMs added manually:
python-setuptools-1.4.2-1.fc20.noarch.rpm
python-coverage-3.7-1.fc20.x86_64.rpm
cd /home/atodorov/anaconda-20.25.16-1/updates && rpm2cpio /home/atodorov/python-setuptools-1.4.2-1.fc20.noarch.rpm | cpio -dium
3534 blocks
cd /home/atodorov/anaconda-20.25.16-1/updates && rpm2cpio /home/atodorov/python-coverage-3.7-1.fc20.x86_64.rpm | cpio -dium
1214 blocks
<stdin> to <stdout> 4831 blocks
updates.img ready

In the above example I have only modified the top level anaconda file (/usr/sbin/anaconda inside the installation environment), experimenting with python-coverage integration.

You are done! Make the updates.img available to Anaconda and start using it!

UPDATE 2014-02-08: If you prefer working with the anaconda source tree here's how to do it:

git clone git://git.fedorahosted.org/git/anaconda.git
cd anaconda/
git checkout anaconda-20.25.16-1 -b my_feature-branch
... make changes ...
git commit -a -m "Fixed something"
./scripts/makeupdates -t anaconda-20.25.16-1


AWS Tip: Shrinking EBS Root Volume Size

Amazon's Elastic Block Store volumes are easy to use and expand but notoriously hard to shrink once their size has grown. Here is my tip for shrinking EBS size and saving some money from over-provisioned storage. I'm assuming that you want to shrink the root volume which is on EBS.

- Write down the block device name for the root volume (/dev/sda1) - from AWS console: Instances; Select instance; Look at Details tab; See Root device or Block devices;

- Write down the availability zone of your instance - from AWS console: Instances; column Availability Zone;

- Stop instance;

- Create snapshot of the root volume;

- From the snapshot, create a second volume, in the same availability zone as your instance (you will have to attach it later). This will be your pristine source;

- Create a new empty EBS volume (not based on a snapshot) with a smaller size in the same availability zone - from AWS console: Volumes; Create Volume; Snapshot == No Snapshot; IMPORTANT - the size should be large enough to hold all the files from the source file system (try df -h on the source first);

- Attach both volumes to the instance while taking note of the block device names you assign to them in the AWS console;

For example, in my case /dev/sdc1 is the source snapshot and /dev/sdd1 is the empty target.

- Start instance;

- Optionally check the source file system with e2fsck -f /dev/sdc1;

- Create a file system for the empty volume - mkfs.ext4 /dev/sdd1;

- Mount the volumes at /source and /target respectively;

- Now sync the files: rsync -aHAXxSP /source/ /target. Note the trailing slash (/) after /source. If you omit it you will end up with files inside /target/source/, which you don't want;

- Quickly verify the new directory structure with ls -l /target;

- Unmount /target;

- Optionally check the new file system for consistency with e2fsck -f /dev/sdd1;

- IMPORTANT - check how /boot/grub/grub.conf specifies the root volume - by UUID, by LABEL, by device name, etc. You will have to duplicate the same for the new smaller volume or update /target/boot/grub/grub.conf to match the new volume. Check /target/etc/fstab as well!

In my case I had to run e2label /dev/sdd1 / because both grub.conf and fstab were using the device label.

- Shutdown the instance;

- Detach all volumes;

- IMPORTANT - attach the new smaller volume to the instance using the same block device name from the first step (e.g. /dev/sda1);

- Start the instance and verify it is working correctly;

- DELETE auxiliary volumes and snapshots so they don't take space and accumulate costs!
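The "size should be large enough" step is the one that is easy to get wrong. Below is a minimal sketch that turns the used space of the mounted source into a whole-GiB volume size, instead of eyeballing `df -h`. The 20% default headroom is an assumption on my part, meant to leave room for ext4 metadata, reserved blocks and future growth. Pick your own margin. The function names are hypothetical.

```python
import math
import shutil

GIB = 1024 ** 3


def min_volume_gib(used_bytes, headroom=0.2):
    """Smallest whole-GiB size that fits used_bytes plus a safety margin."""
    if used_bytes < 0 or headroom < 0:
        raise ValueError("used_bytes and headroom must be non-negative")
    return max(1, math.ceil(used_bytes * (1 + headroom) / GIB))


def suggest_size(mount_point="/", headroom=0.2):
    """Suggest a target EBS size (GiB) from the space used at mount_point."""
    usage = shutil.disk_usage(mount_point)
    return min_volume_gib(usage.used, headroom)
```

Run it against /source once the snapshot copy is mounted, for example `suggest_size("/source")`, and use the result when creating the new empty volume.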


FOSDEM 2014 Report - Day #2 Testing and Automation

FOSDEM was hosting the Testing and automation devroom for the second year and this was the very reason I attended the conference. I managed to get in early and stayed until 15:00 when I had to leave to catch my flight (which was late :().

There were 3 talks given by Red Hat employees in the testing devroom, which was a nice opportunity to meet some of the folks I've been working with on IRC. Unfortunately I didn't meet anyone from Fedora QA. Not sure if they were attending or not.

All the talks were interesting, so see the official schedule and videos for more details. I will highlight only the items I saw as particularly interesting or had not heard of before.

ANSTE

ANSTE - Advanced Network Service Testing Environment is a test infrastructure controller, something like our own Beaker but designed to create complex networking environments. I think it lacks many of the provisioning features built into Beaker, as well as integration with various hypervisors and bare-metal provisioning. What it seems to do better (as far as I can tell from the talk) is deploy virtual systems and create more complex network configurations between them. Not something I will need in the near future, but definitely worth a look.

cwrap

cwrap is...

a set of tools to create a fully isolated network environment to test client/server components on a single host. It provides synthetic account information, hostname resolution and support for privilege separation. The heart of cwrap consists of three libraries you can preload to any executable.

That was the coolest technology I've seen so far, although I may not need to use it at all. Hmm, maybe testing DHCP fits the case.

It evolved from the Samba project and takes advantage of the order in which libraries are searched when resolving functions. When you preload the project libraries to any executable they will override standard libc functions for working with sockets, user accounts and privilege escalation.

The socket_wrapper library redirects networking sockets through local UNIX sockets and gives you the ability to test applications which need privileged ports with a local developer account.

The nss_wrapper library provides artificial information for user and group accounts, network name resolution using a hosts file and loading and testing of NSS modules.

The uid_wrapper library allows uid switching as a normal user (e.g. fake root) and supports user/group changing in the local thread using the syscalls (like glibc).

All of these wrapper libraries are controlled via environment variables and definitely make testing of daemons and networking applications easier.
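To make the preloading idea concrete, here is a minimal sketch of how a test harness might build such an environment from Python. The variable names below are the ones I understand the cwrap libraries to read, but double-check them against the cwrap documentation for your version; the library directory, the helper names cwrap_env and run_wrapped, and the default interface value are assumptions for illustration. The socket directory and the fake passwd/group/hosts files have to be created by the test beforehand.

```python
import os
import subprocess

# Assumption: where the wrapper libraries are installed; this varies by distro.
WRAPPER_LIBDIR = "/usr/lib64"
WRAPPER_LIBS = ("libsocket_wrapper.so", "libnss_wrapper.so", "libuid_wrapper.so")


def cwrap_env(socket_dir, passwd_file, group_file, hosts_file,
              fake_root=True, base_env=None):
    """Build an environment that preloads the cwrap libraries."""
    env = dict(os.environ if base_env is None else base_env)
    # Note: this replaces any LD_PRELOAD already present in base_env.
    env["LD_PRELOAD"] = ":".join(os.path.join(WRAPPER_LIBDIR, lib) for lib in WRAPPER_LIBS)
    # socket_wrapper: route sockets through UNIX sockets in this directory
    env["SOCKET_WRAPPER_DIR"] = socket_dir
    env["SOCKET_WRAPPER_DEFAULT_IFACE"] = "10"
    # nss_wrapper: fake passwd/group databases and host name resolution
    env["NSS_WRAPPER_PASSWD"] = passwd_file
    env["NSS_WRAPPER_GROUP"] = group_file
    env["NSS_WRAPPER_HOSTS"] = hosts_file
    if fake_root:
        # uid_wrapper: let a normal user appear to be root
        env["UID_WRAPPER"] = "1"
        env["UID_WRAPPER_ROOT"] = "1"
    return env


def run_wrapped(argv, env):
    """Run a daemon or client under the wrapped environment."""
    return subprocess.run(argv, env=env, check=True)
```

Because everything is passed through the environment, the same server binary can be started unmodified, e.g. run_wrapped(["./my-daemon", "--port", "53"], env) with a hypothetical daemon binding a privileged port as a normal user.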

Testing Documentation

That one was just scratching the surface of an entire branch of testing which I had not even considered before. The talk also explained why it is hard to test documentation and what possible solutions there are.

If you write user guides and technical articles which need to stay current with the software this is definitely the place to start.

Automation in the Foreman Infrastructure

The last talk I listened to, and definitely the best one from a general testing approach point of view. Greg talked about starting with Foreman unit tests, then testing the merged PR, then integration tests, then moving on to test the package build and then the resulting packages themselves.

These guys even try to test their own infrastructure (infra as code) and the test suites they use to test everything else. It's all about automation and the level of confidence you have in the entire process.

I like that they recognize no single testing approach can make you confident enough before shipping the code, and that they've taken into account changes which get introduced at various places (e.g. 3rd party package upgrades, distro specific issues, infrastructure changes and such).

If I had to attend only one session it would have been this one. There are many things for me to take back home and apply to my work on Fedora and RHEL.

If you find any of these topics remotely interesting, I advise you to wait until the FOSDEM video team uploads the recordings and watch the entire session stream. I'm definitely missing a lot of stuff which can't be easily reproduced in text form.

You can also find my report of the first FOSDEM'14 day on Saturday here.


FOSDEM 2014 Report - Day #1 Python, Stands and Lightning Talks

As promised, I'm catching up on blogging after being sick and traveling. Here's my report of what I saw and found interesting at this year's FOSDEM, which was held last weekend in Brussels.

On Friday evening I tried to attend the FOSDEM beer event at Delirium Cafe but had bad luck. At 21:30 the place was already packed with people. I managed to get into only one of the rooms, but it looked like the wrong one :(. I think the space is definitely too small for everyone who wants to attend.

On Saturday morning I did some quick sightseeing, most notably Mig's wine shop and the area around it, since I had never been to this part of the city before. Then I took off to FOSDEM, arriving at noon (IOW not too late).

I spent most of my day in building K, where the project stands were, and I stayed quite a long time around the Python and Perl stands meeting new people and talking to them about their upgrade practices and how they manage package dependencies (aka promoting Difio).

The Fedora stand was busy with 3D printing this year. I've seen 3D printing before, but here I was amazed by the fine-grained quality of the pieces produced. This is definitely something to keep in mind if you are building physical products.

Red Hat's presence was very strong this year. In addition to the numerous talks they gave there were also oVirt and OpenShift Origin stands which were packed with people. I couldn't even get close to say hi or take a picture.

Near the end of the day I went to listen to some of the lightning talks. The ones that I liked the most were MATE Desktop and DoudouLinux.

The thing about MATE which I liked is that they have a MATE University initiative which is targeting developers who want to learn how to develop MATE extensions. This is pretty cool with respect to community and developers on-boarding.

DoudouLinux is a Debian based distribution targeted at small children (2 or 3 years old), built around a simple desktop and educational activities. I met the project leader and founder Jean-Michel Philippe, who gave the talk. We chatted for a while, then Alejandro Simon from Kano came around and showed us a prototype of their computer for children. I will definitely give DoudouLinux a try and maybe pre-order Kano as well.

In the evening there was a Python beer event at Delirium and after that dinner at Chez Leon, where I had snails and rabbit with cherries in cherry beer sauce. I had a few beers with Marc-Andre from eGenix and Charlie from Clark Consulting, and the talk was mostly about non-technical stuff, which was nice.

After that we went back to Delirium and reunited with Alexander Kurtakov and other folks from Red Hat for more cherry beer!

My report of the second day of FOSDEM'14 on Sunday is here.


Book Review - January 2014

Sorry for not being able to write anything this month. I've been very sick and hardly touched a computer in the last few weeks. I promise to make it up to you next month. Until then, here are the books I managed to read in January.

Hooked: How to Build Habit-Forming Products

Hooked is an ebook for Kindle which I luckily got for free on NYE (it's paid now). The book describes the so-called "Hook Model", which is a guide to building products people can’t put down.

The book goes through a cycle of trigger, action, variable reward and investment to describe how one can design a product which users keep using without you doing anything (pretty much). There are plenty of examples with products like Instagram and Pinterest.

I found the book really interesting and strongly recommend it as a must read to anyone who is building a product. If you're thinking about a mobile app or an online service, this book is definitely for you.

CyberJoly Drim

CyberJoly Drim is a 1998 cyberpunk story by Polish writer Antonina Liedtke. I had heard about it over the years but only found it recently. At its core it's a love story, although everything else is fiction. The PDF is around 30 pages.

I found it hard to read, especially because my Polish is totally rusty (not to mention I hardly understand it) and Google Translate didn't manage well either. Anyway, not a bad read before bed time if you like this kind of stuff.

The First in Bulgarian Internet

Last is a book about Bulgarian Internet pioneers. It compiles a great deal of historical data as well as interviews and website descriptions, and is said to mention about 400 people.

The story starts around 1989 with the BBS systems of the time, including the first one to appear in the country, with accounts of people logging into the network (and pretty much seeing a computer) for the first time. Then it tells the story of the first companies and Internet providers: how they started their business, how they grew and how they formed the country's backbone infrastructure. There are lots of personal memories and stories as well. The story runs until about 2002, when the book was written.

The second part of the book mostly describes various websites, some of the first ones and some milestone or famous ones. It's organized by date of website launch but isn't that interesting. I found the local content of the time a bit boring.

The book isn't what I initially expected - I wanted more personal stories and more news from the kitchen. It isn't that; it looks to me like the people who were interviewed chose their words very carefully and didn't reveal any sensational stories.

The nice thing about all of that is that I started using the web in early 1998 and remember most of the events and websites described in the book. It's good to remember the history. I own the book and can give it to you if you like. It's on the Give Away list.

I hope you find something interesting to read in my library. If not, please share what you read this month.

PS: I'm currently in Milan and will be visiting FOSDEM at the end of the week. Catch you there if you're coming.


Book Review of 2013

Happy New Year everyone! I was able to complete reading a few books in the last days so this first post will be a quick review of all twelve books I've read in 2013.

Technical Blogging

I started the year with Technical Blogging: Turn Your Expertise into a Remarkable Online Presence by Antonio Cangiano. This is the book which prompted me to start this blog. It is targeted primarily at technical blogging but could be useful to non-tech bloggers as well. I strongly recommend this book to anyone who is writing for the web.

The 4-Hour Workweek

The 4-Hour Workweek: Escape 9-5, Live Anywhere, and Join the New Rich by Timothy Ferriss. I found this book at a local start-up event and it is one of my all time favorites. The basic idea is to ditch the traditional workplace and work less by utilizing more automation. This is something I've been doing to an extent during the last 5+ years, and I am still changing my life and working habits to reach the moment where I work only a few hours a week.

A must read for any entrepreneur, freelancer or work from home folks.

The E-Myth Revisited

The E-Myth Revisited: Why Most Small Businesses Don't Work and What to Do About It by Michael Gerber is the second book I found at the same start-up event mentioned previously. I found this one particularly hard to read. What I took from it was that you should think about your business as a franchise and document processes so that others can do the work for you in the same way you would do it yourself.

Culture and Wine

The next two books are in Bulgarian and can be found on my Goodreads list. They are about culture and religion, and wine tasting 101.

Accelerando

Accelerando (Singularity) by Charles Stross is also one of my favorites. I heard about it at a local CloudFoundry conference a few years back and finally had the time to read it. This is a fiction book where events start in the near future and drive forward to dismantling the planets in the Solar system, uploading lobsters' minds to the Internet, space travel, aliens and family relationships. I definitely recommend it; the book is a very relaxing read.

DevOps

What Is DevOps? by Mike Loukides and Building a DevOps Culture by Mandi Walls are two short free books for Kindle. They just touch on the topic of DevOps and what it takes to create a DevOps organization. Definitely worth reading even if you're not deeply interested in this topic.

DevOps for Developers by Michael Huttermann is a practical book about DevOps. It talks about processes and tools and lists lots of examples. The tools part is Ruby centric though. I found it a bit hard to read at times and it took me a while to complete it. I'm not sure if the book is really helpful if one decides to change an existing organization to a DevOps-like structure, but it is definitely a starting point.

The days of waterfall software development are gone and knowingly or not most of us work in a DevOps like environment these days. I think it's worth at least skimming through this book and taking some essentials from it.

UX For Lean Startups

UX for Lean Startups by Laura Klein is a very good book about designing user experience and validation which I've reviewed previously. I strongly recommend this book to everyone who is building a product of some sort.

Bulgarian Comics

At the end of the year I managed to read two comic books, both in Bulgarian. The first one is a brief history of comic art in the country over the past 150 years, with lots of references to good old stories which I read when I was a kid.

The second one is a special edition (ebook only, print edition is out of stock) of the popular Daga (Rainbow) magazine which ceased to exist 20 years ago. There are some new episodes of the old stories drawn by the original artists.

Both books seem to be a turning point for the Bulgarian comics art and market. Hopefully we'll see more cool stuff in the future. These books are not available on Amazon, but there are some free comic books for Kindle which you may give a try.

I hope you find something interesting to read in my list. Also feel free to comment and share your favorite books in the comments below.


Upstream Test Suite Status of Fedora 20

Last week I expressed my thoughts about the state of upstream test suites in Fedora along with some other ideas. Following the response on this thread, I'm starting to analyze all SRPM packages in Fedora 20 in order to establish a baseline. Here are my initial findings.

What's Inside

I've found two source distributions for Fedora 20:

- The Fedora-20-source-DVD.iso file which, to my knowledge, contains the sources of all packages that comprise the installation media;

- The Everything/source/SRPMS/ directory which appears to contain the sources of everything else available in the Fedora 20 repositories.

There are 2574 SRPM packages on the Fedora 20 source DVD and 14364 SRPMs in the Everything/ directory, taking up 9,2G vs. 41G respectively.

Test Suite Execution In %check

Fedora Packaging Guidelines state

If the source code of the package provides a test suite, it should be executed in the %check section, whenever it is practical to do so.

In my research I found 738 SRPMs on the DVD which have a %check section and 4838 such packages under Everything/. This is 28,6% and 33,6% respectively.

Test Suite Existence

A quick grep for either test/ or tests/ directories in the package sources revealed 870 SRPM packages on the source DVD which are very likely to have a test suite. This is 33,8%. I wasn't able to inspect the Everything/ directory with this script because it takes too long to execute and my system ran out of memory. I will update this post later with that info.

UPDATE 2014-01-02:

In the Everything/ directory only 4481 (31,2%) SRPM packages appear to have test suites.

The scripts and raw output are available at https://github.com/atodorov/fedora-scripts.
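The numbers above come from the scripts in that repository. As an independent, rough sketch of the two checks (not a copy of those scripts), assuming each SRPM's spec file and source tarball have already been extracted, the %check test and the test/ or tests/ directory test could look like this. The helper names has_check_section and has_test_dir are made up for illustration.

```python
import re
import tarfile
from pathlib import PurePosixPath

# A %check section header at the start of a line in the spec file.
CHECK_RE = re.compile(r"^%check\b", re.MULTILINE)
TEST_DIRS = {"test", "tests"}


def has_check_section(spec_text):
    """True if the spec file declares a %check section."""
    return CHECK_RE.search(spec_text) is not None


def has_test_dir(fileobj):
    """True if any path in the (optionally compressed) tarball goes through
    a directory named test/ or tests/."""
    with tarfile.open(fileobj=fileobj, mode="r:*") as tar:
        for member in tar.getmembers():
            parts = PurePosixPath(member.name).parts
            # For regular files ignore the last component: a file called
            # "test" is not a test directory.
            dirs = parts if member.isdir() else parts[:-1]
            if TEST_DIRS.intersection(dirs):
                return True
    return False
```

Reading only the tar headers via getmembers() avoids unpacking the sources to disk, although each archive still has to be decompressed.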

So it looks like on average 30% of the packages execute their test suites at build time in the %check section and less than 35% have test suites at all! There's definitely room for improvement and I plan to focus on this during 2014!


Django Template Tag Inheritance How-to

While working on open-sourcing Difio I needed to remove all hard-coded URL references from the templates. My solution was to essentially inherit from the standard {% url %} template tag. Here is how to do it.

Background History

Difio is not hosted on a single server. Parts of the website are static HTML, hosted on Amazon S3. Other parts are dynamic - hosted on OpenShift. It's also possible but not required at the moment to host at various PaaS providers for redundancy and simple load balancing.

As an easy fix I had hard-coded some URLs to link to the static S3 pages and others to link to my PaaS provider.

I needed a simple solution which can be extended to allow for multiple domain hosting.

The Solution

The solution I came up with is to override the standard {% url %} tag and use it everywhere in my templates. The new tag will produce absolute URLs containing the specified protocol plus domain name and view path. For this to work you have to inherit the standard URLNode class and override the render() method to include the new values.

You also need to register a tag method to utilize the new class. My approach was to use the existing url() method to do all background processing and simply cast the result object to the new class.

All code is available at https://djangosnippets.org/snippets/3013/.

To use in your templates simply add

```html
{% load fqdn_url from fqdn_url %}

<a href="{% fqdn_url 'dashboard' %}">Dashboard</a>
```


Can I Use Android Phone as Smart Card Reader

Today I had trouble with my Omnikey CardMan 6121 smart card reader. For some reason it would not detect the card inside and was unusable. /var/log/messages was filled with Card Not Powered messages:

```
Dec 18 11:17:55 localhost pcscd: eventhandler.c:292:EHStatusHandlerThread() Error powering up card: -2146435050 0x80100016
Dec 18 11:18:01 localhost pcscd: winscard.c:368:SCardConnect() Card Not Powered
Dec 18 11:18:02 localhost pcscd: winscard.c:368:SCardConnect() Card Not Powered
```

I've found the solution in RHBZ #531998.

I've found the problem, and it's purely mechanical. Omnikey has simply screwed up when they designed this reader. When the reader is inserted into the ExpressCard slot, it gets slightly compressed. This is enough to trigger the mechanical switch that detects insertions. If I jam something in there and force it apart, then pcscd starts reporting that the slot is empty.

Pierre Ossman, https://bugzilla.redhat.com/show_bug.cgi?id=531998#c12

So I tried moving the smart card a millimeter back and forth inside the reader and that fixed it for me.

This smart card is standard SIM size, and I wonder whether it is possible to use dual-SIM smartphones and tablets as a reader. I will be happy to work on the software side if there is an open source project already (e.g. OpenSC + drivers for Android). If not, why not?

If you happen to have information on the subject please share it in the comments. Thanks!


Idempotent Django Email Sender with Amazon SQS and Memcache

Recently I wrote about my problem with duplicate Amazon SQS messages causing multiple emails for Difio. After considering several options and feedback from @Answers4AWS I wrote a small decorator to fix this.

It uses the cache backend to prevent the task from executing twice during the specified time frame. The code is available at https://djangosnippets.org/snippets/3010/.

As stated on Twitter you should use Memcache (or ElastiCache) for this.

If you use Amazon S3 with my django-s3-cache, don't use the us-east-1 region because it is eventually consistent.

The solution is fast and simple on the development side and uses my existing cache infrastructure so it doesn't cost anything more!

There is still a race condition between marking the message as processed and the second check, but nevertheless this should minimize the possibility of receiving duplicate emails to an acceptable level. Only time will tell though!
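The real decorator is the djangosnippets code linked above. As an independent minimal sketch, assuming a cache object with an add(key, value, timeout) method (Django's cache API and memcached clients provide one that stores a key only if it is absent), the check and the mark can be collapsed into a single add() call. This is a swapped-in variant, not necessarily what the snippet does; run_once and InMemoryCache are hypothetical names, and InMemoryCache is only a non-thread-safe stand-in so the example runs without a cache server.

```python
import functools
import time


class InMemoryCache:
    """Stand-in for a cache backend with add(key, value, timeout) semantics:
    store only if the key is absent (or expired) and report whether it stored."""

    def __init__(self):
        self._data = {}

    def add(self, key, value, timeout):
        now = time.monotonic()
        entry = self._data.get(key)
        if entry is not None and entry[1] > now:
            return False
        self._data[key] = (value, now + timeout)
        return True


def run_once(cache, timeout=3600):
    """Skip a task whose task_id was already claimed within `timeout` seconds."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(task_id, *args, **kwargs):
            key = f"run-once:{func.__name__}:{task_id}"
            # add() checks and marks in one call; memcached performs it atomically.
            if not cache.add(key, True, timeout):
                return None  # duplicate delivery, do nothing
            return func(task_id, *args, **kwargs)
        return wrapper
    return decorator
```

One trade-off of claiming before doing the work: if the task raises, the key stays set and a legitimate retry within the timeout is skipped too. An eventually consistent backend can still let a duplicate through.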


Book Review: UX for Lean Startups

Recently I've finished reading UX for Lean Startups and strongly recommend this book to anyone who is OR wants to be an entrepreneur. Here is a short review of the book.

This book is for anyone who is creating a product/service or is considering the idea of doing so. It talks about validation, interaction design and subsequent product measurement and iteration. The book demonstrates some techniques and tools to validate, design and measure your business ideas and products. Its goal is to teach you how to design products that deliver a fantastic user experience, i.e. ones that are intuitive and easy to use. It has nothing to do with visual design.

The author Laura Klein summarizes the book as follows:

User research

Listen to your users. All the time. I mean it.

Validation

When you make assumptions or create hypotheses, test them before spending lots of time building products around them.

Design

Iterate. Iterate. Iterate.

Early Validation

This chapter helped me a lot to understand what exactly validation is and how to go about it. The flow is validating the problem you are trying to solve, then the market and then the product.

I will also add that by using some of these research techniques around a vague idea/area of interest, you may come across a particular trend/pattern or problem and develop your business from there.

You’ll know that you’ve validated a problem when you start to hear particular groups of people complaining about something specific.

...

Your goal in validating your market is to begin to narrow down the group of people who will want their problems solved badly enough to buy your product. Your secondary goal is to understand exactly why they’re interested so you can find other markets that might be similarly motivated.

...

You’ll know that you’ve successfully validated your market when you can accurately predict that a particular type of person will have a specific problem and that the problem will be severe enough that that person is interested in purchasing a solution.

...

Just because you have discovered a real problem and have a group of people willing to pay you to solve their problem, that doesn’t necessarily mean that your product is the right solution.

...

You’ll know that you’ve validated your product when a large percentage of your target market offers to pay you money to solve their problem.

User Research

The next few chapters talk about user research, its various kinds and when/how to perform it. They explain how to run surveys properly, how to ask good questions, etc.

Qualitative vs. Quantitative Research

Quantitative research is about measuring what real people are actually doing with your product. It doesn’t involve speaking with specific humans. It’s about the data in aggregate. It should always be statistically significant.

...

Quantitative research tells you what your problem is. Qualitative research tells you why you have that problem.

...

If you want to measure something that exists, like traffic or revenue or how many people click on a particular button, then you want quantitative data. If you want to know why you lose people out of your purchase funnel or why people all leave once they hit a specific page, or why people seem not to click that button, then you need qualitative.

Part Two: Design

The second part of this book talks about design - everything from building a prototype to figuring out when you don’t want one. It assumes you have validated the initial idea and now move on to designing the product and validating that design before you start building it. It talks about diagrams, sketches, wireframes, prototypes and of course MVPs.

I think you can safely skip some of these steps when it comes to small applications because it may be easier/faster to build the application instead of a prototype. Definitely not to be skipped if you're building a more complex product!

Part Three: Product

This section talks about metrics and measuring the product once it is out of the door. Supposedly based on these metrics you will refine your design and update the product accordingly. Most of the time it focuses on A/B testing and which metrics are important and which are so called "vanity metrics".

I particularly liked the examples of A/B testing and the explanations of what it is good for and what it does poorly. Definitely a mistake I've made myself. I'm sure you have too.

Let me know if you have read this book and what your thoughts are. Thanks!


Duplicate Amazon SQS Messages Cause Multiple Emails

Beware if using Amazon Simple Queue Service to send email messages! Sometimes SQS messages are duplicated, which results in multiple copies of the emails being sent. This happened today at Difio and is really annoying to users. In this post I will explain why there is no easy way of fixing it.

Q: Can a deleted message be received again?

Yes, under rare circumstances you might receive a previously deleted message again. This can occur in the rare situation in which a DeleteMessage operation doesn't delete all copies of a message because one of the servers in the distributed Amazon SQS system isn't available at the time of the deletion. That message copy can then be delivered again. You should design your application so that no errors or inconsistencies occur if you receive a deleted message again.

Amazon FAQ

In my case the cron scheduler logs say:

```
>>> <AsyncResult: a9e5a73a-4d4a-4995-a91c-90295e27100a>
```

While on the worker nodes the logs say:

```
[2013-12-06 10:13:06,229: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]
[2013-12-06 10:18:09,456: INFO/MainProcess] Got task from broker: tasks.cron_monthly_email_reminder[a9e5a73a-4d4a-4995-a91c-90295e27100a]
```

This clearly shows the same message (see the UUID) has been processed twice! This resulted in hundreds of duplicate emails :(.
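Spotting this by eye works for two lines but not for a busy worker log. A small sketch, assuming the worker log lines look like the ones above, can count task IDs that were received more than once; duplicate_tasks is a hypothetical helper name.

```python
import re
from collections import Counter

# Matches worker lines such as:
#   ... Got task from broker: tasks.some_task[<36-character task UUID>]
TASK_RE = re.compile(
    r"Got task from broker: (?P<name>[\w.]+)\[(?P<task_id>[0-9a-f-]{36})\]"
)


def duplicate_tasks(lines):
    """Return {(task_name, task_id): count} for tasks received more than once."""
    counts = Counter()
    for line in lines:
        match = TASK_RE.search(line)
        if match:
            counts[(match.group("name"), match.group("task_id"))] += 1
    return {key: n for key, n in counts.items() if n > 1}
```

Since any iterable of strings works, an open log file can be passed directly, e.g. duplicate_tasks(open(path)) for whatever path your worker logs to.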

Why This Is Hard To Fix

There are two basic approaches to solve this issue:

- Check some log files or database for previous record of the message having been processed;

- Use idempotent operations, so that if you process the message again you get the same results, and those results don't create duplicate files/records.

The problem with checking for duplicate messages is:

- There is a race condition between marking the message as processed and the second check;

- You need to use some sort of locking mechanism to safe-guard against the race condition;

- If the log/DB is only eventually consistent, you can't guarantee that the previous attempt will show up and so can't guarantee that you won't process the message twice.

None of the above seems to work well for distributed applications, not to mention that Difio processes millions of messages per month, per node, and the logs are quite big.

The second option is to have control of the Message-Id or some other email header so that the second message will be discarded either at the server (Amazon SES in my case) or at the receiving MUA. I like this better but I don't think it is technically possible with the current environment. Need to check though.
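A sketch of the idea in that last paragraph: derive the Message-ID from the task ID and the recipient, so a redelivered task produces an identical header. This only helps if the sending service keeps a caller-supplied Message-ID and something downstream discards repeats, and neither is confirmed here for Amazon SES or typical MUAs. build_reminder and the example.com domain are hypothetical.

```python
import hashlib
from email.message import EmailMessage


def build_reminder(task_id, recipient, subject, body, domain="example.com"):
    """Build an email whose Message-ID depends only on (task_id, recipient)."""
    digest = hashlib.sha256(f"{task_id}:{recipient}".encode("utf-8")).hexdigest()[:32]
    msg = EmailMessage()
    # A redelivered task yields the same Message-ID, which a downstream
    # server or mail client *could* use to drop the duplicate.
    msg["Message-ID"] = f"<{digest}@{domain}>"
    msg["To"] = recipient
    msg["Subject"] = subject
    msg.set_content(body)
    return msg
```

Hashing instead of embedding the raw task ID keeps the header opaque while staying deterministic.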

I've asked AWS support to look into this thread and hopefully they will have some more hints. If you have any other ideas please post in the comments! Thanks!


Bug in Python URLGrabber/cURL on Fedora and Amazon Linux

I accidentally discovered a bug in Python's URLGrabber module which has to do with a change in behavior in libcurl.

```pycon
>>> from urlgrabber.grabber import URLGrabber
>>> g = URLGrabber(reget=None)
>>> g.urlgrab('https://s3.amazonaws.com/production.s3.rubygems.org/gems/columnize-0.3.6.gem', '/tmp/columnize.gem')
Traceback (most recent call last):
  File "<console>", line 1, in <module>
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 976, in urlgrab
    return self._retry(opts, retryfunc, url, filename)
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 880, in _retry
    r = apply(func, (opts,) + args, {})
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 962, in retryfunc
    fo = PyCurlFileObject(url, filename, opts)
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1056, in __init__
    self._do_open()
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1307, in _do_open
    self._set_opts()
  File "/home/celeryd/.virtualenvs/difio/lib/python2.6/site-packages/urlgrabber/grabber.py", line 1161, in _set_opts
    self.curl_obj.setopt(pycurl.SSL_VERIFYHOST, opts.ssl_verify_host)
error: (43, '')
>>>
```

The code above works fine with curl-7.27 or older, while it breaks with curl-7.29 and newer. As explained by Zdenek Pavlas, the reason is an internal change in libcurl which doesn't accept a value of 1 for SSL_VERIFYHOST anymore!

The bug is reproducible with a newer libcurl version and a vanilla urlgrabber==3.9.1 from PyPI (e.g. inside a virtualenv). The latest python-urlgrabber RPM packages in both Fedora and Amazon Linux already have the fix.

I have tested the patch proposed by Zdenek and it works for me. I still have no idea why there aren't any updates released on PyPI though!
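The gist of the problem is that a boolean True reaches setopt() as the integer 1. As a minimal sketch of the idea (not Zdenek's actual patch), the option value can be translated explicitly before calling setopt(); ssl_verifyhost_value is a hypothetical helper name.

```python
def ssl_verifyhost_value(verify_host):
    """Translate a boolean option into a CURLOPT_SSL_VERIFYHOST value.

    0 disables the host name check; 2 requires the certificate name to match
    the host. Passing True directly means passing 1, which the newer libcurl
    versions discussed above reject with error 43 (CURLE_BAD_FUNCTION_ARGUMENT).
    """
    return 2 if verify_host else 0


# Hypothetical use inside a PycURL-based downloader:
#   curl.setopt(pycurl.SSL_VERIFYHOST, ssl_verifyhost_value(opts.ssl_verify_host))
```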


Open Source Quality Assurance Infrastructure for Fedora QA

In the last few weeks I've been working together with Tim Flink and Kamil Paral from the Fedora QA team on bringing some installation testing expertise to Fedora and establishing an open source test lab to perform automated testing in. The infrastructure is already in relatively usable condition so I've decided to share this information with the community.

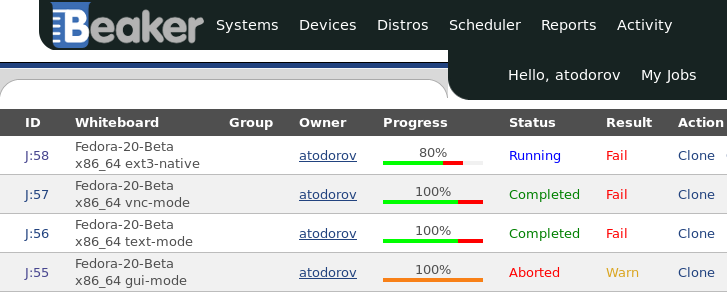

Beaker is Running Our Test Lab

Beaker is the software suite that powers the test lab infrastructure. It is quite complex, with many components, and sometimes not very straightforward to set up. Tim has been working on that, with me giving it a try and reporting issues as they were discovered and fixed.

In the process of working on this I've managed to create a couple of patches against Beaker and friends. They are still pending release in a future version because of more urgent bug fixes which need to be released first.

SNAKE is The Kickstart Template Server

SNAKE is a client/server Python framework used to support Anaconda installations. It supports plain text ks.cfg files; IIRC those are static templates with no variable substitution.

The other possibility is Python templates based on Pykickstart:

```python
from pykickstart.constants import KS_SCRIPT_POST
from pykickstart.parser import Script

from installdefaults import InstallKs


def ks(**context):
    '''Anaconda autopart'''
    ks = InstallKs()
    ks.packages.add(['@base'])
    ks.clearpart(initAll=True)
    ks.autopart(autopart=True)

    # Copy the kickstart used for this installation into the installed system.
    script = '''
cp /tmp/ks.cfg /mnt/sysimage/root/ks.cfg || \
cp /run/install/ks.cfg /mnt/sysimage/root/ks.cfg
'''
    # Run outside the chroot so the installer's /tmp and /run/install are visible.
    post = Script(script, type=KS_SCRIPT_POST, inChroot=False)
    ks.scripts.append(post)

    return ks
```

At the moment SNAKE is essentially abandoned but feature complete. I'm thinking about adopting the project just in case we need to make some fixes. Will let you know more about this when it happens.

Open Source Test Suite

I have been working on opening up several test cases for what we (QE) call a tier #1 installation test suite. They can be found in git. The tests are based on beakerlib and the legacy RHTS framework, which is now part of Beaker.

This effort has been coordinated with Kamil as part of a pilot project he's responsible for. I've been executing the same test suite against earlier Fedora 20 snapshots but using an internal environment. Now everything is going out in the open.

Executing The Tests

Well you can't do that - YET! There are command line client tools for Fedora but Beaker and SNAKE are not well suited for use outside a restricted network like LAN or VPN. There are issues with authentication most notably for SNAKE.

At the moment I have to ssh through two different systems to get proper access. However, this is being worked on. I've read about a rewrite which will allow Beaker to utilize a custom authentication framework like FAS, for example. Hopefully that will be implemented soon enough.

I would also like to see the test systems have direct access to the Internet for various reasons, but this is not without its risks either. This is still to be decided.

If you are interested anyway, see the kick-tests.sh file in the test suite for examples and command line options.

Test Results

The first successfully completed test jobs are jobs 50 to 58. There's a failure in one of the test cases, namely the SELinux-related RHBZ #1027148.

From what I can tell the lab is now working as expected and we can start doing some testing against Fedora development snapshots.

Ping me or join #fedora-qa on irc.freenode.net if you'd like to join Fedora QA!

