Capture Terminal Output From Other Processes

I've been working on a test case to verify that Anaconda will print its EULA notice at the end of a text mode installation. The problem is: how do you capture all the text which is printed to the terminal by processes outside your control? The answer is surprisingly simple: use strace!

```
%post --nochroot
PID=`ps aux | grep "/usr/bin/python /sbin/anaconda" | grep -v "grep" | tr -s ' ' | cut -f2 -d' '`
STRACE_LOG="/mnt/sysimage/root/anaconda.strace"

# see https://fedoraproject.org/wiki/Features/SELinuxDenyPtrace
setsebool deny_ptrace 0

# EULA notice is printed after post-scripts are run
strace -e read,write -s16384 -q -x -o "$STRACE_LOG" -p "$PID" -f >/dev/null 2>&1 &
%end
```

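Here strace traces only the read and write system calls (we really only need write), follows child processes (-f), prints non-ASCII string data as hex escapes (-x) and raises the maximum printed string size to 16384 characters (-s16384) so long writes aren't truncated in the log file. For a simple grep this is sufficient. If you need to pretty-print the strace output have a look at the ttylog utility.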
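If neither grep nor ttylog fits, a minimal Python sketch like the one below can pull the terminal text back out of the log. It assumes strace's default one-call-per-line format with the PID prefix added by -f, and it treats file descriptors 1 and 2 as "the terminal", which may not hold for every process; calls that strace splits into "<unfinished ...>" and "resumed" fragments are simply skipped. The function name terminal_output is hypothetical.

```python
import ast
import re

# One strace line per call, e.g.  1234  write(1, "Hello\n", 6) = 6
# With -f (and -o) each line starts with the PID, so the prefix is optional.
# Buffers longer than -s are followed by "..." after the closing quote.
WRITE_RE = re.compile(r'^(?:\d+\s+)?write\((\d+), "((?:[^"\\]|\\.)*)"(?:\.\.\.)?, \d+\)')


def terminal_output(log_lines, fds=(1, 2)):
    """Return the bytes written to the given file descriptors, in log order."""
    chunks = []
    for line in log_lines:
        match = WRITE_RE.match(line)
        if match is None or int(match.group(1)) not in fds:
            continue  # read() calls, other fds, <unfinished ...> fragments
        # strace escapes the buffer like a C string literal (\n, \t, \xNN, ...);
        # Python's bytes-literal syntax understands the same escapes.
        chunks.append(ast.literal_eval('b"' + match.group(2) + '"'))
    return b"".join(chunks)


if __name__ == "__main__":
    import sys
    with open(sys.argv[1]) as log:
        sys.stdout.write(terminal_output(log).decode("utf-8", "replace"))
```

Saved under a hypothetical name such as strace_to_text.py, it could be run against /mnt/sysimage/root/anaconda.strace and its output piped to grep.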


Facebook is Bugging me


Facebook for Android version 55.0.0.18.66 broke the home screen icon title.

It reads false instead of Facebook. This is now fixed in version 58.0.0.28.70.

I wonder how they managed to let this slip through. Also, isn't Google supposed to review the apps coming into the Play Store and not publish them if there are such visible issues? I guess this wasn't the case here.


Automatic Upstream Dependency Testing

Ever since RHEL 7.2 python-libs broke s3cmd I've been pondering an age-old problem: How do I know if my software works with the latest upstream dependencies? How can I proactively monitor for new versions and add them to my test matrix?

Mixing together my previous experience with Difio and monitoring upstream sources, and Forbes Lindesay's GitHub Automation talk at DEVit Conf, I came up with a plan:

- Make an application which will execute when a new upstream version is available;

- Automatically update .travis.yml for the projects I'm interested in;

- Let Travis-CI execute my test suite for all available upstream versions;

- Profit!

How Does It Work

First we need to monitor upstream! RubyGems.org has a nice webhooks interface; you can even trigger on individual packages. PyPI, however, doesn't have anything like this :(. My solution is to run a cron job every hour and parse their RSS feed for newly released packages. This approach worked well for Difio, so I re-used one function from that code.
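The parsing step on its own might look like the Python 3 sketch below, which uses xml.etree.ElementTree instead of the minidom calls in the script further down. It makes the same assumptions that script makes: each item title is "name version" and pubDate has the form "26 Nov 2015 10:00:00 GMT"; items that don't match are skipped. The function name parse_releases is hypothetical.

```python
from datetime import datetime
import xml.etree.ElementTree as ET


def parse_releases(rss_text):
    """Yield (name, version, released_on) for every <item> in the feed."""
    root = ET.fromstring(rss_text)
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        pub_date = item.findtext("pubDate", default="")
        try:
            # Assumed title format: "<package name> <version>"
            name, version = title.split(" ")
            released_on = datetime.strptime(pub_date, "%d %b %Y %H:%M:%S GMT")
        except ValueError:
            continue  # unexpected title or date format, skip the item
        yield name, version, released_on
```

The monitor then only has to check whether name is one of the packages it cares about.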

After finding anything we're interested in comes the hard part: automatically updating .travis.yml using the GitHub API. I've described this in more detail here. This time I've slightly modified the code to update only when needed and to accept more parameters, so it can be reused.

Travis-CI has a clean interface to specify environment variables, and defining several of them creates a test matrix. This is exactly what I'm doing.

.travis.yml is updated with a new ENV setting which determines the upstream package version. After the commit a new build is triggered which includes the expanded test matrix.
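The update_travis() function in the script below simply appends the new line to the end of the file, which only works while env: is the last key in .travis.yml. A slightly more careful text-based sketch could insert the entry into the existing env: list instead. It is hypothetical and assumes a flat, top-level env: list of "- VAR=value" items (no matrix:/global: sub-keys); a real YAML parser would be more robust but would drop comments when writing the file back.

```python
def add_env_version(travis_yml, version, var="VERSION"):
    """Return .travis.yml text with '- VAR=version' added to the env: list."""
    entry = "%s=%s" % (var, version)
    lines = travis_yml.rstrip("\n").split("\n")
    try:
        start = lines.index("env:")
    except ValueError:
        # No env block yet: start one at the end of the file.
        return "\n".join(lines + ["env:", "  - " + entry]) + "\n"

    end, indent = start + 1, "  "
    while end < len(lines) and lines[end].lstrip().startswith("- "):
        if lines[end].lstrip()[2:].strip() == entry:
            return travis_yml  # version already in the matrix, nothing to do
        # Re-use the indentation of the existing list items.
        indent = lines[end][: len(lines[end]) - len(lines[end].lstrip())]
        end += 1
    lines.insert(end, indent + "- " + entry)
    return "\n".join(lines) + "\n"
```

Because an unchanged file is returned as-is, the caller can compare old and new text and skip the commit, just like the script's "new == old" check.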

Example

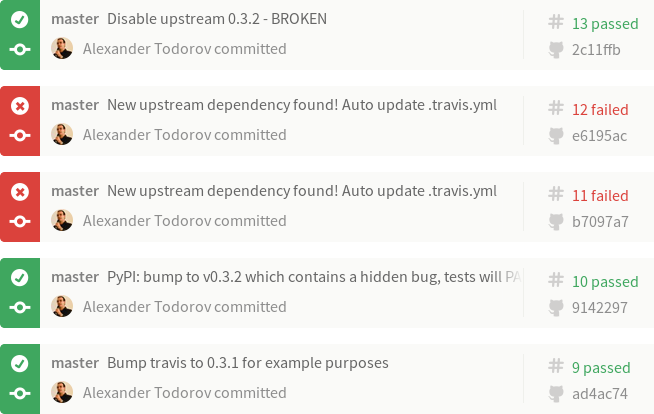

Imagine that our Project 2501 depends on FOO version 0.3.1. The build log illustrates what happened:

- Build #9 is what we've tested with FOO-0.3.1 and released to production. Test result is PASS!

- Build #10 - meanwhile upstream releases FOO-0.3.2 which causes our project to break. We're not aware of this and continue developing new features while all test results still PASS! When our customers upgrade their systems Project 2501 will break! Tests didn't catch it because the test matrix wasn't updated. Please ignore the actual commit message in the example! I've used the same repository for the dummy dependency package.

- Build #11 - the monitoring solution finds FOO-0.3.2 and updates the test matrix automatically. The build immediately breaks! More precisely the test with version 0.3.2 fails!

- Build #12 - we've alerted FOO.org about their problem and they've released FOO-0.3.3. Our monitor has found that and updated the test matrix. However the 0.3.2 test job still fails!

- Build #13 - we decide to work around the 0.3.2 failure or simply handle the error gracefully. In this example I've removed version 0.3.2 from the test matrix to simulate that. In reality I wouldn't touch .travis.yml but would instead update my application and tests to check for that particular version. All test results are PASS again!

Btw, Build #11 above was triggered manually (./monitor.py) while Build #12 came from OpenShift, my hosting environment.

At present I have this monitoring enabled for my new Markdown extensions and will also add it to django-s3-cache once it migrates to Travis-CI (it uses drone.io now).

Enough Talk, Show me the Code

```python
#!/usr/bin/env python

import os
import sys
import json
import base64
import httplib
from pprint import pprint
from datetime import datetime
from xml.dom.minidom import parseString

def get_url(url, post_data = None):
    # GitHub requires a valid UA string
    headers = {
        'User-Agent' : 'Mozilla/5.0 (X11; Linux x86_64; rv:10.0.5) Gecko/20120601 Firefox/10.0.5',
    }

    # shortcut for GitHub API calls
    if url.find("://") == -1:
        url = "https://api.github.com%s" % url

    if url.find('api.github.com') > -1:
        if not os.environ.has_key("GITHUB_TOKEN"):
            raise Exception("Set the GITHUB_TOKEN variable")
        else:
            headers.update({
                'Authorization': 'token %s' % os.environ['GITHUB_TOKEN']
            })

    (proto, host_path) = url.split('//')
    (host_port, path) = host_path.split('/', 1)
    path = '/' + path

    if url.startswith('https'):
        conn = httplib.HTTPSConnection(host_port)
    else:
        conn = httplib.HTTPConnection(host_port)

    method = 'GET'
    if post_data:
        method = 'POST'
        post_data = json.dumps(post_data)

    conn.request(method, path, body=post_data, headers=headers)
    response = conn.getresponse()

    if (response.status == 404):
        raise Exception("404 - %s not found" % url)

    result = response.read().decode('UTF-8', 'replace')
    try:
        return json.loads(result)
    except ValueError:
        # not a JSON response
        return result

def post_url(url, data):
    return get_url(url, data)

def monitor_rss(config):
    """
        Scan the PyPI RSS feeds to look for new packages.
        If name is found in config then execute the specified callback.

        @config is a dict with keys matching package names and values
        are lists of dicts
            {
                'cb' : a_callback,
                'args' : dict
            }
    """
    rss = get_url("https://pypi.python.org/pypi?:action=rss")
    dom = parseString(rss)
    for item in dom.getElementsByTagName("item"):
        try:
            title = item.getElementsByTagName("title")[0]
            pub_date = item.getElementsByTagName("pubDate")[0]

            (name, version) = title.firstChild.wholeText.split(" ")
            released_on = datetime.strptime(pub_date.firstChild.wholeText, '%d %b %Y %H:%M:%S GMT')

            if name in config.keys():
                print name, version, "found in config"
                for cfg in config[name]:
                    try:
                        args = cfg['args']
                        args.update({
                            'name' : name,
                            'version' : version,
                            'released_on' : released_on
                        })

                        # execute the call back
                        cfg['cb'](**args)
                    except Exception, e:
                        print e
                        continue
        except Exception, e:
            print e
            continue

def update_travis(data, new_version):
    travis = data.rstrip()
    new_ver_line = " - VERSION=%s" % new_version
    if travis.find(new_ver_line) == -1:
        travis += "\n" + new_ver_line + "\n"
    return travis

def update_github(**kwargs):
    """
        Update GitHub via API
    """
    GITHUB_REPO = kwargs.get('GITHUB_REPO')
    GITHUB_BRANCH = kwargs.get('GITHUB_BRANCH')
    GITHUB_FILE = kwargs.get('GITHUB_FILE')

    # step 1: Get a reference to HEAD
    data = get_url("/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH))
    HEAD = {
        'sha' : data['object']['sha'],
        'url' : data['object']['url'],
    }

    # step 2: Grab the commit that HEAD points to
    data = get_url(HEAD['url'])
    # remove what we don't need for clarity
    for key in data.keys():
        if key not in ['sha', 'tree']:
            del data[key]
    HEAD['commit'] = data

    # step 4: Get a hold of the tree that the commit points to
    data = get_url(HEAD['commit']['tree']['url'])
    HEAD['tree'] = { 'sha' : data['sha'] }

    # intermediate step: get the latest content from GitHub and make an updated version
    for obj in data['tree']:
        if obj['path'] == GITHUB_FILE:
            data = get_url(obj['url']) # get the blob from the tree
            data = base64.b64decode(data['content'])
            break

    old_travis = data.rstrip()
    new_travis = update_travis(old_travis, kwargs.get('version'))

    # bail out if nothing changed
    if new_travis == old_travis:
        print "new == old, bailing out", kwargs
        return

    ####
    #### WARNING WRITE OPERATIONS BELOW
    ####

    # step 3: Post your new file to the server
    data = post_url(
        "/repos/%s/git/blobs" % GITHUB_REPO,
        {
            'content' : new_travis,
            'encoding' : 'utf-8'
        }
    )
    HEAD['UPDATE'] = { 'sha' : data['sha'] }

    # step 5: Create a tree containing your new file
    data = post_url(
        "/repos/%s/git/trees" % GITHUB_REPO,
        {
            "base_tree": HEAD['tree']['sha'],
            "tree": [{
                "path": GITHUB_FILE,
                "mode": "100644",
                "type": "blob",
                "sha": HEAD['UPDATE']['sha']
            }]
        }
    )
    HEAD['UPDATE']['tree'] = { 'sha' : data['sha'] }

    # step 6: Create a new commit
    data = post_url(
        "/repos/%s/git/commits" % GITHUB_REPO,
        {
            "message": "New upstream dependency found! Auto update .travis.yml",
            "parents": [HEAD['commit']['sha']],
            "tree": HEAD['UPDATE']['tree']['sha']
        }
    )
    HEAD['UPDATE']['commit'] = { 'sha' : data['sha'] }

    # step 7: Update HEAD, but don't force it!
    data = post_url(
        "/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH),
        {
            "sha": HEAD['UPDATE']['commit']['sha']
        }
    )

    if data.has_key('object'): # PASS
        pass
    else: # FAIL
        print data['message']

if __name__ == "__main__":
    config = {
        "atodorov-test" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/bztest',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            }
        ],
        "Markdown" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-Bugzilla-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-Code-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-BlockQuote-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
        ],
    }

    # check the RSS to see if we have something new
    monitor_rss(config)
```


python-libs in RHEL 7.2 broke SSL verification in s3cmd

Today started with Planet Sofia Valley being broken again. Indeed it's been broken since last Friday, when I upgraded to the latest RHEL 7.2. I quickly identified that I was hitting Issue #647. Then I tried the git checkout without any luck. This is when I started to suspect that python-libs had been updated in an incompatible way.

After a series of reported bugs (RHBZ #1284916, RHBZ #1284930, Python#25722) it was clear that ssl.py was working according to RFC6125, that Amazon S3 was not playing nicely with this same RFC and that my patch proposal was wrong.

This immediately had me looking higher up the stack at httplib.py and s3cmd. Indeed there was a change in httplib.py which introduced two parameters, context and check_hostname, to HTTPSConnection.__init__. The change also supplied the logic which performs SSL hostname validation:

```python
if not self._context.check_hostname and self._check_hostname:
    try:
        ssl.match_hostname(self.sock.getpeercert(), server_hostname)
    except Exception:
        self.sock.shutdown(socket.SHUT_RDWR)
        self.sock.close()
        raise
```

This looks a bit dodgy, as I don't quite understand the intention behind the "not PREDICATE and PREDICATE" construct. Anyway, to disable the validation you need both parameters set to False, which is what PR #668 does.

Notice the two try-except blocks. They handle the case where we're running with a version that has a context but not the check_hostname parameter. I've found the inspect.getmembers function, which can be used to figure out what parameters the init method accepts, but a solution based on it doesn't appear to be more elegant. I will describe this in more detail in my next post.
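The same feature-detection idea can be sketched against Python 3's http.client, where the situation is reversed: HTTPSConnection accepted a check_hostname keyword (deprecated since 3.6) until Python 3.12 removed it, while the context parameter remains. This is not the code from PR #668; the function name is hypothetical. Hostname checking is switched off on the SSL context, while the certificate chain itself is still verified.

```python
import http.client
import ssl
import warnings


def https_without_hostname_check(host, port=None):
    """Open an HTTPSConnection that skips hostname matching (hypothetical helper)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False  # verify_mode stays CERT_REQUIRED
    try:
        with warnings.catch_warnings():
            # Passing check_hostname emits a DeprecationWarning on 3.6 - 3.11
            warnings.simplefilter("ignore", DeprecationWarning)
            return http.client.HTTPSConnection(host, port, context=ctx,
                                               check_hostname=False)
    except TypeError:
        # Signature without check_hostname (Python 3.12+):
        # the flag on the context alone decides.
        return http.client.HTTPSConnection(host, port, context=ctx)
```

Catching TypeError tries the call instead of inspecting the signature up front, which is the trade-off discussed above.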


GitHub Bugzilla Hook

Last month I created a tool which adds comments to Bugzilla when a commit message references a bug number. It was done as a proof of concept and didn't receive much attention at the time. Today I'm happy to announce the existence of GitHub Bugzilla Hook.

I've used David Shea's GitHub Email Hook as my starting template and only modified it where needed. GitHub Bugzilla Hook will examine push data and post comments for every unique bug+branch combination. Once a comment for that particular bug+branch combination is made, new ones will not be posted, even if later commits reference the same bug. My main assumption is that commits related to a bug will be pushed together most of the time, so there shouldn't be lots of noise in Bugzilla.

See rhbz#1274703 for an example of how the comments look. The parser behavior is taken from anaconda and conforms to the style the Red Hat Installer Engineering Team uses. Hopefully you find it useful as well.
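The bug+branch grouping can be sketched in a few lines of Python. The sketch reads the ref and commits fields of a GitHub push event payload and assumes anaconda-style references such as "Resolves: rhbz#1274703" or "Related: rhbz#..."; the hook's real parser may accept more forms, and the function name is hypothetical.

```python
import re

# Assumed reference style, modelled on anaconda commit messages.
BUG_RE = re.compile(r"^(?:Resolves|Related):\s*rhbz#(\d+)", re.MULTILINE)


def group_by_bug_and_branch(push):
    """Map (bug, branch) -> list of commit ids from one push event payload.

    One Bugzilla comment would then be posted per key, listing its commits,
    instead of one comment per commit.
    """
    ref = push["ref"]  # e.g. "refs/heads/master"
    branch = ref[len("refs/heads/"):] if ref.startswith("refs/heads/") else ref
    grouped = {}
    for commit in push["commits"]:
        for bug in BUG_RE.findall(commit["message"]):
            ids = grouped.setdefault((int(bug), branch), [])
            if commit["id"] not in ids:
                ids.append(commit["id"])
    return grouped
```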

My next step is to find a hosting place for this script and hook it up with the rhinstaller GitHub repos!


Bad Stub Design in DNF, Pt.2

Do you remember my example of a bad stub design in DNF? At that time I didn't have a good example of why this is a bad design and what its consequences are. Today I have!

From my comment on PR #118:

Note: the benefits of this patch are quite subtle. I've played around with creating a few more tests and the benefit I see affects only a few lines of code.

For #114 there doesn't seem to be any need to test _get_query directly, although we call

```python
q = self.base.sack.query()
q = q.available()
```

which will benefit from this PR b/c we're stubbing out the entire Sack object. I will work on a test later today/tomorrow to see how it looks.

OTOH for #113, where we modify _get_query, the test can look something like this:

```python
def test_get_query_with_local_rpm(self):
    (fd, rpm_path) = tempfile.mkstemp('foobar-99.99-1.x86_64.rpm')
    os.close(fd)  # only the path is needed
    try:
        # b/c self.cmd.cli.base is a mock object add_remote_rpm
        # will not update the available packages while testing.
        # it is expected to hit an exception
        with self.assertRaises(dnf.exceptions.PackageNotFoundError):
            self.cmd._get_query(rpm_path)
        self.cmd.cli.base.add_remote_rpm.assert_called_with(rpm_path)
    finally:
        os.remove(rpm_path)
```

Note the comment above the with block. If we leave _get_query stubbed out as before (a simple stub function) we're not going to be able to use assert_called_with later.

Now a more practical example. See commit fe13066: in case the package is not found we log the error, and if the configuration is strict=True the plugin will raise another exception. With the initial version of the stubs this change in behavior is silently ignored. If there was an error in the newly introduced lines it would go straight into production because the existing tests passed.

What happens is that test_get_packages() calls _get_packages(['notfound']), which is not the real code but a test stub and returns an empty list in this case. The empty list is what the test expects, so it will not fail!

With my new stub design the test will execute the actual _get_packages() method from download.py and choke on the exception. The test itself needs to be modified, which is done in commit 2c2b34, and no further errors were found.

So let me summarize: **When using mocks, stubs and fake objects we should be replacing external dependencies of the software under test, not internal methods from the SUT!**
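The principle can be illustrated with a self-contained sketch using unittest.mock. Downloader, PackageNotFound and the strict flag are hypothetical stand-ins loosely modelled on the download plugin described above, not DNF's actual code. Only base, the external dependency, is a mock; the test calls the real _get_packages(), so a mistake in the strict branch would make test_not_found_strict fail instead of being hidden by a stub that always returns an empty list.

```python
import unittest
from unittest import mock


class PackageNotFound(Exception):
    """Hypothetical error raised in strict mode."""


class Downloader:
    """Hypothetical plugin command; `base` plays the role of dnf.Base."""

    def __init__(self, base, strict=False):
        self.base = base
        self.strict = strict

    def _get_packages(self, names):
        found = []
        for name in names:
            pkgs = self.base.sack.query().available().filter(name=name).run()
            if not pkgs:
                if self.strict:
                    raise PackageNotFound(name)
                continue  # non-strict: skip the missing package
            found.extend(pkgs)
        return found


class DownloaderTest(unittest.TestCase):
    def setUp(self):
        # Stub only the external dependency: every query comes back empty.
        self.base = mock.Mock()
        query = self.base.sack.query.return_value
        query.available.return_value.filter.return_value.run.return_value = []

    def test_not_found(self):
        # Exercises the real _get_packages(), not a copy of it.
        self.assertEqual(Downloader(self.base)._get_packages(["notfound"]), [])

    def test_not_found_strict(self):
        with self.assertRaises(PackageNotFound):
            Downloader(self.base, strict=True)._get_packages(["notfound"])


if __name__ == "__main__":
    unittest.main()
```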


Tip: Running DNF Plugins from git

This is mostly for self-reference because it is not currently documented in the code. To use dnf plugins from a local git checkout, modify your /etc/dnf/dnf.conf and add the following line under the [main] section:

```
pluginpath=/path/to/dnf-plugins-core/plugins
```


Revamping Anaconda's Dogtail Tests

In my previous post I briefly talked about running anaconda from a git checkout. My goal was to rewrite tests/gui/ so that they don't use a LiveCD and virtual machines anymore. I'm pleased to announce that this is already done (though not merged yet), see PR#457.

The majority of the changes are just shuffling bits around and deleting unused code. The existing UI tests were mostly working and only needed minor changes. There are two things which didn't work and are temporarily disabled:

- Clicking the Help button results in RHBZ #1282432, which in turn may be hiding another bug behind it;

- Looping over the available languages resulted in an AT-SPI NotImplementedError, which I'm going to debug next.

To play around with this, make sure you have accessibility enabled and then run:

```console
# cd anaconda/
# export top_srcdir=`pwd`
# setenforce 0
# cd tests/gui/
# ./run_gui_tests.sh
```

Note: you also need Dogtail for Python3 which isn't officially available yet. I'm building from https://vhumpa.fedorapeople.org/dogtail/beta/dogtail3-0.9.1-0.3.beta3.src.rpm

My future plans are to figure out how to re-enable what is temporarily disabled, update run_gui_tests.sh to properly start gnome-session and enable accessibility, do a better job of cleaning up after a failure, enable coverage and hook everything into make ci.

Happy testing!


Running Anaconda from git

It is now possible to execute anaconda directly from a git checkout.

Disclaimer: this is only for testing purposes; you are not supposed to execute anaconda from git and install a running system! My intention is to use this feature to rewrite the Dogtail tests inside tests/gui/, which rely on having a LiveCD.iso and running VMs to execute. In my experience this has been very slow and has made problems difficult to debug, hence the change.

Note: you will need to have an active DISPLAY in your environment and also set SELinux to permissive, see RHBZ #1276376.

Please see PR 438 for more details.


How Krasi Tsonev Broke Planet.SofiaValley.com

Yesterday I added Krasimir Tsonev's blog to http://planet.sofiavalley.com and the planet broke. Suddenly it started showing only Krasi's articles, all of them with the same date. The problem was that the RSS feed didn't have any timestamps. The fix is trivial:

```diff
--- rss.xml.orig 2015-11-13 10:12:35.348625718 +0200
+++ rss.xml 2015-11-13 10:12:45.157932304 +0200
@@ -9,120 +9,160 @@
<title><![CDATA[A modern React starter pack based on webpack]]></title>
<link>http://krasimirtsonev.com/blog/article/a-modern-react-starter-pack-based-on-webpack</link>
<description><![CDATA[<p><i>Checkout React webpack starter in <a href=\"https://github.com/krasimir/react-web<br /><p>You know how crazy is the JavaScript world nowadays. There are new frameworks, libraries and tools coming every day. Frequently I’m exploring some of these goodies. I got a week long holiday. I promised to myself that I’ll not code, read or watch about code. Well, it’s stronger than me. <a href=\"https://github.com/krasimir/react-webpack-starter\">React werbpack starter</a> is the result of my no-programming week.</p>]]></description>
+ <pubDate>Thu, 01 Oct 2015 00:00:00 +0300</pubDate>
+ <guid>http://krasimirtsonev.com/blog/article/a-modern-react-starter-pack-based-on-webpack</guid>
</item>
```
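A problem like this can be caught before a feed is added to the planet. Below is a minimal Python sketch, using only the standard library, that lists the items of an RSS 2.0 feed which have no pubDate; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET


def items_without_pubdate(rss_text):
    """Return the <link> (or <title>) of every RSS <item> lacking a <pubDate>."""
    root = ET.fromstring(rss_text)
    missing = []
    for item in root.iter("item"):
        if not (item.findtext("pubDate") or "").strip():
            missing.append(item.findtext("link") or item.findtext("title") or "<untitled>")
    return missing
```

An empty result means every item carries a timestamp the aggregator can sort on.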

Thanks to Krasi for fixing this quickly and happy reading!


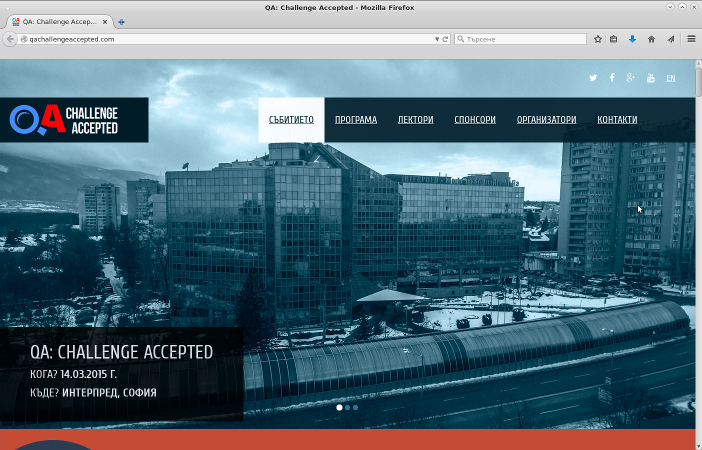

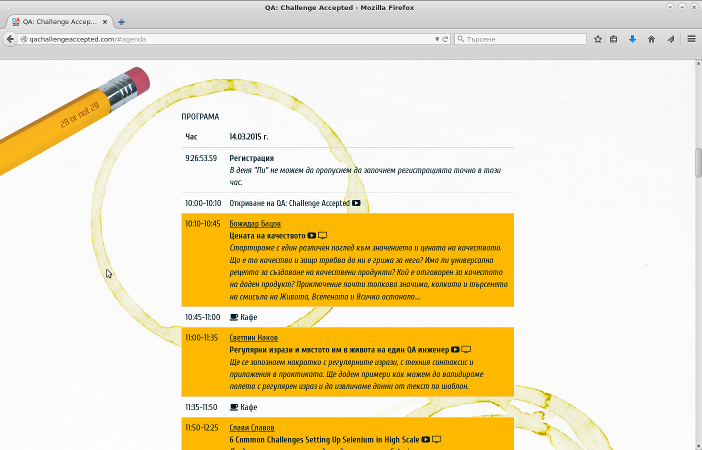

UI Usability Bug for QAChallengeAccepted.com

Today I wanted to submit a presentation proposal for QA Challenge Accepted 2016 and found a usability problem in their website. The first picture is how the UI looks on my screen. As you can see the screen height is enough to show the first section of the interface. There's something orange at the bottom which isn't clearly identifiable. The next picture shows the UI as it looks after clicking on the PROGRAM menu link.

The problem is that I never saw the orange section, which turned out to be the call for papers and a link to the submission form. To fix this, the orange section should either move to the top, where it is clearly visible, or at least a new item should be added to the menu.

Btw the next event will be in March 2016 in Sofia and I hope to see you there!


Anaconda & coverage.py - Pt.3 - coverage-diff

In my previous post I've talked about testing anaconda and friends and raised some questions. Today I'm going to give an example of how to answer one of them: "How different is the code execution path between different tests?"

coverage-tools

I'm going to use coverage-tools in my explanations below, so a little introduction is required. All the tools are executable Python scripts which build on top of the existing coverage.py API. The difference is mainly in flexibility of parameters and output formatting. I've tried to stay as close as possible to the existing behavior of coverage.py.

coverage-annotate - when given a .coverage data file prints the source code annotated with line numbers and execution markers.

```text
!!! missing /usr/lib64/python2.7/site-packages/pyanaconda/anaconda_argparse.py
>>> covered /usr/lib64/python2.7/site-packages/pyanaconda/anaconda_argparse.py
... skip ...
37 > import logging
38 > log = logging.getLogger("anaconda")
39
40 # Help text formatting constants
41
42 > LEFT_PADDING = 8 # the help text will start after 8 spaces
43 > RIGHT_PADDING = 8 # there will be 8 spaces left on the right
44 > DEFAULT_HELP_WIDTH = 80
45
46 > def get_help_width():
47 > """
48 > Try to detect the terminal window width size and use it to
49 > compute optimal help text width. If it can't be detected
50 > a default values is returned.
51
52 > :returns: optimal help text width in number of characters
53 > :rtype: int
54 > """
55 # don't do terminal size detection on s390, it is not supported
56 # by its arcane TTY system and only results in cryptic error messages
57 # ending on the standard output
58 # (we do the s390 detection here directly to avoid
59 # the delay caused by importing the Blivet module
60 # just for this single call)
61 > is_s390 = os.uname()[4].startswith('s390')
62 > if is_s390:
63 ! return DEFAULT_HELP_WIDTH
64
... skip ...
```

In the example above all lines marked with > were executed by the interpreter. All top-level import statements were executed as you would expect. Then the method get_help_width() was executed (called from somewhere). Because this was on an x86_64 machine, line 63 was not executed; it is marked with !. The comments and empty lines are of no interest.
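The annotation format itself is easy to reproduce. The sketch below is not coverage-tools' implementation; it is a hypothetical function that takes a file's source lines plus two sets of line numbers (executable statements and statements that never ran) and prints them in the "NN > code" / "NN ! code" style shown above.

```python
def annotate(source_lines, statements, missing):
    """Annotate source lines with coverage markers.

    statements: line numbers that are executable statements
    missing:    executable line numbers that never ran
    Produces "NN > code" (executed), "NN ! code" (not executed) or
    "NN code" (comments, blank lines and other non-statements).
    """
    annotated = []
    for lineno, text in enumerate(source_lines, start=1):
        if lineno in missing:
            marker = "!"
        elif lineno in statements:
            marker = ">"
        else:
            marker = ""
        prefix = ("%d %s" % (lineno, marker)).rstrip()
        annotated.append(("%s %s" % (prefix, text)).rstrip())
    return annotated
```

With coverage.py installed, the two sets could come from something like cov = coverage.Coverage(data_file=".coverage"); cov.load(); _, statements, _, missing, _ = cov.analysis2(path), since Coverage.analysis2() returns the file name, statements, excluded lines, missing lines and a formatted summary.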

coverage-diff - produces git-like diff reports on the text output of annotate.

```diff
--- a/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/source.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/source.py
@@ -634,7 +634,7 @@
634 # Wait to make sure the other threads are done before sending ready, otherwise
635 # the spoke may not get be sensitive by _handleCompleteness in the hub.
636 > while not self.ready:
- 637 ! time.sleep(1)
+ 637 > time.sleep(1)
638 > hubQ.send_ready(self.__class__.__name__, False)
639
640 > def refresh(self):
```

In this example line 637 was not executed in the first test run, while it was executed in the second test run. Reading the comments above it is clear the difference between the two test runs is just timing and synchronization.
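Given two annotated listings of the same file, Python's difflib already produces this kind of report. The function below is a hypothetical sketch, not the coverage-tools code; it assumes absolute paths so that "a" + path yields the a/usr/lib64/... headers seen above.

```python
import difflib


def coverage_diff(old_annotated, new_annotated, path, context=3):
    """Return a git-style unified diff between two annotated listings of one file."""
    return list(difflib.unified_diff(
        old_annotated, new_annotated,
        fromfile="a" + path, tofile="b" + path,  # path assumed absolute
        n=context, lineterm=""))
```

When whole files are annotated, one list entry per source line, the list positions coincide with source line numbers, so hunk headers such as @@ -634,7 +634,7 @@ point at the real lines.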

Kickstart vs. Kickstart

How different is the code execution path between different tests? Looking at Fedora 23 test results we see several tests which differ only slightly in their setup: installation via HTTP, FTP or NFS; installation to SATA, SCSI or SAS drives; installation using RAID for the root file system. These are good candidates for further analysis.

Note: my results below are not from Fedora 23 but the conclusions still apply! The tests were executed on bare metal and virtual machines, trying to use the same hardware or same systems configurations where possible!

Example: HTTP vs. FTP

```diff
--- a/usr/lib64/python2.7/site-packages/pyanaconda/packaging/__init__.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/packaging/__init__.py
@@ -891,7 +891,7 @@
891
892 # Run any listeners for the new state
893 > for func in self._event_listeners[event_id]:
- 894 ! func()
+ 894 > func()
895
896 > def _runThread(self, storage, ksdata, payload, fallback, checkmount):
897 # This is the thread entry
--- a/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/lib/resize.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/lib/resize.py
@@ -102,10 +102,10 @@
102 # Otherwise, fall back on increasingly vague information.
103 > if not part.isleaf:
104 > return self.storage.devicetree.getChildren(part)[0].name
- 105 > if getattr(part.format, "label", None):
+ 105 ! if getattr(part.format, "label", None):
106 ! return part.format.label
- 107 > elif getattr(part.format, "name", None):
- 108 > return part.format.name
+ 107 ! elif getattr(part.format, "name", None):
+ 108 ! return part.format.name
109 ! else:
110 ! return ""
111
@@ -315,10 +315,10 @@
315 > def on_key_pressed(self, window, event, *args):
316 # Handle any keyboard events. Right now this is just delete for
317 # removing a partition, but it could include more later.
- 318 > if not event or event and event.type != Gdk.EventType.KEY_RELEASE:
+ 318 ! if not event or event and event.type != Gdk.EventType.KEY_RELEASE:
319 ! return
320
- 321 > if event.keyval == Gdk.KEY_Delete and self._deleteButton.get_sensitive():
+ 321 ! if event.keyval == Gdk.KEY_Delete and self._deleteButton.get_sensitive():
322 ! self._deleteButton.emit("clicked")
323
324 > def _sumReclaimableSpace(self, model, path, itr, *args):
--- a/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/source.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/source.py
@@ -634,7 +634,7 @@
634 # Wait to make sure the other threads are done before sending ready, otherwise
635 # the spoke may not get be sensitive by _handleCompleteness in the hub.
636 > while not self.ready:
- 637 ! time.sleep(1)
+ 637 > time.sleep(1)
638 > hubQ.send_ready(self.__class__.__name__, False)
639
640 > def refresh(self):
```

The difference in source.py comes from timing/synchronization and can safely be ignored.

I'm not exactly sure about __init__.py, but it doesn't look like a big deal.

We're left with resize.py. The differences in on_key_pressed() are because I've probably used the keyboard instead of the mouse (these are indeed manual installs). The other difference is in how the partition labels are displayed. One of the installs was probably using fresh disks while the other was not.

Example: SATA vs. SCSI - no difference

Example: SATA vs. SAS (mpt2sas driver)

```diff
--- a/usr/lib64/python2.7/site-packages/pyanaconda/bootloader.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/bootloader.py
@@ -109,10 +109,10 @@
109 > try:
110 > opts.parity = arg[idx+0]
111 > opts.word = arg[idx+1]
- 112 ! opts.flow = arg[idx+2]
- 113 ! except IndexError:
- 114 > pass
- 115 > return opts
+ 112 > opts.flow = arg[idx+2]
+ 113 > except IndexError:
+ 114 ! pass
+ 115 ! return opts
116
117 ! def _is_on_iscsi(device):
118 ! """Tells whether a given device is on an iSCSI disk or not."""
@@ -1075,13 +1075,13 @@
1075 > command = ["serial"]
1076 > s = parse_serial_opt(self.console_options)
1077 > if unit and unit != '0':
- 1078 ! command.append("--unit=%s" % unit)
+ 1078 > command.append("--unit=%s" % unit)
1079 > if s.speed and s.speed != '9600':
1080 > command.append("--speed=%s" % s.speed)
1081 > if s.parity:
- 1082 ! if s.parity == 'o':
+ 1082 > if s.parity == 'o':
1083 ! command.append("--parity=odd")
- 1084 ! elif s.parity == 'e':
+ 1084 > elif s.parity == 'e':
1085 ! command.append("--parity=even")
1086 > if s.word and s.word != '8':
1087 ! command.append("--word=%s" % s.word)
```

As you can see the difference is minimal, mostly related to the underlying hardware. As far as I can tell this has to do with how the bootloader is installed on disk but I'm no expert on this particular piece of code. I've seen the same difference in other comparisons so it probably has to do more with hardware than with what kind of disk/driver is used.

Example: RAID 0 vs. RAID 1 - manual install

```diff
--- a/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/datetime_spoke.py
+++ b/usr/lib64/python2.7/site-packages/pyanaconda/ui/gui/spokes/datetime_spoke.py
@@ -490,9 +490,9 @@
490
491 > time_init_thread = threadMgr.get(constants.THREAD_TIME_INIT)
492 > if time_init_thread is not None:
- 493 > hubQ.send_message(self.__class__.__name__,
- 494 > _("Restoring hardware time..."))
- 495 > threadMgr.wait(constants.THREAD_TIME_INIT)
+ 493 ! hubQ.send_message(self.__class__.__name__,
+ 494 ! _("Restoring hardware time..."))
+ 495 ! threadMgr.wait(constants.THREAD_TIME_INIT)
496
497 > hubQ.send_ready(self.__class__.__name__, False)
498
```

As far as I can tell the difference is related to hardware clock settings, probably due to different BIOS defaults on the various hardware. Additional tests with RAID 5 and RAID 6 reveal the same exact difference. RAID 0 vs. RAID 10 shows no difference at all. Indeed, as far as I know anaconda delegates the creation of RAID arrays to mdadm once the desired configuration is known, so these results are to be expected.

Conclusion

As you can see, sometimes there are tests which appear to be very important but in reality cover a corner case of the base test. For example, if any of the RAID levels works we can be pretty confident, if not that all of them work, then at least that they won't break in anaconda (thanks Adam Williamson)!

What you do with this information is up to you. Sometimes QA is able to execute all the tests and life is good. Sometimes we have to compromise, skip some testing and accept the risks of doing so. Sometimes you can execute all tests for every build, sometimes only once per milestone. Whatever the case, having the information to back up your decision is vital!

In my next post on this topic I'm going to talk more about functional tests vs. unit tests. Both anaconda and blivet have both kinds of tests, and I'm interested to know whether tests from the two categories focus on the same functionality and, if so, how they differ. If we have a unit test for feature X, is it worth spending the resources on functional testing for X as well?


Anaconda & coverage.py - Pt.2 - Details

My previous post was an introduction to testing installation related components. Now I'm going to talk more about anaconda and how it is tested.

There are two primary ways to test anaconda. You can execute make check in the source directory, which will trigger the package test suite. The other possibility is to perform an actual installation, on bare metal or a virtual machine, using the latest Rawhide snapshots, which also include the latest anaconda. For both of these methods we can collect code coverage information. In live installation mode coverage is enabled via the inst.debug boot argument. Fedora 23 and earlier use debug=1, but that can sometimes lead to problems.

Kickstart Testing

Kickstart is a method of automating the installation of Fedora by supplying the necessary configuration in a text file and pointing the installer at this file. Inside the anaconda source there is the tests/kickstart_tests directory, where each test is a kickstart file and a shell script. The test runner provisions a virtual machine using boot.iso and the kickstart file. A shell script then verifies that the installation was as expected and copies files of interest to the host system.

Kickstart files are also the basis for testing Fedora installations in Beaker.

Naturally some of these in-package kickstart tests are the same as out-of-band kickstart tests. Hint: there are more available but not yet public.

The question which I don't have an answer for right now is: "Can we remove some of the duplicates, and how would this affect the devel and QE teams?" The pro of in-package testing is that it is faster compared to Beaker. The con is that you're not testing the real distro (every snapshot is a possible final release to the users).

Dogtail

Dogtail uses accessibility technologies to communicate with desktop applications. It is written in Python and can be used as a GUI test automation framework. A long time ago I proposed support for Dogtail in anaconda, which was rejected; a couple of years later it was accepted and later removed from the code again.

Anaconda has in-package Dogtail tests (tests/gui/). They work by attaching a second disk image with the test suite to a VM running a LiveCD. Anaconda is started on the LiveCD and an attempt to install Fedora on disk 1 is made. Everything is driven by the Dogtail scripts. There are only a few of these tests available and they are currently disabled.

Red Hat QE has also created another method for running Dogtail tests in anaconda using an updates.img with the previous functionality.

Even if there are some duplicate tests, I'm not convinced we have to drop the tests/gui/ directory from the code, because the framework used to drive the graphical interface of anaconda appears to be very well written. The code is clean and easy to follow.

Also, I don't have metrics on how much these two methods differ or how much they cover in their testing. IMO they are pretty close, and until we find a way to reliably execute them on a regular basis there isn't much to be done here.

One idea is to use the --dirinstall or --image options and skip the LiveCD part entirely.

How Much is Tested

make ci covers 10% of the entire code base for anaconda. Mind you, tests/storage and tests/gui are currently disabled. See PR #346, PR #327 and PR #319!

There is definitely room for improvement.

On the other hand, live installation testing fares much better. Text mode covers around 25% of the code, while graphical installations cover around 40%. Combined, text and graphical installations cover 50%, which means the two modes overlap considerably. These numbers will drop quite a bit once anaconda learns to include all possible files in its report, but they are a good estimate.
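The combined figure is lower than 25% + 40% because coverage from several runs merges as a union of executed lines, so lines hit by both text and graphical installs are counted once. A small worked sketch with made-up line sets for a hypothetical 100-line code base reproduces the numbers above; the line ranges are illustrative assumptions, not real anaconda data.

```python
def percent(lines, total):
    """Percentage of the code base covered by a set of executed line ids."""
    return 100.0 * len(lines) / total


TOTAL_LINES = 100  # hypothetical code base size

# Hypothetical executed lines: text mode hits lines 0..24,
# graphical mode hits lines 10..49, so lines 10..24 are shared.
text_mode = set(range(0, 25))
graphical = set(range(10, 50))

combined = text_mode | graphical  # line-level union, as when merging coverage data
shared = text_mode & graphical    # lines exercised by both modes

print(percent(text_mode, TOTAL_LINES))  # 25.0
print(percent(graphical, TOTAL_LINES))  # 40.0
print(percent(combined, TOTAL_LINES))   # 50.0
print(percent(shared, TOTAL_LINES))     # 15.0
```

Under these assumptions 15% of the code is exercised by both modes, which is the kind of overlap the questions below are trying to quantify.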

The important questions to ask here are:

- How much can PyUnit tests cover in anaconda?

- How much can kickstart tests cover?

- Have we reached a threshold in either of the two primary testing methods?

- Does UI automation (with Dogtail) improve anything?

- When testing a particular feature (say user creation), how different is the code execution path between manual (GUI) testing, kickstart and unit testing? If the paths are not that different, can we invest in unit tests instead of higher-level tests?

- How different is the code execution path between different tests (manual or kickstart)? In other words, how much value are we getting from testing for the resources we're putting in?

In my next post I will talk more about these questions and some rudimentary analysis against coverage data from the various test methods and test cases!


Anaconda & coverage.py - Pt.1 - Introduction

Since early 2015 I've been working on testing installation related components in Rawhide. I'm interested in the code produced by the Red Hat Installer Engineering Team and in particular in anaconda, blivet, pyparted and pykickstart. The goal of this effort is to improve the overall testing of these components and also have Red Hat QE contribute some of our knowledge back to the community. The benefit of course will be better software for everyone. In the next several posts I'll summarize what has been done so far and what's to be expected in the future.

Test Documentation Matters

Do you want others to contribute tests? I certainly do! When I started looking at the code it was immediately clear there was no documentation related to testing. Everyone needs to know how to write and execute these tests! Currently we have basic README files describing how to install the necessary dependencies for development and test execution, how to execute the tests (and what can be tested) and, most importantly, what the test architecture is. There is a description of how the file structure is organized and which base classes to inherit from when adding new tests. Most of the time each component goes through a pylint check and a standard PyUnit test suite.

Test documentation is usually in a tests/README file. For example:

I've tried to explain as much as possible without bloating the files and going into unnecessary details. If you spot something missing please send a pull request.

Continuous Integration

This has been largely an effort driven by Chris Lumens from the devel team.

All the components I'm interested in are tested regularly in a CI environment.

There is a make ci Makefile target for those of you interested in what exactly gets executed.

Test Coverage

In order to improve something you need to know where you stand. Well, I didn't. That's why the first step was to integrate the coverage.py tool with all of these components.

With the exception of pyparted (largely written in C), all of the other components integrate well with coverage.py and produce good statistics. pykickstart is the champ here with 90% coverage, while anaconda is somewhere between 10% and 50%. Full test coverage measurement for anaconda isn't straightforward and will be the subject of my next post. For the C-based code we have to hook up with Gcov, which shouldn't be too difficult.

At the moment there are several open pull requests to integrate the coverage test targets with make ci and also report the results in human-readable form. I will be collecting these for historical reference.

Tools

I've created some basic text-mode coverage-tools to help me combine and compare data from different executions. These are only the start of it and I'm expanding them as my needs for reporting and analytics evolve. I'm also looking into more detailed coverage reports but I don't have enough data and use cases to work on this front at the moment.

Some ideas currently in mind:

- map code changes (git commits) to existing test coverage to get a feeling where to invest in more testing;

- map bugs to code areas and to existing test coverage to see if we aren't missing tests in areas where the bugs are happening;
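The first idea boils down to a set difference between the lines a commit touches and the lines the tests execute. The sketch below is a hypothetical helper, not one of my coverage-tools scripts; it assumes you've already extracted changed line numbers per file (e.g. from git diff) and executed line numbers per file (e.g. from coverage data) into plain dicts of sets.

```python
def untested_changes(changed, covered):
    """Return {filename: sorted list of changed lines no test executed}.

    changed -- {filename: set of line numbers touched by a commit}
    covered -- {filename: set of line numbers executed by the tests}
    """
    report = {}
    for filename, lines in changed.items():
        # Files missing from coverage data count as completely unexecuted
        missing = lines - covered.get(filename, set())
        if missing:
            report[filename] = sorted(missing)
    return report


def risk_ratio(changed, covered):
    """Fraction (0.0 .. 1.0) of all changed lines that no test executed."""
    total = sum(len(lines) for lines in changed.values())
    if total == 0:
        return 0.0
    missing = sum(len(v) for v in untested_changes(changed, covered).values())
    return missing / total


# Hypothetical example data
changed = {"users.py": {10, 11, 12}, "storage.py": {40}}
covered = {"users.py": {10, 12, 13}}
print(untested_changes(changed, covered))  # {'users.py': [11], 'storage.py': [40]}
print(risk_ratio(changed, covered))        # 0.5
```

A high ratio for a commit would suggest that the area it touches is a good candidate for more testing; the same approach works for the second idea if bug fixes are mapped to the lines they changed.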

Bugs

coverage.py is a very nice tool indeed, but I guess most people use it in a very limited way. Shortly after I started working with it I found several places that need improvement. These have to do with combining and reporting on multiple files.

Some of the interesting issues I've found that are still open:

- PR #63 - New option --dont-remove when combining coverage data

- #425 - source parameter not including files which are explicitly specified

- #426 - Difference between coverage results with source specifies full dir instead of module name

In my next post I will talk about anaconda code coverage and what I want to do with it. In the meantime please use the comments to share your feedback.


Unit Testing Example - Bad Stub Design in DNF

In software testing, usually unit testing, test stubs are programs that simulate the behavior of the external dependencies of the module under test. Test stubs provide canned answers to calls made during the test.

I've discovered an improperly written stub method in one of DNF's tests:

```python
class DownloadCommandTest(unittest.TestCase):

    def setUp(self):
        def stub_fn(pkg_spec):
            if '.src.rpm' in pkg_spec:
                return Query.filter(sourcerpm=pkg_spec)
            else:
                q = Query.latest()
                return [pkg for pkg in q if pkg_spec == pkg.name]

        cli = mock.MagicMock()
        self.cmd = download.DownloadCommand(cli)
        self.cmd.cli.base.repos = dnf.repodict.RepoDict()
        self.cmd._get_query = stub_fn
        self.cmd._get_query_source = stub_fn
```

The replaced methods look like this:

```python
def _get_query(self, pkg_spec):
    """Return a query to match a pkg_spec."""
    subj = dnf.subject.Subject(pkg_spec)
    q = subj.get_best_query(self.base.sack)
    q = q.available()
    q = q.latest()
    if len(q.run()) == 0:
        msg = _("No package " + pkg_spec + " available.")
        raise dnf.exceptions.PackageNotFoundError(msg)
    return q

def _get_query_source(self, pkg_spec):
    """Return a query to match a source rpm file name."""
    pkg_spec = pkg_spec[:-4]  # skip the .rpm
    nevra = hawkey.split_nevra(pkg_spec)
    q = self.base.sack.query()
    q = q.available()
    q = q.latest()
    q = q.filter(name=nevra.name, version=nevra.version,
                 release=nevra.release, arch=nevra.arch)
    if len(q.run()) == 0:
        msg = _("No package " + pkg_spec + " available.")
        raise dnf.exceptions.PackageNotFoundError(msg)
    return q
```

As seen here, stub_fn replaces both _get_query and _get_query_source, i.e. methods of the class under test. At the time of writing this probably seemed like a good idea to speed up writing the tests.

The trouble is we should be replacing the external dependencies of _get_query (other parts of DNF essentially) and not methods of DownloadCommand. To understand why this is a bad idea, check PR #113, which directly modifies _get_query. Because the test swaps _get_query out for a stub, the modified code never runs, so there's no way to test this patch with the test in its current state.

So I took a few days to experiment and update the current test stubs. The result is PR #118. The important bits are the SackStub and SubjectStub classes, which hold information about the available RPM packages on the system. The rest are cosmetic changes to fit the way the query objects are used (q.available(), q.latest(), q.filter()). The proposed design correctly overrides the external dependencies on dnf.subject.Subject and self.base.sack, which are initialized before our plugin is loaded by DNF.
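The principle can be shown without DNF at all. In the minimal sketch below every class is hypothetical and heavily simplified: DownloadCommand only depends on a base object with a sack, and the test hands it a SackStub with canned packages. The method under test, _get_query, runs unmodified, so a change to it (like the one in PR #113) would actually be exercised. This is not the code from PR #118, only an illustration of the same idea.

```python
import unittest
from types import SimpleNamespace


class PackageNotFoundError(Exception):
    """Hypothetical stand-in for dnf.exceptions.PackageNotFoundError."""


class DownloadCommand:
    """Hypothetical, heavily simplified command under test."""

    def __init__(self, base):
        self.base = base  # external dependency: provides .sack

    def _get_query(self, pkg_spec):
        # Real logic under test: filter by name, keep newest, fail if empty
        q = self.base.sack.query().filter(name=pkg_spec).latest()
        if not q.run():
            raise PackageNotFoundError("No package %s available." % pkg_spec)
        return q


class QueryStub:
    """Canned query over a fixed list of (name, version) tuples."""

    def __init__(self, pkgs):
        self.pkgs = list(pkgs)

    def filter(self, name):
        return QueryStub(p for p in self.pkgs if p[0] == name)

    def latest(self):
        # Simplified: keep only the single newest package
        if not self.pkgs:
            return QueryStub([])
        return QueryStub([max(self.pkgs, key=lambda p: p[1])])

    def run(self):
        return list(self.pkgs)


class SackStub:
    """Replaces the external package sack, not a method of the class under test."""

    def __init__(self, pkgs):
        self.pkgs = pkgs

    def query(self):
        return QueryStub(self.pkgs)


class DownloadCommandTest(unittest.TestCase):
    def setUp(self):
        sack = SackStub([("foo", 1), ("foo", 2), ("bar", 1)])
        self.cmd = DownloadCommand(SimpleNamespace(sack=sack))

    def test_latest_version_is_returned(self):
        self.assertEqual(self.cmd._get_query("foo").run(), [("foo", 2)])

    def test_missing_package_raises(self):
        with self.assertRaises(PackageNotFoundError):
            self.cmd._get_query("baz")


if __name__ == "__main__":
    unittest.main()
```

The design choice is that the stub sits at the boundary (the sack), so anything inside DownloadCommand remains real code that the test can break.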

I must say this is the first error of this kind I've seen in my QA practice so far. I have no idea whether this was a minor oversight or something that happens more frequently in open source projects, but it's a great example nevertheless.

For those of you who'd like to get started on unit testing I can recommend the book The Art of Unit Testing: With Examples in .Net by Roy Osherove!

UPDATE: Part 2 with more practical examples can be found here.


4000+ bugs in Fedora - checksec failures

In the last week I've been trying to figure out how many packages conform to the new Harden All Packages policy in Fedora!

Of 46884 RPMs, 17385 are 'x86_64', meaning they may contain ELF objects.

Of those, 4489 are reported as failing checksec.

What you should see as the output from checksec is:

Full RELRO Canary found NX enabled PIE enabled No RPATH No RUNPATH

Full RELRO Canary found NX enabled DSO No RPATH No RUNPATH

The first line is for binaries, the second one for libraries, because DSOs on x86_64 are always position-independent. Some RPATHs are acceptable, e.g. %{_libdir}/foo/, and I've tried to exclude them unless other offenses are found. The script which does this is checksec-collect.

Most often I'm seeing Partial RELRO, No canary found and No PIE errors. Since all packages potentially process untrusted input, it makes sense for all of them to be hardened and enhance the security of Fedora. That's why all of these errors should be considered valid bugs.
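Checking a single line of checksec output against the expected values above can be sketched as follows. This is a hypothetical helper, not the checksec-collect script; it assumes the plain-text, space-separated format shown above and does not implement the allow-list for acceptable RPATHs.

```python
EXPECTED_COMMON = ("Full RELRO", "Canary found", "NX enabled")


def checksec_problems(line, is_library=False):
    """Return the expected checksec fields missing from one output line.

    Binaries must report "PIE enabled"; shared libraries report "DSO"
    instead because DSOs on x86_64 are always position-independent.
    Matching is case-sensitive, so "No canary found" does not satisfy
    "Canary found" and "Partial RELRO" does not satisfy "Full RELRO".
    """
    expected = list(EXPECTED_COMMON)
    expected.append("DSO" if is_library else "PIE enabled")
    # Not handled here: allow-listing acceptable RPATHs such as %{_libdir}/foo/
    expected += ["No RPATH", "No RUNPATH"]
    return [field for field in expected if field not in line]


good = "Full RELRO Canary found NX enabled PIE enabled No RPATH No RUNPATH"
bad = "Partial RELRO No canary found NX enabled No PIE No RPATH No RUNPATH"
print(checksec_problems(good))  # []
print(checksec_problems(bad))   # ['Full RELRO', 'Canary found', 'PIE enabled']
```

The "bad" line shows exactly the three most common failures mentioned above.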

Attn package maintainers

Please see if your package is in the list and try to fix it, or let me know why it should be excluded, for example because it's a boot loader that doesn't function properly with hardening enabled. The full list is available at GitHub.

For more information about the different protection mechanisms see the following links:

- Partial vs Full RELRO

- Stack canaries

- NX memory protection

- Position Independent Executables

- RPATH

- RUNPATH

UPDATE 2015-09-17

I've posted my findings on fedora-devel and the comments are more than interesting, even revealing an old bug in libtool.


Minor Typo Bug in Messenger for bg_BG.UTF-8

There's a typo in the Bulgarian translation of Messenger.com. It is highlighted by the red dot on the picture.

hunspell easily catches it, so either Facebook doesn't run their translations through a spell checker or their spell checker is borked.


Pedometer Bug in Samsung S Health

Do you remember the pedometer bug in Samsung Gear Fit that I discovered earlier? It turns out that Samsung is a fan of this one and has the exact same bug in their S Health application.

The application doesn't block the pedometer (i.e. step counting) while you're performing other activities, such as cycling. So in reality it reports an incorrect value for burned calories. At this point I'd call it bad software development practice/architecture on Samsung's part that leads to this bug being present.

Btw for more interesting bugs see Samsung Gear Fit Bug-of-the-Day.


Call for Ideas: Graphical Test Coverage Reports

If you are working with Python and writing unit tests, chances are you are familiar with the coverage reporting tool. However, there are testing scenarios in which we either don't use unit tests or execute different code paths (test cases) independently of each other.

For example, this is the case with installation testing in Fedora. Because anaconda, the installer, is very complex, the easiest way is to test it live, not with unit tests. Even though we can get a coverage report (anaconda is written in Python), it reflects only the test case it was collected from.

coverage combine can be used to combine several data files and produce an aggregate report. This can tell you how much test coverage you have across all your tests.

As far as I can tell Python's coverage doesn't tell you how many times a particular line of code has been executed. It also doesn't tell you which test cases executed a particular line (see PR #59). In the Fedora example, I have the feeling many of our tests are touching the same code base and not contributing that much to the overall test coverage. So I started working on these items.
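Both missing pieces can be approximated outside coverage.py by inverting per-test data. A minimal sketch, assuming each test's executed lines have already been loaded into a dict of sets; the test names and file names are made up. Note that this counts how many test cases executed a line, not how many times it ran within one test, since plain line coverage only records whether a line ran.

```python
from collections import defaultdict


def lines_to_tests(per_test):
    """Invert {test: {filename: set(lines)}} into {(filename, line): set(tests)}."""
    index = defaultdict(set)
    for test, files in per_test.items():
        for filename, lines in files.items():
            for line in lines:
                index[(filename, line)].add(test)
    return dict(index)


def hit_counts(per_test):
    """Number of test cases that executed each (filename, line)."""
    return {key: len(tests) for key, tests in lines_to_tests(per_test).items()}


def redundant_tests(per_test):
    """Tests that execute no line unique to them (tests executing nothing included)."""
    index = lines_to_tests(per_test)
    result = []
    for test, files in per_test.items():
        has_unique_line = any(
            index[(filename, line)] == {test}
            for filename, lines in files.items()
            for line in lines
        )
        if not has_unique_line:
            result.append(test)
    return sorted(result)


# Hypothetical data: the kickstart user test is fully contained in the GUI one
per_test = {
    "ks_user": {"users.py": {1, 2, 3}},
    "gui_user": {"users.py": {1, 2, 3, 4}},
    "ks_lvm": {"storage.py": {10}},
}
print(redundant_tests(per_test))  # ['ks_user']
```

A test reported by redundant_tests adds no line coverage on its own, although it may still be valuable for other reasons (different data, different user workflow).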

I imagine a script which will read coverage data from several test executions (preferably in JSON format, PR #60) and produce a graphical report similar to what GitHub does for your commit activity.

See an example here!

The example uses darker colors to indicate more executions of a line and lighter colors for fewer. Check the HTML for the actual numbers because there are no hints yet. The input JSON files are here and the script to generate the above HTML is at GitHub.
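Turning execution counts into shades is mostly a matter of bucketing. A sketch, assuming a five-step green palette (the hex values are arbitrary picks, not taken from GitHub or from my script) and emitting a title attribute so each line's count could show up as a hover hint:

```python
import html

# Arbitrary light-to-dark palette; index 0 is used for lines never executed.
PALETTE = ["#ffffff", "#d6e685", "#8cc665", "#44a340", "#1e6823"]


def shade(count, max_count):
    """Map an execution count to a palette color (0 -> white, max -> darkest)."""
    if count <= 0 or max_count <= 0:
        return PALETTE[0]
    steps = len(PALETTE) - 1
    # Integer ceil(count / max_count * steps), so a single hit is never white
    level = -(-count * steps // max_count)
    return PALETTE[min(level, steps)]


def render(source_lines, counts):
    """Return an HTML <pre> block with each line shaded by its count.

    source_lines -- list of source code strings (line 1 is index 0)
    counts       -- {line_number: execution count}
    """
    max_count = max(counts.values(), default=0)
    out = ["<pre>"]
    for number, text in enumerate(source_lines, start=1):
        count = counts.get(number, 0)
        out.append(
            '<span style="background:%s" title="%d">%s</span>'
            % (shade(count, max_count), count, html.escape(text))
        )
    out.append("</pre>")
    return "\n".join(out)


print(render(["x = 1", "if x < 2:", "    x += 1"], {1: 4, 2: 4, 3: 1}))
```

Scaling relative to the busiest line keeps the colors meaningful regardless of how many test runs were combined.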

Now I need your ideas and comments!

What kinds of coverage reports are you using in your job? How do you generate them? What do they look like?


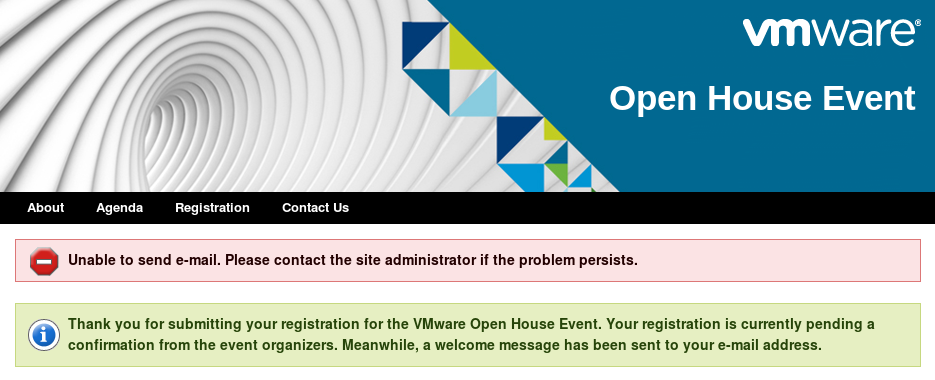

Bug in VMware Open House Website

This is a slightly annoying UI bug in VMware's Open House website. I've reported it and hopefully they will fix it.

