Free Software Testing Books

There's a huge list of free books on the topic of software testing. This will definitely be my summer reading list. I hope you find it helpful.

200 Graduation Theses About Software Testing

The guys from QAHelp have compiled a list of 200 graduation theses from various universities which are freely accessible online. The list can be found here.


Pedometer Bug in Samsung Gear Fit Smartwatch

Image source Pocketnow


Recently I've been playing around with a Samsung Gear Fit and while the hardware seems good I'm a bit disappointed with the software side. There is at least one bug which is clearly visible: the pedometer counts calories twice when it is on and exercise mode is started.

How to test:

- Start the Pedometer app and record any initial readings;

- Walk a fixed distance and at the end record all readings;

- Now go back to the Exercise app and select a Walking exercise from the menu. Tap Start;

- Walk back the same distance/road as before. At the end of the journey stop the walking exercise and record all readings.

Expected results:

At the end of the trip I expect to see roughly the same calories burned for both directions.

Actual results:

The return trip counted twice as many calories compared to the forward trip. Here's some actual data to prove it:

+--------------------------+----------+----------------+---------+-------------+---------+
|                          | Initial  | Forward trip   |         | Return trip |         |
|                          | Readings | Pedometer only | Delta   | Pedometer & | Delta   |
|                          |          |                |         | Exercise    |         |
+--------------------------+----------+----------------+---------+-------------+---------+
| Total Steps              | 14409 st | 14798 st       | 389 st  | 15246 st    | 448 st  |
+--------------------------+----------+----------------+---------+-------------+---------+
| Total Distance           | 12,19 km | 12,52 km       | 0,33 km | 12,90 km    | 0,38 km |
+--------------------------+----------+----------------+---------+-------------+---------+
| Cal burned via Pedometer | 731 Cal  | 751 Cal        | 20 Cal  | 772 Cal     | 21 Cal  |
+--------------------------+----------+----------------+=========+-------------+=========+
| Cal burned via Exercise  | 439 Cal  | 439 Cal        | 0       | 460 Cal     | 21 Cal  |
+--------------------------+----------+----------------+---------+-------------+=========+
| Total calories burned    | 1170 Cal | 1190 Cal       | 20 Cal  | 1232 Cal    | 42 Cal  |
+--------------------------+----------+----------------+=========+-------------+=========+

Note: Data values above were taken from Samsung's S Health app which is easier to work with instead of the Gear Fit itself.

The problem is that both apps access the sensor simultaneously and are not aware of each other. In theory it should be relatively easy to block one app while the other is running, but that may not be so easy to implement on a platform as limited as the Gear Fit.
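The double counting is easy to check from the table with a few lines of Python. This is only a sketch of the arithmetic: the dictionary layout and the names are my own, the numbers are copied from the table above.

```python
# Readings copied from the table: (initial, after forward trip, after return trip).
readings = {
    "steps": (14409, 14798, 15246),
    "pedometer_cal": (731, 751, 772),
    "exercise_cal": (439, 439, 460),
}

def deltas(initial, forward, back):
    """Return (forward-trip delta, return-trip delta) for one counter."""
    return forward - initial, back - forward

steps_fwd, steps_back = deltas(*readings["steps"])
ped_fwd, ped_back = deltas(*readings["pedometer_cal"])
ex_fwd, ex_back = deltas(*readings["exercise_cal"])

total_fwd = ped_fwd + ex_fwd      # calories credited on the forward trip
total_back = ped_back + ex_back   # calories credited on the return trip

print("steps:", steps_fwd, steps_back)
print("total calories:", total_fwd, total_back)
print("calories per step:",
      round(total_fwd / steps_fwd, 3), round(total_back / steps_back, 3))
```

The step counts differ by about 15% between the two directions while the credited calories roughly double, which is what one would expect if both apps add the same walk to the total.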


2 Barcode Related Bugs in MyFitnessPal

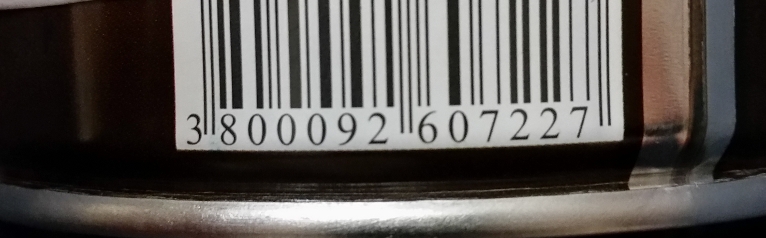

Did you know that the popular MyFitnessPal application can't scan barcodes printed on curved surfaces? The above barcode fails to scan because it is printed on a metal can full of roasted almonds :). In contrast the Barcode Scanner from ZXing Team understands it just fine. My bet is that MyFitnessPal uses a less advanced barcode scanning library. Judging from the visual cues in the app, the issue is between 6 and 0, where the white space is wider.

Despite being a bit blurry this second barcode is printed on a flat surface and is understood by both MyFitnessPal and "ZXing Barcode Scanner".

NOTE: I get the same results regardless of whether I scan the actual barcode printed on the packaging, a picture on a mobile device screen or these two images on the laptop screen.

MyFitnessPal also has problems with duplicate barcodes! Barcodes are not unique and many producers use the same code for multiple products. I've seen this in the case of two different varieties of salami from the same manufacturer on the good end and two different products produced across the world (eggs and popcorn) on the extreme end.

Once the user scans a barcode and establishes that the existing information is not correct they can Create a Food and update the calories database. This is then synced back to the MyFitnessPal servers and overrides any existing information. When the same barcode is scanned a second time only the new DB entry is visible.

How to reproduce:

- Scan an existing barcode and enter it to MFP database if not already there;

- Scan the same barcode one more time and pretend the information is not correct;

- Click the Create a Food button and fill-in the fields. For example use a different food name to distinguish between the two database entries. Save!

- From another device with different account (to verify information in DB) scan the same barcode again.

Actual results: The last entered information is shown.

Expected results: User is shown both DB records and can select between them.


Endless Loop Bug in Candy Crush Saga Level 80

Happy new year everyone. During the holidays I've discovered several interesting bugs which will be revealed in this blog. I'm starting today with a bug in the popular game Candy Crush Saga.

In level 80 one teleport is still open but the chocolates are blocking the rest. The game has ended but candies keep flowing through the teleport and the level doesn't exit. My guess is that the game logic is missing a check whether or not it will go into an endless loop.

This bug seems to be generic for the entire game. It pops up also on level 137 in the Owl part of the game (recorded by somebody else):


BlackBerry Z10 is Killing My WiFi Router

A few days ago I resurrected my BlackBerry Z10 only to find out that it kills my WiFi router shortly after connecting to the network. It looks like many people are having the same problem with BlackBerry but most forum threads don't offer a meaningful solution so I did some tests.

Everything works fine when WiFi mode is set to either 11bgn mixed or 11n only and WiFi security is disabled.

When using WPA2/Personal security mode and AES encryption the problem occurs regardless of which WiFi mode is used. There is another type of encryption called TKIP but the device itself warns that this is not supported by the 802.11n specification (all my devices use it anyway).

So to recap: BlackBerry Z10 causes my TP-Link router to die if using WPA2/Personal security with AES Encryption. Switching to open network with MAC address filtering works fine!

I haven't had the time to upgrade the firmware of this router and see if the problem persists. Most likely I'll just go ahead and flash it with OpenWRT.


Speed Comparison of Web Proxies Written in Python Twisted and Go

After I figured out that Celery is rather slow I moved on to test another part of my environment - a web proxy server. The test here compares two proxy implementations - one with Python Twisted, the other in Go. The backend is a simple web server written in Go, which is probably the fastest thing when it comes to serving HTML.

The test content is a snapshot of the front page of this blog taken a few days ago. The system is a standard Lenovo X220 laptop with an Intel Core i7 CPU with 4 cores. The measurement instrument is the popular wrk tool with a custom Lua script to redirect the requests through the proxy.

All tests were repeated several times and only the best results are shown here. I waited between the tests so that all open TCP ports could close. I also observed the number of open ports (i.e. sockets) using netstat.

Baseline

Using wrk against the web server in Go yields around 30000 requests per second with an average of 2000 TCP ports in use:

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:8000/atodorov.html
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   304.43ms  518.27ms   1.47s    82.69%
        Req/Sec     1.72k     2.45k   17.63k    88.70%
      1016810 requests in 29.97s, 34.73GB read
      Non-2xx or 3xx responses: 685544
    Requests/sec:  33928.41
    Transfer/sec:      1.16GB

Python Twisted

The Twisted implementation performs at a little over 1000 req/sec with an average TCP port use between 20000 and 30000:

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:8080
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   335.53ms  117.26ms 707.57ms   64.77%
        Req/Sec   104.14     72.19   335.00     55.94%
      40449 requests in 30.02s, 3.67GB read
      Socket errors: connect 0, read 0, write 0, timeout 8542
      Non-2xx or 3xx responses: 5382
    Requests/sec:   1347.55
    Transfer/sec:    125.12MB

Go proxy

First I ran several 30-second tests and performance was around 8000 req/sec with around 20000 ports used (most of them remain in TIME_WAIT state for a while). Then I modified proxy.go to make use of all available CPUs on the system and let the test run for 5 minutes.

    $ ./wrk -c1000 -t20 -d300s http://127.0.0.1:9090 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
    Running 5m test @ http://127.0.0.1:9090
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   137.22ms  437.95ms   4.45s    97.55%
        Req/Sec   669.54    198.52     1.71k    76.40%
      3423108 requests in 5.00m, 58.27GB read
      Socket errors: connect 0, read 26, write 181, timeout 24268
      Non-2xx or 3xx responses: 2870522
    Requests/sec:  11404.19
    Transfer/sec:    198.78MB

Performance was a little over 11000 req/sec. TCP port usage initially rose to around 30000 but rapidly dropped and stayed around 3000. Both webserver.go and proxy.go were printing the following message on the console:

    2014/11/18 21:53:06 http: Accept error: accept tcp [::]:9090: too many open files; retrying in 1s

Conclusion

There's no doubt that Go is blazingly fast compared to Python and I'm most likely to use it further in my experiments. Still I didn't expect a 3x difference in performance from webserver vs. proxy.

Another thing that worries me is the huge number of open TCP ports which then drops and stays consistent over time and the error messages from both webserver and proxy (maybe per process sockets limit).

At the moment I don't know enough about the internal workings of wrk, Go itself or the goproxy library to conclude whether this is a bad thing or expected. I'm eager to hear what others think in the comments. Thanks!
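The "too many open files" message is what a process gets when it reaches its limit on open file descriptors, and every TCP socket uses one descriptor. That is consistent with my guess about a per-process limit, although I have not verified it for these two programs. A minimal sketch for checking the limit of the current process from Python (Unix only; the function name is my own):

```python
import resource

def open_file_limits():
    """Return the (soft, hard) limit on open file descriptors for this process."""
    return resource.getrlimit(resource.RLIMIT_NOFILE)

soft, hard = open_file_limits()
print("soft limit:", soft, "hard limit:", hard)
```

On many Linux systems the default soft limit is 1024, which a test with 1000 client connections plus the proxy's own connections to the backend could plausibly exhaust. The shell builtin `ulimit -n` reports the same soft limit.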

Update 2015-01-27

I have retested with PyPy but on a different system, so I'm giving all the test results on it as well. /proc/cpuinfo says we have 16 x Intel(R) Xeon(R) CPU E5-2450L 0 @ 1.80GHz CPUs.

Baseline - Go server:

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:8000/atodorov.html
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    15.57ms   20.38ms 238.93ms   98.11%
        Req/Sec     3.55k     1.32k   15.91k    82.49%
      1980738 requests in 30.00s, 174.53GB read
      Socket errors: connect 0, read 0, write 0, timeout 602
      Non-2xx or 3xx responses: 60331
    Requests/sec:  66022.87
    Transfer/sec:      5.82GB

Go proxy (30 sec):

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:9090 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:9090
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency    68.93ms  718.98ms  12.60s    99.58%
        Req/Sec     1.61k   784.01     4.83k    62.50%
      942757 requests in 30.00s, 32.16GB read
      Socket errors: connect 0, read 26, write 0, timeout 3050
      Non-2xx or 3xx responses: 589940
    Requests/sec:  31425.47
    Transfer/sec:      1.07GB

Python proxy with Twisted==14.0.2 and pypy-2.2.1-2.el7.x86_64:

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:8080
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   858.75ms    1.47s    6.00s    88.09%
        Req/Sec   146.39    104.83   341.00     54.18%
      85645 requests in 30.00s, 853.54MB read
      Socket errors: connect 0, read 289, write 0, timeout 3297
      Non-2xx or 3xx responses: 76567
    Requests/sec:   2854.45
    Transfer/sec:     28.45MB

Update 2015-01-27-2

Python proxy with Twisted==14.0.2 and python-2.7.5-16.el7.x86_64:

    $ ./wrk -c1000 -t20 -d30s http://127.0.0.1:8080 -s scripts/proxy.lua -- http://127.0.0.1:8000/atodorov.html
    Running 30s test @ http://127.0.0.1:8080
      20 threads and 1000 connections
      Thread Stats   Avg      Stdev     Max   +/- Stdev
        Latency   739.64ms    1.58s   14.22s    96.18%
        Req/Sec    84.43     36.61   157.00     67.79%
      49173 requests in 30.01s, 701.77MB read
      Socket errors: connect 0, read 240, write 0, timeout 2463
      Non-2xx or 3xx responses: 41683
    Requests/sec:   1638.38
    Transfer/sec:     23.38MB

As seen, the Go proxy is slower than the Go server by a factor of 2. The Python proxy is slower than the Go server by a factor of about 20 with PyPy and about 40 with CPython. These results are similar to the previous ones so I don't think PyPy makes any significant difference.
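For reference, here is how the slowdown factors can be derived from the Requests/sec lines of the wrk runs on this system. It is only a sketch of the arithmetic; the names are my own.

```python
# Requests/sec figures copied from the wrk runs on the Xeon system above.
go_server = 66022.87
results = {
    "Go proxy": 31425.47,
    "Twisted on PyPy": 2854.45,
    "Twisted on CPython": 1638.38,
}

def slowdown(baseline, measured):
    """How many times slower `measured` is compared to `baseline`."""
    return baseline / measured

factors = {name: slowdown(go_server, rps) for name, rps in results.items()}
for name, factor in factors.items():
    print(f"{name}: {factor:.1f}x slower than the Go server")
```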


Proxy Support for wrk HTTP Benchmarking Tool

A few times recently I've seen people using an HTTP benchmarking tool called wrk and decided to give it a try. It is a very cool instrument but didn't fit my use case perfectly. What I needed was to redirect the connection through a web proxy and measure how much the proxy slows things down compared to hitting the web server directly with wrk. In other words: how fast is the proxy server?

How does a proxy work

I've examined the source code of two proxies (one in Python and another one in Go) and what happens is this:

- The proxy server starts listening to a TCP port

- Instead of connecting directly to the web server behind example.com the client (e.g. the browser) connects to the proxy

- The client sends the request using an absolute URL (GET http://example.com/about.html)

- The proxy server connects to example.com directly, reads the response and delivers it back to the client.
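To make the difference from a direct request concrete, here is a minimal sketch of the bytes a client would send to the proxy for a plain GET. The function name is hypothetical and the exact set of headers is an assumption; the important part is the absolute URL in the request line.

```python
from urllib.parse import urlsplit

def build_proxy_request(url):
    """Build the bytes a client sends to an HTTP proxy for a plain GET."""
    host = urlsplit(url).netloc
    lines = [
        f"GET {url} HTTP/1.1",   # absolute URL instead of just the path
        f"Host: {host}",          # some proxies also expect the Host header
        "Connection: close",
        "",                       # blank line terminates the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")

print(build_proxy_request("http://example.com/about.html").decode("ascii"))
```

A direct request to the web server would use only the path (GET /about.html) in the first line.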

Proxy in wrk

Luckily wrk supports the execution of Lua scripts so we can make a simple script like this:

    init = function(args)
        target_url = args[1] -- proxy needs absolute URL
    end

    request = function()
        return wrk.format("GET", target_url)
    end

Then update your command line to something like this: ./wrk [options] http://proxy:port -s proxy.lua -- http://example.com/about.html

This causes wrk to connect to our proxy server but issue GET requests for another URL.

Depending on how your proxy works you may need to add the Host: example.com header as well.

Now let's do some testing.


Speeding Up Celery Backends, Part 3

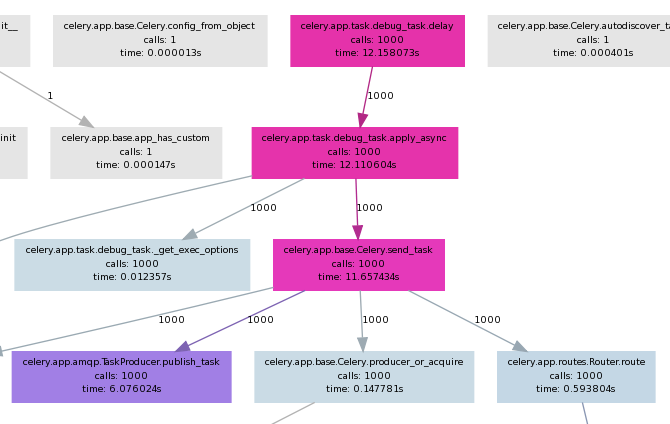

In the second part of this article we've seen how slow Celery actually is. Now let's explore what happens inside and see if we can't speed things up.

I've used pycallgraph to create call graph visualizations of my application. It has the nice feature to also show execution time and use different colors for fast and slow operations.

Full command line is:

pycallgraph -v --stdlib --include ... graphviz -o calls.png -- ./manage.py celery_load_test

where --include is used to limit the graph to particular Python modules.

General findings

- The first four calls are where most of the time is spent, as seen in the picture.

- It seems that most of the slowdown comes from Celery itself, not from the underlying messaging transport Kombu (not shown in the picture).

- celery.app.amqp.TaskProducer.publish_task takes half of the execution time of celery.app.base.Celery.send_task

- celery.app.task.Task.delay directly executes .apply_async and can be skipped if one rewrites the code.

More findings

In celery.app.base.Celery.send_task there is this block of code:

    with self.producer_or_acquire(producer) as P:
        self.backend.on_task_call(P, task_id)
        task_id = P.publish_task(
            name, args, kwargs, countdown=countdown, eta=eta,
            task_id=task_id, expires=expires,
            callbacks=maybe_list(link), errbacks=maybe_list(link_error),
            reply_to=reply_to or self.oid, **options
        )

producer is always None because delay() doesn't pass it as argument.

I've tried passing it explicitly to apply_async() like so:

    from djapp.celery import *

    # app = debug_task._get_app() # if not defined in djapp.celery
    producer = app.amqp.producer_pool.acquire(block=True)
    debug_task.apply_async(producer=producer)

However this doesn't speed anything up. If we replace the first line of the send_task code block above with:

    with producer as P:

it blows up on the second iteration because producer and its channel are already None!

If you are unfamiliar with the with statement in Python please read this article. In short the with statement is a compact way of writing try/finally. The underlying kombu.messaging.Producer class does a self.release() on exit of the with statement.
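Here is a minimal sketch of that equivalence. FakeProducer is a hypothetical stand-in I made up for illustration, not Kombu's real class; it only mimics the "release on exit" behaviour described above.

```python
class FakeProducer:
    """Hypothetical stand-in for a pooled producer; not Kombu's real class."""

    def __init__(self):
        self.channel = "open-channel"

    def release(self):
        # Mimics handing the producer back: the channel is no longer usable.
        self.channel = None

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        self.release()
        return False  # do not swallow exceptions

# Using the with statement ...
first = FakeProducer()
with first as P:
    assert P.channel is not None

# ... is roughly equivalent to this try/finally.
second = FakeProducer()
P = second.__enter__()
try:
    assert P.channel is not None
finally:
    second.__exit__(None, None, None)

print(first.channel, second.channel)  # prints: None None
```

Once the block exits, the object has been released, which would explain why reusing the same producer in a second with block fails.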

I also tried removing the with statement and using producer directly but with limited success. While the producer was not released (it was not None) on subsequent iterations, the memory usage grew much more and there wasn't any performance boost.

Conclusion

The with statement is used throughout both Celery and Kombu and I'm not at all sure if there's a mechanism for keep-alive connections. My time is limited and I'll probably not spend any more time on this problem soon.

Since my use case involves a task producer and consumers on localhost I'll try to work around the current limitations by using Kombu directly (see this gist) with a transport that uses either a UNIX domain socket or a named pipe (FIFO) file.


Speeding up Celery Backends, Part 2

In the first part of this post I looked at a few celery backends and discovered they didn't meet my needs. Why is the Celery stack slow? How slow is it actually?

How slow is Celery in practice

- Queue: 500 000 msg/sec

- Kombu: 14 000 msg/sec

- Celery: 2 000 msg/sec

Detailed test description

There are three main components of the Celery stack:

- Celery itself

- Kombu which handles the transport layer

- Python Queue()'s underlying everything

Using the Queue and Kombu tests run for 1 000 000 messages I got the following results:

- Raw Python Queue: Msgs per sec: 500 000

- Raw Kombu without Celery, where kombu/utils/__init__.py:uuid() is set to return 0:

    - with json serializer: Msgs per sec: 5 988

    - with pickle serializer: Msgs per sec: 12 820

    - with the custom mem_serializer from part 1: Msgs per sec: 14 492

Note: when the test is executed with 100K messages mem_serializer yields 25 000 msg/sec; with more messages the performance saturates. I've observed similar behavior with raw Python Queue()'s. I saw some cache buffers being managed internally to avoid OOM exceptions. This is probably the main reason performance becomes saturated over a longer execution.

- Using celery_load_test.py modified to loop 1 000 000 times I got 1908.0 tasks created per sec.
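For anyone who wants to repeat the raw Queue measurement, a minimal sketch could look like the one below. It is not the exact test I ran: the function name and message count are assumptions, and it uses the Python 3 module name queue (the module was called Queue in Python 2).

```python
import queue
import time

def queue_rate(n_messages=100_000):
    """Put and get n_messages through a queue.Queue, return messages per second."""
    q = queue.Queue()
    start = time.perf_counter()
    for i in range(n_messages):
        q.put(i)
    for _ in range(n_messages):
        q.get()
    elapsed = time.perf_counter() - start
    return n_messages / elapsed

print(f"Msgs per sec: {queue_rate():.0f}")
```

The absolute number depends heavily on the machine and the Python version, so compare it only with other measurements taken on the same system.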

Another interesting thing worth pointing out: in the kombu test there are these lines:

    with producers[connection].acquire(block=True) as producer:
        for j in range(1000000):

If we swap them the performance drops to 3875 msg/sec, which is comparable with the Celery results. Indeed inside Celery there's the same with producer.acquire(block=True) construct which is executed every time a new task is published. Next I will be looking into this to figure out exactly where the slowness comes from.
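The effect of swapping the two lines can be illustrated without Kombu. CountingPool below is a hypothetical pool that only counts acquisitions; it says nothing about how expensive a real acquire is, only how often it happens.

```python
from contextlib import contextmanager

class CountingPool:
    """Hypothetical pool that only counts how often a resource is acquired."""

    def __init__(self):
        self.acquired = 0

    @contextmanager
    def acquire(self):
        self.acquired += 1
        try:
            yield self          # the "producer" handed to the caller
        finally:
            pass                # a real pool would release the resource here

def acquire_once(pool, n):
    with pool.acquire():
        for _ in range(n):
            pass                # publish a message here

def acquire_per_message(pool, n):
    for _ in range(n):
        with pool.acquire():
            pass                # publish a message here

outer, inner = CountingPool(), CountingPool()
acquire_once(outer, 1000)
acquire_per_message(inner, 1000)
print(outer.acquired, inner.acquired)  # prints: 1 1000
```

With the with statement inside the loop, the acquire/release cost is paid once per message instead of once per run.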


Speeding up Celery Backends, Part 1

I'm working on an application which fires a lot of Celery tasks - the more the better! Unfortunately Celery backends seem to be rather slow :(. Using the celery_load_test.py command for Django I was able to capture some metrics:

- Amazon SQS backend: 2 or 3 tasks/sec

- Filesystem backend: 2000 - 2500 tasks/sec

- Memory backend: around 3000 tasks/sec

Not bad but I need in the order of 10000 tasks created per sec! The other noticeable thing is that memory backend isn't much faster compared to the filesystem one! NB: all of these backends actually come from the kombu package.

Why is Celery slow?

Using celery_load_test.py together with cProfile I was able to pin-point some problematic areas:

- kombu/transport/virtual/__init__.py: class Channel.basic_publish() - does self.encode_body() into a base64 encoded string. Fixed with a custom transport backend I called fastmemory which redefines the body_encoding property:

        @cached_property
        def body_encoding(self):
            return None

- Celery uses json or pickle (or other) serializers to serialize the data. While json yields between 2000-3000 tasks/sec, pickle does around 3500 tasks/sec. Replacing them with a custom serializer which just returns the objects (since we read/write from/to memory) yields about 4000 tasks/sec tops:

        from kombu.serialization import register

        def loads(s):
            return s

        def dumps(s):
            return s

        register('mem_serializer', dumps, loads,
                 content_type='application/x-memory',
                 content_encoding='binary')

- kombu/utils/__init__.py: def uuid() - generates random unique identifiers which is a slow operation. Replacing it with return "00000000" boosts performance to 7000 tasks/sec.

It's clear that a constant UUID is not of any practical use but it serves well to illustrate how much this function affects performance.
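The cost of generating random identifiers can be measured in isolation with timeit. This is a sketch using the standard library's uuid.uuid4() as a stand-in; I have not checked that it matches exactly what Kombu's uuid() does, and the helper names are my own.

```python
import timeit
import uuid

def random_uuid():
    """Generate a random UUID string, as a stand-in for Kombu's uuid()."""
    return str(uuid.uuid4())

def constant_uuid():
    """The constant replacement used in the experiment above."""
    return "00000000"

n = 100_000
t_random = timeit.timeit(random_uuid, number=n)
t_constant = timeit.timeit(constant_uuid, number=n)
print(f"uuid4:    {n / t_random:.0f} calls/sec")
print(f"constant: {n / t_constant:.0f} calls/sec")
```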

Note: Subsequent executions of celery_load_test seem to report degraded performance even with the most optimized transport backend. I'm not sure why this is. One possibility is that the random UUID usage in other parts of the Celery/Kombu stack drains entropy on the system and generating more random numbers becomes slower. If you know better please tell me!

I will be looking for a better understanding of these IDs in Celery and hope to be able to produce a faster uuid() function. Then I'll be exploring the transport stack even more in order to reach the goal of 10000 tasks/sec. If you have any suggestions or pointers please share them in the comments.


Performance Profiling in Python with cProfile

This is a quick reference on profiling Python applications with cProfile:

    $ python -m cProfile -s time application.py

The -s time option sorts the output by internal execution time (the tottime column):

             9072842 function calls (8882140 primitive calls) in 9.830 CPU seconds

       Ordered by: internal time

       ncalls  tottime  percall  cumtime  percall filename:lineno(function)
        61868    0.575    0.000    0.861    0.000 abstract.py:28(__init__)
        41250    0.527    0.000    0.660    0.000 uuid.py:101(__init__)
        61863    0.405    0.000    1.054    0.000 abstract.py:40(as_dict)
        41243    0.343    0.000    1.131    0.000 __init__.py:143(uuid4)
       577388    0.338    0.000    0.649    0.000 abstract.py:46(<genexpr>)
        20622    0.289    0.000    8.824    0.000 base.py:331(send_task)
        61907    0.232    0.000    0.477    0.000 datastructures.py:467(__getitem__)
        20622    0.225    0.000    9.298    0.000 task.py:455(apply_async)
        61863    0.218    0.000    2.502    0.000 abstract.py:52(__copy__)
        20621    0.208    0.000    4.766    0.000 amqp.py:208(publish_task)
       462640    0.193    0.000    0.247    0.000 {isinstance}
       515525    0.162    0.000    0.193    0.000 abstract.py:41(f)
        41246    0.153    0.000    0.633    0.000 entity.py:143(__init__)

In the example above (an actual application) the first line is kombu's abstract.py: class Object(object).__init__() and the second one is Python's uuid.py: class UUID().__init__().
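The same report can be produced from inside a program with the cProfile and pstats modules, which is handy when only one part of the application should be profiled. A minimal sketch, where work() is a toy function standing in for the real application:

```python
import cProfile
import io
import pstats

def work():
    """Toy workload standing in for the real application."""
    return sum(i * i for i in range(100_000))

profiler = cProfile.Profile()
profiler.enable()
work()
profiler.disable()

stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream)
stats.sort_stats("tottime").print_stats(5)   # same ordering as -s time, top 5 rows
report = stream.getvalue()
print(report)
```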


SNAKE is no Longer Needed to Run Installation Tests in Beaker

This is a quick status update for one of the pieces of Fedora QA infrastructure and mostly a self-note.

Previously, to control the kickstart configuration used during installation in Beaker, one had to either modify the job XML in Beaker or use SNAKE (bkr workflow-snake) to render a kickstart configuration from a Python template.

SNAKE presented challenges when deploying and using beaker.fedoraproject.org and is virtually unmaintained.

I present the new bkr workflow-installer-test which uses Jinja2 templates to generate a kickstart configuration when provisioning the system. This is already available in beaker-client-0.17.1.

The templates make use of all Jinja2 features (as far as I can tell) so you can create very complex ones. You can even include snippets from one template into another if required. The standard context that is passed to the template is:

- DISTRO - if specified, the distro name

- FAMILY - as returned by Beaker server, e.g. RedHatEnterpriseLinux6

- OS_MAJOR and OS_MINOR - also taken from Beaker server. e.g. OS_MAJOR=6 and OS_MINOR=5 for RHEL 6.5

- VARIANT - if specified

- ARCH - CPU architecture like x86_64

- any parameters passed to the test job with --taskparam. They are processed last and can override previous values.

Installation related tests at fedora-beaker-tests have been updated with ks.cfg.tmpl templates to use with this new workflow.

This workflow also has the ability to return boot arguments for the installer if needed. If any, they should be defined in a {% block kernel_options %}{% endblock %} block inside the template. A simpler variant is to define a comment line that starts with ## kernel_options:
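To illustrate the comment-line variant, here is a rough sketch of how such a line could be picked out of a template. This is not the code used by bkr workflow-installer-test; the function name, the sample template and the sample boot arguments are all made up for illustration.

```python
def kernel_options_from_comment(template_text, marker="## kernel_options:"):
    """Return the text after the first marker line, or '' if there is none."""
    for line in template_text.splitlines():
        stripped = line.strip()
        if stripped.startswith(marker):
            return stripped[len(marker):].strip()
    return ""

# Hypothetical template contents, for illustration only.
sample = """\
## kernel_options: console=ttyS0 inst.text
install
lang en_US.UTF-8
"""
print(kernel_options_from_comment(sample))
```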

There are still a few issues which need to be fixed before beaker.fedoraproject.org can be used by the general public though. I will be writing another post about that so stay tuned.


How Do You Test Thai Scalable Fonts

Recently I wrote about testing fonts. I finally managed to get an answer from the authors of thai-scalable-fonts.

What is your approach for testing Fonts-TLWG?

It's not automated test. What it does is generate PDF with sample texts at several sizes (the waterfall), pangrams, and glyph table. It needs human eyes to investigate.

What kind of problems is your test suite designed for?

- Shaping

- Glyph coverage

- Metrics

We also make use of fontforge features to make spotting errors easier, such as

- Show extremas

- Show almost vertical/horizontal lines/curves

Theppitak Karoonboonyanan, Fonts-TLWG


How do You Test Fonts

Previously I mentioned testing fonts but didn't have any idea how this is done. Authors Khaled Hosny of Amiri Font and Steve White of GNU FreeFont provided valuable insight and material for further reading. I've asked them:

- What is your approach for testing?

- What kind of problems is your test suite designed for?

Here's what they say:

Currently my test suite consists of text strings (or lists of code points) and expected output glyph sequences and then use HarfBuzz (through its hb-shape command line tool) to check that the fonts always output the expected sequence of glyphs, sometimes with the expected positioning as well. Amiri is a complex font that have many glyph substitution and positioning rules, so the test suite is designed to make sure those rules are always executed correctly to catch regressions in the font (or in HarfBuzz, which sometimes happens since the things I do in my fonts are not always that common).

I think Lohit project do similar testing for their fonts, and HarfBuzz itself has a similar test suite with a bunch of nice scripts (though they are not installed when building HarfBuzz, yet[1]).

Recently I added more kinds of tests, namely checking that OTS[2] sanitizes the fonts successfully as this is important for using them on the web, and a test for a common mistakes I made in my feature files that result in unexpected blank glyphs in the fonts.

Khaled Hosny, Amiri Font

The answer is complicated. I'll do what I can to answer.

First, the FontForge application has a "verification" function which can be run from a script, and which identifies numerous technical problems.

FontForge also has a "Find Problems" function that I run by hand.

The monospaced face has special restrictions, first that all glyphs of non-zero width must be of the same width, and second, that all glyphs lie within the vertical bounds of the font.

Beside this, I have several other scripts that check for a few things that FontForge doesn't (duplicate names, that glyph slots agree with Unicode code within Unicode character blocks).

Several tests scripts have yet to be uploaded to the version control system -- because I'm unsure of them.

There is a more complicated check of TrueType tables, which attempts to find cases of tables that have been "shadowed" by the script/language specification of another table. This is helpful, but works imperfectly.

ALL THAT SAID,

In the end, every script used in the font has to be visually checked. This process takes me weeks, and there's nothing systematic about it, except that I look at printout of documents in each language to see if things have gone awry.

For a few documents in a few languages, I have images of how text should look, and can compare that visually (especially important for complex scripts.)

A few years back, somebody wrote a clever script that generated images of text and compared them pixel-by-pixel. This was a great idea, and I wish I could use it more effectively, but the problem was that it was much too sensitive. A small change to the font (e.g. PostScript parameters) would cause a small but global change in the rendering. Also the rendering could vary from one version of the rendering software to another. So I don't use this anymore.

That's all I can think of right now.

In fact, testing has been a big problem in getting releases out. In the past, each release has taken at least two weeks to test, and then another week to fix and bundle...if I was lucky. And for the past couple of years, I just haven't been able to justify the time expenditure. (Besides this, there are still a few serious problems with the fonts--once again, a matter of time.)

Have a look at the bugs pages, to get an idea of work being done.

http://savannah.gnu.org/bugs/?group=freefont

Steve White, GNU FreeFont

I'm not sure if ImageMagick or PIL can help solve the render-and-compare problem Steve is talking about. They can definitely be used for image comparison so maybe, coupled with some rendering library, it's worth a quick try.
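One idea worth trying is to compare with a tolerance instead of pixel-by-pixel equality. The sketch below works on plain lists of grayscale values so it needs no imaging library; the function name and the threshold are assumptions, and I have not tested whether this is tolerant enough for the rendering differences Steve describes.

```python
def differing_fraction(img_a, img_b, pixel_tolerance=16):
    """Fraction of pixels whose grayscale values differ by more than pixel_tolerance.

    img_a and img_b are equally sized lists of rows of 0-255 integers.
    """
    total = 0
    differing = 0
    for row_a, row_b in zip(img_a, img_b):
        for a, b in zip(row_a, row_b):
            total += 1
            if abs(a - b) > pixel_tolerance:
                differing += 1
    return differing / total if total else 0.0

reference = [[0, 0, 255], [0, 255, 255]]
rendered = [[5, 0, 250], [0, 255, 0]]   # small shifts plus one real change
print(differing_fraction(reference, rendered))
```

A test would then fail only when the fraction exceeds some threshold chosen per font, rather than on any single changed pixel.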

If you happen to know more about fonts, please join me in improving overall test coverage in Fedora by designing test suites for fonts packages.


Last Week in Fedora QA

Here are some highlights from the past week discussions in Fedora which I found interesting or participated in.

Call to Action: Improving Overall Test Coverage in Fedora

I cannot stress enough how important it is to further improve test coverage in Fedora! You can help too. Here's how:

- Join upstream and create a test suite for a package you find interesting;

- Provide patches - first patch came in less than 30 minutes of initial announcement :);

- Review packages in the wiki and help identify false negatives;

- Forward to people who may be interested to work on these items;

- Share and promote in your local open source and developer communities;

Auto BuildRequires

Auto-BuildRequires is a simple set of scripts which complements rpmbuild by automatically suggesting BuildRequires lines for the just built package.

It would be interesting to have this integrated into Koji and/or a continuous integration environment and compare the output between every two consecutive builds (i.e. older and newer package versions). It sounds like a good way to identify newly added or removed dependencies and update the package specs accordingly.
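The comparison itself would be a simple set difference. A minimal sketch, assuming the suggested BuildRequires of each build are already available as lists of names (the function name and the package names below are made up for illustration):

```python
def diff_build_requires(older, newer):
    """Return (added, removed) dependency names between two builds."""
    older, newer = set(older), set(newer)
    return sorted(newer - older), sorted(older - newer)

# Hypothetical package names, for illustration only.
old_build = ["gcc", "make", "zlib-devel"]
new_build = ["gcc", "make", "openssl-devel"]
added, removed = diff_build_requires(old_build, new_build)
print("added:", added)
print("removed:", removed)
```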

How To Test Fonts Packages

This is exactly what Christopher Meng asked and frankly I have no idea.

I've come across a few fonts packages (amiri-fonts, gnu-free-fonts and thai-scalable-fonts) which seem to have some sort of test suites but I don't know how they work or what type of problems they test for. On top of that all three have a different way of doing things (e.g. not using a standardized test framework or a variation of such).

I'll keep you posted on this once I manage to get more info from upstream developers.

Is URL Field in RPM Useless

So is it? Opinions here differ from totally useless to "don't remove it, I need it". However I ran a small test and of the 2574 RPMs on the source DVD around 40% return something other than HTTP 200 OK. This means 40% potentially broken URLs!

The majority are responses in the 3XX range and only less than 10% are actual errors (4XX, 5XX, missing URLs or connection errors).

It will be interesting to see if this can be removed from rpm altogether.

I don't think it will happen soon but if we don't use it why have it there?

My script for the test is here.
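For illustration only, this is roughly how such results can be grouped into the buckets mentioned above. It is a sketch and not the script linked above; the bucket names and the sample data are made up, and collecting the status codes (for example with an HTTP request that doesn't follow redirects) is left out.

```python
from collections import Counter


def bucket(status):
    """Map an HTTP status code (None means missing URL or connection error) to a bucket."""
    if status is None:
        return "no response"
    if status == 200:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 600:
        return "error"
    return "other"


def summarize(results):
    """results maps package name -> status code or None; returns the percentage per bucket."""
    if not results:
        return {}
    counts = Counter(bucket(status) for status in results.values())
    total = len(results)
    return {name: round(100.0 * count / total, 1) for name, count in counts.items()}


# Hypothetical sample, not real measurements.
sample = {"pkg-a": 200, "pkg-b": 301, "pkg-c": 404, "pkg-d": None, "pkg-e": 200}
print(summarize(sample))
```

Keeping redirects separate from real errors matters here, because a 3XX response usually means the URL moved rather than that it is broken.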


Call to Action: Improving Overall Test Coverage in Fedora

Around Christmas 2013 I said

... it looks like on average 30% of the packages execute their test suites at build time in the %check section and less than 35% have test suites at all! There’s definitely room for improvement and I plan to focus on this during 2014!

I've recently started working on this goal by first identifying potential offending packages and discussing the idea on Fedora's devel, packaging and test mailing lists.

May I present you nearly 2000 packages which need your love:

The intent for these pages is to serve as a source of working material for Fedora volunteers.

How Can I Help

- Join upstream and create a test suite for a package you find interesting;

- Provide patches - the first patch came in less than 30 minutes after the initial announcement :);

- Review packages in the wiki and help identify false negatives;

- Forward this to people who may be interested in working on these items;

- Share and promote in your local open source and developer communities.

Important

If you would like to gain some open source practice and QA experience I will happily provide mentorship and general help so you can start working on Fedora. Just ping me!


7 Years and 1400 Bugs Later as Red Hat QA

Today I celebrate my 7th year working at Red Hat's Quality Engineering department. Here's my story!

On a cold winter Friday in 2007 I left my job as a software developer in Sofia, packed my stuff together, purchased my first laptop and on Sunday jumped on the train to Brno to join the Release Test Team at Red Hat. Little did I know what it was all about. When I was offered the position I was on a very noisy bus and had to pick between two positions. I didn't quite understand what the options were and just picked the second one. Luckily everything turned out great and continues to this day.

I'm sharing my experience and highlighting some bugs which I've found. Hopefully you will find this interesting and amusing. If you are a QA engineer I urge you to take a look at my public bug portfolio, dive into details, read the comments and learn as much as you can.

What do I do exactly

Of all the QE teams in Red Hat, the Release Test Team is the first and the last one to test a release. The team has both a technical function and a more managerial one. Our focus is on the core Red Hat Enterprise Linux product. Unfortunately I can't go into much detail because this is not a public facing unit. I will limit myself to public and/or non-sensitive information.

We are the first to test a new nightly build or a snapshot of the upcoming RHEL release. If the tree is installable other teams take over and do their magic. At the end when bits are published live we're the last to verify that content is published where it is expected to be. In short this is covering the work of the release engineering team which is to build a product and publish the contents for consumption.

The same principles apply to Fedora although the engagement here is less demanding.

Personally I have been and continue to be responsible for the Red Hat Enterprise Linux 5 family of releases. It's up to me to give the go ahead for further testing or request a re-spin. This position also has the power to block and delay the GA release, until things are sorted out, if I'm not happy with testing or there is a considerable risk of failure.

Like in other QA teams I create test plan documents, write test case scenarios, implement test automation scripts (and sometimes tools), regularly execute said test plans and test cases, find and report any new bugs and verify old ones are fixed. Most importantly make sure RHEL installs and is usable for further testing :).

Sometimes I have to deal with capacity planning and as RHEL 5 installation test lead I have to organize and manage the entire installation testing campaign for that product.

My favorite testing technique is exploratory testing.

Stats and Numbers

It is hard (if not impossible) to measure QA work with numbers alone but here are some interesting facts about my experience so far.

- Nearly 1400 bugs filed (1390 at the time of writing);

- Reported bugs across 32 different products. Top 3 being RHEL 6, RHEL 5 and Fedora (1000+ bugs);

- Top 3 components for reporting bugs against: anaconda, releng, kernel;

- Nearly 100 bugs filed in my first year, 2007;

- The 3 most productive years being 2010, 2009, 2011 (800+ bugs);

- Filed 200 bugs/year which is about 1 bug/day considering holidays;

- 35th top bug reporter (excluding robot accounts). I was in top 10 a few years back;

Many of the bugs I report are private so if you'd like to know more stats just ask me and I'll see what I can do.

2007

My very first bug is RHBZ #231860 (private) which is about the graphical update tool Pup which used to show the wrong number of available updates.

Then I played with adding Dogtail support to Anaconda. While initially this was rejected (Fedora 6/7), it was implemented a few years later (Fedora 9) and then removed once again during the big Anaconda rewrite.

I've spent my time working extensively on RHEL 5 battling with multi-lib issues, SELinux denials and generally making the 5 family less rough. Because I was still on-boarding I generally worked on everything I could get my hands on and also did some work on RHEL3-U9 (latest release before EOL) and some RHEL4-U6 testing.

With ia64 on RHEL3 I found a corner case kernel bug which flooded the serial console with messages and caused a multi-CPU system to freeze.

In 2008 Time went backwards

My first bug in 2008 is RHBZ #428280. glibc introduced SHA-256/512 hashes for hashing passwords with crypt but that wasn't documented.

UPDATE 2014-02-21 While testing 5.1 to 5.2 updates I found RHBZ #435475 - a severe performance degradation in the package installation process. Upgrades took almost twice as much time to complete, rising from 4 hours to 7 hours depending on hardware and package set. This was a tough one to test and verify. END UPDATE

While dogfooding the 5.2 beta in March I hit RHBZ #437252 - kernel: Timer ISR/0: Time went backwards. To this date this is one of my favorite bugs with a great error message!

Removal of a hack in RPM led to file conflicts under /usr/share/doc in several packages: RHBZ #448905, RHBZ #448906, RHBZ #448907, RHBZ #448909, RHBZ #448910, RHBZ #448911 which is also the first time I happen to file several bugs in a row.

ia64 couldn't boot with encrypted partitions - RHBZ #464769, RHEL 5 introduced support for ext4 - RHBZ #465248 and I've hit a fontconfig issue during upgrades - RHBZ #469190 which continued to resurface occasionally during the next 5 years.

This is the year when I took over responsibility for the general installation testing of RHEL 5 from James Laska and will continue to do so until it reaches end-of-life!

I've also worked on RHEL 4, Fedora and even the OLPC project. On the testing side of things I've participated in testing Fedora networking on the XO hardware and worked on translation and general issues.

2009 - here comes RHEL 6

This year starts the period of my 3 most productive years.

The second bug reported this year is RHBZ #481338 which also mentions one of my hobbies - wrist watches. While browsing a particular website Xorg CPU usage rose to 100%. I've seen a number of these through the years and I'm still not sure if it's Xorg or Firefox or both to blame. And I still see my CPU usage go to 100% just like that and drain my battery. I'm open to suggestions on how to test and debug what's going on as it doesn't happen in a reproducible fashion.

I happened to work on RHEL 4, RHEL 5, Fedora and the upcoming RHEL 6 releases and managed to file bugs in a row not once but twice. I wish I was paid per bug reported back then :).

The first series was about debuginfo packages: both empty ones which shouldn't have existed at all (e.g. redhat-release) and missing debuginfo information for binary packages (e.g. nmap).

The second series is around 100 bugs which had to do with the texinfo documentation of packages when installed with --excludedocs. The first one is RHBZ #515909 and the last one RHBZ #516014. While this works great for bumping up your bug count it made lots of developers unhappy and not all bugs were fixed. Still the use case is valid and these were proper software errors. It is also the first time I've used a script to file the bugs automatically and not by hand.

Near the end of the year I started testing installation on new hardware by the likes of Intel and AMD before it hit the market. I had the pleasure to work with the latest chipsets and CPUs, sometimes even pre-release versions, and make sure Red Hat Enterprise Linux installed and worked properly on them. I stopped doing this last year to free up time for other tasks.

2010 - one bug a day keeps developers at bay :)

My most productive year with 1+ bugs per day.

2010 starts with a bug about file conflicts (private one) and continues with the same narrative throughout the year. As a matter of fact I did a small experiment and found around 50000 (you read that right, fifty thousand) potentially conflicting files, mostly between multi-lib packages, which were being ignored by RPM due to its multi-lib policies. However these were primarily man pages or documentation and most of them didn't get fixed. The proper fix would have been to introduce a -docs sub-package and split these files from the actual binaries. Fortunately the world migrated to 64bit only and this isn't an issue anymore.

By that time RHEL 6 development was running at its peak capacity and there were Beta versions available. Almost the entire year I've been working on internal RHEL 6 snapshots and discovering the many new bugs introduced with tons of new features in the installer. Some of the new features included better IPv6 support, dracut and KVM.

An interesting set of bugs from September are the rpmlint errors and warnings ones, for example RHBZ #634931. I just ran the most basic test tool against some packages. It generated lots of false positives but also revealed bugs which were fixed.

Although there were many bugs filed this year I don't see any particularly interesting ones. It's been more like lots of work to improve the overall quality than exploring edge cases and finding interesting failures. If you find a bug from this period that you think is interesting I will comment on it.

2011 - Your system may be seriously compromised

This is the last year of my 3 year top cycle.

It starts with RHBZ #666687 - a patch for my crappy printer-scanner-coffee maker which I've been carrying around since 2009 when I bought it.

I was still working primarily on RHEL 6 but helped test the latest RHEL 4 release before it went end-of-life. The interesting thing about it was that unlike other releases, RHEL4-U9 was not available on installation media but only as an update from RHEL4-U8. This was a great experience which you happen to see every 4 to 5 years or so.

Btw I've also led the installation testing effort and RTT team through the last few RHEL 4 releases but given the product was approaching EOL there weren't many changes and things went smoothly.

A minor side activity was me playing around with USB Multi-seat and finding a few bugs here and there along the way.

Another interesting activity in 2011 was proof-reading the entire product documentation before its release which I can now relate to the Testing Documentation talk at FOSDEM 2014.

In 2011 I started using the cloud and most notably Red Hat's OpenShift PaaS service. First internally as an early adopter and later externally after the product was announced to the public. There are a few interesting bugs here but they are private and I'm not at liberty to share although they've all been fixed since then.

An interesting bug with NUMA, Xen and ia64 (RHBZ #696599 - private) had me and devel banging our heads against the wall until we figured out that on this particular system the NUMA configuration was not suitable for running Xen virtualization.

Can you spot the problem here?

try:
    import kickstartGui
except:
    print (_("Could not open display because no X server is running."))
    print (_("Try running 'system-config-kickstart --help' for a list of options."))
    sys.exit(0)

Be honest and use the comments form to tell me what you've found. If you struggled then see RHBZ #703085 and come back again to comment. I'd love to hear from you.

What do you do when you see an error message saying "Your system may be seriously compromised! /usr/sbin/NetworkManager tried to load a kernel module."? This is the scariest error message I've ever seen. Luckily it's just SELinux overreacting, see RHBZ #704090.

2012 is in the red zone

While the number of reported bugs dropped significantly compared to previous years this is the year when I've reported almost exclusively high priority and urgent bugs, the first one being RHBZ #771901.

RHBZ #799384 (against Fedora) is one of the rare cases when I was able to contribute (although just by raising awareness) to localization and improved support for Bulgarian and Cyrillic. The other case was last year. Btw I find it strange that although Cyrillic was invented by Bulgarians we didn't (or still don't) have a native font co-maintainer. Somebody please step up!

The red zone bugs continue to span till the end of the year across RHEL 5, 6 and early cuts of RHEL 7 with a pinch of OpenShift and some internal and external test tools.

In 2013 Bugzilla hit 1 million bugs

The year starts with a very annoying and still not fixed bug against ABRT. It's very frustrating when the tool which is supposed to help you file bugs doesn't work properly, see RHBZ #903591. It's a known fact that ABRT has problems and for this scenario I may have a tip for you.

RHBZ #923416 - another one of these 100% CPU bugs. As I said they happen from time to time and mostly go by unfixed or partially fixed because of their nature. Btw as I'm writing this post and have a few tabs open in Firefox it keeps using between 15% and 20% CPU and the CPU temperature is over 90 degrees C. And all I'm doing is writing text in the console. Help!

RHBZ #967229 - a minor one but reveals an important thing - your output (and input for that matter) methods may be producing different results. Worth testing if your software supports more than one.

This year I did some odd jobs working on several of Red Hat's layered products mainly Developer Toolset. It wasn't a tough job and was a refreshing break away from the mundane installation testing.

While I stopped working actively on the various RHEL families which are under development or still supported I happened to be one of the top 10 bug reporters for high/urgent priority bugs for RHEL 7. In appreciation Red Hat sent me lots of corporate gifts and the Platform QE hoodie pictured at the top of the page. Many thanks!

In the summer Red Hat's Bugzilla hit One Million bugs. The closest I came to this milestone is RHBZ #999941.

I finally managed to transfer most of my responsibilities to co-workers and joined the Fedora QA team as a part-time contributor. I had some highs and lows with Fedora test days in Sofia as well. Good thing is I scored another 15 bugs across the virtualization stack and GNOME 3.10.

The year wraps up with another series of identical bugs, RHBZ #1024729 and RHBZ #1025289 for example. As it turned out lots of packages don't have any test suites at all and those which do don't always execute them automatically in %check. I've promised myself to improve this but still haven't had time to work on it. Hopefully by March I will have something in the works.

2014 - Fedora QA improvement

For the last two months I've been working on some internal projects and looking a little bit into improving processes, test coverage and QA infrastructure - RHBZ #1064895. And Rawhide (upcoming Fedora 21) isn't behaving - RHBZ #1063245.

My goal for this year is to do more work on improving the overall test coverage of Fedora and together with the Fedora QA team bring an open testing infrastructure to the community.

Let's see how well that plays out!

What do I do now

During the last year I have gradually changed my responsibilities to work more on Fedora. As a volunteer in Fedora QA I regularly test installation of Rawhide trees and try to work closely with the community. I still have to manage RHEL 5 test cycles where I don't expect anything disruptive at this stage in the product life-cycle!

I'm open to any ideas and help which can improve test coverage and quality of software in Fedora. If you're just joining the open source world this is an excellent opportunity to do some good, get noticed and even maybe get a job. I will definitely help you get through the process if you're willing to commit your time to this.

I hope this long post has been useful and fun to read. Please use the comments form to tell me if I'm missing something or you'd like to know more.

Looking forward to the next 7 years!


FOSDEM 2014 Report - Day #2 Testing and Automation

FOSDEM was hosting the Testing and automation devroom for the second year and this was the very reason I attended the conference. I managed to get in early and stayed until 15:00 when I had to leave to catch my flight (which was late :().

There were 3 talks given by Red Hat employees in the testing devroom which was a nice opportunity to meet some of the folks I've been working on IRC with. Unfortunately I didn't meet anyone from Fedora QA. Not sure if they were attending or not.

All the talks were interesting so see the official schedule and video for more details. I will highlight only the items I saw as particularly interesting or have not heard of before.

ANSTE

ANSTE - Advanced Network Service Testing Environment is a test infrastructure controller, something like our own Beaker but designed to create complex networking environments. I think it lacks many of the provisioning features built in Beaker and integration with various hypervisors and bare-metal provisioning. What it seems to do better (as far as I can tell from the talk) is to deploy virtual systems and create more complex network configuration between them. Not something I will need in the near future but definitely worth a look at.

cwrap

cwrap is...

a set of tools to create a fully isolated network environment to test client/server components on a single host. It provides synthetic account information, hostname resolution and support for privilege separation. The heart of cwrap consists of three libraries you can preload to any executable.

That one was the coolest technology I've seen so far although I may not need to use it at all, hmmm maybe testing DHCP fits the case.

It evolved from the Samba project and takes advantage of the order in which libraries are searched when resolving functions. When you preload the project libraries to any executable they will override standard libc functions for working with sockets, user accounts and privilege escalation.

The socket_wrapper library redirects networking sockets through local UNIX sockets and gives you the ability to test applications which need privileged ports with a local developer account.

The nss_wrapper library provides artificial information for user and group accounts, network name resolution using a hosts file and loading and testing of NSS modules.

The uid_wrapper library allows uid switching as a normal user (e.g. fake root) and supports user/group changing in the local thread using the syscalls (like glibc).

All of these wrapper libraries are controlled via environment variables and definitely make testing of daemons and networking applications easier.
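To give an idea of what that looks like, here is a rough sketch which only prepares the environment for a process under test. The helper name and directory layout are hypothetical, and the library and variable names are the ones I recall from the cwrap man pages, so verify them against the version you have installed.

```python
import os


def cwrap_env(workdir, base_env=None):
    """Build an environment which preloads the three cwrap libraries.

    workdir is a scratch directory (hypothetical layout) holding the socket
    directory and the fake passwd, group and hosts files.
    """
    env = dict(os.environ if base_env is None else base_env)
    env.update({
        # Preloaded libraries override the matching libc functions.
        "LD_PRELOAD": "libsocket_wrapper.so:libnss_wrapper.so:libuid_wrapper.so",
        # socket_wrapper: directory for the UNIX sockets, must already exist.
        "SOCKET_WRAPPER_DIR": os.path.join(workdir, "sockets"),
        # nss_wrapper: artificial account and host information.
        "NSS_WRAPPER_PASSWD": os.path.join(workdir, "passwd"),
        "NSS_WRAPPER_GROUP": os.path.join(workdir, "group"),
        "NSS_WRAPPER_HOSTS": os.path.join(workdir, "hosts"),
        # uid_wrapper: enable it and pretend to start as root.
        "UID_WRAPPER": "1",
        "UID_WRAPPER_ROOT": "1",
    })
    return env


env = cwrap_env("/tmp/cwrap-demo", base_env={})
for name in sorted(env):
    print(name, "=", env[name])
```

The resulting dictionary can be passed as the env argument of subprocess.Popen when starting the daemon you want to test.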

Testing Documentation

That one was just scratching the surface of an entire branch of testing which I've not even considered before. The talk also explains why it is hard to test documentation and what possible solutions there are.

If you write user guides and technical articles which need to stay current with the software this is definitely the place to start.

Automation in the Foreman Infrastructure

The last talk I've listened to. Definitely the best one from a general testing approach point of view. Greg talked about starting with Foreman unit tests, then testing the merged PR, then integration tests, then moving on to test the package build and then the resulting packages themselves.

These guys try to even test their own infrastructure (infra as code) and the test suites they use to test everything else. It's all about automation and the level of confidence you have in the entire process.

I like the fact that no single testing approach can make you confident enough before shipping the code and that they've taken into account changes which get introduced at various places (e.g. 3rd party package upgrades, distro specific issues, infrastructure changes and such).

If I had to attend only one session it would have been this one. There are many things for me to take back home and apply to my work on Fedora and RHEL.

If you find any of these topics remotely interesting I advise you to wait until FOSDEM video team uploads the recordings and watch the entire session stream. I'm definitely missing a lot of stuff which can't be easily reproduced in text form.

You can also find my report of the first FOSDEM'14 day on Saturday here.


Upstream Test Suite Status of Fedora 20

Last week I expressed my thoughts about the state of upstream test suites in Fedora along with some other ideas. Following the response on this thread I'm starting to analyze all SRPM packages in Fedora 20 in order to establish a baseline. Here are my initial findings.

What's Inside

I've found two source distributions for Fedora 20:

- The Fedora-20-source-DVD.iso file which to my knowledge contains the sources of all packages that comprise the installation media;

- The Everything/source/SRPMS/ directory which appears to contain the sources of everything else available in the Fedora 20 repositories.

There are 2574 SRPM packages in Fedora-20 source DVD and 14364 SRPMs in the Everything/ directory. 9,2G vs. 41G.

Test Suite Execution In %check

Fedora Packaging Guidelines state

If the source code of the package provides a test suite, it should be executed in the %check section, whenever it is practical to do so.

In my research I found 738 SRPMs on the DVD which have a %check section and 4838 such packages under Everything/. This is 28,7% and 33,7% respectively.
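Detecting the section itself is simple. The snippet below is only a sketch and not the script used for the numbers above; it assumes the spec file has already been extracted from the SRPM and read into a string, and the function name is made up.

```python
import re

# A section header starts at the beginning of a line; \b avoids matching
# longer macro names which merely start with "%check".
CHECK_RE = re.compile(r"^%check\b", re.MULTILINE)


def has_check_section(spec_text):
    """Return True if the spec file text contains a %check section."""
    return CHECK_RE.search(spec_text) is not None


# Made-up spec fragments.
with_check = "%build\nmake\n\n%check\nmake test\n"
without_check = "%build\nmake  # there is no %check here\n"

print(has_check_section(with_check))
print(has_check_section(without_check))
```

Note that this only tells you the section exists, not that it actually runs a test suite.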

Test Suite Existence

A quick grep for either test/ or tests/ directories in the package sources revealed 870 SRPM packages in the source DVD which are very likely to have a test suite. This is 33,8%. I wasn't able to inspect the Everything/ directory with this script because it takes too long to execute and my system ran out of memory and crashed. I will update this post later with that info.

UPDATE 2014-01-02: In the Everything/ directory only 4481 (31,2%) SRPM packages appear to have test suites.
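The same heuristic can be expressed without grep. This is a hedged sketch rather than the script from the repository below; it assumes you already have the list of paths inside a source archive (for example from tarfile's getnames()) and the function name is hypothetical.

```python
TEST_DIR_NAMES = {"test", "tests"}


def has_test_dir(paths):
    """Return True if any path goes through a directory named test or tests."""
    for path in paths:
        parts = [part for part in path.split("/") if part]
        # The last component of a file path is the file name, not a directory.
        dirs = parts if path.endswith("/") else parts[:-1]
        if TEST_DIR_NAMES.intersection(dirs):
            return True
    return False


# Made-up archive listings.
print(has_test_dir(["foo-1.0/README", "foo-1.0/tests/test_basic.py"]))
print(has_test_dir(["bar-2.1/README", "bar-2.1/src/main.c"]))
```

Like the grep, this only says a test suite is very likely there; a directory named tests can still be empty or unrelated.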

The scripts and raw output are available at https://github.com/atodorov/fedora-scripts.

So it looks like on average 30% of the packages execute their test suites at build time in the %check section and less than 35% have test suites at all! There's definitely room for improvement and I plan to focus on this during 2014!


Can I Use Android Phone as Smart Card Reader

Today I had trouble with my Omnikey CardMan 6121 smart card reader. For some reason it would not detect the card inside and was unusable. /var/log/messages was filled with Card Not Powered messages:

Dec 18 11:17:55 localhost pcscd: eventhandler.c:292:EHStatusHandlerThread() Error powering up card: -2146435050 0x80100016

Dec 18 11:18:01 localhost pcscd: winscard.c:368:SCardConnect() Card Not Powered

Dec 18 11:18:02 localhost pcscd: winscard.c:368:SCardConnect() Card Not Powered
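A side note on the first log line: the two numbers at the end are the same error code, once printed as a signed 32-bit integer and once in hex. The conversion can be checked with a few lines of Python (the helper name is made up). To my knowledge pcsc-lite defines this value as SCARD_E_NOT_TRANSACTED, but check pcsclite.h to be sure.

```python
def to_unsigned32(value):
    """Reinterpret a signed 32-bit integer as an unsigned one."""
    return value & 0xFFFFFFFF


code = to_unsigned32(-2146435050)
print(hex(code))
```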

I've found the solution in RHBZ #531998.

I've found the problem, and it's purely mechanical. Omnikey has simply screwed up when they designed this reader. When the reader is inserted into the ExpressCard slot, it gets slightly compressed. This is enough to trigger the mechanical switch that detects insertions. If I jam something in there and force it apart, then pcscd starts reporting that the slot is empty.

Pierre Ossman, https://bugzilla.redhat.com/show_bug.cgi?id=531998#c12

So I tried moving the smart card a millimeter back and forth inside the reader and that fixed it for me.

This smart card is standard SIM size and I wonder if it is possible to use dual SIM smart phones and tablets as a reader? I will be happy to work on the software side if there is an open source project already (e.g. OpenSC + drivers for Android). If not, why not?

If you happen to have information on the subject please share it in the comments. Thanks!

