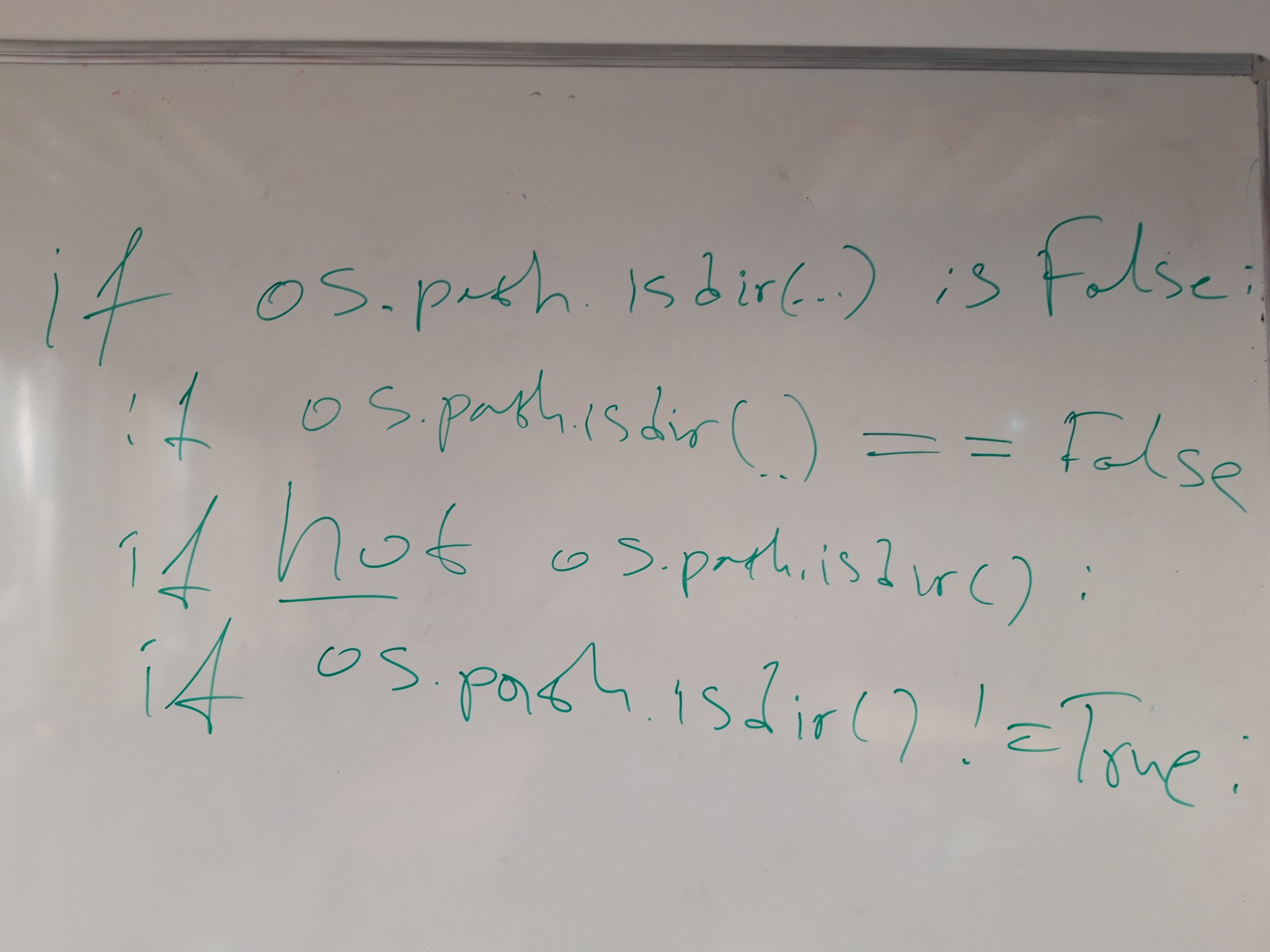

Comparing equivalent Python statements

While teaching one of my Python classes yesterday I noticed a conditional expression which can be written in several ways. All of these are equivalent in their behavior:

if os.path.isdir(path) is False:
    pass

if os.path.isdir(path) is not True:
    pass

if os.path.isdir(path) == False:
    pass

if os.path.isdir(path) != True:
    pass

if not os.path.isdir(path):
    pass

My preferred style of writing is the last one (not os.path.isdir()) because it looks the most pythonic of all. However, the 5 expressions are slightly different behind the scenes, so they should also differ in speed of execution:

- is - identity operator, e.g. both arguments are the same object as determined by the id() function. In CPython that means both arguments point to the same address in memory
- is not - yields the inverse truth value of is, e.g. both arguments are not the same object (address) in memory
- == - equality operator, e.g. both arguments have the same value
- != - non-equality operator, e.g. both arguments have different values
- not - boolean operator
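The five conditions agree here only because os.path.isdir() always returns a real bool. As a minimal sketch (the helper name variants is mine, not from any library), this is how the same operators behave once other falsy or truthy values are involved:

```python
def variants(value):
    """Evaluate the five conditions from above for an arbitrary value."""
    return {
        "is False": value is False,
        "is not True": value is not True,
        "== False": value == False,   # deliberate comparison with a bool
        "!= True": value != True,     # deliberate comparison with a bool
        "not": not value,
    }


# Only for real booleans do all five conditions agree with each other.
for candidate in (False, True, 0, 1, None, "", []):
    print(repr(candidate), variants(candidate))
```

For 0 the identity check fails while the equality check succeeds, and for None only not and the negated forms are true, so the choice of operator matters as soon as the value is not guaranteed to be a bool.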

In my initial tweet I mentioned that I thought is False should be the fastest. Kiwi TCMS team member Zahari countered that not would be the fastest but didn't provide any reasoning!

My initial reasoning was as follows:

- is is essentially comparing addresses in memory so it should be as fast as it gets
- == and != should be roughly the same but they do need to "read" values from memory which would take additional time before the actual comparison of these values
- not is a boolean operator but honestly I have no idea how it is implemented so I don't have any opinion as to its performance

Using the following performance test script we get the average of 100 repetitions from executing the conditional statement 1 million times:

#!/usr/bin/env python

import statistics
import timeit

t = timeit.Timer(
"""
if False:
#if not result:
#if result is False:
#if result is not True:
#if result != True:
#if result == False:
    pass
"""
,
"""
import os
result = os.path.isdir('/tmp')
"""
)

execution_times = t.repeat(repeat=100, number=1000000)
average_time = statistics.mean(execution_times)

print(average_time)

Note: in none of these variants the body of the if statement is executed so the results must be pretty close to how long it takes to calculate the conditional expression itself!

Results (ordered by speed of execution):

- False _______ 0.009309015863109380 - baseline
- not result __ 0.011714859132189304 - +25.84%
- is False ____ 0.018575656899483876 - +99.54%
- is not True _ 0.018815848254598680 - +102.1%
- != True _____ 0.024881873669801280 - +167.2%
- == False ____ 0.026119318689452484 - +180.5%
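Instead of commenting lines in and out, the same measurement can be run over all variants in one go. This is only a sketch: the measure() helper and the smaller default repeat/number values are my assumptions to keep the run short, and the absolute numbers will differ between machines and Python versions.

```python
import statistics
import timeit

# The baseline plus the five variants, in the order of the results above.
VARIANTS = [
    "False",
    "not result",
    "result is False",
    "result is not True",
    "result != True",
    "result == False",
]

SETUP = "import os\nresult = os.path.isdir('/tmp')"


def measure(condition, repeat=5, number=100000):
    """Return the mean time of `number` executions, averaged over `repeat` runs."""
    timer = timeit.Timer(stmt="if %s:\n    pass" % condition, setup=SETUP)
    return statistics.mean(timer.repeat(repeat=repeat, number=number))


if __name__ == "__main__":
    baseline = measure(VARIANTS[0])
    for condition in VARIANTS:
        elapsed = measure(condition)
        print("%-20s %.6f  %+.1f%%" % (condition, elapsed, (elapsed / baseline - 1) * 100))
```

Raising repeat and number back to 100 and 1000000 reproduces the original setup at the cost of a longer run.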

Now these results weren't exactly what I was expecting. I thought not would come in last but instead it came in first! Although is False came in second it is almost twice as slow compared to baseline. Why is that?

After digging around in CPython I found the following definition for comparison operators:

static PyObject * cmp_outcome(int op, PyObject *v, PyObject *w)
{
    int res = 0;
    switch (op) {
    case PyCmp_IS:
        res = (v == w);
        break;
    case PyCmp_IS_NOT:
        res = (v != w);
        break;
    /* ... skip PyCmp_IN, PyCmp_NOT_IN, PyCmp_EXC_MATCH ... */
    default:
        return PyObject_RichCompare(v, w, op);
    }
    v = res ? Py_True : Py_False;
    Py_INCREF(v);
    return v;
}

where PyObject_RichCompare is defined as follows (definition order reversed

in actual sources):

/* Perform a rich comparison with object result. This wraps do_richcompare()
   with a check for NULL arguments and a recursion check. */
PyObject * PyObject_RichCompare(PyObject *v, PyObject *w, int op)
{
    PyObject *res;

    assert(Py_LT <= op && op <= Py_GE);
    if (v == NULL || w == NULL) {
        if (!PyErr_Occurred())
            PyErr_BadInternalCall();
        return NULL;
    }
    if (Py_EnterRecursiveCall(" in comparison"))
        return NULL;
    res = do_richcompare(v, w, op);
    Py_LeaveRecursiveCall();
    return res;
}

/* Perform a rich comparison, raising TypeError when the requested comparison
   operator is not supported. */
static PyObject * do_richcompare(PyObject *v, PyObject *w, int op)
{
    richcmpfunc f;
    PyObject *res;
    int checked_reverse_op = 0;

    if (v->ob_type != w->ob_type &&
        PyType_IsSubtype(w->ob_type, v->ob_type) &&
        (f = w->ob_type->tp_richcompare) != NULL) {
        checked_reverse_op = 1;
        res = (*f)(w, v, _Py_SwappedOp[op]);
        if (res != Py_NotImplemented)
            return res;
        Py_DECREF(res);
    }
    if ((f = v->ob_type->tp_richcompare) != NULL) {
        res = (*f)(v, w, op);
        if (res != Py_NotImplemented)
            return res;
        Py_DECREF(res);
    }
    if (!checked_reverse_op && (f = w->ob_type->tp_richcompare) != NULL) {
        res = (*f)(w, v, _Py_SwappedOp[op]);
        if (res != Py_NotImplemented)
            return res;
        Py_DECREF(res);
    }

    /**********************************************************************
    IMPORTANT: actual execution enters the next block because the bool
    type doesn't implement its own `tp_richcompare` function, see:
    Objects/boolobject.c PyBool_Type (near the bottom of that file)
    ***********************************************************************/

    /* If neither object implements it, provide a sensible default
       for == and !=, but raise an exception for ordering. */
    switch (op) {
    case Py_EQ:
        res = (v == w) ? Py_True : Py_False;
        break;
    case Py_NE:
        res = (v != w) ? Py_True : Py_False;
        break;
    default:
        PyErr_Format(PyExc_TypeError,
                     "'%s' not supported between instances of '%.100s' and '%.100s'",
                     opstrings[op],
                     v->ob_type->tp_name,
                     w->ob_type->tp_name);
        return NULL;
    }
    Py_INCREF(res);
    return res;
}

The not operator is defined in Objects/object.c as follows (definition order reversed in actual sources):

/* equivalent of 'not v'
   Return -1 if an error occurred */
int PyObject_Not(PyObject *v)
{
    int res;
    res = PyObject_IsTrue(v);
    if (res < 0)
        return res;
    return res == 0;
}

/* Test a value used as condition, e.g., in a for or if statement.
   Return -1 if an error occurred */
int PyObject_IsTrue(PyObject *v)
{
    Py_ssize_t res;
    if (v == Py_True)
        return 1;
    if (v == Py_False)
        return 0;
    if (v == Py_None)
        return 0;
    /*
    IMPORTANT: skip the rest because we are working with bool so this
    function will return after the first or the second if statement!
    */
}

So a rough overview of calculating the above expressions is:

- not - call 1 function which compares the argument with Py_True/Py_False, compare its result with 0
- is/is not - do a switch/case/break, compare the result to Py_True/Py_False, call 1 function (Py_INCREF)
- ==/!= - switch/default (that is evaluate all case conditions before that), call 1 function (PyObject_RichCompare), which performs a couple of checks and calls another function (do_richcompare), which does a few more checks before executing switch/case/compare to Py_True/Py_False, call Py_INCREF and return the result.

Obviously not has the shortest code which needs to be executed.

We can also invoke the dis module, a disassembler of Python byte code into mnemonics, like so (it needs a function to disassemble):

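import dis

def f(result):
    if False:
        pass

# dis.dis() prints the listing itself and returns None,
# so wrapping it in print() would only add a stray "None" line
dis.dis(f)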

From the results below you can see that all expression variants are very similar:

--------------- if False -------------------------

0 LOAD_GLOBAL 0 (False)

3 POP_JUMP_IF_FALSE 9

6 JUMP_FORWARD 0 (to 9)

>> 9 LOAD_CONST 0 (None)

12 RETURN_VALUE None

--------------- if not result --------------------

0 LOAD_FAST 0 (result)

3 POP_JUMP_IF_TRUE 9

6 JUMP_FORWARD 0 (to 9)

>> 9 LOAD_CONST 0 (None)

12 RETURN_VALUE None

--------------- if result is False ---------------

0 LOAD_FAST 0 (result)

3 LOAD_GLOBAL 0 (False)

6 COMPARE_OP 8 (is)

9 POP_JUMP_IF_FALSE 15

12 JUMP_FORWARD 0 (to 15)

>> 15 LOAD_CONST 0 (None)

18 RETURN_VALUE None

--------------- if result is not True ------------

0 LOAD_FAST 0 (result)

3 LOAD_GLOBAL 0 (True)

6 COMPARE_OP 9 (is not)

9 POP_JUMP_IF_FALSE 15

12 JUMP_FORWARD 0 (to 15)

>> 15 LOAD_CONST 0 (None)

18 RETURN_VALUE None

--------------- if result != True ----------------

0 LOAD_FAST 0 (result)

3 LOAD_GLOBAL 0 (True)

6 COMPARE_OP 3 (!=)

9 POP_JUMP_IF_FALSE 15

12 JUMP_FORWARD 0 (to 15)

>> 15 LOAD_CONST 0 (None)

18 RETURN_VALUE None

--------------- if result == False ---------------

0 LOAD_FAST 0 (result)

3 LOAD_GLOBAL 0 (False)

6 COMPARE_OP 2 (==)

9 POP_JUMP_IF_FALSE 15

12 JUMP_FORWARD 0 (to 15)

>> 15 LOAD_CONST 0 (None)

18 RETURN_VALUE None

--------------------------------------------------

The last 3 instructions are the same (that is the implicit return None of the function).

LOAD_GLOBAL is to "read" the True or False boolean constants and

LOAD_FAST is to "read" the function parameter in this example.

All of them _JUMP_ outside the if statement and the only difference is

which comparison operator is executed (if any in the case of not).
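The listings above come from an older CPython and the opcode names have changed between releases (for example, newer versions use a dedicated IS_OP instruction for is). A small sketch for regenerating the comparison on whatever interpreter is at hand; the opnames() helper and the generated function f are my own illustration, not part of the dis module:

```python
import dis

VARIANTS = [
    "False",
    "not result",
    "result is False",
    "result is not True",
    "result != True",
    "result == False",
]


def opnames(condition):
    """Compile `def f(result): if <condition>: pass` and list its opcode names."""
    source = "def f(result):\n    if %s:\n        pass\n" % condition
    namespace = {}
    exec(source, namespace)  # defines f inside the throwaway namespace
    return [ins.opname for ins in dis.get_instructions(namespace["f"])]


for condition in VARIANTS:
    print("%-20s %s" % (condition, " ".join(opnames(condition))))
```

Whatever the version, the pattern to look for is the same as above: the == and != variants carry an explicit comparison instruction while not result goes straight to a conditional jump.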

UPDATE 1: as I was publishing this blog post I read the following comments from Ammar Askar who also gave me a few pointers on IRC:

Note that this code path also has a direct inlined check for booleans, which should help too: https://t.co/YJ0az3q3qu

— Ammar Askar (@ammar2) December 6, 2019

So go ahead and take a look at

case TARGET(POP_JUMP_IF_TRUE).

UPDATE 2:

After the above comments from Ammar Askar on Twitter and from Kevin Kofler below I decided to try and change one of the expressions a bit:

t = timeit.Timer(
"""
result = not result
if result:
    pass
"""
,
"""
import os
result = os.path.isdir('/tmp')
"""
)

that is, calculate the not operation, assign to variable and then evaluate the conditional statement in an attempt to bypass the built-in compiler optimization. The disassembled code looks like this:

0 LOAD_FAST 0 (result)

2 UNARY_NOT

4 STORE_FAST 0 (result)

6 LOAD_FAST 0 (result)

8 POP_JUMP_IF_FALSE 10

10 LOAD_CONST 0 (None)

12 RETURN_VALUE None

The execution time was around 0.022 which is between is and ==. However the

not result operation itself (without assignment) appears to execute for 0.017

which still makes the not operator faster than the is operator, but only just!

As already pointed out this is a fairly complex topic and it is evident that not everything can be compared directly in the same context (expression).

P.S.

When I teach Python I try to explain what is going on under the hood. Sometimes I draw squares on the whiteboard to represent various cells in memory and visualize things. One of my students asked me how do I know all of this? The essentials (for any programming language) are always documented in its official documentation. The rest is hacking around in its source code and learning how it works. This is also what I expect people working with/for me to be doing!

See you soon and Happy learning!


Introducing pylint-django 2.0

Today I have released pylint-django version 2.0 on PyPI. The changes are centered around compatibility with the latest pylint 2.0 and astroid 2.0 versions. I've also bumped pylint-django's version number to reflect that.

A major component, class transformations, was updated so don't be surprised if there are bugs. All the existing test cases pass but you never know what sort of edge case there could be.

I'm also hosting a workshop/corporate training about writing pylint plugins. If you are interested see this page!

Thanks for reading and happy testing!


Introducing pylint-django 0.8.0

Since my previous post was about writing pylint plugins I figured I'd let you know that I've released pylint-django version 0.8.0 over the weekend. This release merges all pull requests which were pending till now so make sure to read the change log.

Starting with this release Colin Howe and I are the new maintainers of this package. My immediate goal is to triage all of the open issues and figure out if they still reproduce. If yes, try to come up with fixes for them or at least get the conversation going again.

My next goal is to integrate pylint-django with Kiwi TCMS and start resolving all the 4000+ errors and warnings that it produces.

You are welcome to contribute of course. I'm also interested in hosting a workshop on the topic of pylint plugins.

Thanks for reading and happy testing!


How to write pylint checker plugins

In this post I will walk you through the process of learning how to write additional checkers for pylint!

Prerequisites

- Read Contributing to pylint to get basic knowledge of how to execute the test suite and how it is structured. Basically call tox -e py36. Verify that all tests PASS locally!

- Read pylint's How To Guides, in particular the section about writing a new checker. A plugin is usually a Python module that registers a new checker.

- Most of pylint checkers are AST based, meaning they operate on the abstract syntax tree of the source code. You will have to familiarize yourself with the AST node reference for the astroid and ast modules. Pylint uses Astroid for parsing and augmenting the AST.

  NOTE: there is compact and excellent documentation provided by the Green Tree Snakes project. I would recommend the Meet the Nodes chapter.

  Astroid also provides exhaustive documentation and node API reference.

  WARNING: sometimes Astroid node class names don't match the ones from ast!

- Your interactive shell weapons are ast.dump(), ast.parse(), astroid.parse() and astroid.extract_node(). I use them inside an interactive Python shell to figure out how a piece of source code is parsed and converted back to AST nodes! You can also try this ast node pretty printer! I personally haven't used it.

How pylint processes the AST tree

Every checker class may include special methods with names

visit_xxx(self, node) and leave_xxx(self, node) where xxx is the lowercase

name of the node class (as defined by astroid). These methods are executed

automatically when the parser iterates over nodes of the respective type.

All of the magic happens inside such methods. They are responsible for collecting information about the context of specific statements or patterns that you wish to detect. The hard part is figuring out how to collect all the information you need because sometimes it can be spread across nodes of several different types (e.g. more complex code patterns).

There is a special decorator called @utils.check_messages. You have to list

all message ids that your visit_ or leave_ method will generate!

How to select message codes and IDs

One of the most unclear things for me is message codes. pylint docs say

The message-id should be a 5-digit number, prefixed with a message category. There are multiple message categories, these being C, W, E, F, R, standing for Convention, Warning, Error, Fatal and Refactoring. The rest of the 5 digits should not conflict with existing checkers and they should be consistent across the checker. For instance, the first two digits should not be different across the checker.

I usually have trouble with the numbering part so you will have to get creative or look at existing checker codes.

Practical example

In Kiwi TCMS there's legacy code that looks like this:

def add_cases(run_ids, case_ids):
    trs = TestRun.objects.filter(run_id__in=pre_process_ids(run_ids))
    tcs = TestCase.objects.filter(case_id__in=pre_process_ids(case_ids))

    for tr in trs.iterator():
        for tc in tcs.iterator():
            tr.add_case_run(case=tc)

    return

Notice the dangling return statement at the end! It is useless because when missing

the default return value of this function will still be None. So I've decided to

create a plugin for that.

Armed with the knowledge above I first try the ast parser in the console:

Python 3.6.3 (default, Oct 5 2017, 20:27:50)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-11)] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import ast

>>> import astroid

>>> ast.dump(ast.parse('def func():\n return'))

"Module(body=[FunctionDef(name='func', args=arguments(args=[], vararg=None, kwonlyargs=[], kw_defaults=[], kwarg=None, defaults=[]), body=[Return(value=None)], decorator_list=[], returns=None)])"

>>>

>>>

>>> node = astroid.parse('def func():\n return')

>>> node

<Module l.0 at 0x7f5b04621b38>

>>> node.body

[<FunctionDef.func l.1 at 0x7f5b046219e8>]

>>> node.body[0]

<FunctionDef.func l.1 at 0x7f5b046219e8>

>>> node.body[0].body

[<Return l.2 at 0x7f5b04621c18>]

As you can see there is a FunctionDef node representing the function and it has

a body attribute which is a list of all statements inside the function. The last

element is .body[-1] and it is of type Return! The Return node also has an

attribute called .value which is the return value! The complete code will look

like this:

import astroid

from pylint import checkers
from pylint import interfaces
from pylint.checkers import utils


class UselessReturnChecker(checkers.BaseChecker):
    __implements__ = interfaces.IAstroidChecker

    name = 'useless-return'

    msgs = {
        'R2119': ("Useless return at end of function or method",
                  'useless-return',
                  'Emitted when a bare return statement is found at the end of '
                  'function or method definition'
                  ),
    }

    @utils.check_messages('useless-return')
    def visit_functiondef(self, node):
        """
            Checks for presence of return statement at the end of a function
            "return" or "return None" are useless because None is the default
            return type if they are missing
        """
        # if the function has empty body then return
        if not node.body:
            return

        last = node.body[-1]
        if isinstance(last, astroid.Return):
            # e.g. "return"
            if last.value is None:
                self.add_message('useless-return', node=node)
            # e.g. "return None"
            elif isinstance(last.value, astroid.Const) and (last.value.value is None):
                self.add_message('useless-return', node=node)


def register(linter):
    """required method to auto register this checker"""
    linter.register_checker(UselessReturnChecker(linter))
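If you want to experiment with the detection logic before wiring it into pylint, roughly the same check can be sketched with only the standard library ast module. This is not the pylint plugin, just an illustration: the class and function names are made up, it assumes Python 3.8 or newer (where return None parses to ast.Constant), and it ignores async functions.

```python
import ast


class UselessReturnFinder(ast.NodeVisitor):
    """Collect names of functions whose last statement is `return` or `return None`."""

    def __init__(self):
        self.found = []

    def visit_FunctionDef(self, node):
        last = node.body[-1]  # valid source always has at least one statement
        if isinstance(last, ast.Return):
            value = last.value
            # bare "return" has value None, "return None" has a Constant node
            if value is None or (isinstance(value, ast.Constant) and value.value is None):
                self.found.append(node.name)
        self.generic_visit(node)  # keep walking so nested functions are checked too


def find_useless_returns(source):
    finder = UselessReturnFinder()
    finder.visit(ast.parse(source))
    return finder.found


SAMPLE = """
def a():
    return

def b():
    return None

def c():
    return 1
"""

print(find_useless_returns(SAMPLE))
```

Note the naming difference mentioned in the WARNING above: ast.NodeVisitor dispatches to visit_FunctionDef while pylint checkers use the lowercase visit_functiondef.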

Here's how to execute the new plugin:

$ PYTHONPATH=./myplugins pylint --load-plugins=uselessreturn tcms/xmlrpc/api/testrun.py | grep useless-return

W: 40, 0: Useless return at end of function or method (useless-return)

W:117, 0: Useless return at end of function or method (useless-return)

W:242, 0: Useless return at end of function or method (useless-return)

W:495, 0: Useless return at end of function or method (useless-return)

NOTES:

- If you contribute this code upstream and pylint releases it you will get a traceback:

  pylint.exceptions.InvalidMessageError: Message symbol 'useless-return' is already defined

  this means your checker has been released in the latest version and you can drop the custom plugin!

- This example is fairly simple because the AST tree provides the information we need in a very handy way. Take a look at some of my other checkers to get a feeling of what a more complex checker looks like!

- Write and run tests for your new checkers, especially if contributing upstream. Keep in mind that the new checker will be executed against existing code and in combination with other checkers which could lead to some interesting results. I will leave the testing to you, it is all described in the documentation.

This particular example I've contributed as PR #1821 which happened to contradict an existing checker. The update, raising warnings only when there's a single return statement in the function body, is PR #1823.

Workshop around the corner

I will be working together with HackSoft on an in-house workshop/training for writing pylint plugins. I'm also looking at reviving pylint-django so we can write more plugins specifically for Django based projects.

If you are interested in workshop and training on the topic let me know!

Thanks for reading and happy testing!


Can a nested function assign to variables from the parent function

While working on a new feature for Pelican I've put myself in a situation where I have two functions, one nested inside the other, and I want the nested function to assign to a variable from the parent function. Turns out this isn't so easy in Python!

def hello(who):
    greeting = 'Hello'
    i = 0

    def do_print():
        if i >= 5:
            return

        print i, greeting, who
        i += 1
        do_print()

    do_print()

if __name__ == "__main__":
    hello('World')

The example above is a recursive Hello World. Notice the i += 1 line!

This line causes i to be considered local to do_print() and the result

is that we get the following failure on Python 2.7:

Traceback (most recent call last):

File "./test.py", line 16, in <module>

hello('World')

File "./test.py", line 13, in hello

do_print()

File "./test.py", line 6, in do_print

if i >= 5:

UnboundLocalError: local variable 'i' referenced before assignment
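The decision that i is local is made when the function is compiled, not when it runs, and it can be inspected on the code object. A minimal Python 3 sketch (the reads/assigns helper names are mine) that shows the difference between only reading an outer variable and assigning to it:

```python
def hello():
    i = 0

    def reads():
        return i   # only reads i, so it is looked up in hello()

    def assigns():
        i += 1     # assignment makes i local to assigns()
        return i

    return reads, assigns


reads, assigns = hello()
print(reads.__code__.co_freevars)    # variables taken from the enclosing scope
print(assigns.__code__.co_varnames)  # variables treated as local

try:
    assigns()
except UnboundLocalError as err:
    print("UnboundLocalError:", err)
```

The exact wording of the error message differs between Python versions but the exception type is the same as in the traceback above.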

We can work around this by using a global variable like so:

i = 0

def hello(who):
    greeting = 'Hello'

    def do_print():
        global i

        if i >= 5:
            return

        print i, greeting, who
        i += 1
        do_print()

    do_print()

However I prefer not to expose internal state outside the hello() function. If only there were a keyword similar to global. In Python 3 there is nonlocal!

def hello(who):
    greeting = 'Hello'
    i = 0

    def do_print():
        nonlocal i

        if i >= 5:
            return

        print(i, greeting, who)
        i += 1
        do_print()

    do_print()

nonlocal is nice but it doesn't exist in Python 2! The workaround is to not assign state to the variable itself, but instead use a mutable container. That is instead of a scalar use a list or a dictionary like so:

def hello(who):
    greeting = 'Hello'
    i = [0]

    def do_print():
        if i[0] >= 5:
            return

        print i[0], greeting, who
        i[0] += 1
        do_print()

    do_print()

Thanks for reading and happy coding!


Python 2 vs. Python 3 List Sort Causes Bugs

Can sorting a list of values crash your software? Apparently it can and is another example of my Hello World Bugs. Python 3 has simplified the rules for ordering comparisons which changes the behavior of sorting lists when their contents are dictionaries. For example:

Python 2.7.5 (default, Oct 11 2015, 17:47:16)

[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux2

>>>

>>> [{'a':1}, {'b':2}] < [{'a':1}, {'b':2, 'c':3}]

True

>>>

Python 3.5.1 (default, Apr 27 2016, 04:21:56)

[GCC 4.8.3 20140911 (Red Hat 4.8.3-9)] on linux

>>> [{'a':1}, {'b':2}] < [{'a':1}, {'b':2, 'c':3}]

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

TypeError: unorderable types: dict() < dict()

>>>

The problem is that the second elements in both lists have different keys and Python doesn't know how to compare them. In earlier Python versions this has been special cased as described here by Ned Batchelder (the author of Python's coverage tool) but in Python 3 dictionaries have no natural sort order.

In the case of django-chartit (of which I'm now the official maintainer) this bug triggers when you want to plot data from multiple sources (models) on the same chart. In this case the fields coming from each data series are different and the above error is triggered.

I have worked around this in commit 9d9033e by simply disabling an iterator sort but this is sub-optimal and I'm not quite certain what the side effect might be. I suspect you may end up with a chart where the order of values on the X axis isn't the same for the different models, e.g. one graph plotting the data in ascending order the other one in descending.

The trouble also comes from the fact that we're sorting an iterator (a list of fields) by telling Python to use a list of dicts to determine the sort order. In this arrangement there is no way to tell Python how we want to compare our dicts. The only solution I can think of is creating a custom class and implementing custom comparison for this data structure. Note that Python 3 no longer consults a __cmp__() method, so this means implementing the rich comparison methods such as __lt__() and __eq__()!
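A rough sketch of what such a wrapper could look like in Python 3. The class name SortableDict and the chosen ordering (compare the sorted key/value pairs) are assumptions of mine for illustration; this is not what django-chartit does and it is not guaranteed to match the old Python 2 ordering in general. It also assumes the keys and values themselves are mutually comparable.

```python
import functools


@functools.total_ordering
class SortableDict:
    """Wrap a dict and order it by its sorted (key, value) pairs."""

    def __init__(self, data):
        self.data = data

    def _key(self):
        return sorted(self.data.items())

    def __eq__(self, other):
        return self._key() == other._key()

    def __lt__(self, other):
        return self._key() < other._key()


left = [SortableDict({'a': 1}), SortableDict({'b': 2})]
right = [SortableDict({'a': 1}), SortableDict({'b': 2, 'c': 3})]
print(left < right)  # no TypeError, the wrapper defines the ordering

# When only sorting is needed, a key function avoids the wrapper class entirely.
print(sorted([{'b': 2}, {'a': 1}], key=lambda d: sorted(d.items())))
```

functools.total_ordering fills in the remaining comparison methods from __eq__() and __lt__(), which keeps the class short.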


On Python Infinite Loops

How do you write an endless loop without using True, False, number constants and comparison operators in Python?

I've been working on the mutation test tool

Cosmic Ray and discovered that it

was missing a boolean replacement operator, that is an operator which will switch

True to False and vice versa, so I wrote one. I've also added some tests to

Cosmic Ray's test suite and then I hit the infinite loop problem.

CR's test suite contains the following code inside a module called adam.py

while True:
    break

The test suite executes mutations on adam.py and then runs some tests which

it expects to fail. During execution one of the mutations is

replace break with continue which makes the above loop infinite. The test suite

times out after a while and kills the mutation. Everything fails as expected and

we're good.

Adding my boolean replacement operator broke this function. All of the other mutations work as expected but then the loop becomes

while False:
    break

When we test this particular mutation there is no infinite loop so Cosmic Ray's test suite doesn't time out like it should and an error is reported.

job ID 25:Outcome.SURVIVED:adam

command: cosmic-ray worker adam boolean_replacer 2 unittest -- tests

--- mutation diff ---

--- a/home/travis/build/MrSenko/cosmic-ray/test_project/adam.py

+++ b/home/travis/build/MrSenko/cosmic-ray/test_project/adam.py

@@ -32,6 +32,6 @@

return x

def trigger_infinite_loop():

- while True:

+ while False:

break

So the question becomes how to write the loop condition in such a way that nothing will mutate it but it will still remain true, so that when break becomes continue this piece of code will become an infinite loop? Using True or False constants obviously is a no go. Same goes for numeric constants, e.g. 1, or comparison operators like >, <, is, not, etc. All of them will be mutated and will break the loop condition.

So I took a look at the docs for truth value testing and discovered my solution:

while object():
    break

I'm creating an object instance here which will not be mutated by any of the existing mutation operators.
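The reason this works is the default rule of truth value testing: an object is considered true unless its class defines __bool__() returning False or __len__() returning zero. A tiny sketch to convince yourself (the two helper classes are made up for the demonstration):

```python
class AlwaysEmpty:
    """Falsy because its length is zero."""

    def __len__(self):
        return 0


class ExplicitlyFalse:
    """Falsy because __bool__() says so."""

    def __bool__(self):
        return False


# A plain object() defines neither method, so it is always truthy.
print(bool(object()))
print(bool(AlwaysEmpty()))
print(bool(ExplicitlyFalse()))
```

Since object() is a call and not a constant or a comparison, none of the mutation operators mentioned above has anything to replace.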

Thanks for reading and happy testing!


Mismatch in Pyparted Interfaces

Last week my co-worker Marek Hruscak, from Red Hat, found an interesting case of mismatch between the two interfaces provided by pyparted. In this article I'm going to give an example, using simplified code and explain what is happening. From pyparted's documentation we learn the following

pyparted is a set of native Python bindings for libparted. libparted is the library portion of the GNU parted project. With pyparted, you can write applications that interact with disk partition tables and filesystems.

The Python bindings are implemented in two layers. Since libparted itself is written in C without any real implementation of objects, a simple 1:1 mapping of externally accessible libparted functions was written. This mapping is provided in the _ped Python module. You can use that module if you want to, but it's really just meant for the larger parted module.

- _ped: libparted Python bindings, direct 1:1 function mapping
- parted: Native Python code building on _ped, complete with classes, exceptions, and advanced functionality.

The two interfaces are the _ped and parted modules. As a user I expect them

to behave exactly the same but they don't. For example some partition properties

are read-only in libparted and _ped but not in parted. This is the mismatch

I'm talking about.

Consider the following tests (also available on GitHub)

diff --git a/tests/baseclass.py b/tests/baseclass.py

index 4f48b87..30ffc11 100644

--- a/tests/baseclass.py

+++ b/tests/baseclass.py

@@ -168,6 +168,12 @@ class RequiresPartition(RequiresDisk):

self._part = _ped.Partition(disk=self._disk, type=_ped.PARTITION_NORMAL,

self._part = _ped.Partition(disk=self._disk, type=_ped.PARTITION_NORMAL,

start=0, end=100, fs_type=_ped.file_system_type_get("ext2"))

+ geom = parted.Geometry(self.device, start=100, length=100)

+ fs = parted.FileSystem(type='ext2', geometry=geom)

+ self.part = parted.Partition(disk=self.disk, type=parted.PARTITION_NORMAL,

+ geometry=geom, fs=fs)

+

+

# Base class for any test case that requires a hash table of all

# _ped.DiskType objects available

class RequiresDiskTypes(unittest.TestCase):

diff --git a/tests/test__ped_partition.py b/tests/test__ped_partition.py

index 7ef049a..26449b4 100755

--- a/tests/test__ped_partition.py

+++ b/tests/test__ped_partition.py

@@ -62,8 +62,10 @@ class PartitionGetSetTestCase(RequiresPartition):

self.assertRaises(exn, setattr, self._part, "num", 1)

self.assertRaises(exn, setattr, self._part, "fs_type",

_ped.file_system_type_get("fat32"))

- self.assertRaises(exn, setattr, self._part, "geom",

- _ped.Geometry(self._device, 10, 20))

+ with self.assertRaises((AttributeError, TypeError)):

+# setattr(self._part, "geom", _ped.Geometry(self._device, 10, 20))

+ self._part.geom = _ped.Geometry(self._device, 10, 20)

+

self.assertRaises(exn, setattr, self._part, "disk", self._disk)

# Check that values have the right type.

diff --git a/tests/test_parted_partition.py b/tests/test_parted_partition.py

index 0a406a0..8d8d0fd 100755

--- a/tests/test_parted_partition.py

+++ b/tests/test_parted_partition.py

@@ -23,7 +23,7 @@

import parted

import unittest

-from tests.baseclass import RequiresDisk

+from tests.baseclass import RequiresDisk, RequiresPartition

# One class per method, multiple tests per class. For these simple methods,

# that seems like good organization. More complicated methods may require

@@ -34,11 +34,11 @@ class PartitionNewTestCase(unittest.TestCase):

# TODO

self.fail("Unimplemented test case.")

-@unittest.skip("Unimplemented test case.")

-class PartitionGetSetTestCase(unittest.TestCase):

+class PartitionGetSetTestCase(RequiresPartition):

def runTest(self):

- # TODO

- self.fail("Unimplemented test case.")

+ with self.assertRaises((AttributeError, TypeError)):

+ #setattr(self.part, "geometry", parted.Geometry(self.device, start=10, length=20))

+ self.part.geometry = parted.Geometry(self.device, start=10, length=20)

@unittest.skip("Unimplemented test case.")

class PartitionGetFlagTestCase(unittest.TestCase):

The test in test__ped_partition.py works without problems, I've modified it for

visual reference only. This was also the inspiration behind the test in

test_parted_partition.py. However the second test fails with

======================================================================

FAIL: runTest (tests.test_parted_partition.PartitionGetSetTestCase)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/tmp/pyparted/tests/test_parted_partition.py", line 41, in runTest

self.part.geometry = parted.Geometry(self.device, start=10, length=20)

AssertionError: (<type 'exceptions.AttributeError'>, <type 'exceptions.TypeError'>) not raised

----------------------------------------------------------------------

Now it's clear that something isn't quite the same between the two interfaces.

If we look at src/parted/partition.py we see the following snippet

fileSystem = property(lambda s: s._fileSystem, lambda s, v: setattr(s, "_fileSystem", v))
geometry = property(lambda s: s._geometry, lambda s, v: setattr(s, "_geometry", v))
system = property(lambda s: s.__writeOnly("system"), lambda s, v: s.__partition.set_system(v))
type = property(lambda s: s.__partition.type, lambda s, v: setattr(s.__partition, "type", v))

The geometry property is indeed read-write but the system property is write-only.

git blame leads us to the interesting

commit 2fc0ee2b, which changes

definitions for quite a few properties and removes the _readOnly method which raises

an exception. Even more interesting is the fact that the Partition.geometry property

hasn't been changed. If you look closer you will notice that the deleted definition and

the new one are exactly the same. Looks like the problem existed even before this change.

Digging down even further we find

commit 7599aa1

which is the very first implementation of the parted module. There you can see the

_readOnly method and some properties like path and disk correctly marked as such

but geometry isn't.

Shortly after this commit the first test was added (4b9de0e) and a bit later the second, empty test class, was added (c85a5e6). This only goes to show that every piece of software needs appropriate QA coverage, which pyparted was kind of lacking (and I'm trying to change that).

The reason this bug went unnoticed for so long is the limited exposure of pyparted. To my knowledge anaconda, the Fedora installer, is its biggest (if not its only) consumer, and maybe it uses only the _ped interface (I didn't check) or it doesn't try to do silly things like assigning a value to a read-only property.

The lesson from this story is to test all of your interfaces and also make sure they are behaving in exactly the same manner!

Thanks for reading and happy testing!


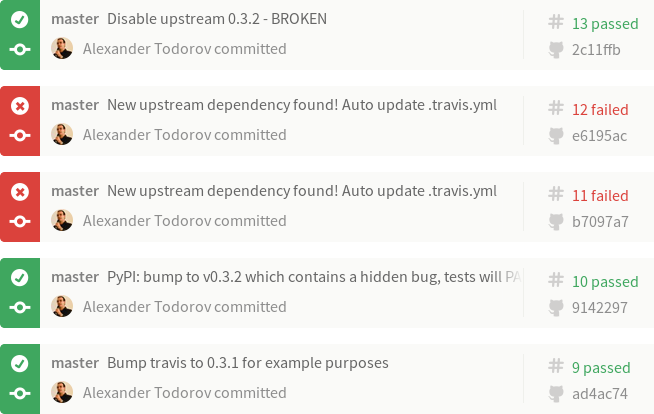

Automatic Upstream Dependency Testing

Ever since RHEL 7.2 python-libs broke s3cmd I've been pondering an age-old problem: how do I know if my software works with the latest upstream dependencies? How can I proactively monitor for new versions and add them to my test matrix?

Combining my previous experience with Difio and monitoring upstream sources with Forbes Lindesay's GitHub Automation talk at DEVit Conf, I came up with a plan:

- Make an application which will execute when a new upstream version is available;

- Automatically update .travis.yml for the projects I'm interested in;

- Let Travis-CI execute my test suite for all available upstream versions;

- Profit!

How Does It Work

First we need to monitor upstream! RubyGems.org has a nice webhooks interface; you can even trigger on individual packages. PyPI, however, doesn't have anything like this :(. My solution is to run a cron job every hour and parse their RSS feed for newly released packages. This has been working previously for Difio so I reused one function from that code.
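To make the RSS step concrete, here is a minimal sketch of the parsing, written for Python 3. The feed content below is made up for the example and the helper name new_releases is hypothetical; the only assumption about the real feed is that each item title has the form "name version".

```python
from xml.dom.minidom import parseString

# Made-up sample feed; each <item> title is assumed to be "name version"
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Sample package updates</title>
    <item><title>FOO 0.3.2</title></item>
    <item><title>Markdown 9.9.9</title></item>
    <item><title>a title we cannot parse</title></item>
  </channel>
</rss>"""


def new_releases(feed_xml, watched):
    """Return (name, version) pairs for watched packages found in the feed."""
    found = []
    dom = parseString(feed_xml)
    for item in dom.getElementsByTagName("item"):
        title = item.getElementsByTagName("title")[0].firstChild.data
        parts = title.split(" ")
        if len(parts) != 2:
            continue              # skip titles that are not "name version"
        name, version = parts
        if name in watched:
            found.append((name, version))
    return found


releases = new_releases(SAMPLE_FEED, {"Markdown"})
print(releases)
```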

After finding anything we're interested in comes the hard part - automatically

updating .travis.yml using the GitHub API. I've described this in more detail

here. This time

I've slightly modified the code to update only when needed and accept more

parameters so it can be reused.

Travis-CI has a clean interface to specify environment variables and defining several of them creates a test matrix. This is exactly what I'm doing. .travis.yml is updated with a new ENV setting, which determines the upstream package version. After the commit a new build is triggered which includes the expanded test matrix.
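The update itself is plain text manipulation. The sketch below (Python 3) shows the idea on a made-up .travis.yml; the file content, the FOO package and the helper name add_version are assumptions for illustration. It relies on the env list being the last section of the file and it leaves the file untouched when the version is already listed.

```python
# Made-up configuration; the env list must be the last section in the file
SAMPLE_TRAVIS_YML = """language: python
python:
  - 2.7
install:
  - pip install FOO==$VERSION
script:
  - python setup.py test
env:
  - VERSION=0.3.1
"""


def add_version(travis_yml, version):
    """Append a VERSION entry to the env list unless it is already there."""
    line = "  - VERSION=%s" % version
    text = travis_yml.rstrip()
    if line in text.splitlines():
        return travis_yml         # nothing to do, keeps the update idempotent
    return text + "\n" + line + "\n"


updated = add_version(SAMPLE_TRAVIS_YML, "0.3.2")
unchanged = add_version(updated, "0.3.2")
print(updated)
```

Each entry under env produces one job in the build, so the appended line is what expands the matrix.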

Example

Imagine that our Project 2501 depends on FOO version 0.3.1. The build log illustrates what happened:

- Build #9 is what we've tested with FOO-0.3.1 and released to production. Test result is PASS!

- Build #10 - meanwhile upstream releases FOO-0.3.2 which causes our project to break. We're not aware of this and continue developing new features while all test results still PASS! When our customers upgrade their systems Project 2501 will break! Tests didn't catch it because the test matrix wasn't updated. Please ignore the actual commit message in the example! I've used the same repository for the dummy dependency package.

- Build #11 - the monitoring solution finds FOO-0.3.2 and updates the test matrix automatically. The build immediately breaks! More precisely the test with version 0.3.2 fails!

- Build #12 - we've alerted FOO.org about their problem and they've released FOO-0.3.3. Our monitor has found that and updated the test matrix. However the 0.3.2 test job still fails!

- Build #13 - we decide to work around the 0.3.2 failure or simply handle the error gracefully. In this example I've removed version 0.3.2 from the test matrix to simulate that. In reality I wouldn't touch .travis.yml but instead update my application and tests to check for that particular version. All test results are PASS again!

Btw Build #11 above was triggered manually (./monitor.py) while Build #12 came from OpenShift, my hosting environment.

At present I have this monitoring enabled for my new Markdown extensions and will also add it to django-s3-cache once it migrates to Travis-CI (it uses drone.io now).

Enough Talk, Show me the Code

#!/usr/bin/env python

import os
import sys
import json
import base64
import httplib
from pprint import pprint
from datetime import datetime
from xml.dom.minidom import parseString

def get_url(url, post_data = None):
    # GitHub requires a valid UA string
    headers = {
        'User-Agent' : 'Mozilla/5.0 (X11; Linux x86_64; rv:10.0.5) Gecko/20120601 Firefox/10.0.5',
    }

    # shortcut for GitHub API calls
    if url.find("://") == -1:
        url = "https://api.github.com%s" % url

    if url.find('api.github.com') > -1:
        if not os.environ.has_key("GITHUB_TOKEN"):
            raise Exception("Set the GITHUB_TOKEN variable")
        else:
            headers.update({
                'Authorization': 'token %s' % os.environ['GITHUB_TOKEN']
            })

    (proto, host_path) = url.split('//')
    (host_port, path) = host_path.split('/', 1)
    path = '/' + path

    if url.startswith('https'):
        conn = httplib.HTTPSConnection(host_port)
    else:
        conn = httplib.HTTPConnection(host_port)

    method = 'GET'
    if post_data:
        method = 'POST'
        post_data = json.dumps(post_data)

    conn.request(method, path, body=post_data, headers=headers)
    response = conn.getresponse()

    if (response.status == 404):
        raise Exception("404 - %s not found" % url)

    result = response.read().decode('UTF-8', 'replace')
    try:
        return json.loads(result)
    except ValueError:
        # not a JSON response
        return result

def post_url(url, data):
    return get_url(url, data)

def monitor_rss(config):
    """
        Scan the PyPI RSS feeds to look for new packages.
        If name is found in config then execute the specified callback.

        @config is a dict with keys matching package names and values
        are lists of dicts
            {
                'cb' : a_callback,
                'args' : dict
            }
    """
    rss = get_url("https://pypi.python.org/pypi?:action=rss")
    dom = parseString(rss)
    for item in dom.getElementsByTagName("item"):
        try:
            title = item.getElementsByTagName("title")[0]
            pub_date = item.getElementsByTagName("pubDate")[0]

            (name, version) = title.firstChild.wholeText.split(" ")
            released_on = datetime.strptime(pub_date.firstChild.wholeText, '%d %b %Y %H:%M:%S GMT')

            if name in config.keys():
                print name, version, "found in config"
                for cfg in config[name]:
                    try:
                        args = cfg['args']
                        args.update({
                            'name' : name,
                            'version' : version,
                            'released_on' : released_on
                        })

                        # execute the call back
                        cfg['cb'](**args)
                    except Exception, e:
                        print e
                        continue
        except Exception, e:
            print e
            continue

def update_travis(data, new_version):
    travis = data.rstrip()
    new_ver_line = " - VERSION=%s" % new_version
    if travis.find(new_ver_line) == -1:
        travis += "\n" + new_ver_line + "\n"
    return travis

def update_github(**kwargs):
    """
        Update GitHub via API
    """
    GITHUB_REPO = kwargs.get('GITHUB_REPO')
    GITHUB_BRANCH = kwargs.get('GITHUB_BRANCH')
    GITHUB_FILE = kwargs.get('GITHUB_FILE')

    # step 1: Get a reference to HEAD
    data = get_url("/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH))
    HEAD = {
        'sha' : data['object']['sha'],
        'url' : data['object']['url'],
    }

    # step 2: Grab the commit that HEAD points to
    data = get_url(HEAD['url'])
    # remove what we don't need for clarity
    for key in data.keys():
        if key not in ['sha', 'tree']:
            del data[key]
    HEAD['commit'] = data

    # step 4: Get a hold of the tree that the commit points to
    data = get_url(HEAD['commit']['tree']['url'])
    HEAD['tree'] = { 'sha' : data['sha'] }

    # intermediate step: get the latest content from GitHub and make an updated version
    for obj in data['tree']:
        if obj['path'] == GITHUB_FILE:
            data = get_url(obj['url']) # get the blob from the tree
            data = base64.b64decode(data['content'])
            break

    old_travis = data.rstrip()
    new_travis = update_travis(old_travis, kwargs.get('version'))

    # bail out if nothing changed
    if new_travis == old_travis:
        print "new == old, bailing out", kwargs
        return

    ####
    #### WARNING WRITE OPERATIONS BELOW
    ####

    # step 3: Post your new file to the server
    data = post_url(
        "/repos/%s/git/blobs" % GITHUB_REPO,
        {
            'content' : new_travis,
            'encoding' : 'utf-8'
        }
    )
    HEAD['UPDATE'] = { 'sha' : data['sha'] }

    # step 5: Create a tree containing your new file
    data = post_url(
        "/repos/%s/git/trees" % GITHUB_REPO,
        {
            "base_tree": HEAD['tree']['sha'],
            "tree": [{
                "path": GITHUB_FILE,
                "mode": "100644",
                "type": "blob",
                "sha": HEAD['UPDATE']['sha']
            }]
        }
    )
    HEAD['UPDATE']['tree'] = { 'sha' : data['sha'] }

    # step 6: Create a new commit
    data = post_url(
        "/repos/%s/git/commits" % GITHUB_REPO,
        {
            "message": "New upstream dependency found! Auto update .travis.yml",
            "parents": [HEAD['commit']['sha']],
            "tree": HEAD['UPDATE']['tree']['sha']
        }
    )
    HEAD['UPDATE']['commit'] = { 'sha' : data['sha'] }

    # step 7: Update HEAD, but don't force it!
    data = post_url(
        "/repos/%s/git/refs/heads/%s" % (GITHUB_REPO, GITHUB_BRANCH),
        {
            "sha": HEAD['UPDATE']['commit']['sha']
        }
    )

    if data.has_key('object'): # PASS
        pass
    else: # FAIL
        print data['message']

if __name__ == "__main__":
    config = {
        "atodorov-test" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/bztest',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            }
        ],
        "Markdown" : [
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-Bugzilla-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-Code-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
            {
                'cb' : update_github,
                'args': {
                    'GITHUB_REPO' : 'atodorov/Markdown-No-Lazy-BlockQuote-Extension',
                    'GITHUB_BRANCH' : 'master',
                    'GITHUB_FILE' : '.travis.yml'
                }
            },
        ],
    }

    # check the RSS to see if we have something new
    monitor_rss(config)


Hosting Multiple Python WSGI Scripts on OpenShift

With OpenShift you can host WSGI Python applications. By default the Python cartridge comes with a simple WSGI app and the following directory layout

./

./.openshift/

./requirements.txt

./setup.py

./wsgi.py

I wanted to add my GitHub Bugzilla Hook in a subdirectory (git submodule actually) and simply reserve a URL which will be served by this app. My intention is also to add other small scripts to the same cartridge in order to better utilize the available resources.

Using WSGIScriptAlias inside .htaccess DOESN'T WORK! OpenShift errors

out when WSGIScriptAlias is present. I suspect this to be a known limitation

and I have an open support case with Red Hat to confirm this.

My workaround is to configure the URL paths from the wsgi.py file in the root

directory. For example

diff --git a/wsgi.py b/wsgi.py
index c443581..20e2bf5 100644
--- a/wsgi.py
+++ b/wsgi.py
@@ -12,7 +12,12 @@ except IOError:
 # line, it's possible required libraries won't be in your searchable path
 #
+from github_bugzilla_hook import wsgi as ghbzh
+
 def application(environ, start_response):
+    # custom paths
+    if environ['PATH_INFO'] == '/github-bugzilla-hook/':
+        return ghbzh.application(environ, start_response)
     ctype = 'text/plain'
     if environ['PATH_INFO'] == '/health':

This does the job and is almost the same as configuring the path in .htaccess.
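If more scripts end up sharing the cartridge, the chain of if statements can be replaced with a small routing table. The following is only a sketch of that idea: hook_app is a hypothetical stand-in for github_bugzilla_hook.wsgi.application, default_app stands in for the stock OpenShift application, and the call helper exists purely to exercise the dispatcher without a web server.

```python
def hook_app(environ, start_response):
    """Hypothetical stand-in for github_bugzilla_hook.wsgi.application."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"hook"]


def default_app(environ, start_response):
    """Stand-in for the application shipped with the cartridge."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"default"]


# exact PATH_INFO value -> WSGI callable
ROUTES = {
    "/github-bugzilla-hook/": hook_app,
}


def application(environ, start_response):
    app = ROUTES.get(environ.get("PATH_INFO", ""), default_app)
    return app(environ, start_response)


def call(path):
    """Invoke the dispatcher the way a WSGI server would, without a network."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(application({"PATH_INFO": path}, start_response))
    return captured["status"], body


print(call("/github-bugzilla-hook/"), call("/health"))
```

Adding another script then means adding one more entry to ROUTES.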

I hope it helps you!


Commit a file with the GitHub API and Python

How do you commit changes to a file using the GitHub API? I found this post by Levi Botelho which explains the necessary steps but without any code, so I used it to create a Python example.

I've rearranged the steps so that all write operations follow after a certain section in the code and also added an intermediate section which creates the updated content based on what is available in the repository.

I'm just appending

versions of Markdown to the .travis.yml (I will explain why in my next post)

and this is hard-coded for the sake of example. All content related operations

are also based on the GitHub API because I want to be independent of the source

code being around when I push this script to a hosting provider.

I've tested this script against itself. In the commits log you can find the Automatic update to Markdown-X.Y messages. These are from the script. Also notice the Merge remote-tracking branch 'origin/master' messages, these appeared when I pulled to my local copy. I believe the reason for this is that I have some dangling trees and/or commits from the time I was still experimenting with a broken script. I've tested on another clean repository and there are no such merges.

IMPORTANT

For this to work you need to properly authenticate with GitHub. I've created a new token at https://github.com/settings/tokens with the public_repo permission and that works for me.
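The token is sent in the Authorization header of every API request. Below is a minimal Python 3 sketch of how such a request could be prepared; the helper name github_request and the User-Agent value are made up for the example, and nothing is sent over the network.

```python
import os
import urllib.request


def github_request(path, token=None):
    """Build (but do not send) an authenticated GitHub API request."""
    token = token or os.environ.get("GITHUB_TOKEN")
    if not token:
        raise RuntimeError("Set the GITHUB_TOKEN variable")
    return urllib.request.Request(
        "https://api.github.com" + path,
        headers={
            "Authorization": "token %s" % token,
            # GitHub rejects requests without a User-Agent; this value is arbitrary
            "User-Agent": "example-script",
        },
    )


req = github_request("/repos/atodorov/bztest/git/refs/heads/master",
                     token="dummy-token")
print(req.get_method(), req.full_url)
```

Passing the returned object to urllib.request.urlopen() would perform the actual call.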


Inspecting Method Arguments in Python

How do you execute methods from 3rd party classes in a backward compatible manner when these methods change their arguments?

s3cmd's PR #668 is an example

of this behavior, where python-libs's httplib.py added a new parameter

to disable hostname checks. As a result of this

s3cmd broke.

One solution is to use try-except and nest as many blocks as you need to cover all of the argument variations. In s3cmd's case we needed two nested try-except blocks.

Another possibility is to use the inspect module and create the argument list passed to the method dynamically, based on what parameters are supported. Depending on the number of parameters this may or may not be more elegant than using try-except blocks although it looks to me a bit more human readable.

The argument list is a member named co_varnames of the code object. If you want to get the members for a function then

inspect.getmembers(my_function.__code__)

if you want to get the members for a class method then

inspect.getmembers(MyClass.my_method.__func__.__code__)

Consider the following example

import inspect

def hello_world(greeting, who):
    print greeting, who

class V1(object):
    def __init__(self):
        self.message = "Hello World"

    def do_print(self):
        print self.message

class V2(V1):
    def __init__(self, greeting="Hello"):
        V1.__init__(self)
        self.message = self.message.replace('Hello', greeting)

class V3(V2):
    def __init__(self, greeting="Hello", who="World"):
        V2.__init__(self, greeting)
        self.message = self.message.replace('World', who)

if __name__ == "__main__":
    print "=== Example: call the class directly ==="
    v1 = V1()
    v1.do_print()

    v2 = V2(greeting="Good day")
    v2.do_print()

    v3 = V3(greeting="Good evening", who="everyone")
    v3.do_print()

    # uncomment to see the error raised
    #v4 = V1(greeting="Good evening", who="everyone")
    #v4.do_print()

    print "=== Example: use try-except ==="
    for C in [V1, V2, V3]:
        try:
            c = C(greeting="Good evening", who="everyone")
        except TypeError:
            try:
                print " error: nested-try-except-1"
                c = C(greeting="Good evening")
            except TypeError:
                print " error: nested-try-except-2"
                c = C()

        c.do_print()

    print "=== Example: using inspect ==="
    for C in [V1, V2, V3]:
        members = dict(inspect.getmembers(C.__init__.__func__.__code__))
        var_names = members['co_varnames']
        args = {}

        if 'greeting' in var_names:
            args['greeting'] = 'Good morning'

        if 'who' in var_names:
            args['who'] = 'children'

        c = C(**args)
        c.do_print()

The output of the example above is as follows

=== Example: call the class directly ===

Hello World

Good day World

Good evening everyone

=== Example: use try-except ===

error: nested-try-except-1

error: nested-try-except-2

Hello World

error: nested-try-except-1

Good evening World

Good evening everyone

=== Example: using inspect ===

Hello World

Good morning World

Good morning children
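On Python 3 the same idea can be written with inspect.signature, which lists only the declared parameters, whereas co_varnames also contains the local variables of the function. This is a sketch under that assumption, not what s3cmd does; the build helper is a made-up name and the V1, V2 and V3 classes are repeated so the snippet runs on its own.

```python
import inspect


class V1(object):
    def __init__(self):
        self.message = "Hello World"


class V2(V1):
    def __init__(self, greeting="Hello"):
        V1.__init__(self)
        self.message = self.message.replace("Hello", greeting)


class V3(V2):
    def __init__(self, greeting="Hello", who="World"):
        V2.__init__(self, greeting)
        self.message = self.message.replace("World", who)


def build(cls, **wanted):
    """Instantiate cls, passing only the keyword arguments __init__ declares."""
    accepted = inspect.signature(cls.__init__).parameters
    kwargs = {name: value for name, value in wanted.items() if name in accepted}
    return cls(**kwargs)


messages = [build(C, greeting="Good morning", who="children").message
            for C in (V1, V2, V3)]
print(messages)
```

One caveat: a method declared with **kwargs accepts any keyword, but this filter would still drop everything that isn't named explicitly.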


3 New Python Markdown extensions

I've managed to resolve several of my issues with Python-Markdown behaving not quite as I expect. I have the pleasure to announce three new extensions which now power this blog.

No Lazy BlockQuote Extension

Markdown-No-Lazy-BlockQuote-Extension makes it possible for blockquotes separated by a newline to be rendered separately. If you want to include empty lines in your blockquotes make sure to prefix each line with >. The standard behavior can be seen on GitHub while the changed behavior is visible in this article. Notice how on GitHub both quotes are rendered as one big block, while here they are two separate blocks.

No Lazy Code Extension

Markdown-No-Lazy-Code-Extension allows code blocks separated by a newline to be rendered separately. If you want to include empty lines in your code blocks make sure to indent them as well. The standard behavior can be seen on GitHub while the improved one is visible in this post. Notice how GitHub renders the code in the Warning Bugs Present section as one block while in reality these are two separate blocks from two different files.

Bugzilla Extension

Markdown-Bugzilla-Extension

allows for easy references to bugs. Strings like [bz#123] and [rhbz#456] will

be converted into links.
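To illustrate the kind of substitution involved, here is a standalone sketch using only the re module. It is not the extension's implementation: the link markup is a guess, and the bz URL is a placeholder for whatever Bugzilla instance you configure.

```python
import re

# Assumed URL templates; the "bz" one is a placeholder for illustration
BUG_URLS = {
    "bz": "https://bugzilla.example.com/show_bug.cgi?id=%s",
    "rhbz": "https://bugzilla.redhat.com/show_bug.cgi?id=%s",
}

BUG_RE = re.compile(r"\[(bz|rhbz)#(\d+)\]")


def link_bugs(text):
    """Replace [bz#N] and [rhbz#N] references with HTML links."""
    def repl(match):
        prefix, bug_id = match.groups()
        url = BUG_URLS[prefix] % bug_id
        return '<a href="%s">%s</a>' % (url, match.group(0))
    return BUG_RE.sub(repl, text)


html = link_bugs("See [rhbz#456] for details")
print(html)
```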

All three extensions are available on PyPI!

Bonus: Codehilite with filenames in Markdown

The standard Markdown codehilite extension doesn't allow specifying a filename on the :::python shebang line, while Octopress did, and I've used that syntax on this blog in a number of articles. The fix is simple, but requires changes in both Markdown and Pygments. See PR #445 for the initial version and ongoing discussion. An example of the new :::python settings.py syntax can be seen here.


python-libs in RHEL 7.2 broke SSL verification in s3cmd

Today started with Planet Sofia Valley being broken again. Indeed it had been broken since last Friday when I upgraded to the latest RHEL 7.2. I quickly identified that I was hitting Issue #647. Then I tried the git checkout without any luck. This is when I started to suspect that python-libs had been updated in an incompatible way.

After a series of reported bugs, rhbz#1284916, rhbz#1284930 and Python#25722, it was clear that ssl.py was working according to RFC6125, that Amazon S3 was not playing nicely with this same RFC and that my patch proposal was wrong.

This immediately had me looking higher up the stack at httplib.py and s3cmd. Indeed there was a change in httplib.py which introduced two parameters, context and check_hostname, to HTTPSConnection.__init__. The change also supplied the logic which performs SSL hostname validation.

    if not self._context.check_hostname and self._check_hostname:
        try:
            ssl.match_hostname(self.sock.getpeercert(), server_hostname)
        except Exception:
            self.sock.shutdown(socket.SHUT_RDWR)
            self.sock.close()
            raise

This looks a bit dodgy as I don't quite understand the intention behind not PREDICATE and PREDICATE. Anyway, to disable the validation you need both parameters set to False, which is what PR #668 does.

Notice the two try-except blocks. This is in case we're running with a version that has a context but not the check_hostname parameter. I've found the inspect.getmembers function which can be used to figure out what parameters are there for the __init__ method but a solution based on it doesn't appear to be more elegant. I will describe this in more detail in my next post.
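The not PREDICATE and PREDICATE condition is easier to reason about as a truth table. The sketch below (Python 3) models only the two booleans and is not httplib code. It assumes that when the context's check_hostname flag is set, the ssl module verifies the hostname itself during the handshake, so httplib only needs its own match_hostname call when the context flag is off and the connection flag is on.

```python
from itertools import product


def manual_check_runs(context_check_hostname, check_hostname):
    """The condition guarding ssl.match_hostname() in the httplib snippet."""
    return (not context_check_hostname) and check_hostname


def hostname_validated(context_check_hostname, check_hostname):
    # Assumption: with the context flag set, the ssl module validates the
    # hostname on its own during the handshake.
    return context_check_hostname or manual_check_runs(
        context_check_hostname, check_hostname)


table = {
    (ctx, flag): hostname_validated(ctx, flag)
    for ctx, flag in product((False, True), repeat=2)
}

for (ctx, flag), validated in sorted(table.items()):
    print("context.check_hostname=%-5s check_hostname=%-5s validated=%s"
          % (ctx, flag, validated))
```

Under that assumption the only combination which skips validation is both flags set to False, which matches the fix in the pull request.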


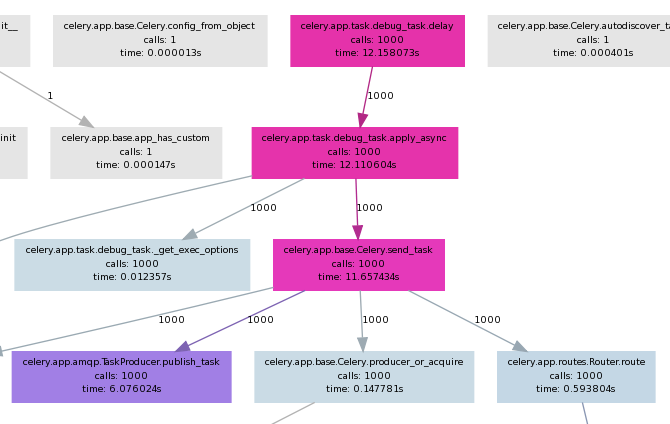

Speeding Up Celery Backends, Part 3

In the second part of this article we've seen how slow Celery actually is. Now let's explore what happens inside and see if we can't speed things up.

I've used pycallgraph to create call graph visualizations of my application. It has the nice feature to also show execution time and use different colors for fast and slow operations.

Full command line is:

pycallgraph -v --stdlib --include ... graphviz -o calls.png -- ./manage.py celery_load_test

where the --include is used to limit the graph to a particular Python module(s).

General findings

- The first four calls are where most of the time is spent, as seen in the picture.

- It seems most of the slowdown comes from Celery itself, not the underlying messaging transport Kombu (not shown in the picture).

- celery.app.amqp.TaskProducer.publish_task takes half of the execution time of celery.app.base.Celery.send_task.

- celery.app.task.Task.delay directly executes .apply_async and can be skipped if one rewrites the code.

More findings

In celery.app.base.Celery.send_task there is this block of code:

349     with self.producer_or_acquire(producer) as P:
350         self.backend.on_task_call(P, task_id)
351         task_id = P.publish_task(
352             name, args, kwargs, countdown=countdown, eta=eta,
353             task_id=task_id, expires=expires,
354             callbacks=maybe_list(link), errbacks=maybe_list(link_error),
355             reply_to=reply_to or self.oid, **options
356         )

producer is always None because delay() doesn't pass it as argument.

I've tried passing it explicitly to apply_async() as so:

from djapp.celery import *

# app = debug_task._get_app() # if not defined in djapp.celery

producer = app.amqp.producer_pool.acquire(block=True)

debug_task.apply_async(producer=producer)

However this doesn't speed anything up. If we replace the first line of the above code block like this:

349 with producer as P:

it blows up on the second iteration because producer and its channel are already None!?

If you are unfamiliar with the with statement in Python please read

this article. In short the with statement is

a compact way of writing try/finally. The underlying kombu.messaging.Producer class does a

self.release() on exit of the with statement.
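The effect of releasing on exit can be shown with a toy class. FakeProducer below is a hypothetical stand-in, not kombu.messaging.Producer: it only mimics the "release in __exit__" behavior described above, which is enough to reproduce a failure on the second pass through the with statement.

```python
class FakeProducer(object):
    """Hypothetical stand-in that releases its channel when the with block ends."""

    def __init__(self):
        self.channel = "open-channel"
        self.log = []

    def publish(self, body):
        if self.channel is None:
            raise RuntimeError("producer already released")
        self.log.append(body)

    def release(self):
        self.channel = None

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        # same effect as a try/finally around the body of the with block
        self.release()
        return False              # do not swallow exceptions


producer = FakeProducer()

with producer as P:
    P.publish("first")            # works, the channel is still open

try:
    with producer as P:
        P.publish("second")       # fails, the first with block released it
    second_ok = True
except RuntimeError:
    second_ok = False

print(producer.log, second_ok)
```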

I also tried removing the with statement and using producer directly but with limited success. While the producer was not released (it stayed non-None) on subsequent iterations, the memory usage grew much more and there wasn't any performance boost.

Conclusion

The with statement is used throughout both Celery and Kombu and I'm not at all sure if there's a mechanism for keep-alive connections. My time is limited and I'll probably not spend any more of it on this problem soon.

Since my use case involves task producer and consumers on localhost I'll try to work around the current limitations by using Kombu directly (see this gist) with a transport that uses either a UNIX domain socket or a named pipe (FIFO) file.


Speeding up Celery Backends, Part 2

In the first part of this post I looked at a few celery backends and discovered they didn't meet my needs. Why is the Celery stack slow? How slow is it actually?

How slow is Celery in practice

- Queue: 500`000 msg/sec

- Kombu: 14`000 msg/sec

- Celery: 2`000 msg/sec

Detailed test description

There are three main components of the Celery stack:

- Celery itself

- Kombu which handles the transport layer

- Python Queue()'s underlying everything

Using the Queue and Kombu tests run for 1 000 000 messages I got the following results:

- Raw Python Queue: Msgs per sec: 500`000

- Raw Kombu without Celery where kombu/utils/__init__.py:uuid() is set to return 0

  - with json serializer: Msgs per sec: 5`988

  - with pickle serializer: Msgs per sec: 12`820

  - with the custom mem_serializer from part 1: Msgs per sec: 14`492

Note: when the test is executed with 100K messages mem_serializer yielded 25`000 msg/sec then the performance is saturated. I've observed similar behavior with raw Python Queue()'s. I saw some cache buffers being managed internally to avoid OOM exceptions. This is probably the main reason performance becomes saturated over a longer execution.

- Using celery_load_test.py modified to loop 1 000 000 times I got 1908.0 tasks created per sec.
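For reference, a raw queue measurement can be as small as the sketch below (Python 3, where the module is named queue). This is not the original test script; the helper name and the put-then-get pattern are assumptions, and the absolute number will differ from machine to machine.

```python
import time
from queue import Queue


def queue_throughput(n):
    """Push n messages through a Queue and return messages per second."""
    q = Queue()
    start = time.perf_counter()
    for i in range(n):
        q.put(i)
    for i in range(n):
        q.get()
    elapsed = time.perf_counter() - start
    return n / elapsed


rate = queue_throughput(100000)
print("Msgs per sec: %d" % rate)
```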

Another interesting thing worth pointing out: in the Kombu test there are these lines:

with producers[connection].acquire(block=True) as producer:
    for j in range(1000000):

If we swap them the performance drops down to 3875 msg/sec which is comparable with the Celery results. Indeed inside Celery there's the same with producer.acquire(block=True) construct which is executed every time a new task is published. Next I will be looking into this to figure out exactly where the slowness comes from.
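The difference between the two orderings is simply how often the pool is asked for a producer. The sketch below counts acquisitions with a made-up CountingPool class; it does not use Kombu and says nothing about the actual cost of an acquire, only about how many of them happen.

```python
from contextlib import contextmanager


class CountingPool(object):
    """Hypothetical pool that only counts how many times acquire() is used."""

    def __init__(self):
        self.acquired = 0

    @contextmanager
    def acquire(self, block=True):
        self.acquired += 1
        yield []                  # a plain list stands in for the producer
        # a real pool would release the producer at this point


def acquire_once(pool, n):
    with pool.acquire(block=True) as producer:
        for j in range(n):
            producer.append(j)


def acquire_every_time(pool, n):
    for j in range(n):
        with pool.acquire(block=True) as producer:
            producer.append(j)


outer, inner = CountingPool(), CountingPool()
acquire_once(outer, 1000)
acquire_every_time(inner, 1000)
print(outer.acquired, inner.acquired)
```

With the loop on the outside, every message pays for one acquire and one release, which is the pattern Celery follows when publishing a task.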


Speeding up Celery Backends, Part 1

I'm working on an application which fires a lot of Celery tasks - the more the better! Unfortunately Celery backends seem to be rather slow :(. Using the celery_load_test.py command for Django I was able to capture some metrics:

- Amazon SQS backend: 2 or 3 tasks/sec

- Filesystem backend: 2000 - 2500 tasks/sec

- Memory backend: around 3000 tasks/sec

Not bad but I need in the order of 10000 tasks created per sec! The other noticeable thing is that memory backend isn't much faster compared to the filesystem one! NB: all of these backends actually come from the kombu package.

Why is Celery slow ?

Using celery_load_test.py together with

cProfile I

was able to pin-point some problematic areas:

- kombu/transports/virtual/__init__.py: class Channel.basic_publish() - does self.encode_body() into base64 encoded string. Fixed with custom transport backend I called fastmemory which redefines the body_encoding property:

      @cached_property
      def body_encoding(self):
          return None

- Celery uses json or pickle (or other) serializers to serialize the data. While json yields between 2000-3000 tasks/sec, pickle does around 3500 tasks/sec. Replacing with a custom serializer which just returns the objects (since we read/write from/to memory) yields about 4000 tasks/sec tops:

      from kombu.serialization import register

      def loads(s):
          return s

      def dumps(s):
          return s

      register('mem_serializer', dumps, loads,
               content_type='application/x-memory',
               content_encoding='binary')

- kombu/utils/__init__.py: def uuid() - generates random unique identifiers which is a slow operation. Replacing it with return "00000000" boosts performance to 7000 tasks/sec.

It's clear that a constant UUID is not of any practical use but it serves well to illustrate how much this function affects performance.
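The cost of generating the identifiers can be measured in isolation with timeit. The sketch below (Python 3) compares str(uuid.uuid4()) with a function returning a constant; treating str(uuid4()) as a fair proxy for what kombu's uuid() does is an assumption, and the numbers depend on the machine.

```python
import timeit
import uuid


def random_id():
    return str(uuid.uuid4())


def constant_id():
    return "00000000"


N = 20000
random_secs = timeit.timeit(random_id, number=N)
constant_secs = timeit.timeit(constant_id, number=N)

print("uuid4:    %.0f ids/sec" % (N / random_secs))
print("constant: %.0f ids/sec" % (N / constant_secs))
```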

Note:

Subsequent executions of celery_load_test seem to report degraded performance even with the most optimized transport backend. I'm not sure why this is. One possibility is the random UUID usage in other parts of the Celery/Kombu stack which drains entropy on the system so that generating more random numbers becomes slower. If you know better please tell me!

I will be looking for a better understanding of these IDs in Celery and hope to be able to produce a faster uuid() function. Then I'll be exploring the transport stack even more in order to reach the goal of 10000 tasks/sec. If you have any suggestions or pointers please share them in the comments.


Performance Profiling in Python with cProfile

This is a quick reference on profiling Python applications with cProfile:

$ python -m cProfile -s time application.py

The -s time option sorts the output by execution time:

         9072842 function calls (8882140 primitive calls) in 9.830 CPU seconds

   Ordered by: internal time

   ncalls  tottime  percall  cumtime  percall filename:lineno(function)
    61868    0.575    0.000    0.861    0.000 abstract.py:28(__init__)
    41250    0.527    0.000    0.660    0.000 uuid.py:101(__init__)
    61863    0.405    0.000    1.054    0.000 abstract.py:40(as_dict)
    41243    0.343    0.000    1.131    0.000 __init__.py:143(uuid4)
   577388    0.338    0.000    0.649    0.000 abstract.py:46(<genexpr>)
    20622    0.289    0.000    8.824    0.000 base.py:331(send_task)
    61907    0.232    0.000    0.477    0.000 datastructures.py:467(__getitem__)
    20622    0.225    0.000    9.298    0.000 task.py:455(apply_async)
    61863    0.218    0.000    2.502    0.000 abstract.py:52(__copy__)
    20621    0.208    0.000    4.766    0.000 amqp.py:208(publish_task)
   462640    0.193    0.000    0.247    0.000 {isinstance}
   515525    0.162    0.000    0.193    0.000 abstract.py:41(f)
    41246    0.153    0.000    0.633    0.000 entity.py:143(__init__)

In the example above (actual application) first line is kombu's

abstract.py: class Object(object).__init__()

and the second one is Python's

uuid.py: class UUID().__init__().
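The same report can be produced from inside a program with the cProfile and pstats modules, which helps when only one code path is of interest. A minimal Python 3 sketch follows; the workload function is made up for the example.

```python
import cProfile
import io
import pstats
import uuid


def workload():
    """Made-up workload: generate a batch of random identifiers."""
    return [str(uuid.uuid4()) for _ in range(2000)]


profiler = cProfile.Profile()
profiler.enable()
workload()
profiler.disable()

# sort by internal time, like "-s time", and keep only the top 5 entries
stream = io.StringIO()
stats = pstats.Stats(profiler, stream=stream)
stats.sort_stats("tottime").print_stats(5)

report = stream.getvalue()
print(report)
```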


Skip or Render Specific Blocks from Jinja2 Templates

I wasn't able to find detailed information on how to skip rendering or only render specific blocks from Jinja2 templates so here's my solution. Hopefully you find it useful too.

With the template below I want to be able to render only the kernel_options block as a single line and then render the rest of the template excluding kernel_options.

{% block kernel_options %}

console=tty0

{% block debug %}

debug=1

{% endblock %}

{% endblock kernel_options %}

{% if OS_MAJOR == 5 %}

key --skip

{% endif %}

%packages

@base

{% if OS_MAJOR > 5 %}

%end

{% endif %}

To render a particular block you have to use the low level Jinja API template.blocks. This will return a dict of block rendering functions which need a Context to work with.

The second part is trickier. To remove a block we have to create an extension which will filter it out. The provided SkipBlockExtension class does exactly this.

Last but not least - if you'd like to use both together you have to disable caching in the Environment (so you get a fresh template every time), render your blocks first, configure env.skip_blocks and render the entire template without the specified blocks.

#!/usr/bin/env python

import os
import sys
from jinja2.ext import Extension
from jinja2 import Environment, FileSystemLoader

class SkipBlockExtension(Extension):
    def __init__(self, environment):
        super(SkipBlockExtension, self).__init__(environment)
        environment.extend(skip_blocks=[])

    def filter_stream(self, stream):
        block_level = 0
        skip_level = 0
        in_endblock = False

        for token in stream:
            if (token.type == 'block_begin'):
                if (stream.current.value == 'block'):
                    block_level += 1
                    if (stream.look().value in self.environment.skip_blocks):
                        skip_level = block_level

            if (token.value == 'endblock' ):
                in_endblock = True

            if skip_level == 0:
                yield token

            if (token.type == 'block_end'):
                if in_endblock:
                    in_endblock = False
                    block_level -= 1

                    if skip_level == block_level+1:
                        skip_level = 0

if __name__ == "__main__":
    context = {'OS_MAJOR' : 5, 'ARCH' : 'x86_64'}

    abs_path = os.path.abspath(sys.argv[1])
    dir_name = os.path.dirname(abs_path)
    base_name = os.path.basename(abs_path)

    env = Environment(
        loader = FileSystemLoader(dir_name),
        extensions = [SkipBlockExtension],
        cache_size = 0, # disable cache b/c we do 2 get_template()
    )

    # first render only the block we want
    template = env.get_template(base_name)
    lines = []
    for line in template.blocks['kernel_options'](template.new_context(context)):
        lines.append(line.strip())
    print "Boot Args:", " ".join(lines)
    print "---------------------------"

    # now instruct SkipBlockExtension which blocks we don't want
    # and get a new instance of the template with these blocks removed
    env.skip_blocks.append('kernel_options')
    template = env.get_template(base_name)
    print template.render(context)
    print "---------------------------"

The above code results in the following output:

$ ./jinja2-render ./base.j2

Boot Args: console=tty0 debug=1

---------------------------

key --skip

%packages

@base

---------------------------

Teaser: this is part of my effort to replace SNAKE with a client side kickstart template engine for Beaker so stay tuned!


FOSDEM 2014 Report - Day #1 Python, Stands and Lightning Talks

As promised I'm starting to catch up on blogging after being sick and traveling. Here's my report of what I saw and found interesting at this year's FOSDEM which was held last weekend in Brussels.

On Friday evening I tried to attend the FOSDEM beer event at Delirium Cafe but had bad luck. At 21:30 the place was already packed with people. I managed to get access to only one of the rooms but it looked like the wrong one :(. I think the space is definitely too small for everyone who wants to attend.

On Saturday morning I did some quick sightseeing, most notably Mig's wine shop and the area around it, since I'd never been to this part of the city before. Then I took off to FOSDEM, arriving at noon (IOW not too late).

I've spent most of my day at building K where project stands were and I stayed quite a long time around the Python and Perl stands meeting new people and talking to them about their upgrade practices and how they manage package dependencies (aka promoting Difio).

The Fedora stand was busy with 3D printing this year. I've seen 3D printing before but here I was amazed by the fine-grained quality of the pieces produced. This is definitely something to keep in mind if you are building physical products.

Red Hat's presence was very strong this year. In addition to the numerous talks they gave there were also oVirt and OpenShift Origin stands which were packed with people. I couldn't even get close to say hi or take a picture.

Near the end of the day I went to listen to some of the lightning talks. The ones that I liked the most were MATE Desktop and DoudouLinux.

The thing about MATE which I liked is that they have a MATE University initiative which is targeting developers who want to learn how to develop MATE extensions. This is pretty cool with respect to community and developers on-boarding.

DoudouLinux is a Debian based distribution targeted at small children (2 or 3 years old) based on simple desktop and educational activities. I've met project leader and founder Jean-Michel Philippe who gave the talk. We chatted for a while when Alejandro Simon from Kano came around and showed us a prototype of their computer for children. I will definitely give DoudouLinux a try and maybe pre-order Kano as well.

In the evening there was a Python beer event at Delirium and after that dinner at Chez Leon where I had snails and rabbit with cherries in cherry beer sauce. I've had a few beers with Marc-Andre from eGenix and Charlie from Clark Consulting and the talk was mostly about non-technical stuff which was nice.

After that we went back to Delirium and re-united with Alexander Kurtakov and other folks from Red Hat for more cherry beer!

Report of the second day of FOSDEM'14 on Sunday is here.
